Failure Recovery

The DBMS module responsible for recovery management must satisfy two requirements:

- (regarding atomicity) enable a transaction to be

- committed (with a guarantee database changes are permanent), or

- aborted (with a guarantee that there are no database changes)

- (regarding reliability) enable a database to be recovered to a consistent state in case of hardware or software failure.

Interaction with recovery management:

- Input: a 2PL and ACA schedule of operations produced by the transaction manager, and

- Output: a schedule of object reads, object writes, and object forced writes.

There are 2 main approaches to Recovery:

- Shadowing

- copy-on-write and merge-on-commit approaches

- poor clustering

- used in system R, but not in modern systems

- Logging

- uses log files on (separate) stable media

- good utilization of buffers

- preserves original clusters

The logging approach is much better.

Log records record several types of information:

- UNDO information: Used to to undo database changes made by a transaction that aborts (OLD versions of objects)

- REDO information: Used to redo the work done by a transaction that commits (NEW versions of objects)

- BEGIN/COMMIT/ABORT information: record when transactions begin, commit, or abort

AHH I understand. Basically, undo is how the database was before, redo is how the database is afterwards

So how does this work in practice?

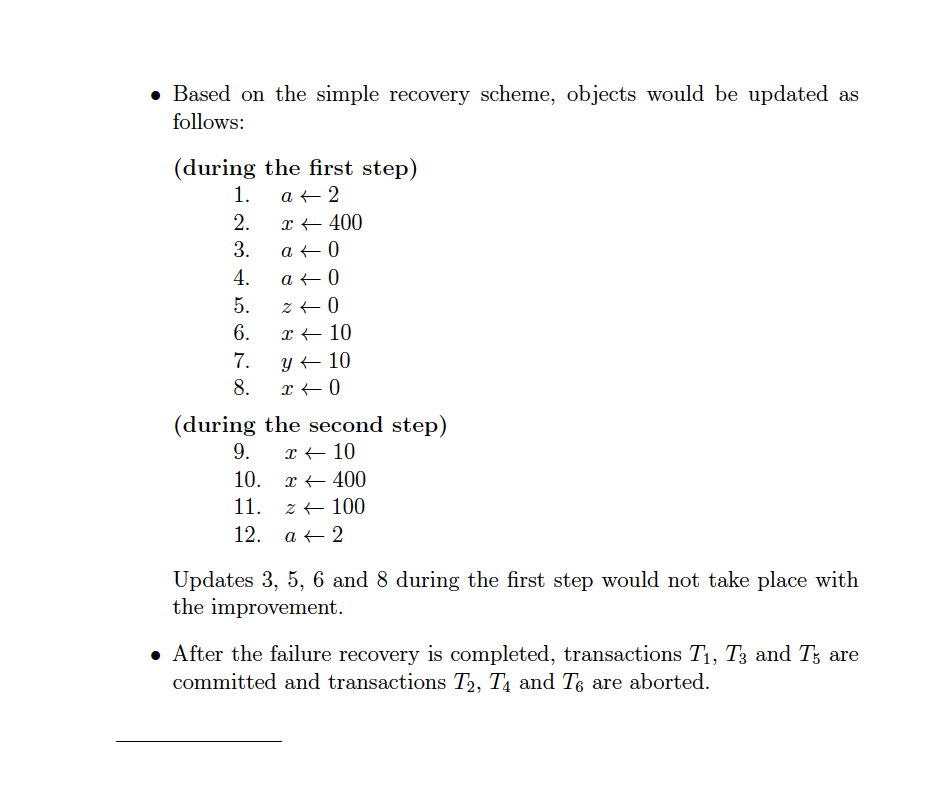

Understood this by looking at the assignment solution. A simple recovery from failure can proceed as follows:

- Step 1. Scan the log from the tail to the head remembering which transactions committed and applying the UNDO logs. Step 2. Scan the log from the head to the tail applying the REDO logs for committed transactions. One improvement is to not apply UNDO logs for committed transactions.