N-Gram

The goal is to generate text. We can use a probabilistic model.

Andrej Karpathy was the first to introduce me this idea.

Resources

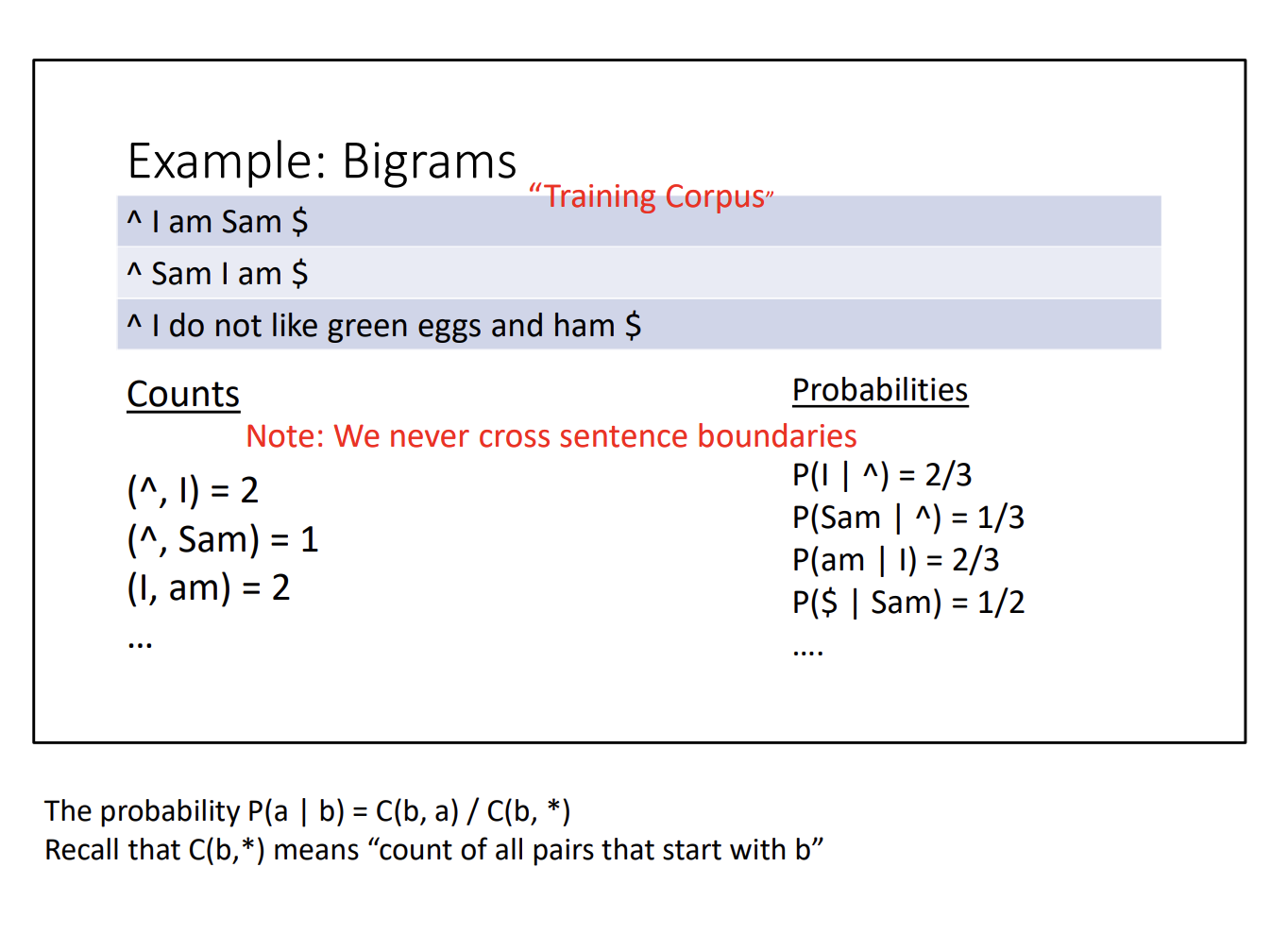

Example bigram: P(“I saw a van”) = P(“I”) x P(“saw” | “I”) x P(“a” | “I saw”) x P(“van” | “I saw a”)

But how feasible is this?

- Not really. We use a smaller limit: the n-gram

- Limit of words Basic Idea: Probability of next word only depends on the previous (N – 1) words

- N = 1 : Unigram Model-

- N = 2 : Bigram Model -

State of the art rarely goes above 5-gram.

We apply laplace smoothing for the bigram probabilities.