Positional Encoding

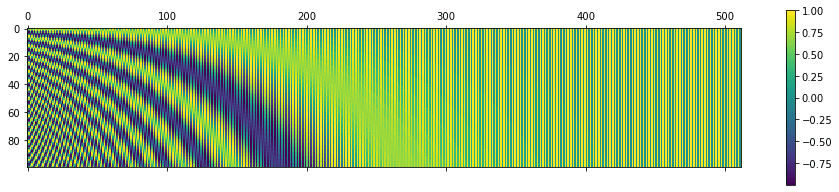

Early Transformers used sinusoidal encoding, as introduced in the original Transformer paper ("Attention Is All You Need").

However, the field has since largely moved to RoPE (Rotary Position Embedding).
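Here is a minimal NumPy sketch of the RoPE idea. It assumes the interleaved pairing from the RoFormer formulation, where dimensions 2i and 2i+1 form a pair, and a base of 10000. Some implementations pair dimension i with i + d/2 instead. The function name `rope` is hypothetical.

Each pair of a query or key vector is rotated by an angle proportional to its position. As a result, the dot product between a rotated query at position m and a rotated key at position n depends only on the offset m - n.

```python
import numpy as np

def rope(x: np.ndarray, pos: int, base: float = 10000.0) -> np.ndarray:
    """Rotate each pair (x[2i], x[2i+1]) by angle pos * theta_i,
    where theta_i = base ** (-2i / d). Assumes a 1-D float x of even length."""
    d = x.shape[-1]
    theta = base ** (-np.arange(0, d, 2) / d)  # one frequency per pair, shape (d/2,)
    angle = pos * theta
    cos, sin = np.cos(angle), np.sin(angle)
    x_even, x_odd = x[0::2], x[1::2]
    out = np.empty_like(x)
    out[0::2] = x_even * cos - x_odd * sin     # standard 2-D rotation, first coordinate
    out[1::2] = x_even * sin + x_odd * cos     # standard 2-D rotation, second coordinate
    return out

rng = np.random.default_rng(0)
q, k = rng.standard_normal(8), rng.standard_normal(8)

# Same offset (3) at very different absolute positions gives the same score.
s1 = rope(q, 5) @ rope(k, 2)
s2 = rope(q, 103) @ rope(k, 100)
print(np.isclose(s1, s2))  # True
```

Like the sinusoidal scheme, this has no learned weights. The frequencies are fixed, and only the rotation angle changes with position.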

Does positional encoding have weights?

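No. Sinusoidal positional encoding has no learnable weights: it is a fixed, deterministic function of position and dimension. (Learned absolute position embeddings, as in BERT and GPT-2, are a different design and do have weights.)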

- The values are precomputed using sine and cosine functions and are not learned during training.
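The sketch below illustrates the "fixed, deterministic function" point with the encoding from the original paper, written in NumPy. It assumes the interleaved layout (sine on even dimensions, cosine on odd) and an even `d_model`. The helper name `sinusoidal_positional_encoding` is made up for illustration. There are no parameters to train, and repeated calls return identical arrays.

```python
import numpy as np

def sinusoidal_positional_encoding(seq_len: int, d_model: int) -> np.ndarray:
    """Return a (seq_len, d_model) matrix of fixed encodings:
    PE[pos, 2i]   = sin(pos / 10000**(2i / d_model))
    PE[pos, 2i+1] = cos(pos / 10000**(2i / d_model))
    """
    if d_model % 2 != 0:
        raise ValueError("d_model must be even")
    positions = np.arange(seq_len)[:, np.newaxis]             # (seq_len, 1)
    i = np.arange(d_model // 2)[np.newaxis, :]                 # (1, d_model/2)
    angles = positions / np.power(10000.0, 2 * i / d_model)    # (seq_len, d_model/2)
    pe = np.zeros((seq_len, d_model))
    pe[:, 0::2] = np.sin(angles)  # even dimensions
    pe[:, 1::2] = np.cos(angles)  # odd dimensions
    return pe

pe = sinusoidal_positional_encoding(seq_len=50, d_model=16)
print(pe.shape)  # (50, 16)
# Nothing is learned: recomputing gives exactly the same values.
print(np.array_equal(pe, sinusoidal_positional_encoding(50, 16)))  # True
```

Some codebases put all sines in the first half of the vector and all cosines in the second half. That is just a permutation of the same dimensions.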

This great Hugging Face article helps derive the motivation.

Then you might finally understand this article: https://machinelearningmastery.com/a-gentle-introduction-to-positional-encoding-in-transformer-models-part-1/

- I can visualize this plot, but I don't fully understand it yet.
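One way to read a sinusoidal-encoding plot is to treat each dimension pair (2i, 2i+1) as a point (sin(ω_i·pos), cos(ω_i·pos)) moving around the unit circle. The point moves fast for small i and slowly for large i. Shifting by k positions then rotates every pair by a fixed angle ω_i·k, independent of pos.

This is the linear relationship the original paper cites as its reason for choosing sinusoids: PE(pos + k) is a linear function of PE(pos). The sketch below checks this numerically. `pe_pairs` and `shift_matrices` are hypothetical helper names, and `d_model = 16` is an arbitrary choice.

```python
import numpy as np

d_model = 16
i = np.arange(d_model // 2)
omega = 1.0 / np.power(10000.0, 2 * i / d_model)  # one frequency per (sin, cos) pair

def pe_pairs(pos: float) -> np.ndarray:
    """Sinusoidal encoding at `pos` as (d_model/2, 2) rows of (sin, cos)."""
    return np.stack([np.sin(omega * pos), np.cos(omega * pos)], axis=-1)

def shift_matrices(k: float) -> np.ndarray:
    """One 2x2 matrix per pair mapping (sin(w*p), cos(w*p)) to (sin(w*(p+k)), cos(w*(p+k))).
    Uses sin(a+b) = sin a cos b + cos a sin b and cos(a+b) = cos a cos b - sin a sin b."""
    c, s = np.cos(omega * k), np.sin(omega * k)
    return np.stack([np.stack([c, s], -1), np.stack([-s, c], -1)], axis=-2)  # (d/2, 2, 2)

k = 7
M = shift_matrices(k)  # depends on k only, not on the starting position
for p in [0, 3, 42]:
    shifted = np.einsum("jab,jb->ja", M, pe_pairs(p))
    print(np.allclose(shifted, pe_pairs(p + k)))  # True for every p
```

RoPE uses the same per-pair rotation. The difference is that it rotates the query and key vectors inside attention instead of adding a vector to the embeddings.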

Why add the sinusoids to the embeddings instead of concatenating them?

https://www.reddit.com/r/MachineLearning/comments/cttefo/comment/exs7d08/
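This is a small numerical check of one argument that comes up when comparing addition with concatenation. If a linear layer follows, concatenating `[x, p]` and projecting with `W` is the same as projecting `x` and `p` separately and summing the results. Plain addition `x + p` is the special case where both projections share weights. The dimensions and random values are arbitrary stand-ins, and `p` here is random noise, not an actual positional encoding.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_out = 8, 4
x = rng.standard_normal(d_model)  # token embedding (random stand-in)
p = rng.standard_normal(d_model)  # positional vector (random stand-in)

# Concatenate, then apply one linear layer W = [W_x | W_p].
W = rng.standard_normal((d_out, 2 * d_model))
concat_then_project = W @ np.concatenate([x, p])

# The same result written as two separate projections that are summed.
W_x, W_p = W[:, :d_model], W[:, d_model:]
sum_of_projections = W_x @ x + W_p @ p
print(np.allclose(concat_then_project, sum_of_projections))  # True

# Adding x + p before projecting equals concatenation with tied weights [W_x | W_x].
W_tied = np.concatenate([W_x, W_x], axis=1)
print(np.allclose(W_tied @ np.concatenate([x, p]), W_x @ (x + p)))  # True
```

Addition also keeps the vector width at `d_model`. Concatenation widens it (here from 8 to 16) unless the positional part is made smaller.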