Zero-Shot Learning (ZSL)

Zero-shot learning (ZSL) is a problem setup in machine learning where, at test time, a learner observes samples from classes that were not observed during training and needs to predict the class they belong to.

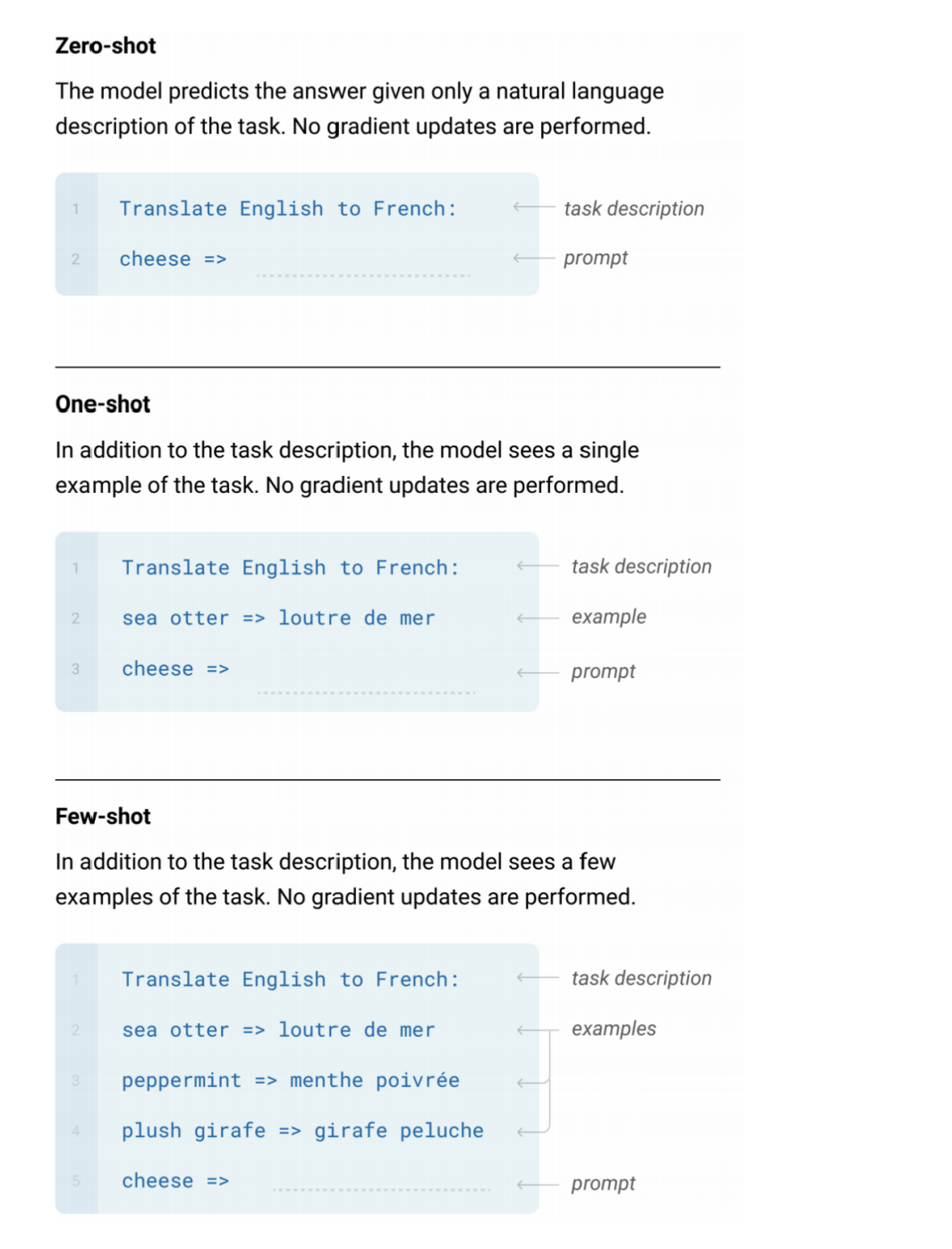

It’s basically Few-Shot Learning without taking any shots. https://pub.towardsai.net/zero-shot-vs-few-shot-learning-50-key-insights-with-2022-updates-17b71e8a88c5

So how is that possible? Even we humans can’t do that.

- Good talk: https://www.youtube.com/watch?v=jBnCcr-3bXc

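Two key ideas:

- The task: pattern recognition with no training examples for the target classes

- The solution: semantic transfer, i.e. reusing knowledge from seen classes through a shared semantic description of all classes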

I thought this was irrelevant, but it’s actually extremely relevant. Think about all the classification tasks in the supervised learning paradigm. What if there are thousands of classes to predict? Collecting labeled examples for every one of them quickly becomes impractical. Some examples:

- Object recognition

  - The type of butterfly

  - The brand of a car (Toyota, Honda, Ferrari, etc.)

- Cross-lingual dictionary induction (?)

  - The idea: you can translate English to German and English to Chinese, and now you want to translate German to Chinese. (Open question: why is this hard?)

- Mind reading (read a brain and map that to a word)

  - Every word would be a category

The idea is that you learn a mapping from a feature space to a category embedding.
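To make that mapping concrete, here is a minimal sketch, not a reference implementation. It assumes a linear map fitted with ridge regression, hand-made binary attribute vectors as the category embeddings, and synthetic features; the class names, attributes, and the helpers `fit_linear_map` and `predict` are all made up for illustration.

```python
import numpy as np

# Hypothetical attribute embeddings: [striped, hooved, aquatic]
seen = {"tiger": [1, 0, 0], "horse": [0, 1, 0], "dolphin": [0, 0, 1]}
unseen = {"zebra": [1, 1, 0], "clownfish": [1, 0, 1]}

rng = np.random.default_rng(0)
# Assumed toy generator: features are a noisy linear function of attributes.
A = rng.normal(size=(3, 8))


def make_features(attributes, n):
    """Draw n synthetic 8-dim feature vectors for one class."""
    base = np.asarray(attributes, dtype=float) @ A
    return base + 0.05 * rng.normal(size=(n, A.shape[1]))


def fit_linear_map(X, S, reg=0.1):
    """Ridge regression: W minimizing ||X W - S||^2 + reg * ||W||^2."""
    d = X.shape[1]
    return np.linalg.solve(X.T @ X + reg * np.eye(d), X.T @ S)


def predict(X, W, class_embeddings, class_names):
    """Project features into embedding space, pick the most similar class."""
    Z = X @ W
    Z = Z / np.linalg.norm(Z, axis=1, keepdims=True)
    E = class_embeddings / np.linalg.norm(class_embeddings, axis=1, keepdims=True)
    return [class_names[i] for i in np.argmax(Z @ E.T, axis=1)]


# Train only on seen classes: features X, target embeddings S.
X_train = np.vstack([make_features(a, 20) for a in seen.values()])
S_train = np.vstack([np.tile(a, (20, 1)) for a in seen.values()]).astype(float)
W = fit_linear_map(X_train, S_train)

# Test on classes that never appeared in training.
X_test = np.vstack([make_features(a, 1) for a in unseen.values()])
E_unseen = np.array(list(unseen.values()), dtype=float)
predictions = predict(X_test, W, E_unseen, list(unseen.keys()))
print(predictions)
```

The model never sees a zebra during training; it can only name one at test time because the zebra's embedding lives in the same attribute space the mapping was trained to predict.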

The multi-label problem also exists here: a single sample can belong to several categories at once.

For example, when you annotate an image, it may contain both a mountain and a beach.