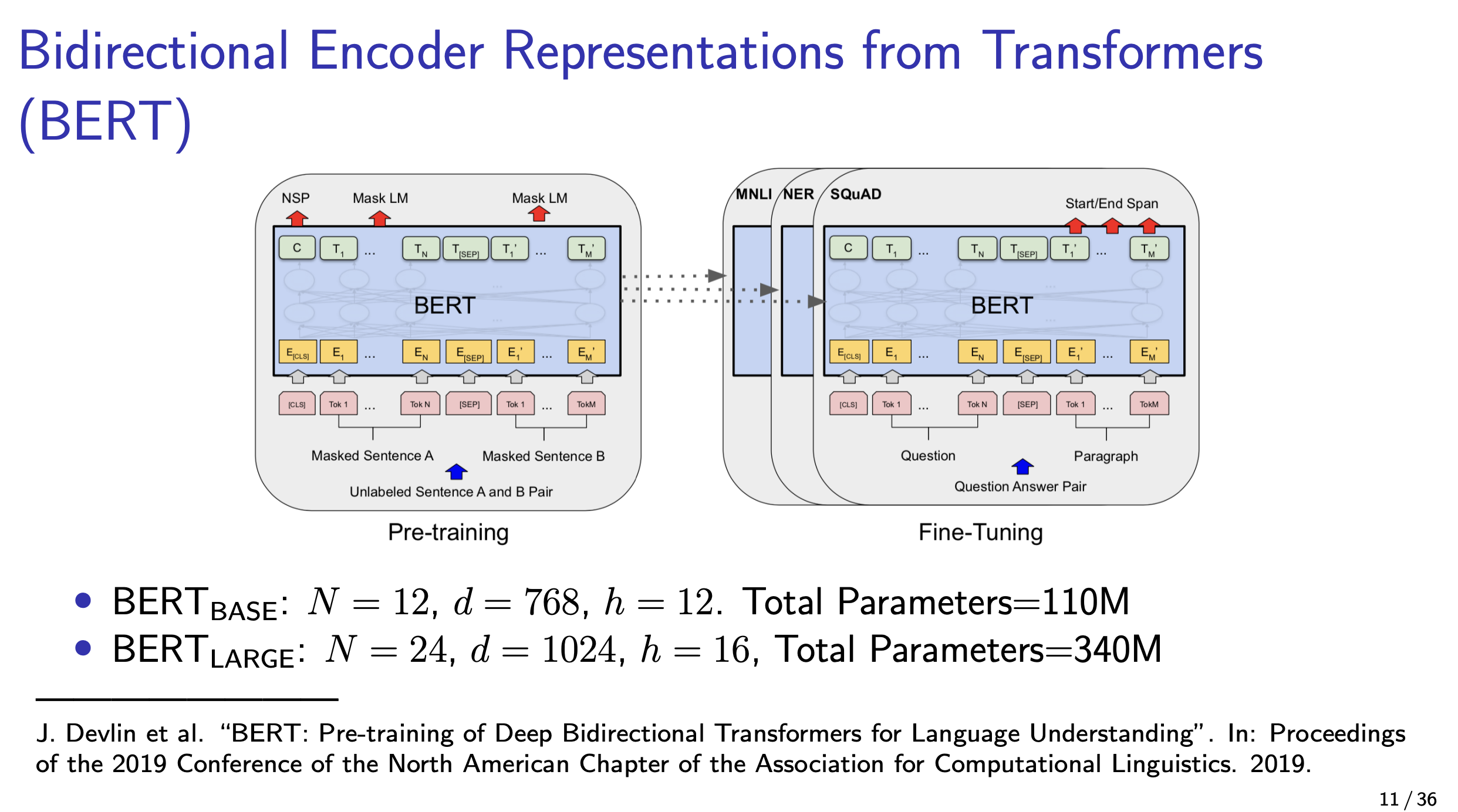

Bidirectional Encoder Representations from Transformers (BERT)

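BERT is a bidirectional Transformer encoder: each token's representation is conditioned on both its left and right context. It is an encoder-only model, not a generative (autoregressive) one.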
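To make "bidirectional" concrete, here is a minimal sketch contrasting the attention pattern of an encoder like BERT with the causal pattern of a left-to-right generative model. The function name `attention_mask` and its `causal` flag are made up for illustration, and padding masks (which real implementations also apply) are ignored.

```python
def attention_mask(seq_len, causal=False):
    """Build a seq_len x seq_len boolean mask.

    mask[i][j] is True when position i may attend to position j.
    causal=False: every position sees every other position (BERT-style encoder).
    causal=True:  position i only sees positions j <= i (left-to-right decoder).
    """
    return [
        [(j <= i) if causal else True for j in range(seq_len)]
        for i in range(seq_len)
    ]


# Illustrative comparison for a 3-token sequence.
bidirectional = attention_mask(3)
left_to_right = attention_mask(3, causal=True)
```

In the bidirectional mask every entry is allowed, which is why BERT can use context on both sides of a token but cannot be used directly to generate text token by token.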

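BERT is pretrained with masked language modeling: some input tokens are hidden, and the model tries to predict them from the surrounding context.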
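The masking step can be sketched roughly as follows. The original paper selects 15% of token positions for prediction; of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are left unchanged. This sketch makes some simplifying assumptions: it selects each position independently with probability `mask_prob` rather than choosing an exact 15%, it does not treat special tokens such as `[CLS]` or `[SEP]` differently, and the helper name `mask_tokens` and the toy vocabulary are invented for illustration.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted_tokens, labels) using BERT-style masking.

    labels[i] holds the original token at positions selected for
    prediction and None everywhere else.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected for prediction
        labels[i] = tok
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


# Illustrative usage with a toy sentence and a higher masking rate,
# so that a short input is likely to have some masked positions.
tokens = "the cat sat on the mat".split()
vocab = sorted(set(tokens))
corrupted, labels = mask_tokens(tokens, vocab, mask_prob=0.5, seed=1)
print(corrupted)
print(labels)
```

The model is trained to recover `labels` from `corrupted`; the loss is computed only at positions where the label is not `None`.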

Resources

- Original paper https://arxiv.org/pdf/1810.04805

- https://watml.github.io/slides/CS480680_lecture12.pdf

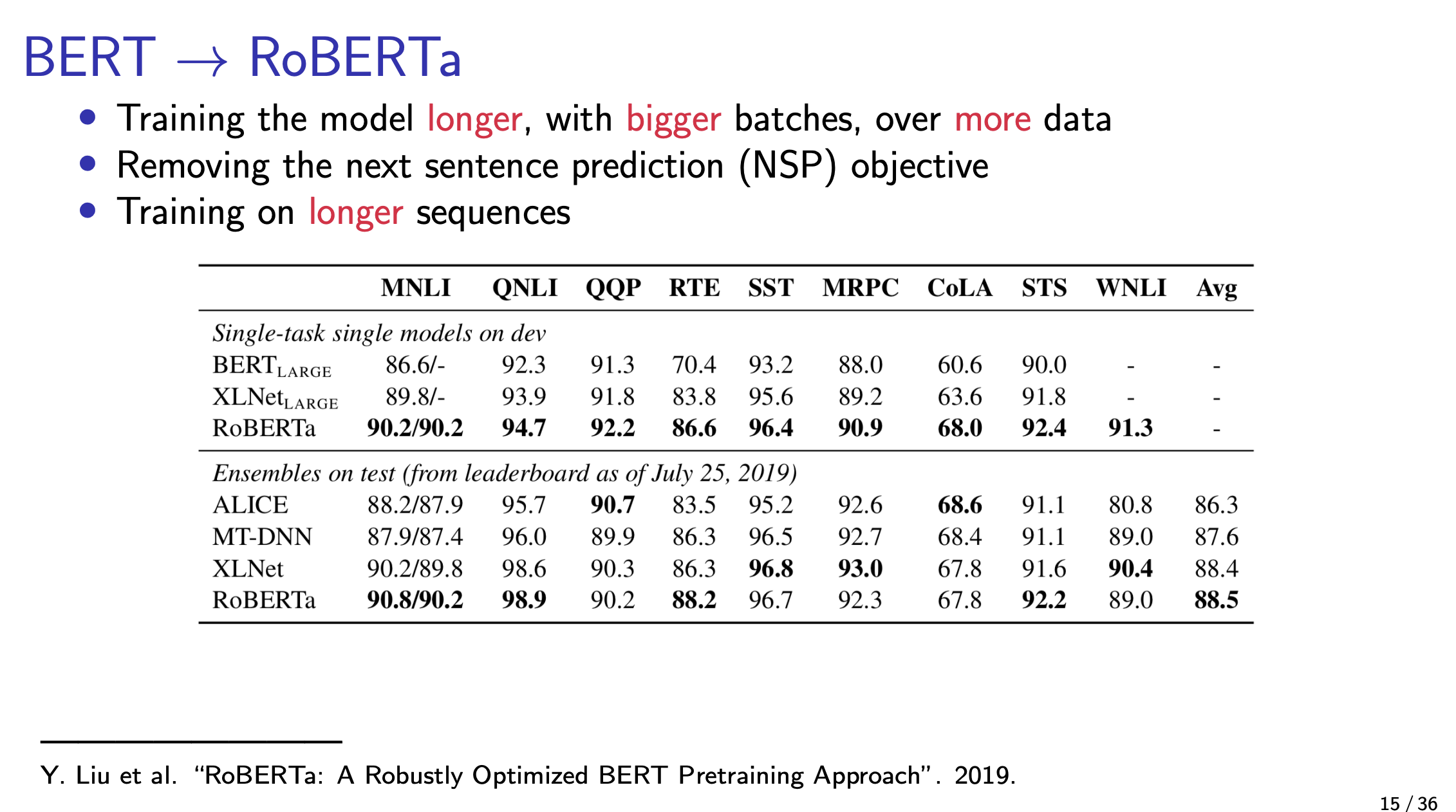

RoBERTa

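RoBERTa is essentially BERT trained for longer on more text data, with larger batches. It also drops the next-sentence prediction objective and uses dynamic masking.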