Transformer

The main motivation for the transformer architecture was to improve the ability of neural networks to handle sequential data.

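Unlike RNNs, which step through a sequence one token at a time, transformers process all positions of a sequence in parallel (at least during training).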

Architecturally, there’s nothing about the transformer that prevents it from scaling to longer sequences. It’s simply that when we compute attention, we end up with an (L, L) matrix, which grows quadratically with sequence length and can become really, really big.
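To get a feel for how fast this blows up, here is a back-of-the-envelope calculation of the memory needed just to store the attention scores. The score tensor has one entry per (batch, head, query, key), i.e. shape (B, H, L, L). Assumptions: a naive implementation that materializes the full matrix, fp16 (2 bytes per element), and 32 heads as an illustrative head count.

```python
def attn_scores_bytes(batch: int, heads: int, seq_len: int, bytes_per_elem: int = 2) -> int:
    """Bytes needed to materialize a (B, H, L, L) attention-score tensor."""
    return batch * heads * seq_len * seq_len * bytes_per_elem


# Assumed: batch of 1, 32 heads, fp16 scores, one layer.
for seq_len in (1024, 8192, 32768):
    gib = attn_scores_bytes(batch=1, heads=32, seq_len=seq_len) / 2**30
    print(f"L={seq_len:>6}: {gib:8.2f} GiB")
```

Since the cost is quadratic in L, going from 8K to 32K tokens (4× longer) takes this from 4 GiB to 64 GiB for a single layer's scores.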

A rough draft of the dimensions (inspo from Shape Suffixes)

B: batch size

L: sequence length

H: number of heads

C: channels (also called d_model, n_embed)

V: vocabulary size

input embedding (B, L, C)
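A minimal sketch of where the (B, L, C) shape comes from, assuming token IDs arrive as a (B, L) batch and the embedding table has shape (V, C). The table values are made up for illustration; in a real model they are learned.

```python
def embed(token_ids: list[list[int]], table: list[list[float]]) -> list[list[list[float]]]:
    """Look up each token ID in a (V, C) table: (B, L) ids -> (B, L, C) vectors."""
    return [[list(table[tok]) for tok in seq] for seq in token_ids]


V, C = 5, 3  # tiny vocabulary and channel size, for illustration only
table = [[float(v * C + c) for c in range(C)] for v in range(V)]  # (V, C), fake values

ids = [[0, 2, 4, 1], [3, 3, 0, 2]]  # (B=2, L=4)
x = embed(ids, table)
print(len(x), len(x[0]), len(x[0][0]))  # 2 4 3
```

Each row of the table is one token's vector, so the embedding step is just indexing: the same ID always maps to the same C-dimensional vector.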

Components

- Feedforward

- LayerNorm
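A minimal pure-Python sketch of the two components applied to one token vector of size C. Assumptions: LayerNorm normalizes over the channel dimension with a learnable gain and bias (gamma, beta) and eps = 1e-5; the feedforward is the two-linear-layers-with-ReLU form from the original paper. All weights are toy values. Both components act on each position independently, so the B and L dims would just be looped over.

```python
import math


def layer_norm(x: list[float], gamma: list[float], beta: list[float], eps: float = 1e-5) -> list[float]:
    """Normalize one C-dim vector to zero mean / unit variance, then scale and shift."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)  # biased variance (divide by C)
    return [g * (v - mean) / math.sqrt(var + eps) + b for v, g, b in zip(x, gamma, beta)]


def linear(x: list[float], w: list[list[float]], b: list[float]) -> list[float]:
    """y = x @ w + b, with w of shape (in_dim, out_dim)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) + b[j] for j in range(len(b))]


def feed_forward(x, w1, b1, w2, b2):
    """FFN(x) = max(0, x @ w1 + b1) @ w2 + b2, mapping C -> d_ff -> C."""
    hidden = [max(0.0, h) for h in linear(x, w1, b1)]  # ReLU
    return linear(hidden, w2, b2)


C, d_ff = 4, 8
x = [1.0, 2.0, 3.0, 4.0]
normed = layer_norm(x, gamma=[1.0] * C, beta=[0.0] * C)
w1 = [[0.1 * (i + j) for j in range(d_ff)] for i in range(C)]  # (C, d_ff), toy weights
w2 = [[0.1 * (i - j) for j in range(C)] for i in range(d_ff)]  # (d_ff, C), toy weights
out = feed_forward(normed, w1, [0.0] * d_ff, w2, [0.0] * C)
print(len(out))  # 4: same size C as the input
```

The feedforward expands to d_ff and projects back to C, so a block's output shape matches its input shape, which is what lets blocks be stacked.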

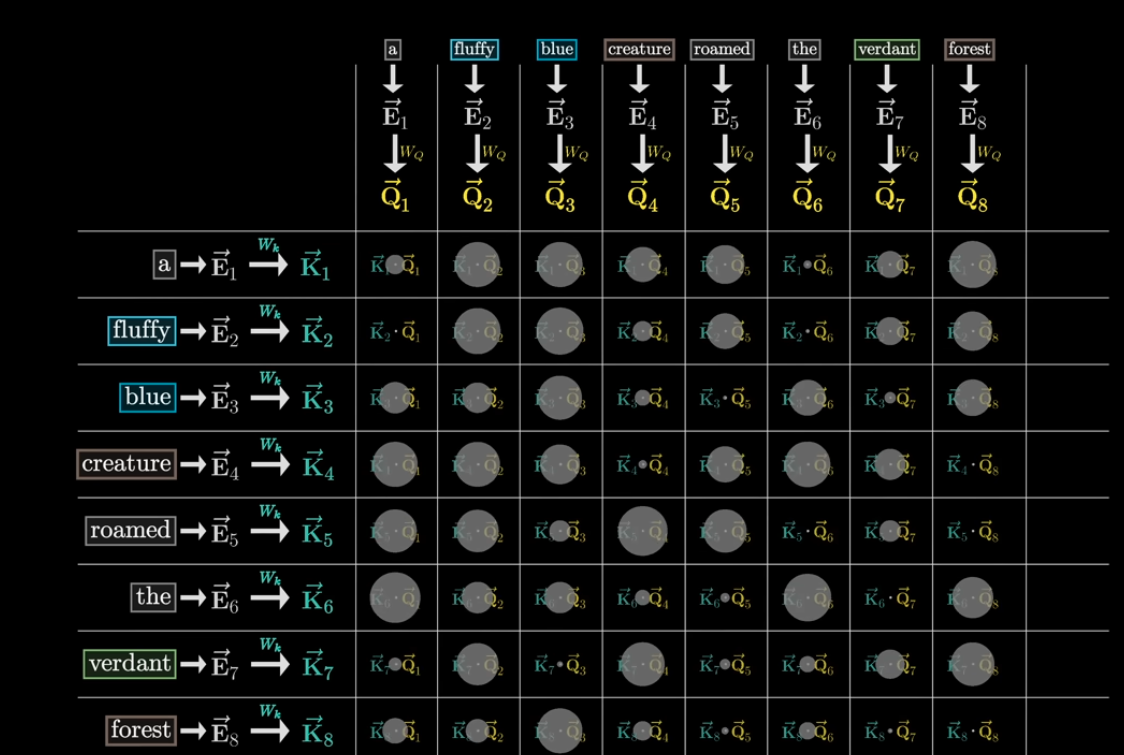

When you compute attention, is it (L,C) * (C,L) or (C, L) * (L,C)?

- We want to model the relationship between every token and every other token in the sequence, i.e. an (L, L) matrix, so it’s (L, C) @ (C, L) → (L, L): queries times transposed keys.

With the batch and head dimensions included (each head gets d_k = C / H channels), Q @ K^T gives a matrix of shape

(B, H, L, d_k) @ (B, H, d_k, L) → (B, H, L, L)
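A minimal single-head, single-example sketch of scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V, showing where the (L, L) matrix appears. The B and H dimensions in the shape above just repeat this computation independently. The Q, K, V values are toy numbers standing in for learned projections of the input.

```python
import math


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """(n, k) @ (k, m) -> (n, m)."""
    return [
        [sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
        for i in range(len(a))
    ]


def transpose(m: list[list[float]]) -> list[list[float]]:
    """(n, m) -> (m, n)."""
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - top) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q, k, v):
    """softmax(Q K^T / sqrt(d_k)) V for one head; returns (output, weights)."""
    d_k = len(q[0])
    scores = matmul(q, transpose(k))  # (L, d_k) @ (d_k, L) -> (L, L)
    scaled = [[s / math.sqrt(d_k) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]  # (L, L), each row sums to 1
    return matmul(weights, v), weights  # (L, L) @ (L, d_v) -> (L, d_v)


q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # (L=3, d_k=2), toy values
k = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # (L=3, d_k=2)
v = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]  # (L=3, d_v=2)
out, weights = attention(q, k, v)
print(len(weights), len(weights[0]))  # 3 3
```

Row i of `weights` is how much token i attends to every token, which is exactly the "every token to every other token" relationship.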

From 3B1B: there’s (causal) masking, so that later words can’t affect earlier ones: each position only attends to itself and the positions before it.

- Really good visualization from 3b1b
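A minimal sketch of a causal (look-ahead) mask as used in decoder self-attention, assuming the common approach of setting the scores of future positions to -inf before the softmax so they get exactly zero weight. Position i can then only attend to positions 0..i. The raw scores are made-up numbers.

```python
import math


def causal_mask(seq_len: int) -> list[list[bool]]:
    """mask[i][j] is True when query i may attend to key j (only if j <= i)."""
    return [[j <= i for j in range(seq_len)] for i in range(seq_len)]


def masked_softmax(scores: list[list[float]], mask: list[list[bool]]) -> list[list[float]]:
    """Row-wise softmax where disallowed positions get probability 0."""
    weights = []
    for row, allowed in zip(scores, mask):
        masked = [s if ok else -math.inf for s, ok in zip(row, allowed)]
        top = max(masked)  # finite, since the diagonal is always allowed
        exps = [math.exp(s - top) for s in masked]  # exp(-inf) == 0.0
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


scores = [[0.5, 1.0, 2.0], [0.1, 0.2, 0.3], [1.0, 1.0, 1.0]]  # (L, L), toy values
w = masked_softmax(scores, causal_mask(3))
for row in w:
    print([round(x, 3) for x in row])
```

The first token ends up attending only to itself, no matter how large the scores for later tokens are.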

Resources

- Annotated transformer

- Illustrated transformer

- LLM Visualization

- Original paper: Attention Is All You Need by Vaswani et al.

- https://goyalpramod.github.io/blogs/Transformers_laid_out/

- Attention is all you need (Transformer) - Model explanation (including math), Inference and Training by Umar Jamil

- the slides

- Just read this blog

- I really liked these slides from Waterloo’s CS480

Some really good videos:

- History of Transformers

- The Transformer Architecture

- Vision Transformer (An Image is Worth 16x16 Words) video

- Swin Transformer

- Vision Transformers

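Attention computes importance: for each token, a set of weights (summing to 1) over all tokens that says how much each one matters when updating it.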

So the left is the attention block.

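Think of the output layer as a multi-class classification over the vocabulary (e.g. 32K tokens): at each position, the model picks the next token out of 32K classes.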
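A minimal sketch of that view: the final layer produces one logit per vocabulary entry, softmax turns the logits into a distribution, and training minimizes cross-entropy against the index of the correct next token. The 32,000 vocabulary size matches the 32K above; the logits are random stand-ins for a real model’s output, and the target index 42 is arbitrary.

```python
import math
import random


def cross_entropy(logits: list[float], target: int) -> float:
    """-log softmax(logits)[target], computed with the log-sum-exp trick."""
    top = max(logits)
    log_sum_exp = top + math.log(sum(math.exp(z - top) for z in logits))
    return log_sum_exp - logits[target]


V = 32_000  # vocabulary size = number of classes
random.seed(0)
logits = [random.gauss(0.0, 1.0) for _ in range(V)]  # stand-in for one position's output
loss = cross_entropy(logits, target=42)
print(f"loss = {loss:.3f}, uniform-guess baseline = {math.log(V):.3f}")
```

A model that guesses uniformly gets a loss of ln(32000) ≈ 10.37 per token, which is a handy sanity check for the loss at the start of training.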

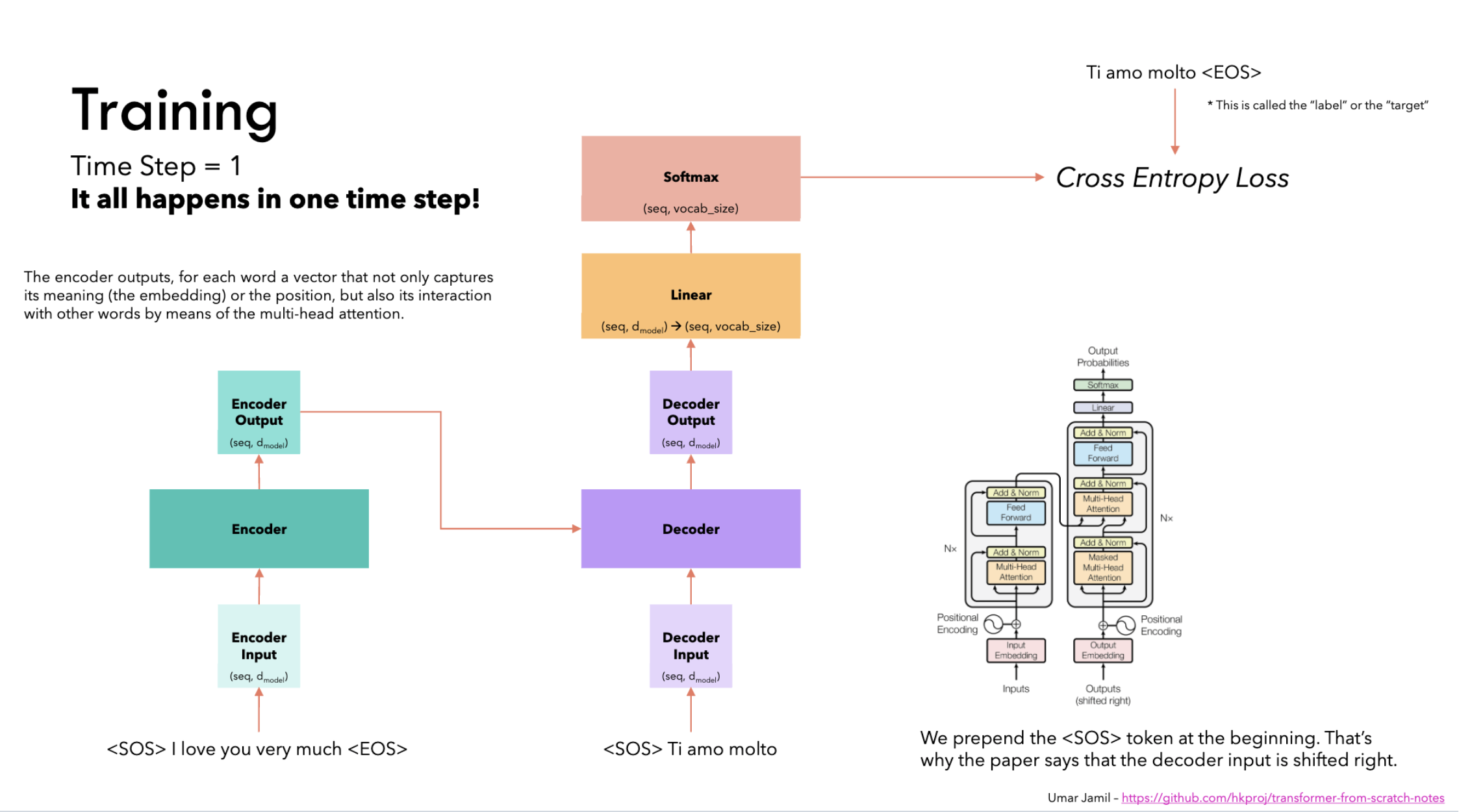

N is the number of layers. Don’t be confused: it’s NOT the number of layers of the feedforward network. It’s the number of blocks (attention + feedforward) stacked on top of each other; N = 6 in the original paper.

- N is around 40 for LLaMA (e.g. LLaMA-13B stacks 40 blocks)

- can be 512
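To make the "N counts blocks, not feedforward layers" point concrete, here is a rough parameter count for one encoder block. Assumptions: the original paper’s base sizes (C = d_model = 512, d_ff = 2048, N = 6), a bias on every linear layer, and two LayerNorms per block, each with a gain and bias of size C. Embeddings and the decoder are not counted. The feedforward inside each block always has exactly two linear layers; N only controls how many blocks are stacked.

```python
def encoder_block_params(c: int, d_ff: int) -> int:
    """Parameters in one encoder block: self-attention + feedforward + 2 LayerNorms."""
    attention = 4 * (c * c + c)  # W_Q, W_K, W_V, W_O: each (C, C) plus a bias of size C
    feed_forward = (c * d_ff + d_ff) + (d_ff * c + c)  # two linear layers: C -> d_ff -> C
    layer_norms = 2 * (2 * c)  # two LayerNorms, each with gamma and beta of size C
    return attention + feed_forward + layer_norms


def encoder_stack_params(n_blocks: int, c: int, d_ff: int) -> int:
    """N identical blocks stacked: depth scales with N, block size does not."""
    return n_blocks * encoder_block_params(c, d_ff)


print(encoder_block_params(c=512, d_ff=2048))  # 3152384
print(encoder_stack_params(6, 512, 2048))      # 18914304
```

Doubling N doubles the stack’s parameters but leaves each block (and its two-layer feedforward) unchanged.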

Implementation Details

The part about how training data is fed to the decoder tripped me up when reading the Annotated Transformer (reading it is really, really helpful, though).

Gold target:

<bos> I like eating mushrooms <eos>

When we build inputs/labels:

- decoder input (trg) = <bos> I like eating mushrooms

- labels (trg_y) = I like eating mushrooms <eos>

So the very first training example is:

- Input to decoder at position 0: <bos>

- Label at position 0: I

That means: the model is explicitly trained to predict the first word (“I”) given only <bos> and the encoder context.
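A minimal sketch of the shift, assuming the gold target is a list of tokens that starts with <bos> and ends with <eos>. It follows the same slicing idea as the `[:, :-1]` / `[:, 1:]` slices in the Annotated Transformer’s batch code (drop the last token for the decoder input, drop the first token for the labels); `make_decoder_io` is a hypothetical helper name.

```python
def make_decoder_io(gold: list[str]) -> tuple[list[str], list[str]]:
    """Hypothetical helper: split a gold sequence into (decoder input, labels).

    The decoder input drops the final <eos>; the labels drop the initial <bos>,
    so labels[i] is the token to predict after seeing input[: i + 1].
    """
    return gold[:-1], gold[1:]


gold = ["<bos>", "I", "like", "eating", "mushrooms", "<eos>"]
trg, trg_y = make_decoder_io(gold)
for pos, label in enumerate(trg_y):
    print(f"position {pos}: sees {trg[: pos + 1]} -> predicts {label!r}")
```

All positions are fed in at once during training; the causal mask is what guarantees position i only "sees" trg[: i + 1].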