Branch Prediction

I first saw this idea in ECE222.

It seems fundamental; I heard George Hotz talk about it.

This matters in the whole CPU vs. GPU debate, because GPUs generally don’t have branch prediction.

Why don't GPUs have branch prediction?

Because GPUs are built around a different bet: run tons of threads in parallel and hide latency, instead of making one thread run as fast as possible. Classic branch prediction is mostly a CPU trick for single-thread performance.
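To make the contrast concrete, here is a toy sketch of what I understand typical SIMT GPUs do instead of predicting: the threads in a group (a "warp") run in lockstep, and when they disagree on a branch the hardware runs each side in turn with the non-participating lanes masked off. The function name `run_warp` and the example branch are made up for illustration; this is plain Python modelling the idea, not real GPU code.

```python
# Toy model of SIMT branch divergence (illustrative only, not real GPU code).
# Assumption: one "warp" is just a list of per-lane input values.

def run_warp(values):
    """Evaluate `y = x * 2 if x is even else x + 1` for every lane in lockstep.

    Returns (outputs, passes), where `passes` counts how many sides of the
    branch the whole warp had to step through.
    """
    mask = [x % 2 == 0 for x in values]  # per-lane branch outcome
    out = [None] * len(values)
    passes = 0

    # Pass 1: lanes whose condition is true run the "then" side;
    # the other lanes sit idle (masked off).
    if any(mask):
        passes += 1
        for lane, x in enumerate(values):
            if mask[lane]:
                out[lane] = x * 2

    # Pass 2: the remaining lanes run the "else" side.
    if not all(mask):
        passes += 1
        for lane, x in enumerate(values):
            if not mask[lane]:
                out[lane] = x + 1

    return out, passes


uniform, uniform_passes = run_warp([2, 4, 6, 8])    # all lanes agree
diverged, diverged_passes = run_warp([1, 2, 3, 4])  # lanes disagree
print(uniform, uniform_passes)
print(diverged, diverged_passes)
```

When every lane agrees, the warp pays for one side of the branch; when lanes disagree, it pays for both. In this model there is never a guess that can turn out wrong, so there is nothing to predict. The cost shows up as idle lanes rather than as a flushed pipeline.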

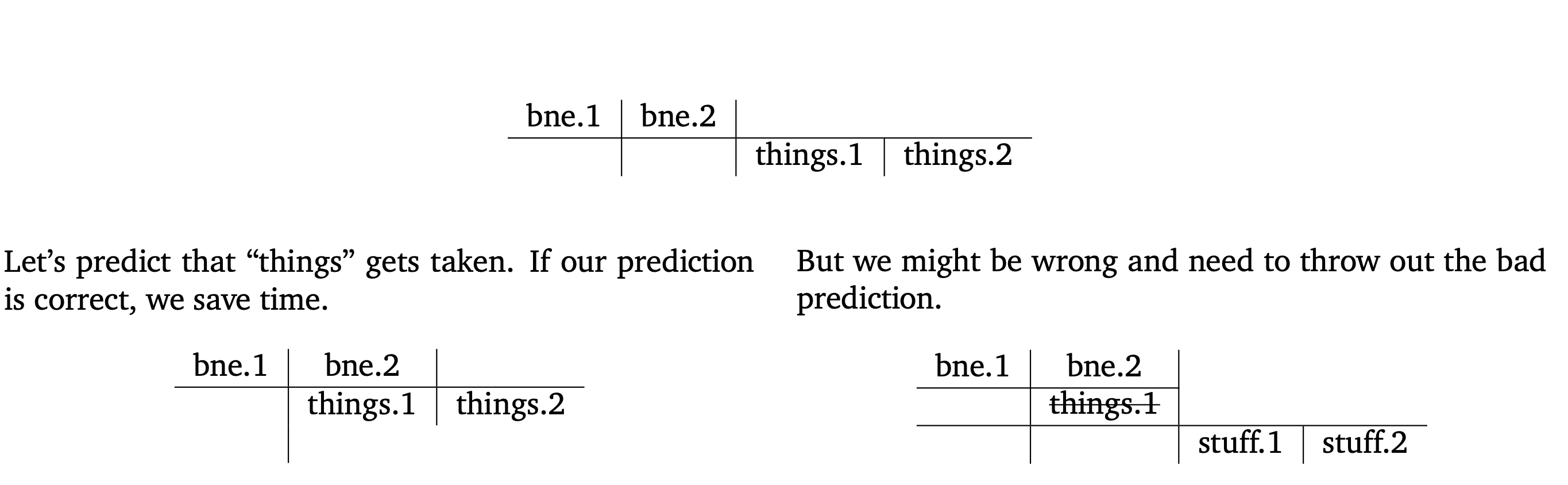

How does branch prediction work? If we predict correctly, we can pipeline: