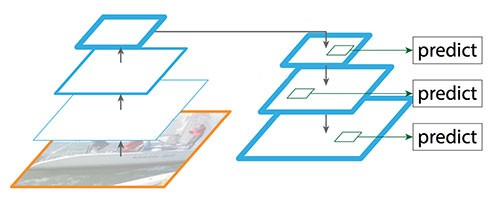

Feature Pyramid Network (FPN)

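I came across this through self-driving car companies, which use it in their vision stacks; the method itself comes from Facebook AI Research (see the paper below).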

Okay, I understand it a bit better now: an FPN treats the image at multiple scales and passes the high-level context from the coarse levels down to all the finer resolutions, so you can make better predictions at every scale.

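I think the actual merging is done with a Transformer, and that they get the ground truth in simulation; I need to understand how this works. (In the FPN paper itself, levels are merged without a Transformer: each coarser map is upsampled and added to a 1x1-projected lateral map from the backbone, so the Transformer presumably belongs to the self-driving pipelines built on top.) Basically, an FPN takes an image and computes features at multiple resolutions, arranged in a pyramid shape. In the top-down pathway we start from the lowest resolution (top of the pyramid) and move down, merging in finer detail at each level. Videos: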

- FPN: https://www.youtube.com/watch?v=6fXBXNjd1JQ&ab_channel=MaziarRaissi

- BiFPN (EfficientDet): https://www.youtube.com/watch?v=vK_SWupTpY4&ab_channel=MaziarRaissi
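To make the top-down merge concrete, here is a minimal NumPy sketch, assuming a toy backbone (2x2 average pooling plus a random 1x1 channel mix per stage) in place of a real CNN, and random weights instead of trained ones. The top-down part follows the FPN recipe: a 1x1 lateral projection of each backbone map to a common width `d`, nearest-neighbour 2x upsampling of the coarser level, then element-wise addition. The 3x3 convolution the paper applies after each merge is left out, and all function names are made up for illustration.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution: a per-pixel linear map over channels.
    x: (C_in, H, W), w: (C_out, C_in) -> (C_out, H, W)."""
    return np.einsum("oc,chw->ohw", w, x)


def avg_pool2x(x):
    """2x2 average pooling with stride 2 on a (C, H, W) map (H, W must be even)."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).mean(axis=(2, 4))


def upsample2x_nearest(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def toy_backbone(image, channels, rng):
    """Hypothetical stand-in for a CNN backbone (the bottom-up pathway).
    Each stage halves the resolution, mixes channels with a random 1x1 conv, applies ReLU."""
    feats, x = [], image
    for c_out in channels:
        w = rng.standard_normal((c_out, x.shape[0])) * 0.1
        x = np.maximum(conv1x1(avg_pool2x(x), w), 0.0)
        feats.append(x)
    return feats  # finest (highest resolution) first


def fpn_top_down(feats, d, rng):
    """FPN top-down pathway with lateral connections (3x3 output convs omitted)."""
    # Lateral 1x1 convs project every backbone map to the same width d.
    laterals = [conv1x1(f, rng.standard_normal((d, f.shape[0])) * 0.1) for f in feats]
    outs = [laterals[-1]]  # start at the top of the pyramid (coarsest map)
    for lat in reversed(laterals[:-1]):
        # Upsample the coarser output and add the finer lateral feature.
        outs.append(lat + upsample2x_nearest(outs[-1]))
    return outs[::-1]  # finest first, every level has d channels


rng = np.random.default_rng(0)
image = rng.standard_normal((3, 64, 64))
feats = toy_backbone(image, channels=[16, 32, 64, 128], rng=rng)
pyramid = fpn_top_down(feats, d=8, rng=rng)
print([p.shape for p in pyramid])
# [(8, 32, 32), (8, 16, 16), (8, 8, 8), (8, 4, 4)]
```

Every level ends up with the same channel count, which is what lets one shared prediction head run on all of them.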

The original paper is called “Feature Pyramid Networks for Object Detection”.

There is this blog article: https://jonathan-hui.medium.com/understanding-feature-pyramid-networks-for-object-detection-fpn-45b227b9106c

BiFPN

BiFPN is the follow-up to FPN, introduced in EfficientDet.

https://github.com/google/automl/tree/master/efficientdet (original GitHub). There is also this PyTorch repository that you should consult:

https://github.com/tristandb/EfficientDet-PyTorch
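BiFPN's main change to the merge step is that each node fuses its inputs with learned, non-negative weights ("fast normalized fusion" in the EfficientDet paper): O = sum_i w_i * I_i / (eps + sum_j w_j), with a ReLU on each w_i and eps = 1e-4. Below is a minimal NumPy sketch, assuming the weights are fixed numbers rather than trained parameters and leaving out the depthwise-separable convolution that follows each fusion; the function names and toy shapes are hypothetical.

```python
import numpy as np


def fast_normalized_fusion(inputs, weights, eps=1e-4):
    """BiFPN-style fusion: O = sum_i w_i * I_i / (eps + sum_j w_j).
    ReLU keeps each weight non-negative, so the result is a (near) convex combination."""
    w = np.maximum(np.asarray(weights, dtype=float), 0.0)
    w = w / (eps + w.sum())
    return sum(wi * x for wi, x in zip(w, inputs))


def upsample2x_nearest(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


# Toy top-down BiFPN node: fuse the P4 input with the upsampled P5 input.
# The weights would be learned in a real model; here they are fixed, hypothetical values.
rng = np.random.default_rng(0)
p4_in = rng.standard_normal((8, 16, 16))
p5_in = rng.standard_normal((8, 8, 8))
p4_td = fast_normalized_fusion([p4_in, upsample2x_nearest(p5_in)], weights=[1.0, 0.5])
print(p4_td.shape)  # (8, 16, 16)
```

In the full BiFPN, each output node fuses three inputs this way (its own input, the top-down intermediate, and the resized output from the level below), and the whole bidirectional block is repeated several times.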

- https://towardsdatascience.com/review-fpn-feature-pyramid-network-object-detection-262fc7482610