Flash Attention

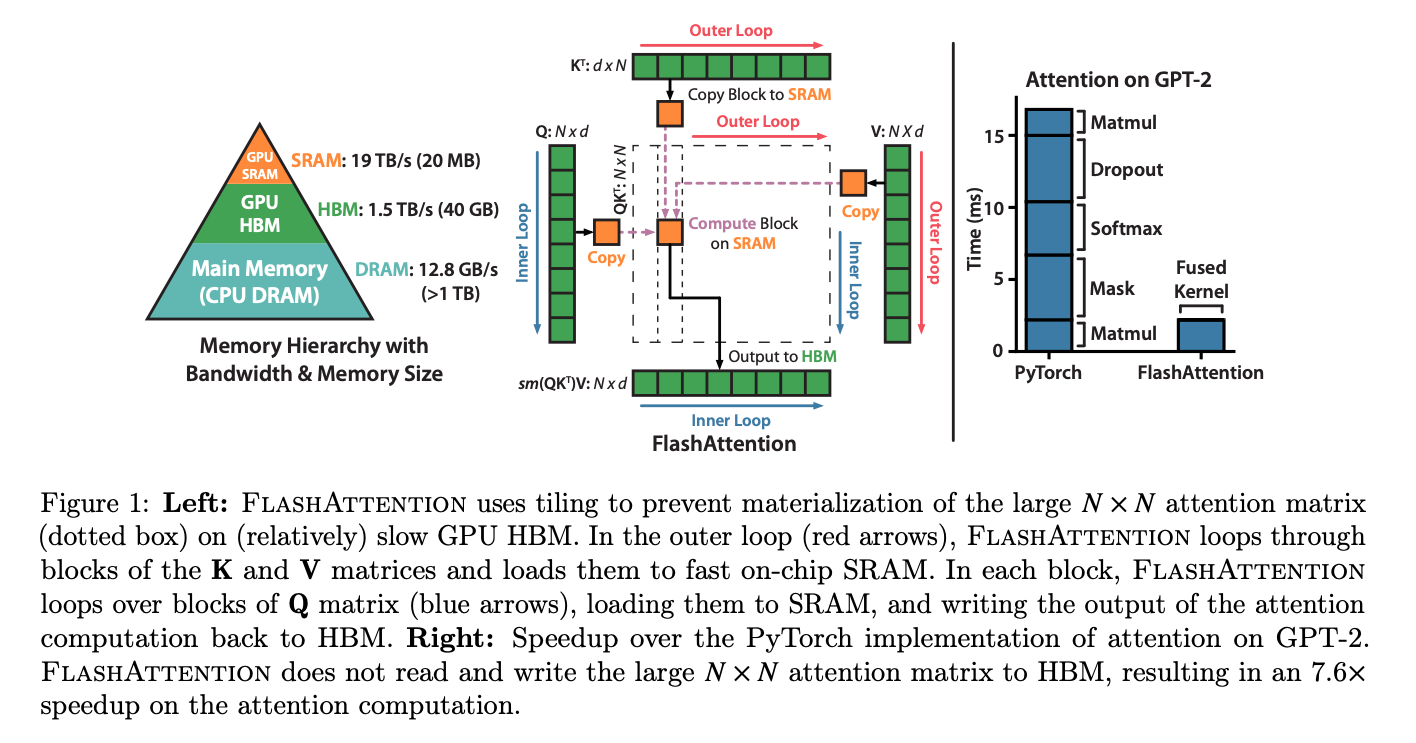

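FlashAttention is an exact, memory-efficient algorithm for computing self-attention in Transformers. It reduces memory usage and increases speed through tiling and kernel fusion: the computation is processed block by block inside a single fused kernel, so the full attention matrix is never written out to GPU high-bandwidth memory. This makes it particularly useful for large-scale deep learning models and long input sequences.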
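To make the tiling idea concrete, here is a minimal NumPy sketch of blockwise attention with a running ("online") softmax. It is an illustration under simplifying assumptions, not the paper's kernel: it runs on the CPU, tiles only over keys and values (the real algorithm also tiles queries and keeps blocks in on-chip SRAM), and the names `naive_attention`, `tiled_attention`, and `block_size` are hypothetical, chosen for this example.

```python
import numpy as np

def naive_attention(q, k, v):
    """Reference: materializes the full (n_q, n_k) score matrix."""
    scale = 1.0 / np.sqrt(q.shape[-1])
    s = (q @ k.T) * scale
    s = s - s.max(axis=-1, keepdims=True)  # stabilize the softmax
    p = np.exp(s)
    return (p / p.sum(axis=-1, keepdims=True)) @ v

def tiled_attention(q, k, v, block_size=4):
    """Same result, but only one (n_q, block_size) score tile exists at a time."""
    n_q, d = q.shape
    scale = 1.0 / np.sqrt(d)
    out = np.zeros((n_q, v.shape[1]))   # unnormalized output accumulator
    row_max = np.full(n_q, -np.inf)     # running max of scores per query
    row_sum = np.zeros(n_q)             # running softmax denominator

    for start in range(0, k.shape[0], block_size):
        k_blk = k[start:start + block_size]
        v_blk = v[start:start + block_size]

        s = (q @ k_blk.T) * scale       # scores for this key block only
        new_max = np.maximum(row_max, s.max(axis=-1))

        # Rescale previously accumulated values to the new running max.
        correction = np.exp(row_max - new_max)
        p = np.exp(s - new_max[:, None])

        row_sum = row_sum * correction + p.sum(axis=-1)
        out = out * correction[:, None] + p @ v_blk
        row_max = new_max

    return out / row_sum[:, None]

rng = np.random.default_rng(0)
q, k, v = (rng.standard_normal((10, 8)) for _ in range(3))
print(np.allclose(tiled_attention(q, k, v, block_size=3), naive_attention(q, k, v)))
```

The rescaling step is what lets the softmax be computed incrementally: each block's contribution is expressed relative to the running maximum, so the result matches the naive version up to floating-point error while the peak size of the score buffer depends on `block_size` rather than on the full key length.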

https://arxiv.org/pdf/2205.14135