Attention (Transformer)

Terms:

- Self-Attention

- Masked Attention

- Sparse Attention

- Flash Attention

- Paged Attention (stores the KV cache in fixed-size blocks, like paged virtual memory, to cut memory waste during inference)

- Multi-Head Latent Attention

- Multi-Head Attention

- Multi-Query Attention (all query heads share a single key/value head)

- Grouped Query Attention

Confusion:

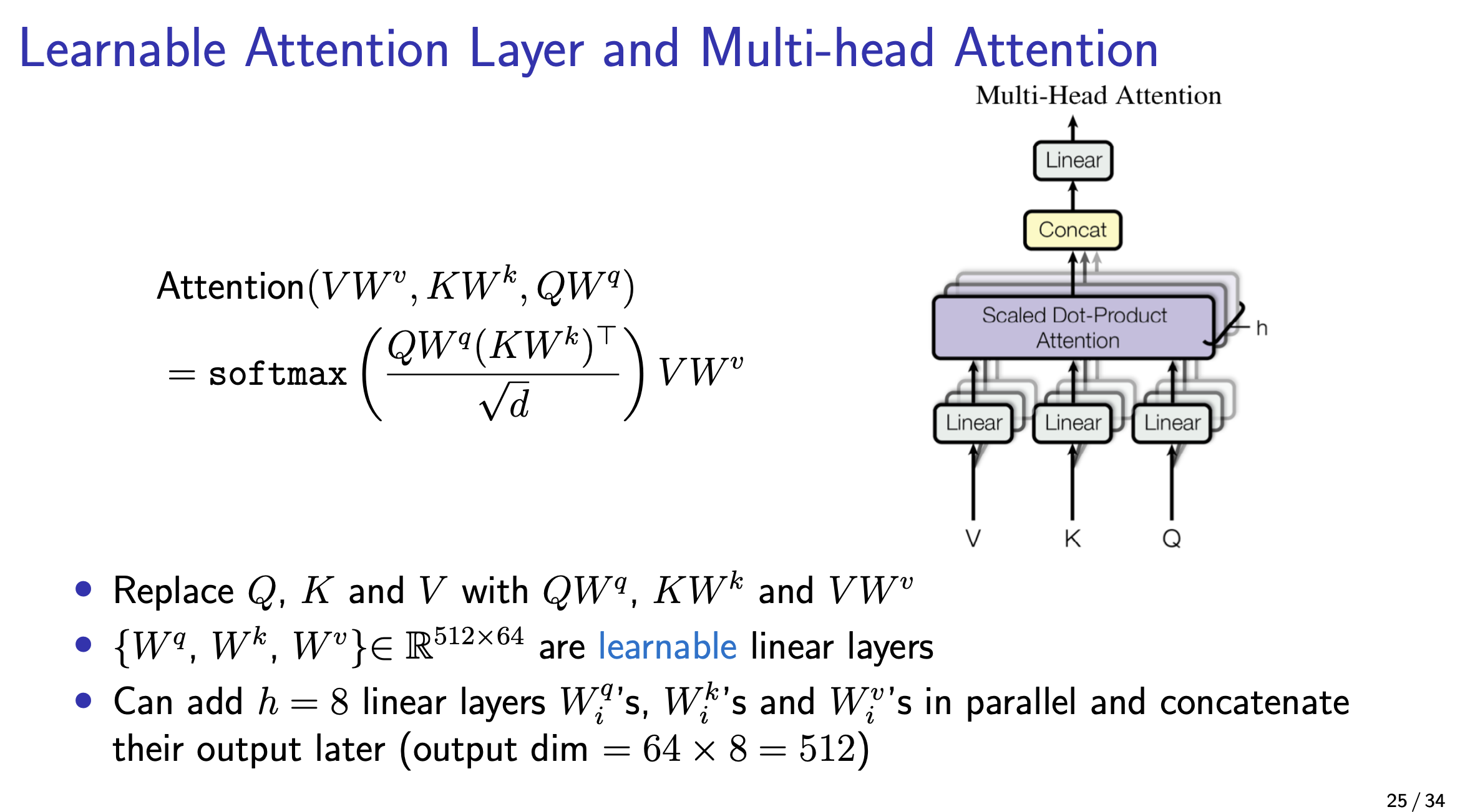

In matrix form

Attention mechanism

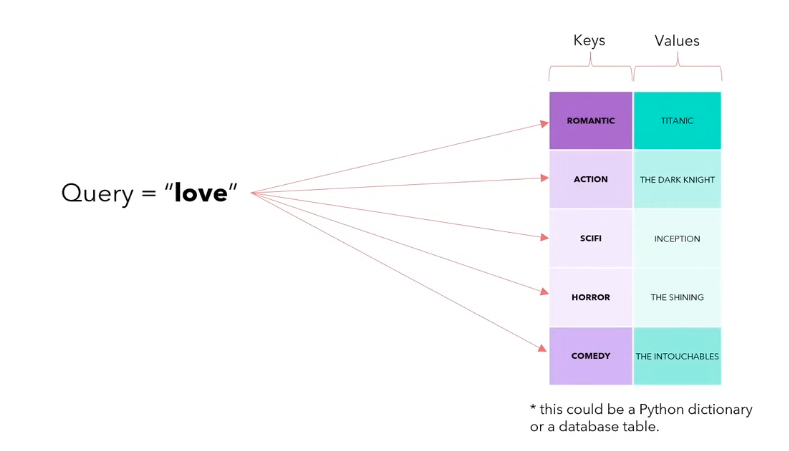

Roughly speaking:

- Query (Q): “What am I looking for?”

- Key (K): “What do I have?” (what I represent)

- Value (V): “What do I give you if you choose me?” (what I actually contain)

Another vid

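I was really confused about QKV. Related discussions:

- https://www.reddit.com/r/MachineLearning/comments/19ewfm9/d_attention_mystery_which_is_which_q_k_or_v/

- https://stats.stackexchange.com/questions/421935/what-exactly-are-keys-queries-and-values-in-attention-mechanisms

Conceptually, nothing intrinsic distinguishes Q from K: both are just learned linear projections of the embeddings, and the dot product treats them the same way. For all we know, Q is K and K is Q. What separates them is the role each one plays: the softmax normalizes over the keys for each query, and every key is paired with a value.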

Think of a library:

- Query = what you want to read about

- Keys = the summary on the card catalog for each book

- Values = the full content of each book
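The library analogy can be made concrete as a tiny soft lookup. This is a minimal sketch in plain Python with made-up 2-dimensional vectors. The catalog entries, the numbers, and the helper name `soft_lookup` are all illustrative assumptions. The query is scored against every key, the scores go through a softmax, and the result is a weighted blend of the values rather than a single hard match.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def soft_lookup(query, keys, values):
    """Score the query against each key, then blend the values by those weights."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    blended = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, blended

# Hypothetical catalog: keys are the card summaries, values are the book contents.
keys = [[1.0, 0.0],    # card for a cooking book
        [0.0, 1.0],    # card for an astronomy book
        [0.7, 0.7]]    # card for a book covering a bit of both
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]

query = [0.0, 3.0]     # "I want to read about astronomy"
weights, blended = soft_lookup(query, keys, values)
```

Unlike a real card catalog, no single book is picked: every book contributes, with the best-matching key contributing the most.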

Question

In attention, are W_Q, W_K, and W_V really necessary? Why not just use the raw embeddings as Q, K, and V? The embeddings are learned and are going to change anyway.
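One observation that bears on this question: if Q and K are both the raw embedding matrix X, the score matrix X X^T is always symmetric, so token i scores token j exactly as token j scores token i. Separate projections remove that constraint. The sketch below checks this with random matrices. The sizes, the seed, and the helper names are arbitrary choices for illustration, and this is one argument rather than a complete answer.

```python
import random

def matmul(a, b):
    """Plain-list matrix product of a (p x q) and b (q x r)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def is_symmetric(m, tol=1e-9):
    n = len(m)
    return all(abs(m[i][j] - m[j][i]) <= tol for i in range(n) for j in range(n))

def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n_tokens, d_model = 4, 3
X = random_matrix(n_tokens, d_model, rng)     # raw token embeddings
W_Q = random_matrix(d_model, d_model, rng)    # stand-ins for learned projections
W_K = random_matrix(d_model, d_model, rng)

raw_scores = matmul(X, transpose(X))          # Q = K = X
proj_scores = matmul(matmul(X, W_Q), transpose(matmul(X, W_K)))

raw_is_symmetric = is_symmetric(raw_scores)    # always True: (X X^T)^T = X X^T
proj_is_symmetric = is_symmetric(proj_scores)  # generally False for unrelated W_Q, W_K
```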

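Inputs:

- Query $Q \in \mathbb{R}^{n \times d_k}$

- Key $K \in \mathbb{R}^{m \times d_k}$

- Value $V \in \mathbb{R}^{m \times d_v}$

Output: an $n \times d_v$ matrix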

The dot product of the query and key tells you how well the key and query are aligned.

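Then (the softmax is applied row-wise, i.e., over the keys for each query, so each row of weights sums to 1):

$$\text{Attention}(Q, K, V) = \text{softmax}\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V$$

What is $d_k$? I think it is the number of dimensions of each key (and query) vector.

- Dividing by $\sqrt{d_k}$ is just a scaling factor

- I asked the professor, and he said that empirically it gives the best performance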
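The usual justification, given in the original Transformer paper, is about variance. If the components of a query and a key are independent with mean 0 and variance 1, their dot product has variance $d_k$. Unscaled scores therefore grow with the dimension and push the softmax toward a nearly one-hot output with very small gradients. Dividing by $\sqrt{d_k}$ brings the variance back to about 1. The sketch below is a quick empirical check under that independence assumption. The sample count, the seed, and the helper name `dot_variance` are arbitrary.

```python
import math
import random

def dot_variance(d_k, n_samples, rng, scale=False):
    """Empirical variance of q . k for random unit-variance vectors of size d_k."""
    dots = []
    for _ in range(n_samples):
        q = [rng.gauss(0.0, 1.0) for _ in range(d_k)]
        k = [rng.gauss(0.0, 1.0) for _ in range(d_k)]
        dot = sum(a * b for a, b in zip(q, k))
        dots.append(dot / math.sqrt(d_k) if scale else dot)
    mean = sum(dots) / n_samples
    return sum((x - mean) ** 2 for x in dots) / n_samples

rng = random.Random(0)
d_k = 64
var_unscaled = dot_variance(d_k, 2000, rng)             # should land near d_k
var_scaled = dot_variance(d_k, 2000, rng, scale=True)   # should land near 1
```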

Attention Layer

This is cross-attention
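A minimal sketch of cross-attention in matrix form, written with plain Python lists so it runs without dependencies. The queries come from one sequence and the keys and values from another, so the number of queries n and the number of keys m can differ. The function name `attention` and the toy numbers are illustrative assumptions, and the learned projections W_Q, W_K, and W_V are left out to keep it short.

```python
import math

def matmul(a, b):
    """Plain-list matrix product of a (p x q) and b (q x r)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(row):
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """softmax(Q K^T / sqrt(d_k)) V, with the softmax applied to each row."""
    d_k = len(K[0])
    scores = matmul(Q, transpose(K))                                  # n x m
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, V), weights                                # n x d_v, n x m

# Toy cross-attention: 2 queries from one sequence, 3 key/value pairs from another.
Q = [[1.0, 0.0], [0.0, 1.0]]                   # n = 2, d_k = 2
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]       # m = 3, d_k = 2
V = [[1.0, 2.0, 3.0, 4.0],
     [5.0, 6.0, 7.0, 8.0],
     [9.0, 10.0, 11.0, 12.0]]                  # m = 3, d_v = 4

output, weights = attention(Q, K, V)           # output has one row per query
```

Self-attention is the special case where Q, K, and V are all derived from the same sequence, so n equals m.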