Kernel Fusion

In short, kernel fusion means combining several CUDA kernels into one.

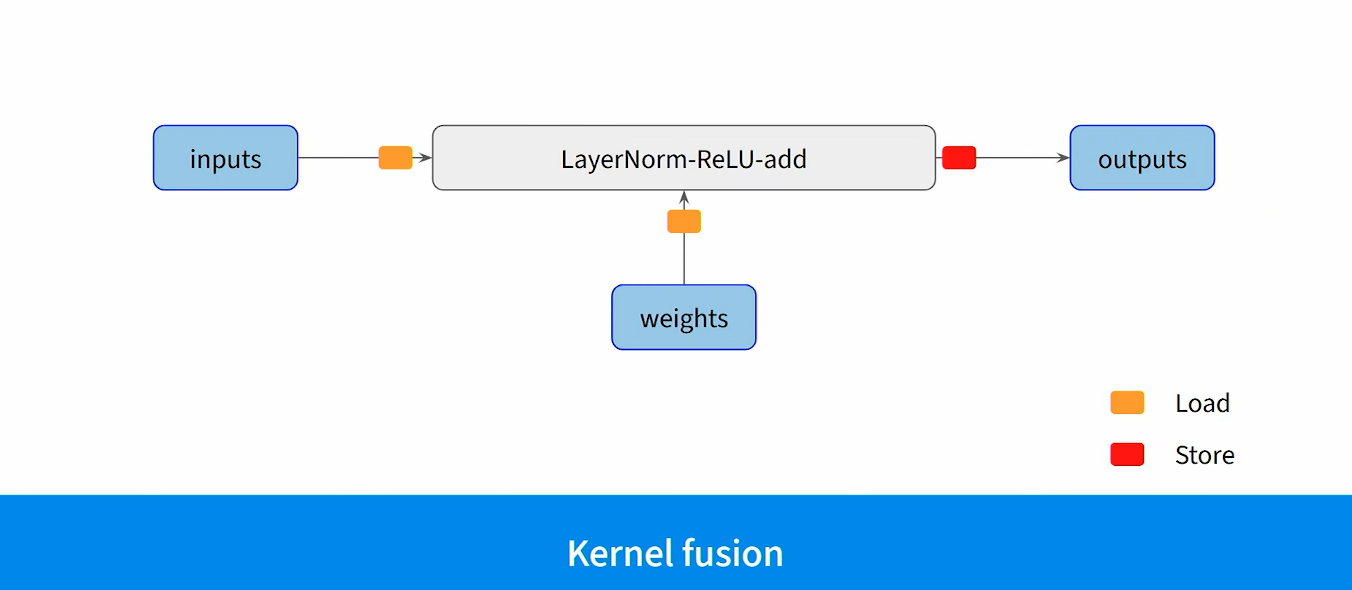

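Kernel fusion is an optimization technique in GPU computing that combines multiple small GPU operations (kernels) into a single, more efficient kernel. Intermediate results can then stay on-chip, in registers or shared memory, instead of making a round trip through global memory.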

Before Kernel Fusion:
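To make the cost concrete, here is a minimal CPU-side sketch in Python (not GPU code) of an unfused pipeline computing y = relu(x * s + b). Each hypothetical `*_kernel` function stands in for one kernel launch that reads a whole input buffer and writes a whole output buffer. The `stats` dictionary is an illustrative counter, not a real profiler.

```python
# Hypothetical CPU-side model of an UNFUSED pipeline y = relu(x * s + b).
# Each *_kernel function stands in for one GPU kernel launch: it reads its
# whole input buffer and writes a whole output buffer ("global memory").

stats = {"launches": 0, "reads": 0, "writes": 0}

def _record(n):
    # Book-keeping for one launch that reads n elements and writes n elements.
    stats["launches"] += 1
    stats["reads"] += n
    stats["writes"] += n

def scale_kernel(x, s):
    _record(len(x))
    return [v * s for v in x]

def add_bias_kernel(x, b):
    _record(len(x))
    return [v + b for v in x]

def relu_kernel(x):
    _record(len(x))
    return [max(v, 0.0) for v in x]

def unfused(x, s, b):
    tmp1 = scale_kernel(x, s)        # intermediate buffer written out
    tmp2 = add_bias_kernel(tmp1, b)  # read back, another buffer written out
    return relu_kernel(tmp2)         # read back again, final output written

y = unfused([-2.0, -0.5, 1.0, 3.0], 2.0, 0.5)
print(y)      # [0.0, 0.0, 2.5, 6.5]
print(stats)  # {'launches': 3, 'reads': 12, 'writes': 12}
```

Three launches perform 12 element reads and 12 element writes for a 4-element input. Two of the buffers exist only to be read back by the next kernel.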

After Kernel Fusion:
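The same computation, y = relu(x * s + b), written as a single fused kernel. This is again a CPU-side Python sketch under a simplified memory model, and the function name and `stats` counter are hypothetical. On a GPU this would be one CUDA kernel in which each thread loads `x[i]` once, applies all three operations in registers, and stores `y[i]` once.

```python
# Hypothetical CPU-side model of a FUSED kernel for y = relu(x * s + b).

stats = {"launches": 0, "reads": 0, "writes": 0}

def fused_scale_bias_relu_kernel(x, s, b):
    # One launch: each element is read once, scale + bias + ReLU are applied
    # while the value is "in a register", and the result is written once.
    stats["launches"] += 1
    stats["reads"] += len(x)
    stats["writes"] += len(x)
    return [max(v * s + b, 0.0) for v in x]

y = fused_scale_bias_relu_kernel([-2.0, -0.5, 1.0, 3.0], 2.0, 0.5)
print(y)      # [0.0, 0.0, 2.5, 6.5]
print(stats)  # {'launches': 1, 'reads': 4, 'writes': 4}
```

The result is identical, but it takes one launch and a third of the memory traffic, because no intermediate buffers are materialized.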

In deep learning, models often run long sequences of operations, many of them element-wise, such as:

- Matrix multiplications

- Activation functions (ReLU, Softmax, etc.)

- Normalization (LayerNorm, BatchNorm, etc.)

- Scaling and element-wise additions
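With matrix multiplications, a common form of fusion is to apply the element-wise steps that follow (bias add, activation) in the matmul's epilogue, just before each output value is stored. Below is a naive pure-Python sketch of that idea with a hypothetical function name and tiny hand-picked inputs. A real GPU kernel would tile the loops and keep its accumulators in registers.

```python
def matmul_bias_relu_fused(a, w, bias):
    """Compute relu(a @ w + bias) in a single pass over the output.

    a: M x K matrix, w: K x N matrix (lists of lists), bias: list of length N.
    """
    m, k = len(a), len(a[0])
    n = len(w[0])
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * w[p][j]  # accumulate the dot product
            # Epilogue: bias add and ReLU happen before the single store,
            # so the pre-activation matrix is never written out.
            out[i][j] = max(acc + bias[j], 0.0)
    return out

a = [[1.0, 2.0], [3.0, 4.0]]
w = [[1.0, -1.0], [0.5, -2.0]]
bias = [0.0, 1.0]
result = matmul_bias_relu_fused(a, w, bias)
print(result)  # [[2.0, 0.0], [5.0, 0.0]]
```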

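Without fusion, each of these steps runs as its own GPU kernel, which leads to:

- Excessive global-memory traffic: each kernel writes its intermediate result to global memory, and the next kernel immediately reads it back (the global memory bottleneck)

- High launch overhead: every kernel launch adds a fixed latency, which dominates when the kernels themselves do little work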
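A rough back-of-the-envelope estimate of both costs, assuming a chain of element-wise ops where each op has exactly one input and one output of the same size and nothing is served from cache. The function name and parameters are hypothetical. Unfused, each of the k ops reads and writes all N elements of b bytes, giving about 2·k·N·b bytes and k launches. Fused, the chain reads once and writes once, giving about 2·N·b bytes and one launch.

```python
def elementwise_traffic(n_elems, n_ops, bytes_per_elem=4):
    """Estimate global-memory bytes and kernel launches for a chain of
    n_ops element-wise ops (one input, one output each, no caching)."""
    unfused = {"launches": n_ops,
               "bytes": 2 * n_ops * n_elems * bytes_per_elem}
    fused = {"launches": 1,
             "bytes": 2 * n_elems * bytes_per_elem}
    return unfused, fused

# 2**24 float32 values (64 MiB per buffer) through 3 element-wise ops.
unfused, fused = elementwise_traffic(n_elems=1 << 24, n_ops=3)
print(unfused["bytes"] / 2**20, fused["bytes"] / 2**20)  # 384.0 128.0
```

Under these assumptions, the traffic saving equals the number of fused ops. Real kernels with extra inputs (such as a bias vector) or cache reuse will deviate from this estimate.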