Masked Attention

https://www.youtube.com/watch?v=bCz4OMemCcA

How is masked attention implemented? With a lower-triangular matrix: each position can attend only to itself and to earlier positions.

- Andrej Karpathy shows how this is implemented

- You just add the causal attention mask to the attention scores before the softmax

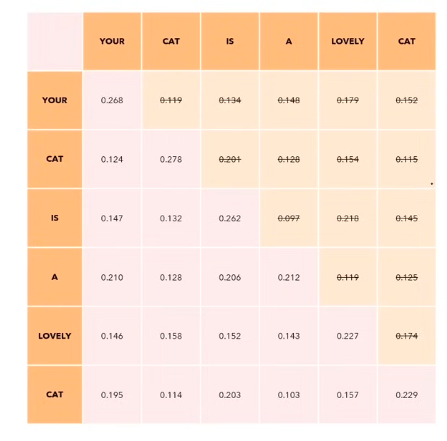
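
To make the "just add the mask" step concrete, here is a minimal sketch in plain Python (no framework). It is not the code from the video. The function names (`causal_mask`, `softmax`, `masked_attention_weights`) and the example scores are made up for illustration, and it assumes the common additive form of the mask: 0 on and below the diagonal, `-inf` above it.

```python
import math

def causal_mask(n):
    """n x n additive mask: 0.0 on and below the diagonal, -inf above it."""
    return [[0.0 if j <= i else -math.inf for j in range(n)] for i in range(n)]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    m = max(row)  # finite, because the diagonal entry is never masked
    exps = [math.exp(x - m) for x in row]  # exp(-inf) evaluates to 0.0
    total = sum(exps)
    return [e / total for e in exps]

def masked_attention_weights(scores):
    """Add the causal mask to the raw scores, then softmax each row."""
    mask = causal_mask(len(scores))
    masked = [
        [s + m for s, m in zip(score_row, mask_row)]
        for score_row, mask_row in zip(scores, mask)
    ]
    return [softmax(row) for row in masked]

# Hypothetical raw attention scores for a 3-token sequence (assumed values).
scores = [
    [1.0, 2.0, 3.0],
    [1.0, 1.0, 1.0],
    [0.5, 0.5, 0.5],
]
weights = masked_attention_weights(scores)
for row in weights:
    print([round(w, 3) for w in row])
```

Because `-inf` becomes exactly 0 after the softmax, every row of `weights` is zero above the diagonal, so the weight matrix is lower triangular and token i never attends to a later token.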

The causal mask looks like this: