SE465: Software Testing and Quality Assurance

Link to course notes here.

There are weekly assignments. So this might get really annoying.

Things that I need to review for final:

- Test smells (p.37 of Emily notes)

- what are the different integrations? and differences?

- Cluster integration (inter-class)

Concepts

Some basic terminology:

- A test fixture sets up the data that are needed for every test

- Example: If you are testing code that updates an employee record, you need an employee record to test it on

- A unit test is a test of a single class

- A test case tests the response of a single method to a particular set of inputs

- A test suite is a collection of test cases

- A test runner is software that runs tests and reports results

Fault vs failure vs error: See Fault

- An error is a mistake made by a person (e.g., the developer who wrote the code)

- A fault is the result of an error

- A failure is what happens when a fault executes
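To make the distinction concrete, here is a minimal sketch (the `numZero` method is a hypothetical example, not taken from the course notes). The developer's error was starting the loop at index 1; the resulting fault is the wrong loop bound in the code; a failure only shows up for inputs where index 0 matters.

```java
class FaultVsFailure {
    /** Intended behavior: count how many elements of arr are zero. */
    static int numZero(int[] arr) {
        int count = 0;
        // FAULT: the loop should start at i = 0, so index 0 is never checked.
        for (int i = 1; i < arr.length; i++) {
            if (arr[i] == 0) {
                count++;
            }
        }
        return count;
    }

    public static void main(String[] args) {
        // The fault executes, but the output is still correct: no failure.
        System.out.println(numZero(new int[] {2, 7, 0})); // correct answer is 1, prints 1
        // The zero sits at index 0, so the fault causes a wrong output: a failure.
        System.out.println(numZero(new int[] {0, 7, 2})); // correct answer is 1, prints 0
    }
}
```

Both calls execute the faulty loop header, but only the second one turns the fault into an observable failure.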

There are 3 levels of software testing:

- Unit Testing

- Integration Testing

- Tests a group of related units together

- System Testing

We have 2 approaches to testing:

- Blackbox Testing

- Testing without any understanding of the interior design of the code

- Whitebox Testing

We talk about how we can create a Control Flow Graph.

Code Coverage

Try to move to Code Coverage afterwards.

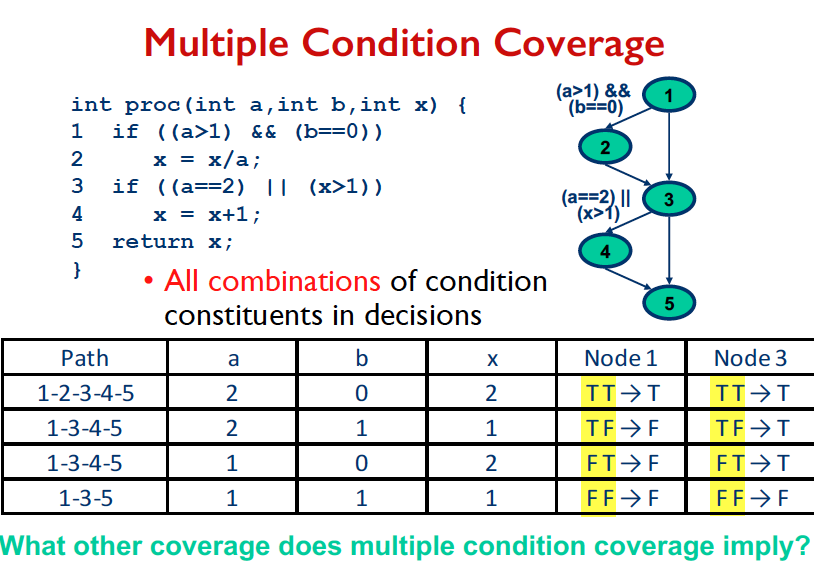

Then, we talk about different code coverage models:

- Statement Coverage

- Segment (basic block) Coverage

- Branch Coverage

- Condition Coverage

- Condition/Decision Coverage

- Modified Condition/Decision Coverage

- Path Coverage

Statement coverage = # of executed statements / total # of statements

Segment (basic block) coverage counts segments rather than statements.

branch coverage = # of executed branches / total # of branches

condition coverage = # of conditions evaluated to both true and false / total # of conditions

- seems to exclude the else condition

- however, if you have condition/decision coverage, then you need to look at the branching

They talk about the short-circuit problem.
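One way to see the short-circuit problem is to record which conditions actually get evaluated. The sketch below is an assumption-laden illustration: the `probe` helper and the decision `a || b` are made up for this example, and real coverage tools instrument code differently.

```java
import java.util.HashSet;
import java.util.Map;
import java.util.Set;
import java.util.TreeMap;

class ShortCircuitCoverage {
    // Truth values each condition was actually evaluated to, keyed by condition name.
    static final Map<String, Set<Boolean>> seen = new TreeMap<>();
    // Outcomes observed for the whole decision.
    static final Set<Boolean> outcomes = new HashSet<>();

    static {
        reset();
    }

    static void reset() {
        seen.clear();
        outcomes.clear();
        seen.put("a", new HashSet<>());
        seen.put("b", new HashSet<>());
    }

    // Hand-written probe: records the value, then passes it through unchanged.
    static boolean probe(String name, boolean value) {
        seen.get(name).add(value);
        return value;
    }

    // Decision under test. With ||, b is only evaluated when a is false.
    static boolean decide(boolean a, boolean b) {
        boolean outcome = probe("a", a) || probe("b", b);
        outcomes.add(outcome);
        return outcome;
    }

    // Number of conditions that were evaluated to both true and false.
    static int conditionsCovered() {
        int covered = 0;
        for (Set<Boolean> values : seen.values()) {
            if (values.size() == 2) {
                covered++;
            }
        }
        return covered;
    }

    public static void main(String[] args) {
        reset();
        decide(true, false);  // b = false is never evaluated: a is already true
        decide(false, true);
        System.out.println(conditionsCovered() + "/2 conditions, "
                + outcomes.size() + "/2 decision outcomes"); // 1/2 conditions, 1/2 decision outcomes
        decide(false, false); // finally evaluates b = false and takes the false branch
        System.out.println(conditionsCovered() + "/2 conditions, "
                + outcomes.size() + "/2 decision outcomes"); // 2/2 conditions, 2/2 decision outcomes
    }
}
```

On paper the first two tests give each condition both truth values, but short-circuit evaluation skips `b` in the first test, so `b` is only ever observed as true.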

Modified Condition/Decision Coverage

- Key idea: test important combinations of conditions while limiting testing costs

Often required for mission-critical systems.

Each condition should be evaluated once to “true” and once to “false”, with each change affecting the decision’s outcome.

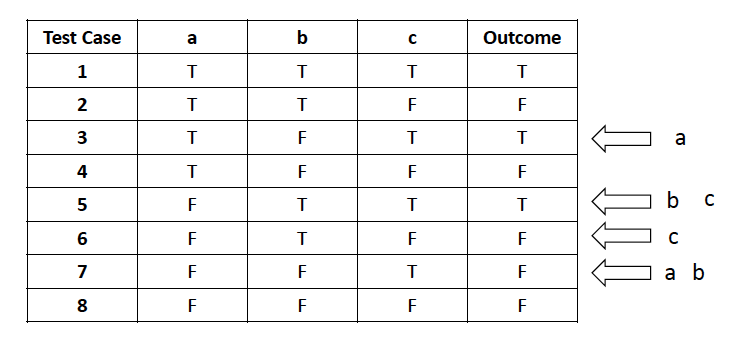

So then, we have a group of variables changing. Ex: (a || b) && c
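As a rough sketch of how to find MC/DC test pairs for (a || b) && c, the code below enumerates the truth table and, for each condition, lists the pairs of rows that differ only in that condition and flip the decision. The class and method names are hypothetical, and short-circuit evaluation is ignored here.

```java
import java.util.ArrayList;
import java.util.List;

class McdcPairs {
    // The decision from the notes: (a || b) && c.
    static boolean decision(boolean a, boolean b, boolean c) {
        return (a || b) && c;
    }

    // Evaluates the decision on a 3-bit row, where a is the highest bit.
    static boolean eval(int bits) {
        return decision((bits & 4) != 0, (bits & 2) != 0, (bits & 1) != 0);
    }

    // Renders a 3-bit row as text, e.g. 5 -> "TFT".
    static String row(int bits) {
        StringBuilder sb = new StringBuilder();
        for (int i = 2; i >= 0; i--) {
            sb.append(((bits >> i) & 1) == 1 ? 'T' : 'F');
        }
        return sb.toString();
    }

    // Pairs of rows that differ only in one condition (0 = a, 1 = b, 2 = c)
    // and produce different decision outcomes.
    static List<String> independencePairs(int condition) {
        int mask = 4 >> condition;
        List<String> pairs = new ArrayList<>();
        for (int bits = 0; bits < 8; bits++) {
            if ((bits & mask) != 0) {
                continue; // visit each pair once, starting from its "false" side
            }
            int flipped = bits | mask;
            if (eval(bits) != eval(flipped)) {
                pairs.add(row(bits) + "/" + row(flipped));
            }
        }
        return pairs;
    }

    public static void main(String[] args) {
        System.out.println("a: " + independencePairs(0)); // a: [FFT/TFT]
        System.out.println("b: " + independencePairs(1)); // b: [FFT/FTT]
        System.out.println("c: " + independencePairs(2)); // c: [FTF/FTT, TFF/TFT, TTF/TTT]
    }
}
```

Picking FFT, TFT, FTT plus either FTF or TFF covers one pair per condition with 4 tests instead of all 8 combinations.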

Path coverage is stricter than branch coverage: it requires executing every distinct path through the control flow graph, whereas branch coverage only needs each branch taken at least once, independently of the other branches.

Loop coverage

- At minimum, we have to execute it zero times, once, and twice or more times

- To get the coverage, use these values: minimum ± 1, minimum, maximum, maximum ± 1, typical

Need to understand that there are 2 uses:

- c-use (computational use)

- associated with a vertex (node) of the graph

- p-use (predicate use)

- associated with an edge of the graph

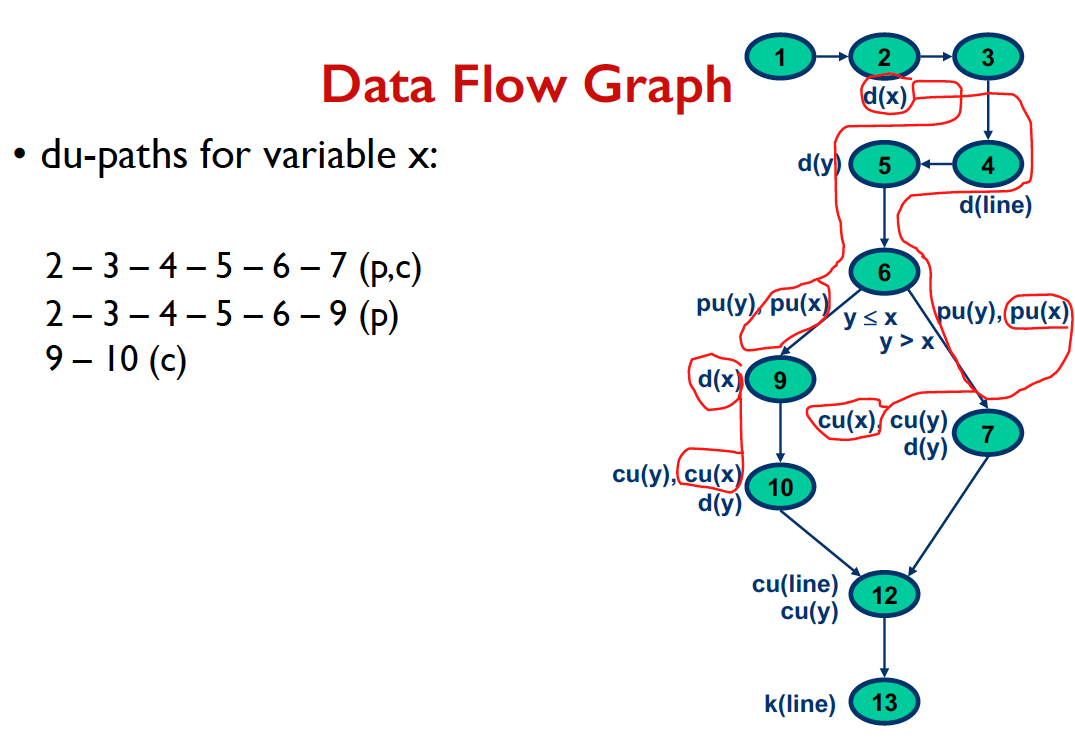

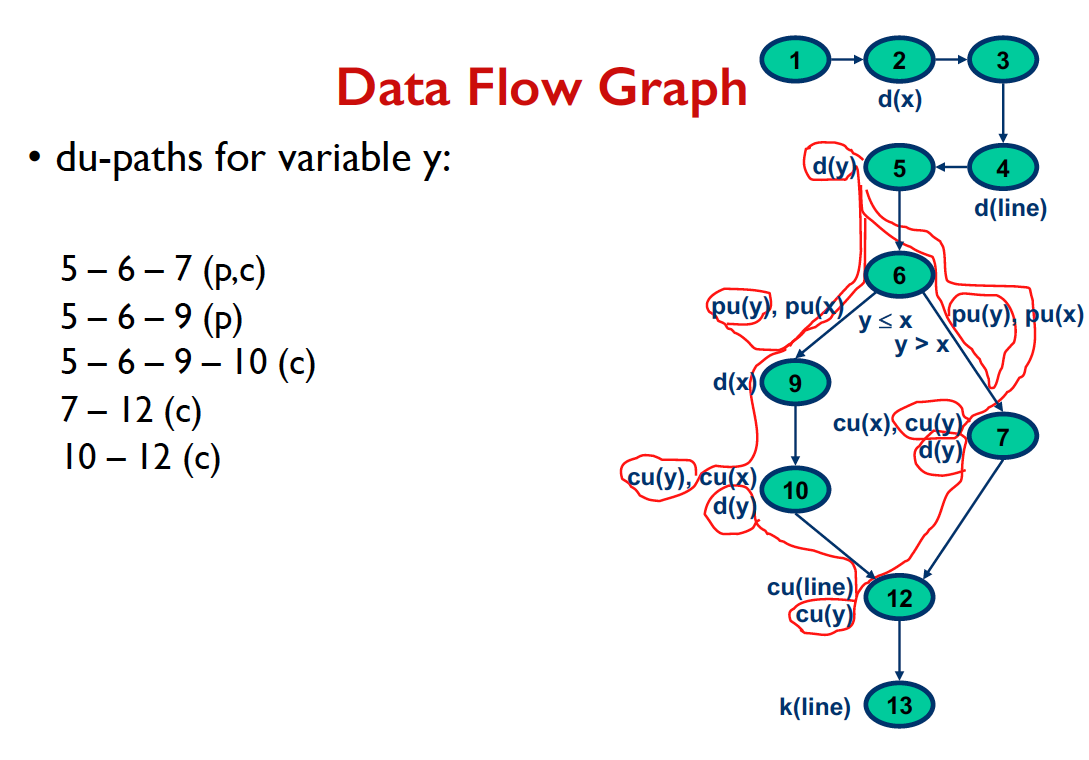

We talk about Data Flow Analysis and Data Flow Graph.

du-pair = a definition (d) of a variable paired with a use (u) of that same variable

There is the du-path. It is one of 2 cases (essentially: the path ends in a c-use or p-use, has no other definition of the variable on it except maybe at the last node, and does not repeat nodes):

- c-use of v at node nk, and P is a def-clear simple path with respect to v (i.e., at the most single loop traversal)

- p-use of v on edge nj to nk, and <n1,n2,…,nj> is a def-clear loop-free path (i.e., cycle free)

For example:
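As one illustration (a hypothetical method, not the example from the course slides), the comments below mark each definition as d(v), each computational use as c-use(v), and each predicate use as p-use(v).

```java
class DefUseSketch {
    static int sumTo(int n) {   // d(n)
        int sum = 0;            // d(sum)
        int i = 1;              // d(i)
        while (i <= n) {        // p-use(i), p-use(n) on both outgoing edges
            sum = sum + i;      // c-use(sum), c-use(i), then d(sum)
            i = i + 1;          // c-use(i), then d(i)
        }
        return sum;             // c-use(sum)
    }

    public static void main(String[] args) {
        // Skips the loop: exercises the du-pair from "int sum = 0" to "return sum".
        System.out.println(sumTo(0)); // 0
        // Enters the loop: exercises the du-pairs that go through "sum = sum + i".
        System.out.println(sumTo(3)); // 6
    }
}
```

The path from `int sum = 0` straight to `return sum` is def-clear with respect to `sum` only when the loop body is skipped, which is why both inputs are needed to cover the du-pairs of `sum`.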

Then, we get into Mutation Testing.

There are 3 criteria for a good test case:

- Reach the fault seeded during execution (reachability)

- Cause the program state to be incorrect (infection)

- Cause the program output to be incorrect (propagation)
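The three criteria can be sketched with a hand-made mutant. The `isPositive` method and its mutant are hypothetical; a real mutation tool would generate and run mutants automatically.

```java
class MutationSketch {
    // Original program.
    static boolean isPositive(int x) {
        return x > 0;
    }

    // Mutant: the relational operator > was replaced by >=.
    static boolean isPositiveMutant(int x) {
        return x >= 0;
    }

    // A test input kills the mutant if the two versions give different outputs.
    static boolean kills(int input) {
        return isPositive(input) != isPositiveMutant(input);
    }

    public static void main(String[] args) {
        // Reaches the mutated expression, but both versions compute true:
        // no infection, so the mutant survives.
        System.out.println(kills(5)); // false
        // Reaches the mutation, the comparison differs (infection), and the
        // wrong value is returned (propagation): the mutant is killed.
        System.out.println(kills(0)); // true
    }
}
```

Only the boundary input 0 satisfies all three criteria for this mutant.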

Then, we talk about more general guidelines for testing.

They talk about all the different test smells:

- Mystery Guest

- when the test uses external resources, and thus is not self-contained

- Resource Optimism

- makes assumption about existence / absence of state from external resources

- Test Run War

- test code fails when multiple programmers are running them

- General Fixture

- Eager Test

- solution is to separate into tests that only test 1 method each

- Lazy Test

- several tests check same method using same fixture

- Assertion Roulette

- Indirect Testing

- For Testers Only

- Sensitive Equality

- Test Code Duplication

Then, we move onto integration testing.

Stubs replace called modules (the modules that the unit under test calls).

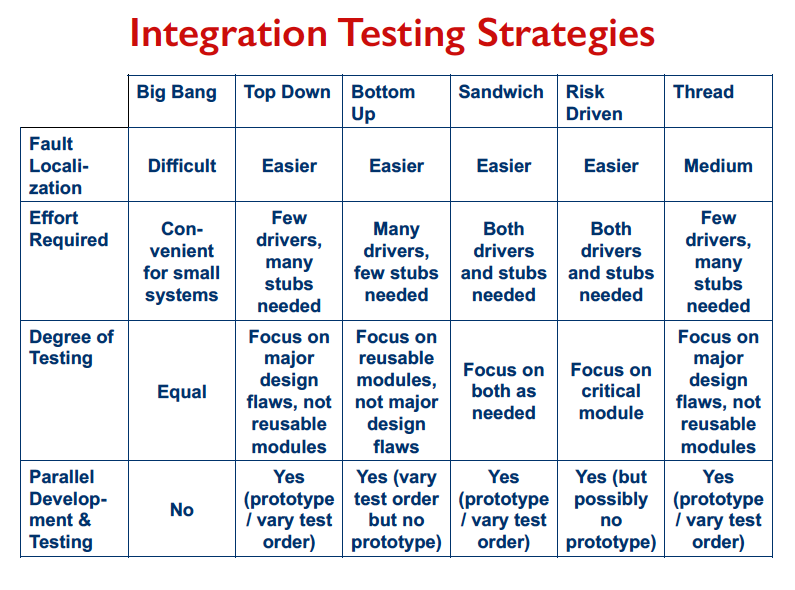

Different strategies for integration testing:

- Big Bang

- Top-Down

- Bottom-Up

- no need for stubs

- Sandwich

- Risk-Driven

- integrate based on criticality

- Function/Thread-Based

- integrate components according to threads / functions they belong to

The professor then talks about testing for OO. The concerns are valid: methods generally don’t mean much on their own, because their behavior depends on the state of the object.

There are many integration levels:

- Basic unit testing (intra-method)

- Unit Testing (intra-class)

- Cluster integration

Mocking and stubbing.

mock object = dummy implementation for an interface or a class in which you define the output of certain method calls

A Mock has the same method calls as the normal object

- However, it records how other objects interact with it

- There is a Mock instance of the object but no real object instance

stubbing = making a method call on the mock return whatever value we specify
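To show what a mock and a stub do under the hood, here is a hand-written sketch in plain Java with no mocking library. All names (`PriceLookup`, `Display`, `Register`) are hypothetical and exist only for this illustration.

```java
import java.util.ArrayList;
import java.util.List;

class HandRolledDoubles {
    interface PriceLookup {
        int priceOf(String barcode);
    }

    interface Display {
        void showLine(String text);
    }

    // Stub: returns a canned value, no matter which barcode is asked for.
    static class StubPriceLookup implements PriceLookup {
        public int priceOf(String barcode) {
            return 250;
        }
    }

    // Mock: has the same methods as a real Display, but only records the calls.
    static class MockDisplay implements Display {
        final List<String> shownLines = new ArrayList<>();

        public void showLine(String text) {
            shownLines.add(text);
        }
    }

    // Unit under test: depends on both collaborators.
    static class Register {
        private final PriceLookup prices;
        private final Display display;

        Register(PriceLookup prices, Display display) {
            this.prices = prices;
            this.display = display;
        }

        void scan(String barcode) {
            display.showLine(barcode + ": " + prices.priceOf(barcode));
        }
    }

    public static void main(String[] args) {
        MockDisplay display = new MockDisplay();
        new Register(new StubPriceLookup(), display).scan("12345");
        // "Verify" step: inspect what the mock recorded.
        System.out.println(display.shownLines); // [12345: 250]
    }
}
```

A mocking library generates the recording and canned-answer classes for you, so the test only states the expected interactions.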

Creating a mock

//Let's import Mockito statically so that the code looks clearer

import static org.mockito.Mockito.*;

//mock creation

List mockedList = mock(List.class);

// Or @Mock List mockedList;

We can then call verify on this mock object:

mockedList.add("one");

verify(mockedList).add("one");

verify(mockedList).add("two"); //will fail because we never called with this value

Examples:

when(mockedList.get(0)).thenReturn("first");

when(mockedList.get(1)).thenThrow(new RuntimeException());

There’s the argument captor:

verify(mockStorage).barcode(argCaptor.capture());

//this line verifies the barcode function is called and remembers what is the input for the bar code function

verify(mockDisplay).showLine(argCaptor.getValue());

// to ensure the same values are called in both barcode and showLine

Cluster Integration.

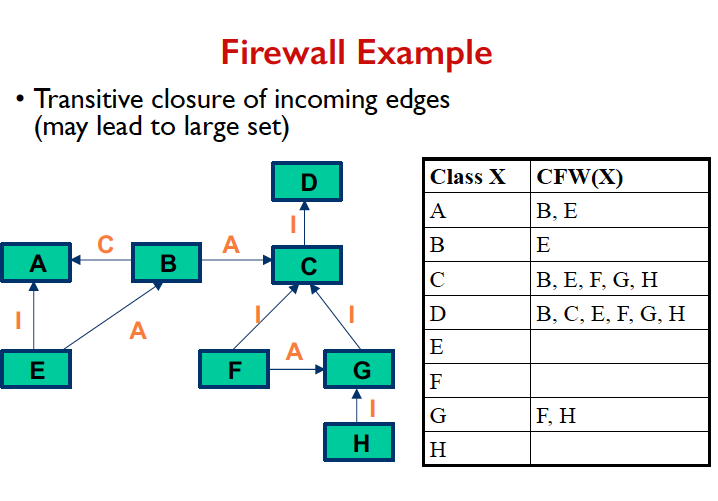

They talk about the Object Relation Diagram (ORD).

- C = composition

- A = association

- I = inheritance

CFW = class firewall

Building a firewall is pretty easy

MADUM = minimal data member usage matrix

- Different usage categories: constructors, reporters (read only), transformers, others

The MADUM is an n × m matrix, where n is the # of data members and m is the # of member methods.

Need to go through the steps of the diagram.

Next: We talk about data slices.

- The goal is to reduce the number of method sequences to test
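A minimal sketch of what a MADUM and its data slices might look like, assuming a hypothetical `Account` class with data members `balance` and `owner`. Rows are data members, columns are member methods, and each entry is the usage category of that method for that member.

```java
class MadumSketch {
    static final String[] MEMBERS = {"balance", "owner"};
    static final String[] METHODS = {"Account", "getBalance", "getOwner", "deposit", "rename"};

    // c = constructor, r = reporter, t = transformer, - = member not used by the method.
    static final char[][] MADUM = {
        {'c', 'r', '-', 't', '-'}, // balance
        {'c', '-', 'r', '-', 't'}, // owner
    };

    // Data slice for one data member: the methods that use it, with their category.
    static String sliceOf(int member) {
        StringBuilder sb = new StringBuilder();
        for (int j = 0; j < METHODS.length; j++) {
            if (MADUM[member][j] != '-') {
                if (sb.length() > 0) {
                    sb.append(", ");
                }
                sb.append(METHODS[j]).append('(').append(MADUM[member][j]).append(')');
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        for (int i = 0; i < MEMBERS.length; i++) {
            System.out.println(MEMBERS[i] + ": " + sliceOf(i));
        }
        // balance: Account(c), getBalance(r), deposit(t)
        // owner: Account(c), getOwner(r), rename(t)
    }
}
```

Because `deposit` and `rename` never touch the same data member in this example, their interleavings do not need to be tested against each other, which is how slices cut down the number of method sequences.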

I need to know how to use the MADUM table.

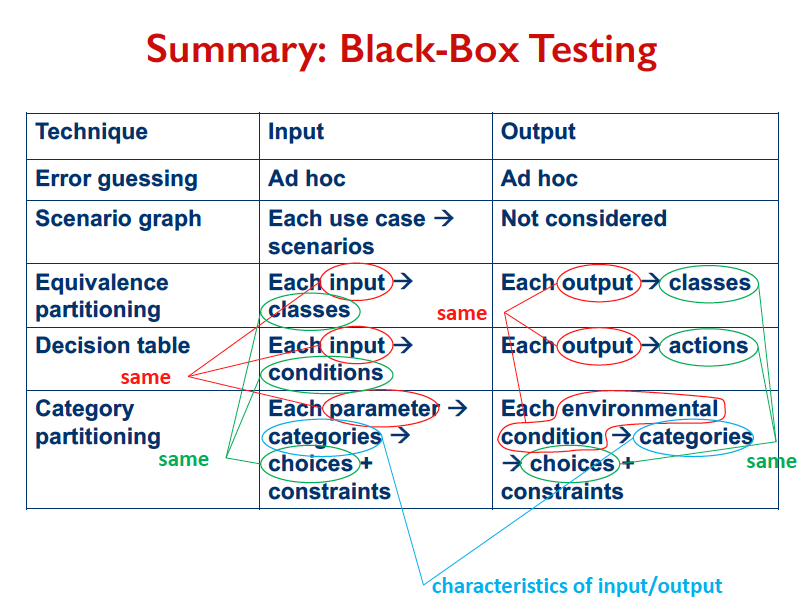

Blackbox testing

For each use case:

- Develop a scenario graph

- Determine all possible scenarios

- Analyze and rank scenarios

- Generate test cases from scenarios to meet coverage goal

- Execute test cases

Equivalence partitioning

- Scenario graph

- Equivalence partitioning

- Decision table

- Category partitioning

Fuzzing

Fuzzing: a set of automated testing techniques that try to identify abnormal program behaviors by evaluating how the tested program responds to various inputs.

2 main categories:

- Generation based

- Mutation based

Greybox fuzzing - Focus fuzzing on those inputs that lead to higher code coverage
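A minimal sketch of mutation-based fuzzing, under several assumptions: `parseAge` is a made-up toy program under test, the mutation operator just overwrites one character, and the seed input is non-empty. Real fuzzers use many mutation operators and track crashes in more detail.

```java
import java.util.Random;

class MutationFuzzerSketch {
    // Toy program under test (hypothetical): expects input like "age=42".
    static int parseAge(String input) {
        int eq = input.indexOf('=');
        return Integer.parseInt(input.substring(eq + 1));
    }

    // Mutation operator: overwrite one random position with a random printable character.
    static String mutate(String seed, Random rng) {
        char[] chars = seed.toCharArray();
        int pos = rng.nextInt(chars.length);
        chars[pos] = (char) (32 + rng.nextInt(95)); // printable ASCII 32..126
        return new String(chars);
    }

    // Runs the target on mutated inputs and counts how many throw an exception.
    static int fuzz(String seed, int trials, long rngSeed) {
        Random rng = new Random(rngSeed);
        int crashes = 0;
        for (int i = 0; i < trials; i++) {
            try {
                parseAge(mutate(seed, rng));
            } catch (RuntimeException e) {
                crashes++; // abnormal behavior triggered by a mutated input
            }
        }
        return crashes;
    }

    public static void main(String[] args) {
        System.out.println("crashing inputs: " + fuzz("age=42", 200, 7L));
    }
}
```

A generation-based fuzzer would instead build inputs from a grammar or format description, and a greybox fuzzer would additionally keep the mutated inputs that reach new code as new seeds.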

Metamorphic Testing

generally to reveal new errors.

Test-suite minimization aims to reduce the # of test cases while ensuring adequate coverage.

If a test requirement ri can be satisfied by one and only one test case, the test case is an essential test case.

- if a test case satisfies only a subset of the test requirements satisfied by another test case, it is a redundant test case.

- GE heuristic: first select all essential test cases in the test suite; for the remaining test requirements, use the additional greedy algorithm, i.e. select the test case that satisfies the maximum number of unsatisfied test requirements.

- GRE heuristic: first remove all redundant test cases in the test suite, which may make some test cases essential; then perform the GE heuristic on the reduced test suite.
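The GE heuristic described above can be sketched as follows. The coverage data in `example()` is invented for illustration, and ties in the greedy step are broken by taking the first test case encountered, which is an assumption.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.TreeSet;

class GeHeuristic {
    // Hypothetical data: test case name -> ids of the test requirements it satisfies.
    static Map<String, Set<Integer>> example() {
        Map<String, Set<Integer>> covers = new LinkedHashMap<>();
        covers.put("t1", Set.of(1, 2, 3));
        covers.put("t2", Set.of(1, 4));
        covers.put("t3", Set.of(2, 4, 5));
        covers.put("t4", Set.of(3, 5));
        covers.put("t5", Set.of(6));
        return covers;
    }

    static List<String> minimize(Map<String, Set<Integer>> covers) {
        Set<Integer> unsatisfied = new TreeSet<>();
        for (Set<Integer> reqs : covers.values()) {
            unsatisfied.addAll(reqs);
        }
        List<String> selected = new ArrayList<>();

        // Step 1: select essential test cases (the only test satisfying some requirement).
        for (Integer req : unsatisfied) {
            List<String> satisfiers = new ArrayList<>();
            for (Map.Entry<String, Set<Integer>> e : covers.entrySet()) {
                if (e.getValue().contains(req)) {
                    satisfiers.add(e.getKey());
                }
            }
            if (satisfiers.size() == 1 && !selected.contains(satisfiers.get(0))) {
                selected.add(satisfiers.get(0));
            }
        }
        for (String test : selected) {
            unsatisfied.removeAll(covers.get(test));
        }

        // Step 2: additional greedy, always taking the test that satisfies
        // the most still-unsatisfied requirements.
        while (!unsatisfied.isEmpty()) {
            String best = null;
            int bestGain = 0;
            for (Map.Entry<String, Set<Integer>> e : covers.entrySet()) {
                Set<Integer> gain = new HashSet<>(e.getValue());
                gain.retainAll(unsatisfied);
                if (gain.size() > bestGain) {
                    best = e.getKey();
                    bestGain = gain.size();
                }
            }
            selected.add(best);
            unsatisfied.removeAll(covers.get(best));
        }
        return selected;
    }

    public static void main(String[] args) {
        System.out.println(minimize(example())); // [t5, t1, t3]
    }
}
```

Here t5 is essential because only it satisfies requirement 6; the greedy step then picks t1 (3 new requirements) and t3 (the remaining 2), so t2 and t4 are dropped.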

Regression testing

Delta Debugging: A technique, not a tool

The inverse delta i fails, and that causes the entire test to fail.

Software Reviews and Inspections

Then, we get onto software reviews and inspections.

planning → overview → preparation → meeting → rework → follow up

Number of code errors (NCE) vs. weighted number of code errors (WCE)

The teacher introduces 3 types of metrics:

- Process Metric

- Product Metrics

- Project Metric

Process metrics focus on error density, timetable metrics, error removal effectiveness, and productivity.

KLOC = classic measure of the size of software, in thousands of lines of code

FP (function points) = measure of development resources required to develop a program, based on the functionality specified for the software

Refactoring

2 types:

- Floss refactoring (interleaved refactoring)

- Root canal refactoring (massive changes)

Slice based cohesion

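Tightness expresses the ratio of the number of statements which are common to all slices over the module length (e.g., 3/15):

$$\text{Tightness}(M) = \frac{S}{\text{length}(M)}$$

Overlap expresses the average ratio of the number of statements which are common to all slices to the size of each slice:

$$\text{Overlap}(M) = \frac{1}{|V_O|} \sum_{i=1}^{|V_O|} \frac{S}{|\text{slice}_i|}$$

Here $S$ is the number of statements common to all slices, $V_O$ is the set of output variables, and $\text{slice}_i$ is the slice for the $i$-th output variable.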
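A small sketch of how tightness and overlap could be computed, assuming each slice is given as the set of statement line numbers it contains (one slice per output variable). The slices in `main` are invented so that 3 statements are common to all slices in a 15-line module.

```java
import java.util.List;
import java.util.Set;
import java.util.TreeSet;

class SliceCohesion {
    // Statements that appear in every slice.
    static Set<Integer> common(List<Set<Integer>> slices) {
        Set<Integer> result = new TreeSet<>(slices.get(0));
        for (Set<Integer> slice : slices) {
            result.retainAll(slice);
        }
        return result;
    }

    // Statements common to all slices, divided by the module length.
    static double tightness(List<Set<Integer>> slices, int moduleLength) {
        return (double) common(slices).size() / moduleLength;
    }

    // Average over all slices of (common statements / size of that slice).
    static double overlap(List<Set<Integer>> slices) {
        int shared = common(slices).size();
        double sum = 0.0;
        for (Set<Integer> slice : slices) {
            sum += (double) shared / slice.size();
        }
        return sum / slices.size();
    }

    public static void main(String[] args) {
        List<Set<Integer>> slices = List.of(
                Set.of(1, 2, 3, 4, 5, 6), // slice for the first output variable
                Set.of(1, 2, 3, 7));      // slice for the second output variable
        System.out.println(tightness(slices, 15)); // 3/15
        System.out.println(overlap(slices));       // (3/6 + 3/4) / 2
    }
}
```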

Clone detection

- Type 1: code fragments are identical

- Type 2: structurally and syntactically identical

- Type 3: fragments are copies with further modifications

- Type 4: two or more code fragments perform the same computation, implemented through different syntax

- ex: 2 different sorting algorithms

Logger

Load Testing

two ways to schedule workloads:

- steady load

- stepwise load

Chaos engineering

Chaos Monkey randomly disables production instances to make sure that the system is robust enough to survive this common type of failure without any customer impact.

Latency Monkey introduces artificial delays

View

- FFF → F

- TFF → T

- FTF → T

- FFT → T

Top-down integration needs a lot of stubs. It favors testing for high-level design flaws, whereas bottom-up favors testing the functionality of reusable components more thoroughly.

There’s inspection, walkthrough and buddy check.

Midterm review

- a

- c

- b

- c

- a

- b

3/5 0/3 1/4

4.1 I misread. Use a number that is not max int

4.2. Dataflow graph has the 3 d(n), d(num) d(sum) d(i) pu(i) pu(n)

cu(num), d(number)

cu(num), cu(number), d(sum)