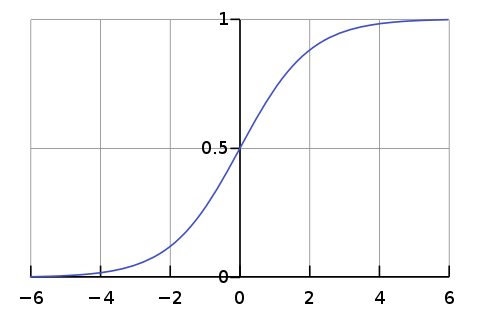

Sigmoid Function

- Historically popular since they have a nice interpretation as a saturating “firing rate” of a neuron

However, recently it is rarely used because of three drawbacks:

- Sigmoids saturate and kill gradients. When the neuron’s activation saturates at either tail of 0 or 1, the gradient at these regions is almost zero, which means almost no signal will flow through the neuron to its weights and recursively to its data.

- Sigmoid outputs are not zero-centered. This gives inefficient gradient updates. If the data coming into a neuron is always positive, then the gradient on the weights ww will during backpropagation become either all be positive, or all negative (depending on the gradient of the whole expression ). This could introduce undesirable zig-zagging dynamics in the gradient updates for the weights. This is why you want zero-mean data!

- exp() is a bit compute expensive (more minor issue)