Activation Function

Activation functions are the non-linear functions applied to neuron outputs in a neural network.
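Why the non-linearity matters: a composition of linear (affine) maps is itself affine, so stacking layers without an activation adds no expressive power. Below is a minimal scalar sketch. The weights are arbitrary, hypothetical values chosen for illustration. It shows two stacked layers collapsing into one, and a ReLU placed between them breaking that collapse.

```python
def affine(x: float, w: float, b: float) -> float:
    """A one-input 'layer' with no activation: w * x + b."""
    return w * x + b


def relu(x: float) -> float:
    return max(0.0, x)


def two_layers(x: float) -> float:
    # Layer 1 (w=2, b=1) feeds straight into layer 2 (w=-3, b=0.5): no activation.
    return affine(affine(x, 2.0, 1.0), -3.0, 0.5)


def collapsed(x: float) -> float:
    # The same map as a single layer: w = -3 * 2, b = -3 * 1 + 0.5.
    return affine(x, -6.0, -2.5)


def with_relu(x: float) -> float:
    # Same weights, but with a ReLU between the two layers.
    return affine(relu(affine(x, 2.0, 1.0)), -3.0, 0.5)


if __name__ == "__main__":
    print([two_layers(x) == collapsed(x) for x in (-1.0, 0.0, 1.0)])  # [True, True, True]
    print([with_relu(x) for x in (-1.0, 0.0, 1.0)])  # [0.5, -2.5, -8.5]
```

With the ReLU, the output changes by -3.0 from x = -1 to x = 0 but by -6.0 from x = 0 to x = 1, so the map is no longer a single straight line.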

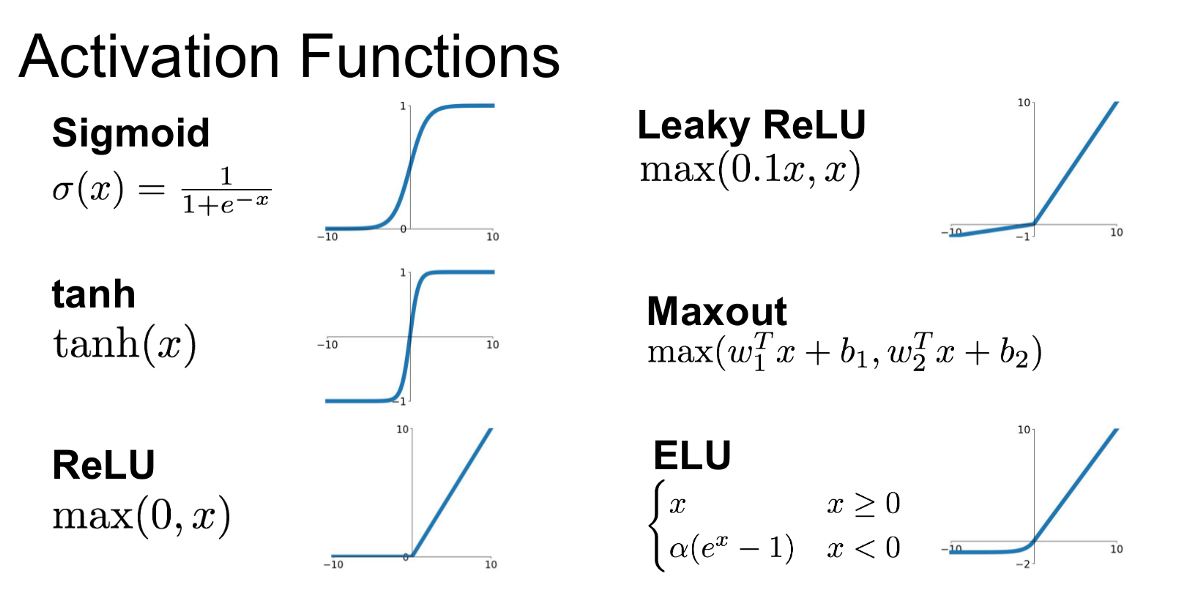

Several activation functions:

- Sigmoid Function

    - See the page for drawbacks; it is rarely used as a hidden-layer activation anymore

- Tanh

    - Compared to sigmoid, it still kills gradients when saturated; however, it is zero-centered

- Rectified Linear Unit (ReLU)

    - Does not saturate (in the positive region)

    - Very computationally efficient

    - Converges much faster than sigmoid/tanh in practice

    - Actually more biologically plausible than sigmoid

    - Drawbacks: outputs are not zero-centered, and the gradient is 0 for negative inputs, so a unit whose inputs stay negative stops updating, i.e. a “dead ReLU”

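- Leaky ReLU: f(x) = max(αx, x) with a small fixed slope such as α = 0.01, so the gradient for negative inputs is never exactly 0

- Parametric ReLU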

- Exponential Linear Units (ELU): f(x) = x for x > 0, α(exp(x) - 1) otherwise

    - All benefits of ReLU

    - Outputs closer to zero mean

    - Compared with Leaky ReLU, the negative saturation regime adds some robustness to noise

- Maxout Neuron

- Softmax Function
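The functions listed above can be sketched in plain Python for scalar inputs (maxout takes an input vector and a list of affine pieces; softmax takes a list of scores). The derivative helpers make the saturation and dead-ReLU points concrete. The helper names and the default α values (0.01 for Leaky ReLU, 1.0 for ELU) are assumptions for illustration, not any particular library's API.

```python
import math
from typing import List, Sequence, Tuple


def sigmoid(x: float) -> float:
    """Squashes x into (0, 1); split by sign so exp() never overflows."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def sigmoid_grad(x: float) -> float:
    """sigmoid'(x) = s * (1 - s): at most 0.25 (at x = 0), near 0 when |x| is large."""
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh(x: float) -> float:
    """Zero-centered; squashes x into (-1, 1)."""
    return math.tanh(x)


def tanh_grad(x: float) -> float:
    """tanh'(x) = 1 - tanh(x)^2: also near 0 when |x| is large (saturation)."""
    t = math.tanh(x)
    return 1.0 - t * t


def relu(x: float) -> float:
    """max(0, x): no saturation for x > 0."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """1 for x > 0, otherwise 0 (taking 0 at x = 0); the 0 branch is what lets units 'die'."""
    return 1.0 if x > 0 else 0.0


def leaky_relu(x: float, alpha: float = 0.01) -> float:
    """x for x > 0, alpha * x otherwise (equals max(alpha * x, x) when 0 < alpha < 1)."""
    return x if x > 0 else alpha * x


def elu(x: float, alpha: float = 1.0) -> float:
    """x for x > 0, alpha * (exp(x) - 1) otherwise; saturates towards -alpha."""
    return x if x > 0 else alpha * math.expm1(x)


def maxout(x: Sequence[float], pieces: Sequence[Tuple[Sequence[float], float]]) -> float:
    """Maximum over k affine functions (w_i . x + b_i) of the same input vector."""
    return max(sum(wj * xj for wj, xj in zip(w, x)) + b for w, b in pieces)


def softmax(logits: Sequence[float]) -> List[float]:
    """Maps scores to probabilities that sum to 1; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    print(sigmoid_grad(0.0))  # 0.25
    print(sigmoid_grad(10.0))  # about 4.54e-05: a saturated sigmoid passes almost no gradient
    print(relu_grad(-3.0))  # 0.0: no gradient flows back for negative inputs
    # With pieces (w=[1], b=0) and (w=[0], b=0), maxout reduces to ReLU.
    print(maxout([2.0], [([1.0], 0.0), ([0.0], 0.0)]))  # 2.0
    print(softmax([1000.0, 1000.0]))  # [0.5, 0.5]
```

The max-subtraction in softmax does not change the result (it cancels in the ratio) but keeps exp() from overflowing on large scores.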

In general, just use ReLU, but be careful with learning rates.

- From CS231n (?)

There is also Parametric ReLU (PReLU): the same form as Leaky ReLU, f(x) = max(αx, x), but α is a learnable parameter instead of a fixed constant.
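"Learnable" means α gets its own gradient and is updated like any weight. A minimal sketch, assuming a single α shared by all inputs (implementations commonly allow one α per channel instead) and plain gradient descent; the function names, the starting value 0.25 and the learning rate are hypothetical choices for illustration.

```python
from typing import Sequence, Tuple


def prelu(x: float, alpha: float) -> float:
    """x for x > 0, alpha * x otherwise; alpha is learned rather than fixed."""
    return x if x > 0 else alpha * x


def prelu_grads(x: float, alpha: float) -> Tuple[float, float]:
    """Return (df/dx, df/dalpha) for one input."""
    if x > 0:
        return 1.0, 0.0
    return alpha, x


def update_alpha(xs: Sequence[float], upstream: Sequence[float], alpha: float, lr: float) -> float:
    """One gradient-descent step on a single shared alpha.

    upstream[i] is dL/df(xs[i]); by the chain rule,
    dL/dalpha = sum_i upstream[i] * df/dalpha(xs[i]).
    """
    grad_alpha = sum(g * prelu_grads(x, alpha)[1] for x, g in zip(xs, upstream))
    return alpha - lr * grad_alpha


if __name__ == "__main__":
    alpha = 0.25  # hypothetical starting value
    # Only the negative input (-2.0) contributes to the alpha gradient.
    print(update_alpha([-2.0, 1.0], [1.0, 1.0], alpha, lr=0.1))  # about 0.45
```

Positive inputs contribute nothing to dL/dα, so α is shaped only by how the network uses the negative region.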

There’s also Mish: f(x) = x · tanh(softplus(x)), where softplus(x) = ln(1 + e^x).
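A minimal scalar sketch of Mish, assuming only the definition above. Softplus is rewritten in an algebraically equivalent form so that exp() cannot overflow for large positive inputs; the function names are illustrative.

```python
import math


def softplus(x: float) -> float:
    """ln(1 + e^x), rewritten as max(x, 0) + ln(1 + e^-|x|) so exp() never overflows."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def mish(x: float) -> float:
    """Mish(x) = x * tanh(softplus(x)): close to x for large x, a small negative dip for x < 0."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    for x in (-3.0, -1.0, 0.0, 1.0, 3.0):
        print(x, round(mish(x), 4))
```

Like ELU, it lets small negative values through instead of zeroing them, but it is smooth everywhere.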