TD3 (Twin Delayed DDPG)

Heard about this algorithm from Armand’s YouTube video teaching his car to do donuts. It’s actually not that hard, since drifting is just trying to maximize the torque.

Why is TD3 better than DDPG?

- The learned Q-function begins to dramatically overestimate Q-values, so policy exploits these errors in the Q-function.

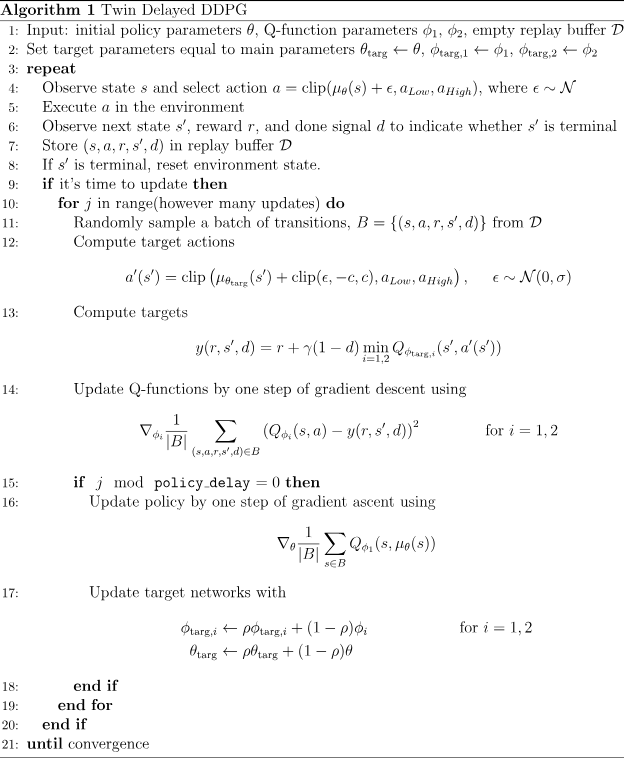

Trick One: Clipped Double-Q Learning. TD3 learns two Q-functions instead of one (hence “twin”), and uses the smaller of the two Q-values to form the targets in the Bellman error loss functions.

Trick Two: “Delayed” Policy Updates. TD3 updates the policy (and target networks) less frequently than the Q-function. The paper recommends one policy update for every two Q-function updates.

Trick Three: Target Policy Smoothing. TD3 adds noise to the target action, to make it harder for the policy to exploit Q-function errors by smoothing out Q along changes in action.

I don't get how the 2 Q's will be different?

The 2 Qs get initialized with different weights.

Next: clipped double-Q learning. Both Q-functions use a single target, calculated using whichever of the two Q-functions gives a smaller target value:

and then both are learned by regressing to this target: