Deep Deterministic Policy Gradient (DDPG)

More sample efficient. Though mostly replaced by TD3 since its just objectively better. DDPG can be thought of as being DQN for continuous action spaces.

Why is it called DDPG?

Because the policy that is learned is deterministic.

Resources

- https://spinningup.openai.com/en/latest/algorithms/ddpg.html

- Implementation here

- Lecture 5: DDPG and SAC from Deep RL Foundations, slides here

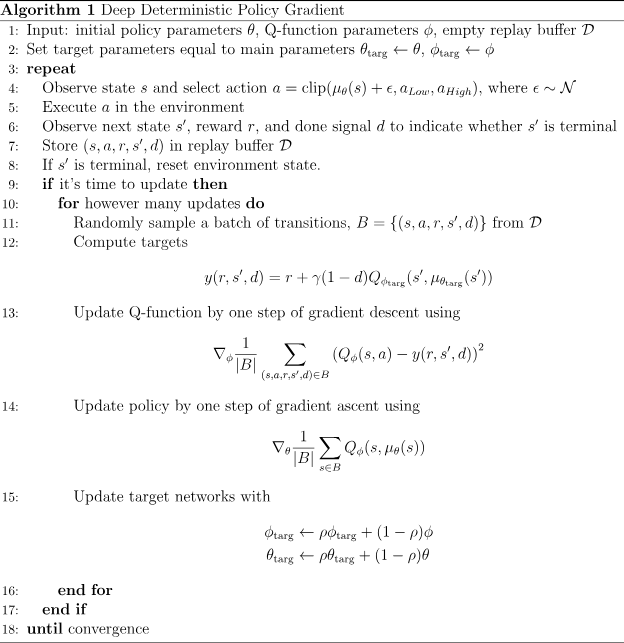

Algorithms like DDPG and Q-Learning are off-policy, so they are able to reuse old data very efficiently. They gain this benefit by exploiting Bellman’s equations for optimality, which a Q-function can be trained to satisfy using any environment interaction data.

DDPG interleaves learning an approximator for with learning an approximator to .

- Notice that we take the max, so it looks like a Bellman Optimality Backup

In practice however, we deal with continuous action spaces, taking the max is not so obvious. So we learn a policy network to predict how to maximize Q:

For updating the policy , we just do gradient ascent to maximize :

Notes on Implementation

You will notice that the network will learn an MLP to predict both mean and variance.

Related

- Not to be confused with DDPM