Transfer Learning

Transfer learning (TL) is a research problem in Machine Learning that focuses on storing knowledge gained while solving one problem and applying it to a different but related problem.

https://www.v7labs.com/blog/transfer-learning-guide

In the context of computer vision, this is straightforward in practice: reuse someone else’s pre-trained weights for a given model architecture. There are three ways to proceed, depending on how much data you have:

- Freeze all the layers that carry pre-trained weights and train only the final softmax (classification) layer on your own data (when you don’t have a lot of data). This is what we do; see example here.

- Freeze some of the layers, typically the earlier ones, and train the rest (when you have some data).

- Don’t freeze any layers; simply use the pre-trained weights as initialization (when you have lots of data).

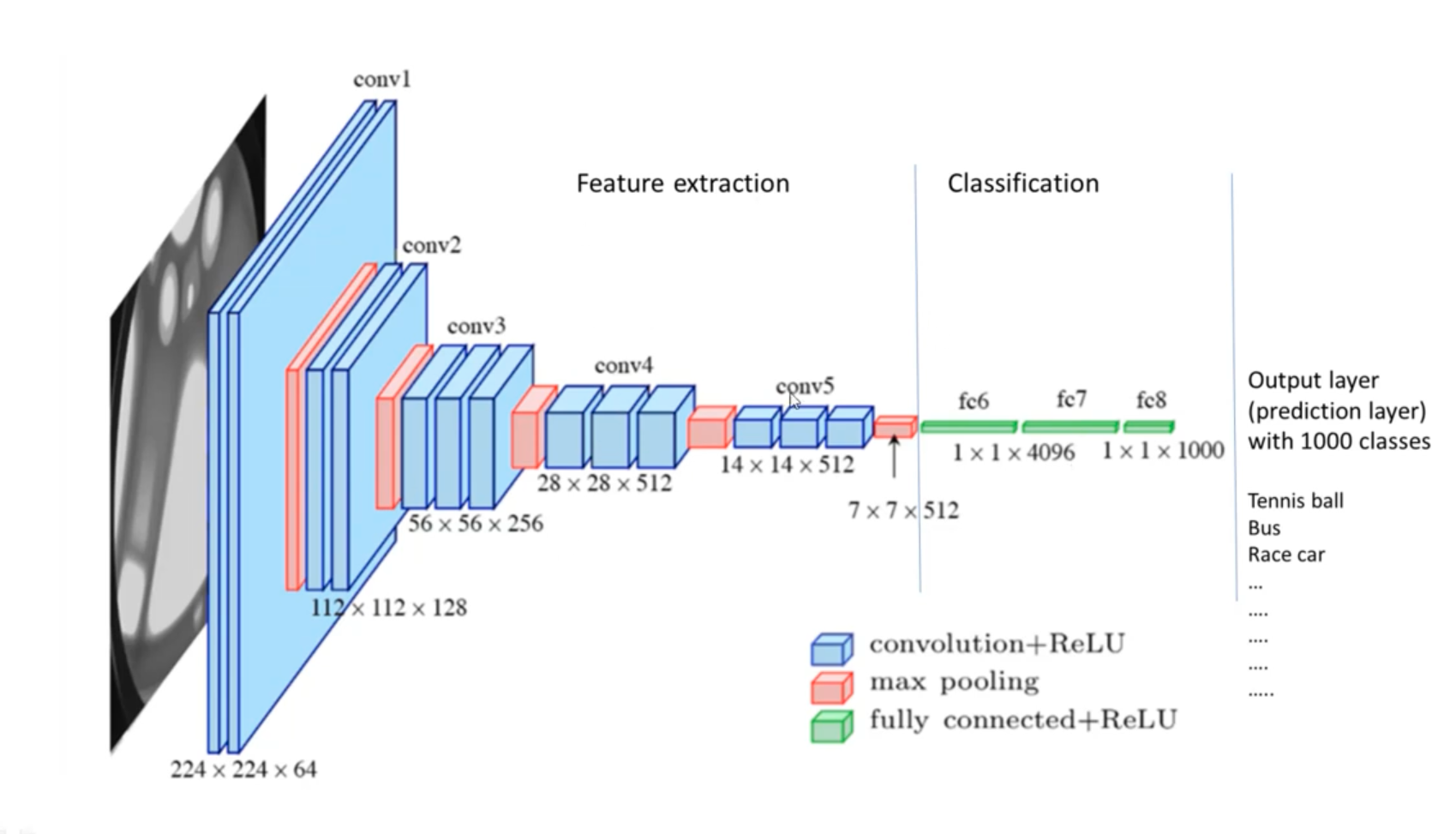
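The three strategies differ only in which layers stay frozen. The sketch below makes that explicit without depending on any deep-learning framework. The `Layer` class, the five layer names, and the strategy labels `head_only`, `partial`, and `none` are all hypothetical, chosen just for illustration.

```python
from dataclasses import dataclass


@dataclass
class Layer:
    """Stand-in for a framework layer; it only tracks whether it is trainable."""
    name: str
    trainable: bool = True


def make_model():
    # Hypothetical five-layer network: feature layers followed by a classifier.
    names = ["conv1", "conv2", "conv3", "fc", "softmax"]
    return [Layer(n) for n in names]


def apply_freezing(layers, strategy, n_frozen=0):
    """Freeze layers in place according to one of the three strategies."""
    if strategy == "head_only":    # little data: train only the last layer
        cutoff = len(layers) - 1
    elif strategy == "partial":    # some data: freeze the first n_frozen layers
        cutoff = n_frozen
    elif strategy == "none":       # lots of data: weights are only an initialization
        cutoff = 0
    else:
        raise ValueError(f"unknown strategy: {strategy!r}")
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff
    return layers


def trainable_names(layers):
    return [layer.name for layer in layers if layer.trainable]


for strategy, n in [("head_only", 0), ("partial", 2), ("none", 0)]:
    model = apply_freezing(make_model(), strategy, n_frozen=n)
    print(strategy, "->", trainable_names(model))
```

In a real framework the same idea is expressed through a per-parameter or per-layer flag, for example `requires_grad` in PyTorch or `trainable` in Keras.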

Example: use a feature extractor that is already trained (such as VGG-16) and train only your own classification network on top of it.

Transfer learning can be broken down into six steps:

- Obtain pre-trained model

- Create a base model

- Freeze layers

- Add new trainable layers

- Train the new layers

- Fine-tune your model
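The six steps above can be traced end to end on a toy problem. This is a minimal sketch, not a realistic pipeline: the "pre-trained" weights `PRETRAINED_W`, the four-point dataset `DATA`, the learning rates, and the epoch counts are all made up. The base model is a single linear layer with ReLU and the new head is a logistic-regression layer. Using a smaller learning rate for the fine-tuning step is a common choice, assumed here rather than taken from the text.

```python
import math

# Step 1: obtain a pre-trained model. These weights are invented for the
# example; in practice they come from a model trained on a large dataset.
PRETRAINED_W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Small target dataset of (input, label) pairs, also invented.
DATA = [([1.0, 0.2], 1), ([0.9, 0.1], 1), ([0.1, 1.0], 0), ([0.2, 0.8], 0)]


def features(W, x):
    # Base model: one linear layer followed by ReLU.
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in W]


def predict(W, head, x):
    # New head: logistic regression on top of the base features.
    z = sum(w * f for w, f in zip(head["w"], features(W, x))) + head["b"]
    return 1.0 / (1.0 + math.exp(-z))


def mean_loss(W, head, data):
    # Mean binary cross-entropy, with clamping to avoid log(0).
    total = 0.0
    for x, y in data:
        p = min(max(predict(W, head, x), 1e-12), 1.0 - 1e-12)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(data)


def train(W, head, data, lr, epochs, train_base):
    """Full-batch gradient descent; the base is updated only if train_base."""
    n = len(data)
    for _ in range(epochs):
        g_w = [0.0] * len(head["w"])
        g_b = 0.0
        g_W = [[0.0] * len(row) for row in W]
        for x, y in data:
            f = features(W, x)
            err = predict(W, head, x) - y        # d(loss)/d(logit)
            g_b += err
            for j, fj in enumerate(f):
                g_w[j] += err * fj
                if train_base and fj > 0.0:      # ReLU passes gradient only when active
                    for i, xi in enumerate(x):
                        g_W[j][i] += err * head["w"][j] * xi
        head["b"] -= lr * g_b / n
        for j in range(len(g_w)):
            head["w"][j] -= lr * g_w[j] / n
        if train_base:
            for j, row in enumerate(W):
                for i in range(len(row)):
                    row[i] -= lr * g_W[j][i] / n


def run():
    W = [row[:] for row in PRETRAINED_W]         # Step 2: create the base model
    head = {"w": [0.0] * len(W), "b": 0.0}       # Step 4: add a new trainable layer
    loss_start = mean_loss(W, head, DATA)
    # Steps 3 and 5: keep the base frozen and train only the new head.
    train(W, head, DATA, lr=0.5, epochs=200, train_base=False)
    base_after_head = [row[:] for row in W]
    loss_head = mean_loss(W, head, DATA)
    # Step 6: fine-tune by unfreezing the base with a much smaller learning rate.
    train(W, head, DATA, lr=0.01, epochs=50, train_base=True)
    return {
        "loss_start": loss_start,
        "loss_head": loss_head,
        "loss_finetuned": mean_loss(W, head, DATA),
        "base_after_head": base_after_head,
        "base_final": W,
    }


result = run()
print({k: v for k, v in result.items() if k.startswith("loss")})
```

While the base is frozen, its weights stay identical to `PRETRAINED_W`. They only start to move during the fine-tuning pass, which is why that pass uses a small learning rate: large updates could overwrite the pre-trained features.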