A Reduction of Imitation Learning and Structured Prediction to No-Regret Online Learning

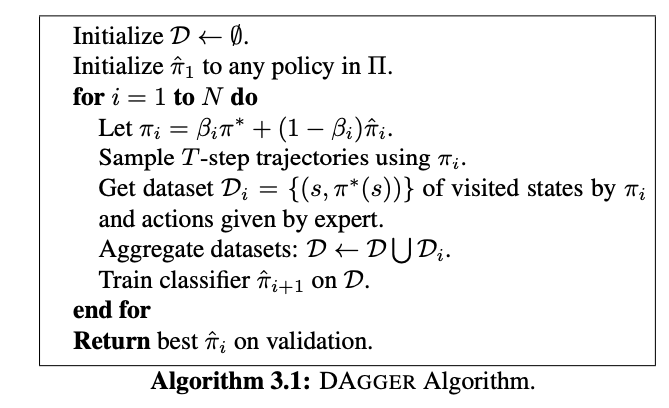

DAgger = dataset aggregation

First heard about this paper when reading up on ALOHA.

DAgger "Helps reduce distribution shift for the learner", but how?

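- They do a sort of Polyak averaging: each iteration retrains the policy on the aggregate of all data collected so far (Follow-the-Leader, in the paper's online-learning framing), not just on the latest rollouts.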

DAgger improves on behavioral cloning by training on a dataset that better resembles the observations the trained policy is likely to encounter, but it requires querying the expert online.
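To make the loop concrete for myself, here is a minimal sketch of the aggregate-and-retrain idea on a toy problem. Everything in it is an assumption for illustration and not from the paper: the 1-D dynamics, the proportional-controller `expert_action`, and the least-squares `fit_linear_policy`. It also skips the expert/learner mixing (the β schedule) and simply rolls out the learner on every iteration after the first.

```python
import numpy as np

def expert_action(state):
    # Hypothetical expert: a proportional controller pushing the state toward 0.
    return -0.5 * state

def rollout(policy, rng, horizon=20):
    # Toy 1-D dynamics (assumed for illustration): x' = x + a + noise.
    state = rng.normal()
    states = []
    for _ in range(horizon):
        states.append(state)
        state = state + policy(state) + 0.1 * rng.normal()
    return np.array(states)

def fit_linear_policy(states, actions):
    # Least-squares fit of a = w * x; stands in for any supervised learner.
    w = float(states @ actions / (states @ states))
    return (lambda s: w * s), w

def dagger(n_iters=5, seed=0):
    rng = np.random.default_rng(seed)
    # Iteration 0 is plain behavioral cloning: states come from expert rollouts.
    data_states = rollout(expert_action, rng)
    data_actions = expert_action(data_states)
    policy, w = fit_linear_policy(data_states, data_actions)
    for _ in range(n_iters):
        visited = rollout(policy, rng)       # states the learner itself reaches
        labels = expert_action(visited)      # query the expert on those states
        # Aggregate: keep all earlier data, then retrain on the union.
        data_states = np.concatenate([data_states, visited])
        data_actions = np.concatenate([data_actions, labels])
        policy, w = fit_linear_policy(data_states, data_actions)
    return w, len(data_states)

if __name__ == "__main__":
    w, n = dagger()
    print(f"learned gain {w:.3f} from {n} labeled states")
```

The part that matters is inside the `for` loop: the states come from the learner's own rollouts, but the labels come from the expert, and the dataset only ever grows. In this toy the expert is exactly linear, so the fit is trivially right; the structure of the loop is the point, not the result.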

Krish M mentioned this, and I had been confusing it with IMPALA.