BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension

I confused this with BERT lol

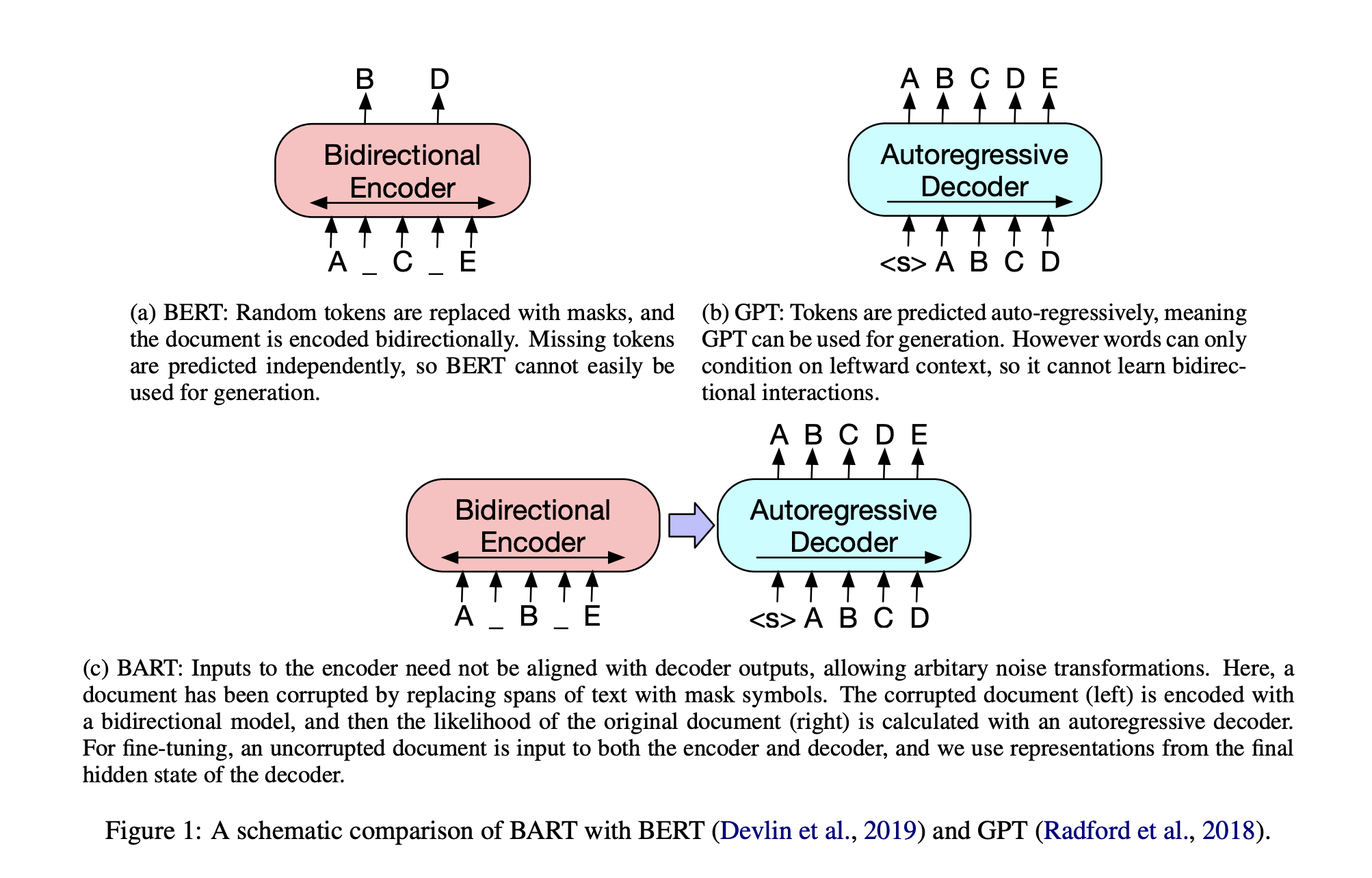

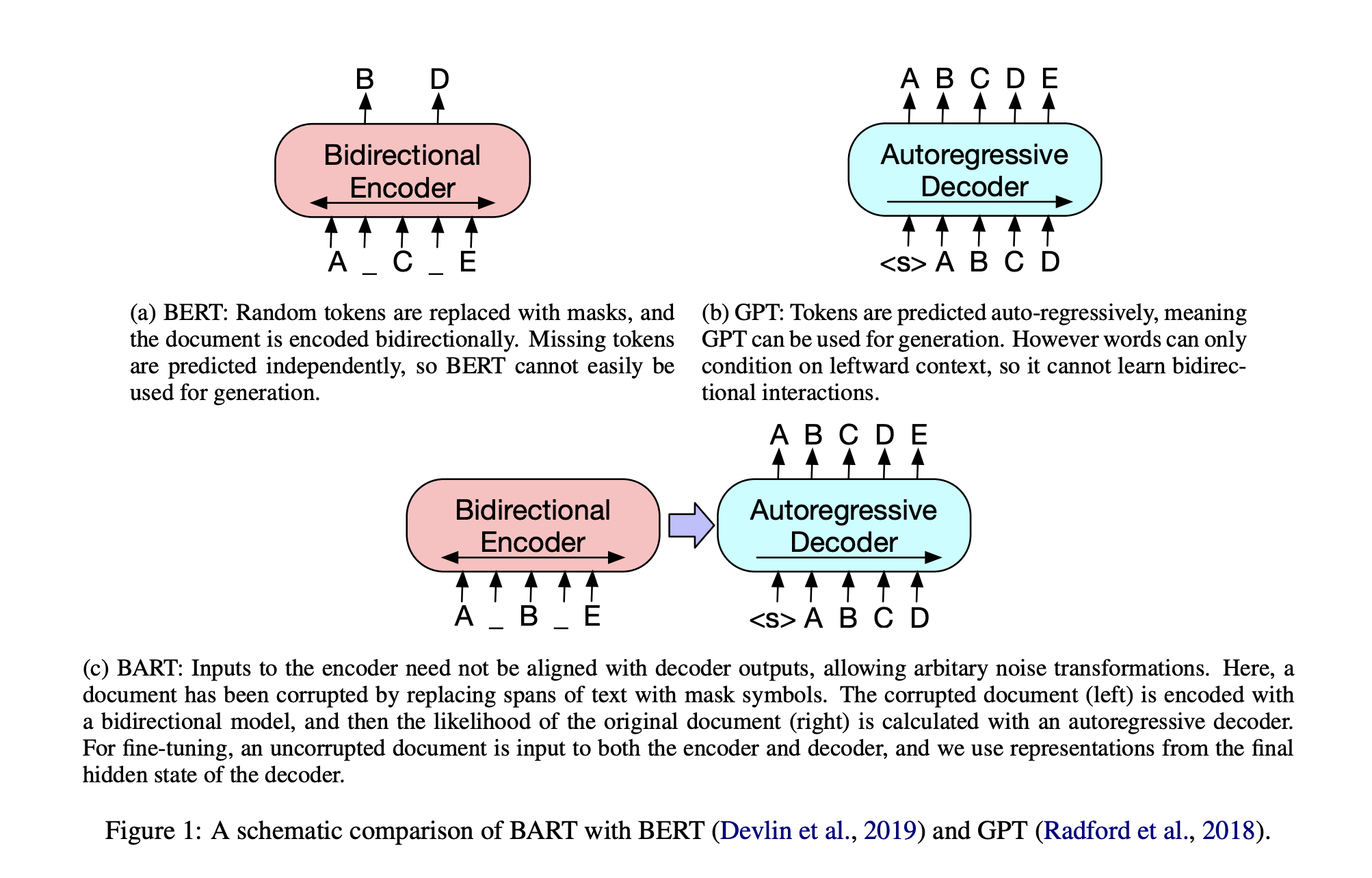

The encoder is bidirectional, similar to BERT; the decoder is autoregressive (left-to-right), similar to GPT.
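A quick sketch of what "BERT-like encoder, GPT-like decoder" means in terms of attention masks. This is a toy illustration, not BART's actual code. The function names are made up for these notes, and `True` means "this position may attend to that one". The cross-attention part reflects the standard Transformer seq2seq setup, where each decoder layer attends over the encoder's final hidden states.

```python
from typing import List

Mask = List[List[bool]]  # mask[i][j] is True if query position i may attend to key position j


def encoder_self_attention_mask(n: int) -> Mask:
    """BERT-style bidirectional mask: every token sees every other token."""
    return [[True] * n for _ in range(n)]


def decoder_self_attention_mask(n: int) -> Mask:
    """GPT-style causal mask: token i sees only tokens 0..i (no peeking ahead)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def cross_attention_mask(n_dec: int, n_enc: int) -> Mask:
    """Each decoder position may attend to all encoder output positions."""
    return [[True] * n_enc for _ in range(n_dec)]


def show(mask: Mask) -> None:
    """Print a mask as a grid: '1' = allowed, '.' = blocked."""
    for row in mask:
        print("".join("1" if allowed else "." for allowed in row))


if __name__ == "__main__":
    print("encoder self-attention (bidirectional):")
    show(encoder_self_attention_mask(4))
    print("decoder self-attention (causal):")
    show(decoder_self_attention_mask(4))
```

The decoder grid comes out lower-triangular (`1...`, `11..`, `111.`, `1111`). That triangle is the only structural difference from the encoder's all-ones grid. The causal mask is what lets the decoder generate the reconstructed text one token at a time.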