Do As I Can, Not As I Say: Grounding Language in Robotic Affordances

From Jacob https://medium.com/ml-purdue/recent-advancements-in-language-capable-robotics-88fe31145451

Project page:

-

What are the authors trying to do? Articulate their objectives.

- Find a way to leverage LLM, which have really good semantic understanding of language and are quite general-pupose, and use them to control robots in long-horizon tasks, and policies that are only trained on specific skills (i.e. picking, placing, navigating).

-

How was it done prior to their work, and what were the limits of current practice?

- People in robot learning were not really using LLMs yet. They trained on specific skills and were not able to complete long-horizon tasks.

-

What is new in their approach, and why do they think it will be successful?

- In addition to plugging the LLM to the policy direction, you also want an intermediate step to ground the actions generated by the LLM before saying yea this is the action we actually want to do.

-

*What are the mid-term and final “exams” to check for success? (i.e., How is the method evaluated?)

- They run it on a physical robot and check success rate.

-

What are limitations that the author mentions, and that the author fails to mention?

- Hand-engineering the affordances. The underlying skills is still the fundamental limitation. The high-level planning is actually quite easy.

Reading this paper, they mention some literature:

- BC-Z for imitation learning

Questions I have reading this paper:

- How is the policy actually executed?

- Each skill is trained via BC-Z or

- Can’t you achieve the same with prompt engineering? Just tell the LLM what the robot can and cannot do, you don’t necessarily need to train this value function

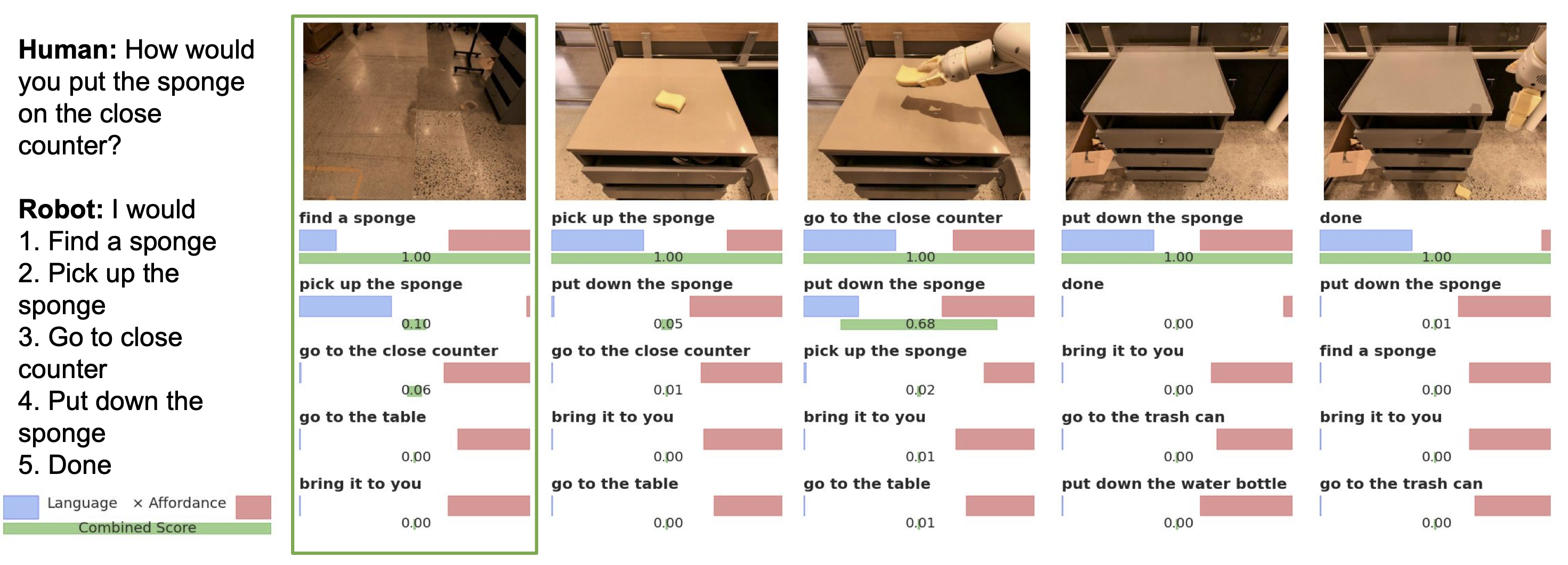

Say → LLM provides a task-grounding to determine useful actions for a high-level goal Can → learned affordance function provide a world-grounding to determine most feasible

- This is trained with RL, it’s just a value function? It’s language conditioned

Human labelers give ground truth. Value functions associated with these skills provide the grounding necessary to connect this knowledge to a particular physical environment

1. LLM generation

- How do they score the LLM? Taking the one with the highest probability of being generated by the LLM (i.e. the raw logit values).

The idea here is that we can fix the set of outputs by the LLM to discrete “skills” that we know the robot can do, as opposed to let the LLM run wild and generate any token it can generate

- If you did that, you need a more sophisticated way to determine how the token maps to a particular skill

2. Grounding

It’s really interesting the pick, go to, place, and terminate affordance functions. These are actually basically manual features, which actually looks like a hack.

Value Function

They only use the value function for the pick kinds of task. The other two are hand-engineered affordances:

I was confused like how much of grounding is actually necessary, because the LLM should already be restricted to generate actions that the robot can already do.

- It seems like its mostly like “find” before “pick up”

3. Policy

They actually have 3 kinds of policies, for different kinds of skills:

- For pick manipulation skills: we use a single multi-task, language-conditioned policy

- For place manipulation skills: we use a scripted policy with an affordance based on the gripper state

- For navigation (“go to”) policies we use a planning-based approach which is aware of the locations

[!PDF|255, 208, 0] Do As I Can Not As I Say Grounding Language in Robotic Affordances, p.24

We note here briefly a few lessons learned in prompt engineering and structuring the final prompt. Providing explicit numbers between steps (e.g., 1., 2., instead of combining skills with “and then” or other phrases) improved performance, as did breaking each step into a separate line (e.g. adding a “\n” between steps