Momentum Contrast for Unsupervised Visual Representation Learning (MoCo)

A seminal paper in self-supervised visual representation learning.

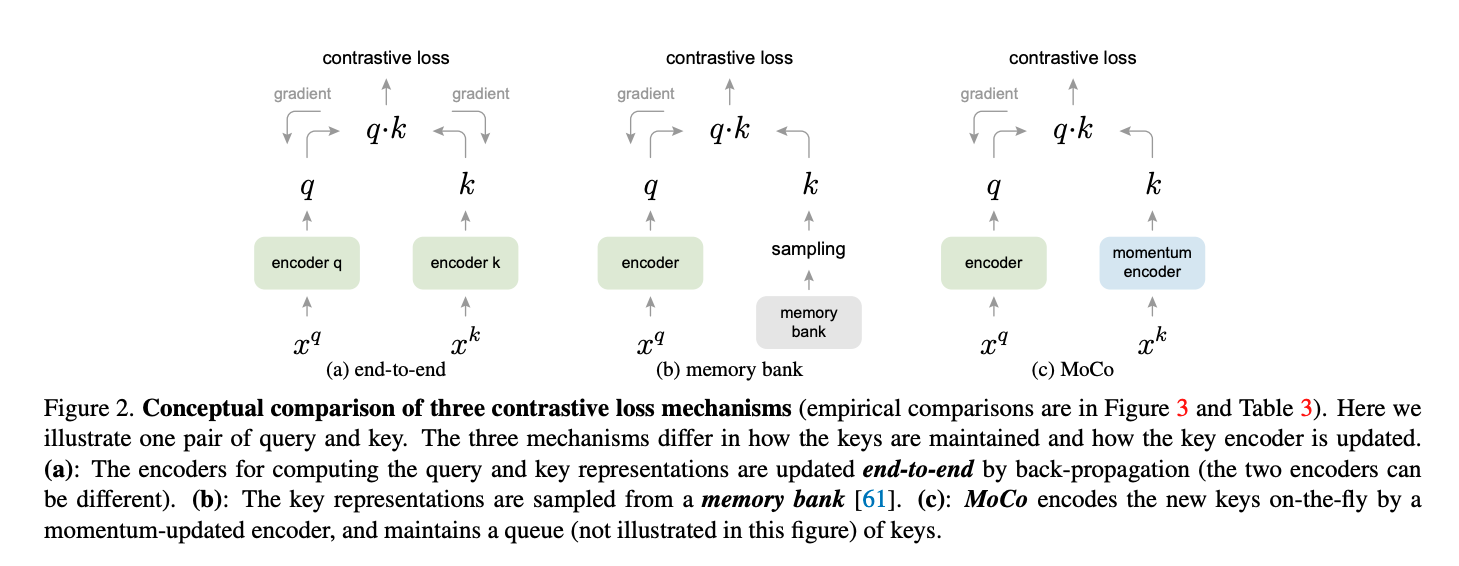

MoCo trains a feature encoder that maps images (or different augmented views of images) into a representation space in which:

- Two views (augmentations) of the same image are pulled close together (“positive pairs”)

- Views of different images are pushed apart (“negative pairs”)
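To make the pull/push idea concrete, here is a minimal sketch of an InfoNCE-style contrastive loss in plain Python. It is an illustration under stated assumptions, not the paper's implementation: the function names `cosine_similarity` and `info_nce_loss`, the toy 2-D embeddings, and the temperature value are all chosen here for the example. The loss is small when the query is most similar to its positive key and grows when a negative key is closer.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce_loss(query, positive_key, negative_keys, temperature=0.07):
    """Cross-entropy over similarities, with the positive key as the target.

    The temperature default is an assumption made for this sketch.
    """
    # Index 0 holds the positive pair; the rest are negative pairs.
    logits = [cosine_similarity(query, positive_key) / temperature]
    logits += [cosine_similarity(query, k) / temperature for k in negative_keys]

    # Numerically stable log-sum-exp over all logits.
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(
        sum(math.exp(l - max_logit) for l in logits)
    )

    # -log( exp(pos) / sum(exp(all)) )
    return log_sum_exp - logits[0]


# Toy embeddings (hypothetical values): two views of the same image point in
# nearly the same direction, views of other images point elsewhere.
query = [1.0, 0.0]
same_image_view = [0.9, 0.1]
other_image_views = [[0.0, 1.0], [-1.0, 0.2]]

aligned_loss = info_nce_loss(query, same_image_view, other_image_views)
misaligned_loss = info_nce_loss(query, [0.0, 1.0], [[0.9, 0.1], [-1.0, 0.2]])
print(aligned_loss < misaligned_loss)
```

Minimizing this loss over many images pushes the encoder toward the representation space described above. Note that the loss only becomes a hard task when there are many negatives to distinguish the positive from.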

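This is the basic idea behind contrastive learning. In practice, however, it raises some challenges, chiefly how to supply a large number of negatives while keeping their encoded representations consistent.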

CLIP doesn’t seem to suffer from these problems, or does it?