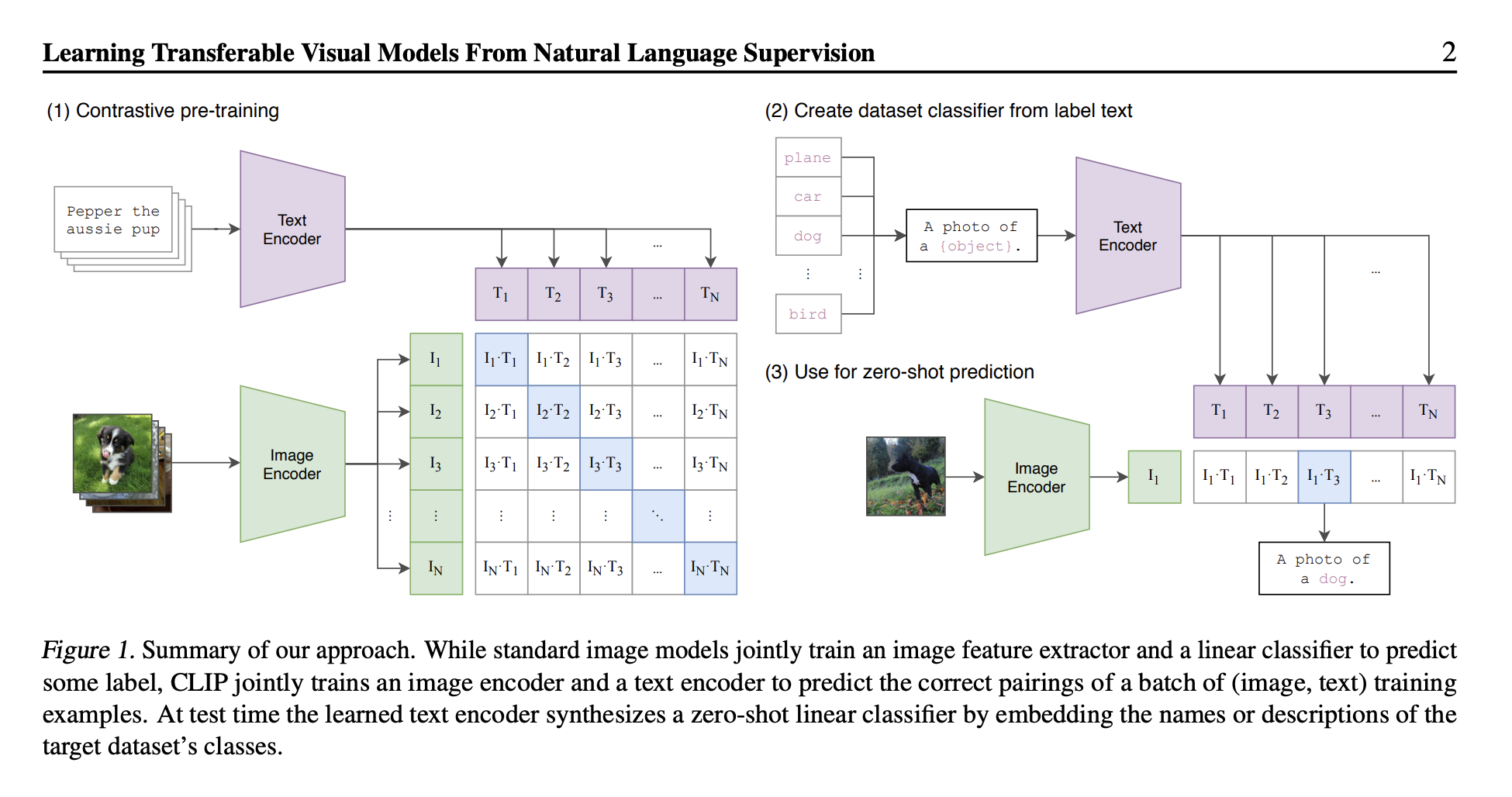

Learning Transferable Visual Models From Natural Language Supervision (CLIP)

CLIP was trained on roughly 400 million image-caption pairs collected from the internet.

Implementation details:

- Image encoder: a ResNet (e.g. ResNet-50) or a Vision Transformer (ViT)

- Text encoder: a Transformer

- Training objective: a symmetric contrastive loss over the image-caption pairs in each batch

Pseudocode

```python
# image_encoder - ResNet or Vision Transformer
# text_encoder  - CBOW or Text Transformer
# I[n, h, w, c] - minibatch of aligned images
# T[n, l]       - minibatch of aligned texts
# W_i[d_i, d_e] - learned projection of image to embedding
# W_t[d_t, d_e] - learned projection of text to embedding
# t             - learned temperature parameter

# extract feature representations of each modality
I_f = image_encoder(I) # [n, d_i]
T_f = text_encoder(T)  # [n, d_t]

# joint multimodal embedding [n, d_e]
I_e = l2_normalize(np.dot(I_f, W_i), axis=1)
T_e = l2_normalize(np.dot(T_f, W_t), axis=1)

# scaled pairwise cosine similarities [n, n]
logits = np.dot(I_e, T_e.T) * np.exp(t)

# symmetric loss function
labels = np.arange(n)
loss_i = cross_entropy_loss(logits, labels, axis=0)
loss_t = cross_entropy_loss(logits, labels, axis=1)
loss = (loss_i + loss_t) / 2
```

Figure 3. Numpy-like pseudocode for the core of an implementation of CLIP.

“At test time the learned text encoder synthesizes a zero-shot linear classifier by embedding the names or descriptions of the target dataset’s classes.”
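The Figure 3 pseudocode leaves `image_encoder`, `text_encoder`, `l2_normalize` and `cross_entropy_loss` undefined. Below is a minimal, dependency-free Python sketch of just the loss, assuming the encoder outputs `I_f` and `T_f` are already available as lists of feature vectors. The helper names (`project`, `diagonal_cross_entropy`, `clip_loss`) are hypothetical, and plain lists stand in for NumPy arrays.

```python
import math


def l2_normalize(rows):
    """Scale each row vector to unit L2 length."""
    normalized = []
    for row in rows:
        norm = math.sqrt(sum(v * v for v in row))
        normalized.append([v / norm for v in row])
    return normalized


def project(features, weights):
    """Matrix product: features [n, d_in] @ weights [d_in, d_out] -> [n, d_out]."""
    columns = list(zip(*weights))
    return [[sum(f * w for f, w in zip(row, col)) for col in columns] for row in features]


def diagonal_cross_entropy(logits):
    """Mean softmax cross-entropy over rows, where row i's correct column is i."""
    total = 0.0
    for i, row in enumerate(logits):
        m = max(row)  # subtract the max before exp() for numerical stability
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def clip_loss(I_f, T_f, W_i, W_t, t):
    """Symmetric contrastive loss for n aligned image/text feature pairs (hypothetical helper)."""
    I_e = l2_normalize(project(I_f, W_i))  # [n, d_e]
    T_e = l2_normalize(project(T_f, W_t))  # [n, d_e]
    scale = math.exp(t)  # temperature is learned in log space, as in np.exp(t)
    # logits[j][k] = scaled cosine similarity of image j and text k, shape [n, n]
    logits = [[scale * sum(a * b for a, b in zip(img, txt)) for txt in T_e] for img in I_e]
    logits_t = [list(col) for col in zip(*logits)]  # transpose: rows are now texts
    loss_images = diagonal_cross_entropy(logits)  # each image must pick its own caption
    loss_texts = diagonal_cross_entropy(logits_t)  # each caption must pick its own image
    return (loss_images + loss_texts) / 2
```

Because the matching caption for image i sits in column i, both cross-entropy terms treat the diagonal of the similarity matrix as the correct answers, which is what `labels = np.arange(n)` encodes in the figure.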

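- So for zero-shot classification: embed each class name (or a description of it) with the text encoder, embed the image with the image encoder, and predict the class whose text embedding has the highest cosine similarity with the image embedding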
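A minimal sketch of that zero-shot step, assuming the class-name embeddings have already been produced by the text encoder (for example from prompts such as "a photo of a dog"). `zero_shot_predict` and the toy 3-dimensional vectors are hypothetical stand-ins for real encoder outputs.

```python
import math


def normalize(v):
    """Scale a vector to unit L2 length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine(a, b):
    """Cosine similarity of two vectors."""
    return sum(x * y for x, y in zip(normalize(a), normalize(b)))


def zero_shot_predict(image_embedding, class_text_embeddings, class_names):
    """Return the class whose text embedding is most similar to the image, plus all scores."""
    scores = [cosine(image_embedding, t) for t in class_text_embeddings]
    best = max(range(len(scores)), key=scores.__getitem__)
    return class_names[best], scores


label, scores = zero_shot_predict(
    [0.9, 0.1, 0.0],                                     # toy image embedding
    [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]],  # toy class-name embeddings
    ["dog", "cat", "car"],
)
print(label)  # dog
```

The learned temperature from training is left out here: multiplying every score by the same positive constant does not change which class scores highest.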

Resources:

CLIP is a model for telling you how well a given image and a given text caption fit together.

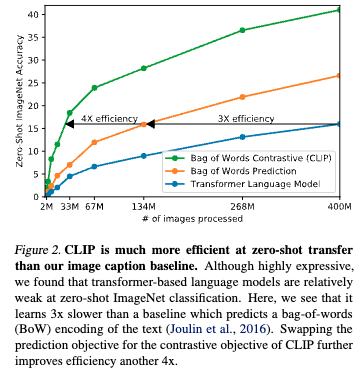

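- Blue - Initial approach: an image CNN plus a Transformer language model, trained to predict the exact caption of the image

- Orange - Bag-of-words prediction: the model predicts which words appear in the caption, ignoring their order (see Bag of Tricks for Efficient Text Classification). At eval time (zero-shot ImageNet), it is presented with all the candidate class names and has to pick the correct one

- Green - Bag-of-words contrastive (CLIP): the prediction objective is replaced by a contrastive loss, so the model only has to match each image with its own caption within the batch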
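To make the difference between the orange and green objectives concrete, here is a hypothetical sketch of a bag-of-words prediction loss. It assumes the image side has already been mapped to one logit per vocabulary word and uses the caption's normalized word counts as the target distribution; the vocabulary, whitespace tokenization, and function names are illustrative assumptions, not the paper's exact setup.

```python
import math
from collections import Counter


def bag_of_words_target(caption, vocab):
    """Normalized word counts of the caption over a fixed vocabulary (order is ignored)."""
    counts = Counter(w for w in caption.lower().split() if w in vocab)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("caption contains no vocabulary words")
    return [counts[w] / total for w in vocab]


def bow_prediction_loss(vocab_logits, caption, vocab):
    """Cross-entropy between softmax(vocab_logits) and the caption's word distribution."""
    target = bag_of_words_target(caption, vocab)
    m = max(vocab_logits)  # stabilize the log-sum-exp
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in vocab_logits))
    # log-softmax of word k is z_k - log_sum_exp
    return -sum(p * (z - log_sum_exp) for p, z in zip(target, vocab_logits))


vocab = ["dog", "grass", "car"]
caption = "A dog on the grass"
print(bow_prediction_loss([5.0, 5.0, -5.0], caption, vocab))  # low: logits favor dog and grass
print(bow_prediction_loss([-5.0, -5.0, 5.0], caption, vocab))  # high: logits favor car
```

The contrastive objective in the Figure 3 pseudocode avoids predicting caption words at all: the model only has to score each image's own caption above the other n - 1 captions in the batch.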