Page Rank

Algorithm behind ranking pages. Main ingredient behind Google Search Engine.

See CS370 and CS451 notes.

Does Google search still use Page Rank?

Google today uses hundreds of ranking factors, including machine learning and AI models, to evaluate the relevance and quality of content.

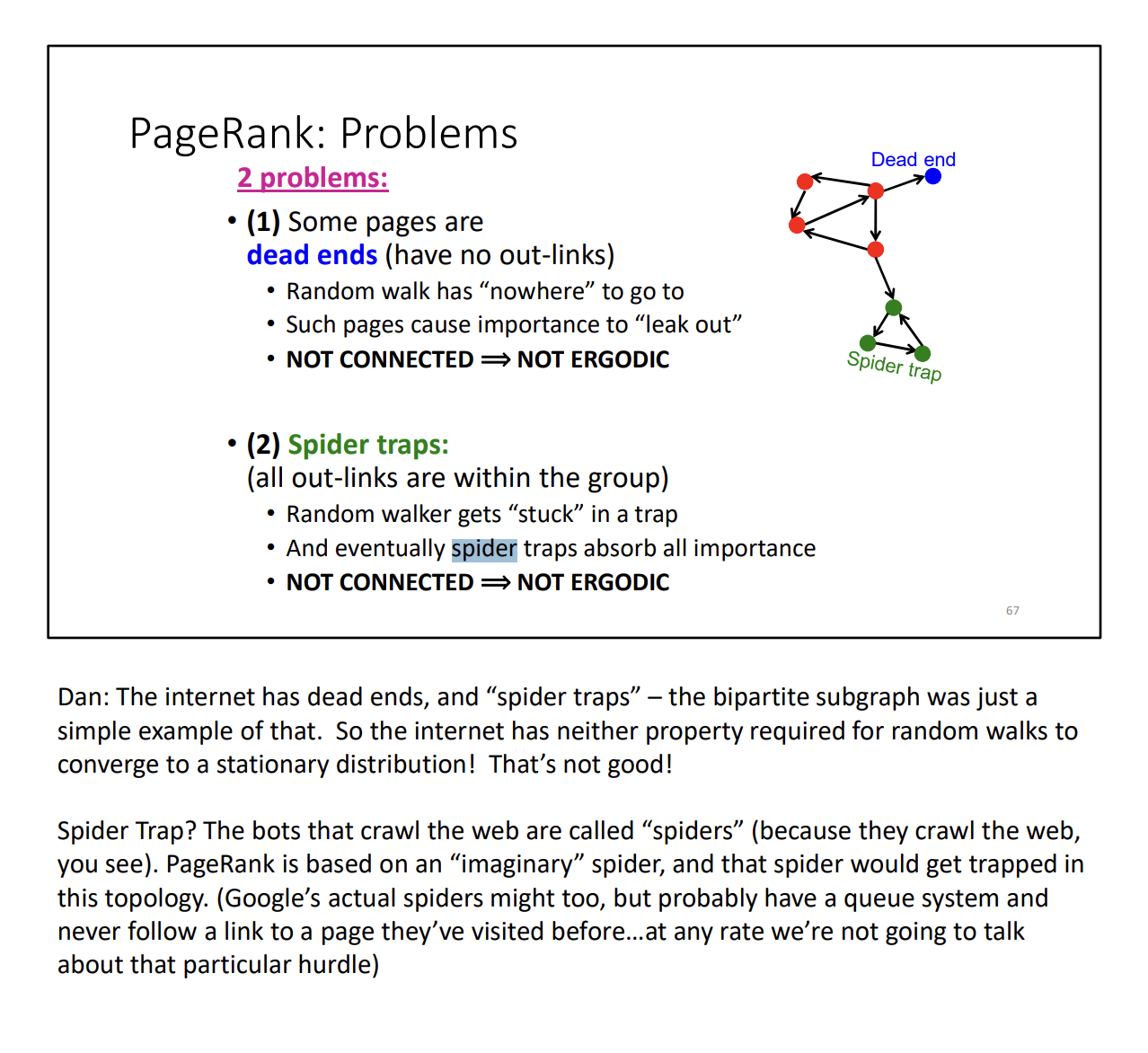

- Spider trap are cycles

CS451

Google’s solution for traps is to teleport to another node with probability .

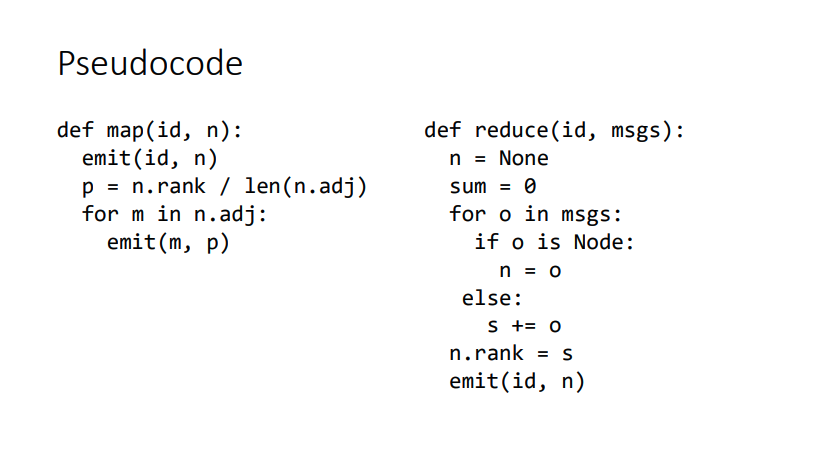

- This is the initial simple code, where we simply accumulate the node’s probabilities. We have higher chance to end up in nodes with higher probabilities

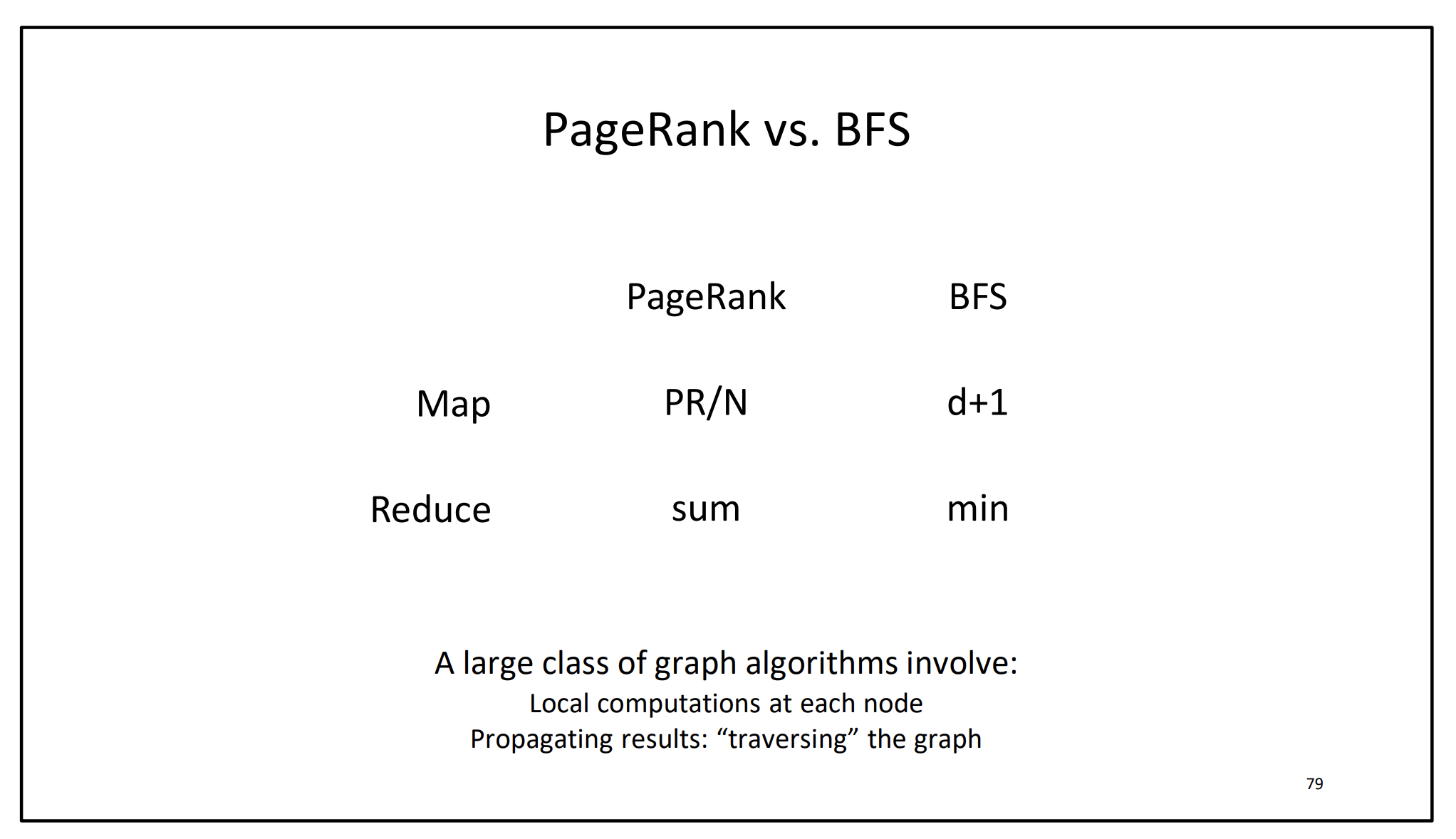

This is actually extremely similar to how Distributed BFS works, with just slightly different updates.

There's an issue with this implementation

Over time, there can be mass that starts to be lost because of dangling nodes (nodes with no edges).

These nodes have initial mass assigned to them, but then since they have no neighbours, that mass assigned just disappears.

- The mass doesn’t get to the reducer

- Look at this implementation for how they deal with it https://git.uwaterloo.ca/cs451/bespin/-/blob/master/src/main/java/io/bespin/java/mapreduce/pagerank/RunPageRankBasic.java?ref_type=heads

- They split in 2 stages:

- 1st stage: Distribute weight to edges

- 2nd stage: Do random jumps, and also distribute extra weights that were missing

- my personalizedpagerank implementation https://git.uwaterloo.ca/s36gong/cs451-f24/-/blob/main/src/main/java/ca/uwaterloo/cs451/a4/RunPersonalizedPageRankBasic.java?ref_type=heads

- Just check how many neighbours first. If no neighbours, do the jump

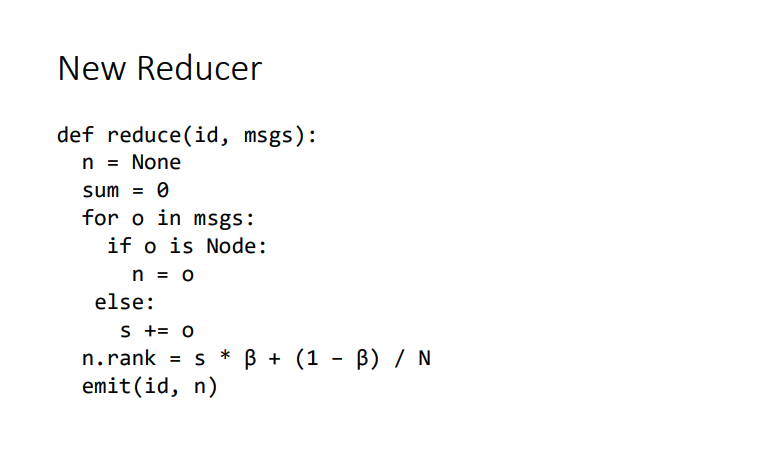

For random jumps, just change the reducer side:

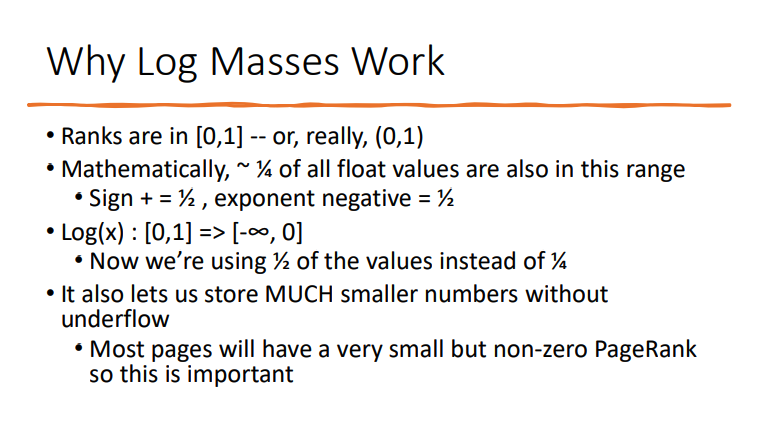

In the bespin implementation, we store log masses, as opposed to masses, because is a huge number. Each node initially starts as , which is a tiny number.

float mass = node.getPageRank() - (float) StrictMath.log(list.size());

The teacher introduces some variations, though don’t be too worried.

PageRank in Spark

val lines = sc.textFile("the-internet.txt")

val damp = 0.85

val links = lines.map{ s =>

val parts = s.split("\\s+")

(parts(0), parts(1))

}.distinct().groupByKey().cache()

var ranks = links.mapValues(v => 1.0)

for (i <- 1 to iters) {

val contribs = links.join(ranks).values.flatMap{ case (urls, rank) =>

val size = urls.size

urls.map(url => (url, rank / size))

}

ranks = contribs.reduceByKey(_ + _).mapValues((1 – damp) + damp * _)

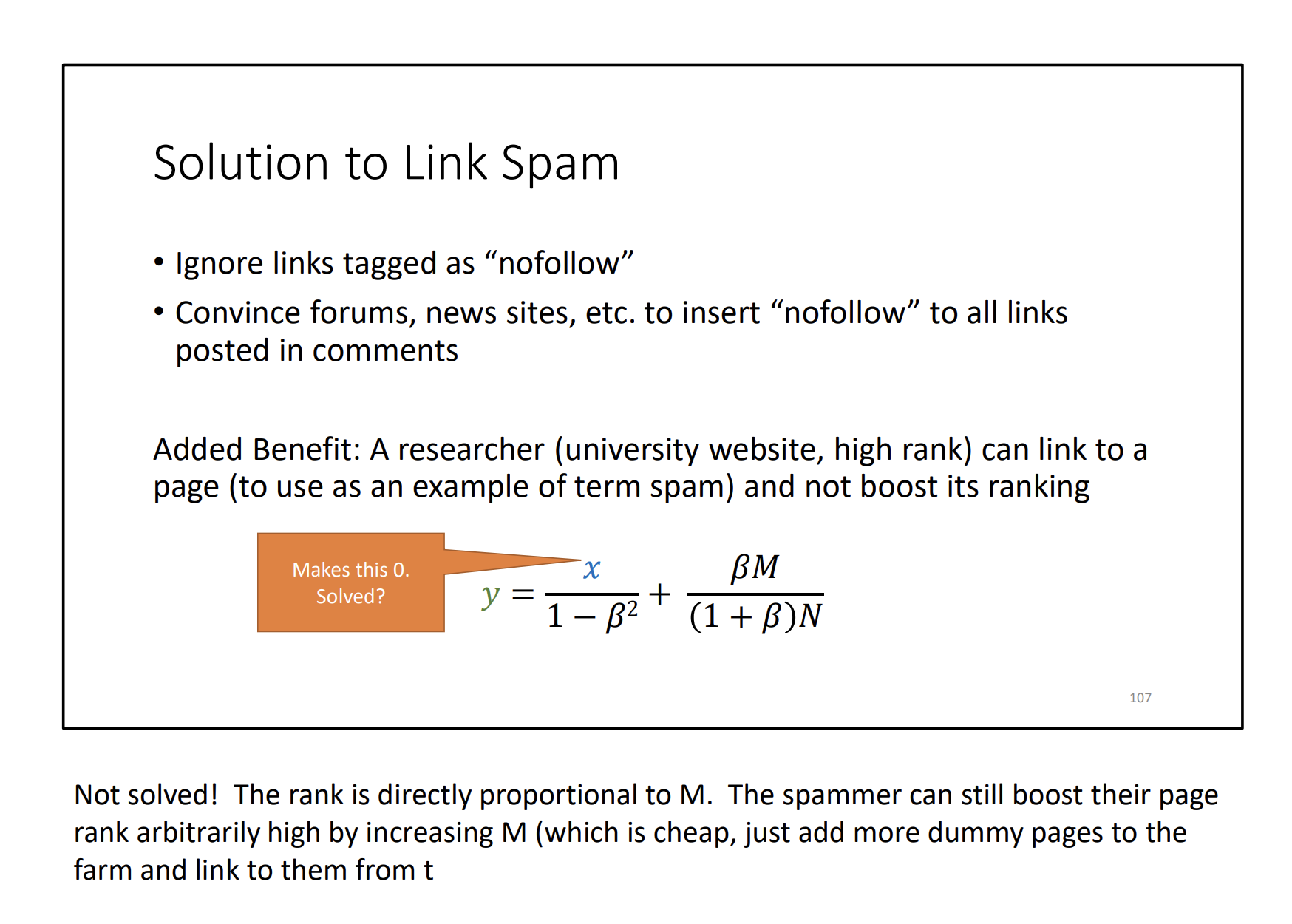

}There’s link spamming

A first idea:

Here’s another solution: Use a personalized page rank.

- Define source nodes, as opposed to 1/N initial weight for all pages

Random jumps do not lead into the farm pages, so they’re not accumulating very much mass.

What to use for “Source Nodes” We should identify “trustworthy” pages Easy to say… What’s trustworthy? Domains with strict entry requirements?

- .edu, .gov, etc.

CS370

The main formula is

Google uses

How do you guarantee that there is convergence of page rank?

- You check for the eigenvalues. You can see the remaining values

We have page rank convergence if is a positive Markov matrix.