PyTorch

https://bnikolic.co.uk/blog/fast-nonlinear-optimisation.html

Resources

Good codebases

Get number of parameters in model

sum(p.numel() for p in model.parameters() if p.requires_grad) # trainable parameters only

Split training set into training and validation (to detect overfitting)

train_dataset, val_dataset = torch.utils.data.random_split(
    train_val_dataset, [0.95, 0.05]
)
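A quick sanity check of both snippets. This is a sketch that assumes a toy nn.Linear model and a hypothetical 100-sample TensorDataset standing in for train_val_dataset; fractional lengths in random_split need PyTorch 1.13 or later.

```python
import torch
from torch import nn
from torch.utils.data import TensorDataset, random_split

# Toy model (assumption): 10 * 5 weights + 5 biases = 55 trainable parameters
model = nn.Linear(10, 5)
n_params = sum(p.numel() for p in model.parameters() if p.requires_grad)
print(n_params)  # 55

# Hypothetical stand-in for train_val_dataset: 100 random samples with binary labels
train_val_dataset = TensorDataset(torch.randn(100, 10), torch.randint(0, 2, (100,)))

# Seeded generator so the split is reproducible between runs
generator = torch.Generator().manual_seed(42)
train_dataset, val_dataset = random_split(
    train_val_dataset, [0.95, 0.05], generator=generator
)
print(len(train_dataset), len(val_dataset))  # 95 5
```

Passing a seeded generator is optional, but without it the validation set changes every run, which makes comparing runs harder.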

PyTorch is replacing TensorFlow as the state-of-the-art DL framework.

Tips:

- ALWAYS use torch.compile (PyTorch 2.0+)

Torch

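torch.cat() # INEFFICIENT when called repeatedly in a loop: every call copies all the data again, so collect tensors in a list and cat once

torch.unbind # removes a dimension, returning a tuple of slices along it

torch.as_tensor # converts data to a tensor, avoiding a copy when possible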
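A small sketch of the three calls, using hypothetical 2×3 chunks: the loop shows the slow repeated-cat pattern next to the single cat, and the NumPy part shows as_tensor sharing memory when the array is on the CPU with a compatible dtype.

```python
import numpy as np
import torch

# Hypothetical chunks, e.g. per-batch outputs collected during a loop
chunks = [torch.full((2, 3), float(i)) for i in range(4)]

# Slow pattern: each torch.cat allocates a new tensor and copies everything so far
slow = chunks[0]
for c in chunks[1:]:
    slow = torch.cat([slow, c], dim=0)

# Better: keep a Python list and concatenate once at the end
fast = torch.cat(chunks, dim=0)
print(fast.shape)  # torch.Size([8, 3])

# torch.unbind removes dim 0 and returns a tuple of its slices
rows = torch.unbind(fast, dim=0)
print(len(rows), rows[0].shape)  # 8 torch.Size([3])

# torch.as_tensor reuses the NumPy buffer instead of copying it
arr = np.zeros(3, dtype=np.float32)
t = torch.as_tensor(arr)
arr[0] = 5.0
print(t[0].item())  # 5.0, because t shares memory with arr
```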

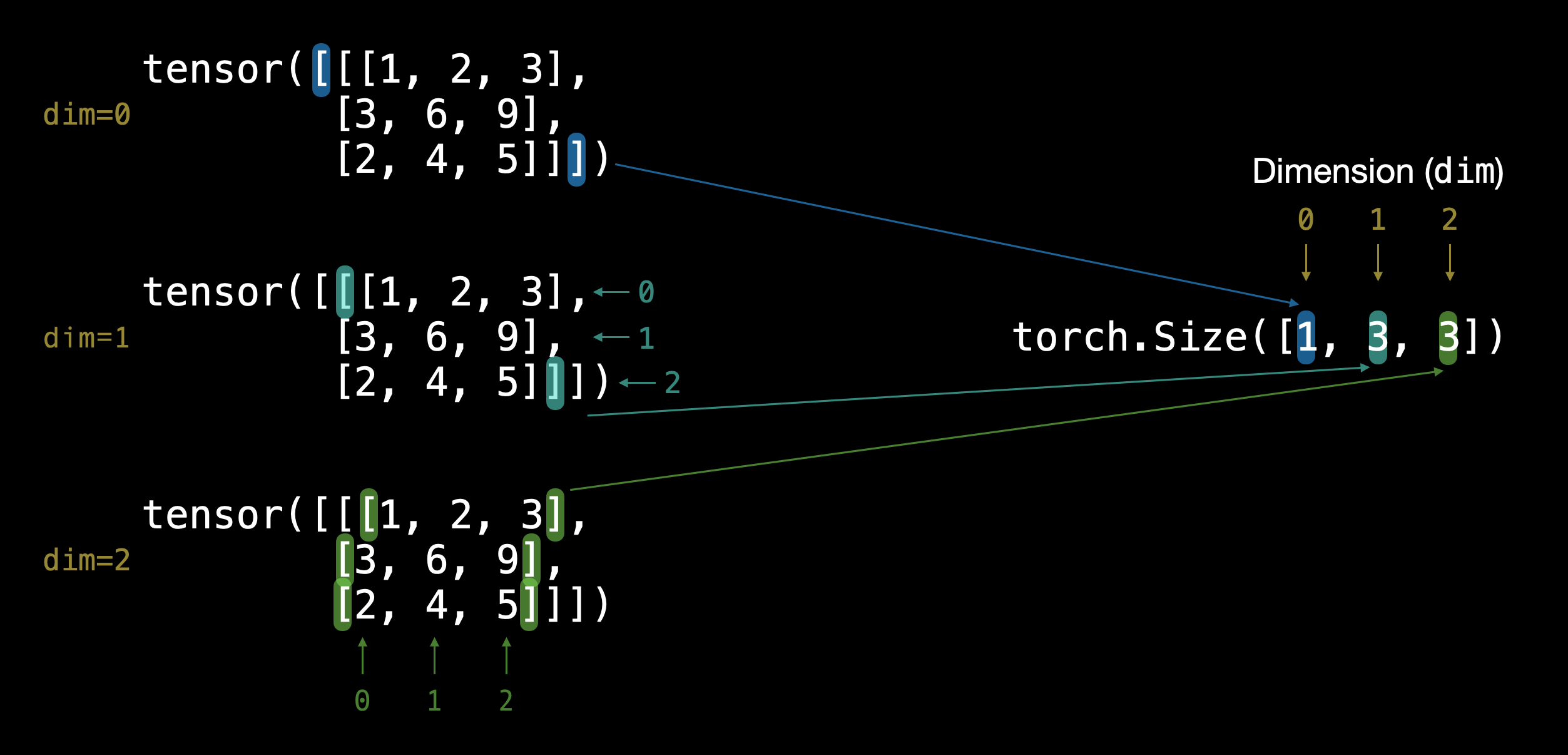

Visualizing a Tensor

When you index with an integer, you collapse (remove) that dimension; slicing with : keeps it.
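A minimal sketch of that rule on a 3×4 tensor: each integer index removes the dimension it is applied to, while a slice keeps the dimension (possibly with length 1).

```python
import torch

x = torch.arange(12).reshape(3, 4)

print(x[0].shape)     # torch.Size([4]): integer on dim 0, so dim 0 is gone
print(x[:, 0].shape)  # torch.Size([3]): integer on dim 1, so dim 1 is gone
print(x[0:1].shape)   # torch.Size([1, 4]): a slice keeps the dim
print(x[0, 0].shape)  # torch.Size([]): two integers leave a 0-d scalar tensor
```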

Indexing

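I swear this indexing messes with my brain. Consider the example below:

X = torch.randint(0, 27, (32, 3)) # index tensors must be integers (long); each value is a row number in [0, 27)

C = torch.randn((27, 2))

C[X] # valid: picks row C[i] for every entry i of X

C[X].shape # torch.Size([32, 3, 2])

Reshaping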

Torch can reshape with either .view() or .reshape(). Neither changes X itself; both return a new tensor:

X.view(-1, 6) # shares X's memory; only works when the memory layout allows it (e.g. contiguous)

X.reshape(-1) # returns a view when possible, otherwise a copy; X keeps its original shape

PyTorch view: https://wandb.ai/ayush-thakur/dl-question-bank/reports/An-Introduction-To-The-PyTorch-View-Function--VmlldzoyMDM0Nzg

input_HLK = input_LD.view(L, H, K).permute(1, 0, 2) # (L, D) -> (L, H, K) -> (H, L, K) with D = H * K; view can be chained with permute
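A sketch of the view/reshape behaviour described in these reshaping notes. The sizes L, H, K below are hypothetical values chosen only so that D = H * K; the check at the end confirms which slice of input_LD lands in each head.

```python
import torch

X = torch.arange(12.0).reshape(4, 3)

# view returns a new tensor over the same storage: writing through it changes X
V = X.view(-1, 6)
V[0, 0] = 100.0
print(X[0, 0].item())  # 100.0
print(X.shape)         # torch.Size([4, 3]); X's own shape is unchanged

# Hypothetical sizes for the head-splitting line: D = H * K
L, H, K = 5, 2, 4
input_LD = torch.randn(L, H * K)
input_HLK = input_LD.view(L, H, K).permute(1, 0, 2)
print(input_HLK.shape)  # torch.Size([2, 5, 4])

# Head h of position l holds features h*K .. (h+1)*K - 1 of that position
print(torch.equal(input_HLK[1, 0], input_LD[0, 4:8]))  # True
```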

Memory Layout

https://pytorch.org/docs/stable/generated/torch.Tensor.contiguous.html
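A small sketch of why contiguity matters: transposing only swaps strides, so the result is no longer contiguous and .view() can refuse to flatten it, while .contiguous().view() or .reshape() copy the data into a fresh layout.

```python
import torch

x = torch.arange(6).reshape(2, 3)
y = x.t()  # transpose: same storage, swapped strides

print(x.is_contiguous(), y.is_contiguous())  # True False
print(x.stride(), y.stride())                # (3, 1) (1, 3)

try:
    y.view(-1)  # view needs a layout compatible with the requested shape
except RuntimeError as e:
    print("view failed:", type(e).__name__)

flat1 = y.contiguous().view(-1)  # copy into a contiguous layout first
flat2 = y.reshape(-1)            # or let reshape copy when it has to
print(flat1)  # tensor([0, 3, 1, 4, 2, 5])
```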

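Transpose

Multiple transposes?

Transpose is a special case of .permute(): x.transpose(dim0, dim1) swaps exactly two dimensions, while .permute() reorders all of them at once, so a chain of transposes can be collapsed into a single permute.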
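A quick check that a chain of transposes is one permute: track where each original dimension ends up, and pass that order to .permute().

```python
import torch

x = torch.randn(2, 3, 4)

# Two transposes in a row: (2, 3, 4) -> (3, 2, 4) -> (3, 4, 2)
a = x.transpose(0, 1).transpose(1, 2)

# Same reordering in one call: new dim i is old dim order[i]
b = x.permute(1, 2, 0)

print(a.shape, torch.equal(a, b))  # torch.Size([3, 4, 2]) True
```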

Tensors

Tensors are the fundamental building block of machine learning. Their job is to represent data in a numerical way.

There is a difference between torch.tensor and torch.Tensor:

torch.tensor # factory function; infers the dtype from the data

torch.Tensor # the tensor class; doesn't infer the dtype, always uses the default float32

import torch

# Scalars

scalar = torch.tensor(7)

scalar # tensor(7)

scalar.ndim # 0

scalar.item() # 7 (a plain Python number)

vector = torch.tensor([7, 7])

# Initializing values

tensor = torch.rand((3, 4))

zeros = torch.zeros((3, 4))

ones = torch.ones((3, 4))

# Arithmetic

torch.matmul(tensor, tensor.T) # inner dims must match: (3, 4) @ (4, 3)

torch.mm(tensor, tensor.T) # alternative for 2-D tensors only (no broadcasting)

tensor @ tensor.T # operator form of torch.matmul, same result
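A sketch of the matmul shape rule with the (3, 4) tensor from above: the inner dimensions must agree, and torch.matmul (and @) also broadcast over leading batch dimensions, which torch.mm does not. The batch of 5 is an arbitrary illustrative size.

```python
import torch

tensor = torch.rand((3, 4))

# Inner dimensions must match: (3, 4) @ (4, 3) -> (3, 3)
out = tensor @ tensor.T
print(out.shape)  # torch.Size([3, 3])
print(torch.allclose(out, torch.matmul(tensor, tensor.T)))  # True

# (3, 4) @ (3, 4) fails: inner dimensions 4 and 3 differ
try:
    tensor @ tensor
except RuntimeError as e:
    print("shape mismatch:", type(e).__name__)

# torch.matmul broadcasts the leading batch dim; torch.mm is strictly 2-D
batch = torch.rand((5, 3, 4))
print(torch.matmul(batch, tensor.T).shape)  # torch.Size([5, 3, 3])
```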

# Other functions

zero_to_ten = torch.arange(start=0, end=10, step=1)

ten_zeros = torch.zeros_like(input=zero_to_ten) # will have same shape

torch.max(zero_to_ten), torch.min(zero_to_ten) # tensor(9), tensor(0)

tensor = torch.arange(10., 100., 10.)

tensor_float16 = tensor.type(torch.float16)

The same operations can be done with NumPy.
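A minimal sketch of moving between NumPy and PyTorch. One difference worth noting: NumPy's default float is float64, and torch.from_numpy keeps that dtype rather than converting to float32. Both directions share memory on the CPU.

```python
import numpy as np
import torch

arr = np.arange(10.0, 100.0, 10.0)  # float64 in NumPy
t = torch.from_numpy(arr)           # shares memory, keeps dtype
print(t.dtype)                      # torch.float64

# The same operation in both libraries gives the same number
print(np.allclose(arr.max(), t.max().item()))  # True

back = t.numpy()                    # CPU tensor -> ndarray, also shares memory
t[0] = -1.0
print(arr[0], back[0])              # -1.0 -1.0
```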

Tensor data types: https://pytorch.org/docs/stable/tensors.html#data-types

You can cast to float (float32) by using .float()
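A sketch of dtype inference and casting, assuming the default float dtype has not been changed with torch.set_default_dtype. It shows torch.tensor inferring int64 from integer data while torch.Tensor always gives float32, and that casts return new tensors.

```python
import torch

a = torch.tensor([1, 2, 3])   # dtype inferred from the data
b = torch.tensor([1.0, 2.0])  # float data -> default float dtype
c = torch.Tensor([1, 2, 3])   # class constructor: always the default float dtype
print(a.dtype, b.dtype, c.dtype)  # torch.int64 torch.float32 torch.float32

# Casting returns a new tensor; the original keeps its dtype
print(a.float().dtype)              # torch.float32
print(a.to(torch.float16).dtype)    # torch.float16
print(a.type(torch.float16).dtype)  # torch.float16
print(a.dtype)                      # torch.int64
```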

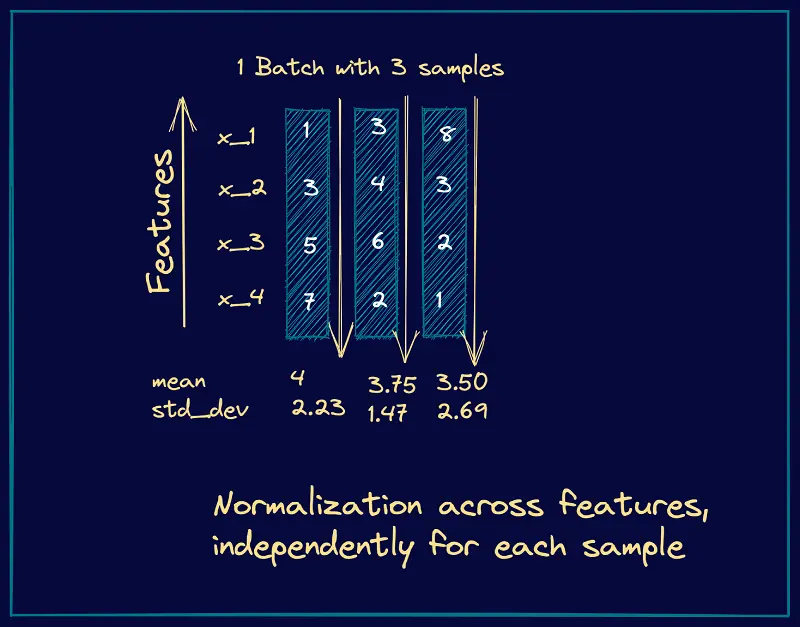

Dimensions of tensors

For Mac

Macs don't have an Nvidia GPU (so no CUDA), but PyTorch supports the Mac GPU through the MPS (Metal Performance Shaders) backend. Use device = torch.device("mps")
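A sketch of picking a device so the same script runs on a Mac, an Nvidia machine, or a plain CPU. The CUDA-first ordering is my own choice, not something PyTorch requires; torch.backends.mps.is_available() needs PyTorch 1.12 or later.

```python
import torch

# Prefer CUDA, then Apple's MPS backend, then fall back to the CPU
if torch.cuda.is_available():
    device = torch.device("cuda")
elif torch.backends.mps.is_available():
    device = torch.device("mps")
else:
    device = torch.device("cpu")

x = torch.ones((2, 2), device=device)
print(x.device)
```

Models need the same treatment (model.to(device)), since tensors and parameters on different devices cannot be mixed in one operation.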

Torch Argmax

I was getting a little confused by the indexing, so here are some notes to sort it out.

Let’s visualize torch.argmax(x, dim=0) and torch.argmax(x, dim=1) using a small 2D tensor:

👇 Example tensor x (shape (3, 4)):

         D →
         0  1  2  3
       ┌────────────
B   0  │ 1  7  2  9
    1  │ 4  3  8  6
    2  │ 0  5  6  4

x.shape = (3, 4) → 3 rows (B = batch), 4 columns (D = features)

Ahh, this mental model picture is messing with my understanding; I needed a refresher on this.

torch.argmax(x, dim=0) — along batch (dim=0)

- For each column, find the index of the max down the rows:

torch.argmax(x, dim=0) → tensor([1, 0, 1, 0]) # shape: (4,)

- You’re scanning downward (↓) for each column.

torch.argmax(x, dim=1) — along features (dim=1)

For each row, find the index of the max across the columns:

- Row 0 → max is 9 at col 3
- Row 1 → max is 8 at col 2
- Row 2 → max is 6 at col 2

📤 Output:


torch.argmax(x, dim=1) → tensor([3, 2, 2]) # shape: (3,)

You’re scanning rightward (→) for each row.
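Running the example tensor confirms both results. A handy way to remember it: the dim you pass is the one that disappears from the output shape, unless keepdim=True keeps it with length 1.

```python
import torch

x = torch.tensor([[1, 7, 2, 9],
                  [4, 3, 8, 6],
                  [0, 5, 6, 4]])

print(torch.argmax(x, dim=0))  # tensor([1, 0, 1, 0]); dim 0 (B) is reduced away
print(torch.argmax(x, dim=1))  # tensor([3, 2, 2]); dim 1 (D) is reduced away

# keepdim=True keeps the reduced dim with length 1, handy for broadcasting
print(torch.argmax(x, dim=1, keepdim=True).shape)  # torch.Size([3, 1])
```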

Ideas

Some ops are not yet implemented for MPS: https://github.com/pytorch/pytorch/issues/77764. Setting the environment variable PYTORCH_ENABLE_MPS_FALLBACK=1 runs them on the CPU instead.