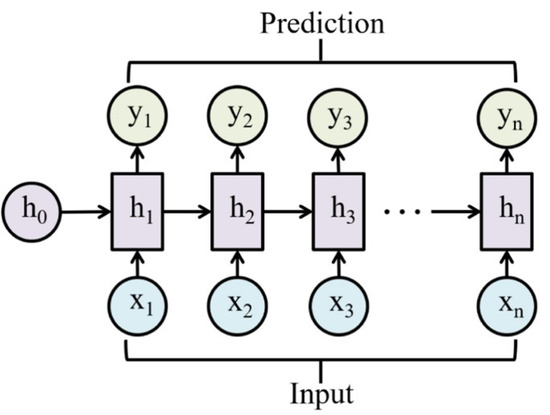

Recurrent Neural Network (RNN)

“Learning from the past”. Good for time series data.

Resources

- https://karpathy.github.io/2015/05/21/rnn-effectiveness/

- https://www.youtube.com/watch?v=TQQlZhbC5ps&ab_channel=CodeEmporium

- https://cs231n.github.io/rnn/

- https://www.deeplearningbook.org/contents/rnn.html

Three types of models:

- Vector-sequence models (ex: captioning an image)

- Sequence-vector models (ex: sentiment analysis, converting a sentence into a number)

- Sequence-sequence models (ex: language translation)
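To make the three shapes concrete, here is a toy sketch using a scalar RNN in plain Python. Everything in it is an assumption for illustration: the function names (`step`, `readout`, and so on), the fixed weights, and the choice to feed zeros into the decoder. Real captioning or translation models are much richer (for example, they usually feed the previous output back in), so read this only as a picture of what goes in and what comes out.

```python
import math


def step(x, h, w_x=0.5, w_h=0.9):
    """One scalar RNN step: mix the input x with the previous hidden state h."""
    return math.tanh(w_x * x + w_h * h)


def readout(h, w_y=2.0):
    """Map the hidden state to an output (a plain linear readout here)."""
    return w_y * h


def sequence_to_vector(xs):
    """Read a whole sequence, emit a single output (e.g. a sentiment score)."""
    h = 0.0
    for x in xs:
        h = step(x, h)
    return readout(h)


def vector_to_sequence(v, length):
    """Take one input, emit a sequence (e.g. image features -> caption)."""
    h = step(v, 0.0)  # the single input seeds the hidden state
    ys = []
    for _ in range(length):
        ys.append(readout(h))
        h = step(0.0, h)  # no new input; only the hidden state evolves
    return ys


def sequence_to_sequence(xs, out_length):
    """Encode an input sequence, then decode an output sequence."""
    h = 0.0
    for x in xs:  # encoder: compress the input into h
        h = step(x, h)
    ys = []
    for _ in range(out_length):  # decoder: unroll from the encoded state
        ys.append(readout(h))
        h = step(0.0, h)
    return ys


print(sequence_to_vector([1.0, 2.0, 3.0]))
print(vector_to_sequence(1.0, 4))
print(sequence_to_sequence([1.0, 2.0], 5))
```

In all three cases the only thing connecting one time step to the next is the hidden state `h`.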

Disadvantages:

- Slow to train

- Long sequences lead to vanishing / exploding gradients → Solution: Long Short-Term Memory
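A quick way to see the vanishing / exploding gradient problem is a scalar linear recurrence, h_t = w * h_{t-1}. This is a simplification I am assuming for illustration (no nonlinearity, no inputs, a single weight `w`), but the mechanism is the same in a real RNN: backpropagating through T steps multiplies T per-step factors together.

```python
def gradient_through_time(w, steps):
    """d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    grad = 1.0
    for _ in range(steps):
        # Chain rule: each step contributes a factor d h_t / d h_{t-1} = w.
        grad *= w
    return grad


for w in (0.5, 1.0, 1.5):
    print(f"w={w}: gradient after 50 steps = {gradient_through_time(w, 50):.3e}")
```

With `w` below 1 the gradient shrinks toward zero, with `w` above 1 it blows up, and only `w` exactly 1 keeps it stable.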

Output vs hidden state

I had this confused when I was reading the World Models paper. In an RNN, the hidden state is the internal representation that carries information forward in time. It’s designed to be rich enough to summarize the relevant history of the sequence.

In my words

The way you can think of $h_t$ (the hidden state) is that it’s what you have right before some transformation to get the output ($y_t$). But $h_t$ is what gets propagated through time. It doesn’t make sense to propagate $y_t$, since that’s generally lossy: it holds less information than the hidden state does.

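The output $y_t$ is typically computed as a simple transformation of $h_t$, e.g.

$$y_t = W_{hy} h_t + b_y$$

(with $W_{hy}$ and $b_y$ a learned output weight matrix and bias), or through a softmax layer for classification.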

Propagating $y_t$ instead of $h_t$ wouldn’t make sense because $y_t$ is usually a lower-dimensional, task-specific (and often lossy) projection of $h_t$. It doesn’t preserve enough information for the next step in the sequence to condition on.
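Here is a minimal sketch of a single RNN step in plain Python that makes the hidden state / output split explicit. The names (`rnn_step`, `W_xh`, `W_hh`, `W_hy`), the sizes, the tanh nonlinearity, and the omission of bias terms are all my own assumptions for illustration, not taken from any particular paper or library.

```python
import math
import random


def matvec(W, v):
    """Multiply a matrix (list of rows) by a vector (list of floats)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def rnn_step(x, h_prev, W_xh, W_hh, W_hy):
    """Return (new hidden state, output). Only the hidden state is reused."""
    pre = [a + b for a, b in zip(matvec(W_xh, x), matvec(W_hh, h_prev))]
    h = [math.tanh(p) for p in pre]  # rich internal summary of the history
    y = matvec(W_hy, h)              # small task-specific projection of h
    return h, y


def rand_matrix(rows, cols):
    return [[random.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


random.seed(0)
input_size, hidden_size, output_size = 3, 8, 1
W_xh = rand_matrix(hidden_size, input_size)
W_hh = rand_matrix(hidden_size, hidden_size)
W_hy = rand_matrix(output_size, hidden_size)

h = [0.0] * hidden_size  # initial hidden state
outputs = []
for x in ([1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]):
    h, y = rnn_step(x, h, W_xh, W_hh, W_hy)  # h feeds the next step; y does not
    outputs.append(y)

print(len(h), [len(y) for y in outputs])
```

Note the sizes: the hidden state has 8 numbers while each output has just 1, so feeding the output back instead would throw away most of what the network knows about the sequence so far.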

That’s why the recurrence is defined in terms of $h_{t-1}$ and not in terms of $y_{t-1}$: