Multilayer Perceptron (MLP) / Neural Network (NN)

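A neural network is a universal function approximator. With at least one hidden layer, enough neurons, and a non-linear activation function, it can approximate any continuous function on a bounded input domain to arbitrary accuracy.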

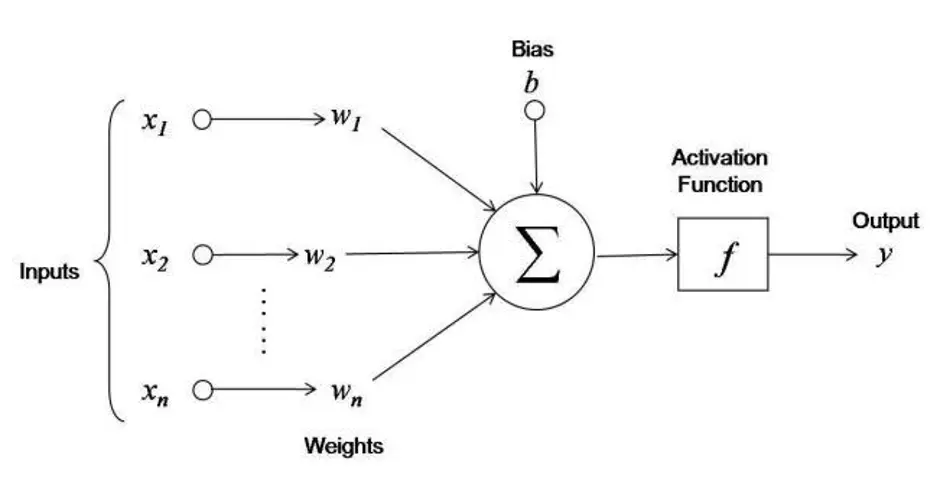

We use activation functions to introduce non-linearity. If every layer were linear, all of the linear layers would collapse into a single equivalent linear layer, so stacking them would add no expressive power.
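Algebraically, two linear layers with no activation between them collapse as $W_2(W_1\mathbf{x} + \mathbf{b}_1) + \mathbf{b}_2 = (W_2W_1)\mathbf{x} + (W_2\mathbf{b}_1 + \mathbf{b}_2)$. The sketch below checks this numerically in plain Python. The layer sizes, the random weights, and the helper names (`matvec`, `matmul`, `vec_add`) are illustrative choices, not part of any library.

```python
import random

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def matmul(A, B):
    """Multiply matrix A (m x k) by matrix B (k x n), giving an m x n matrix."""
    cols = list(zip(*B))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in A]

def vec_add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]

random.seed(0)
n, h, m = 3, 4, 2  # input, hidden, and output sizes (arbitrary)
W1 = [[random.uniform(-1, 1) for _ in range(n)] for _ in range(h)]
b1 = [random.uniform(-1, 1) for _ in range(h)]
W2 = [[random.uniform(-1, 1) for _ in range(h)] for _ in range(m)]
b2 = [random.uniform(-1, 1) for _ in range(m)]

x = [0.5, -1.0, 2.0]

# Two linear layers with NO activation function in between.
two_layers = vec_add(matvec(W2, vec_add(matvec(W1, x), b1)), b2)

# The equivalent single linear layer: W = W2 W1 and b = W2 b1 + b2.
W = matmul(W2, W1)
b = vec_add(matvec(W2, b1), b2)
one_layer = vec_add(matvec(W, x), b)

print(two_layers)
print(one_layer)  # same values, up to floating-point rounding
```

Inserting any non-linear function (e.g. ReLU) between the two layers breaks this equality, which is exactly why activation functions are needed.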

- Credit to https://www.pinecone.io/learn/batch-layer-normalization/ for the inspiration on how to draw it

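I separated the sum and the + bias in the visualization, even though they are really one step, because that split mirrors the implementation. There we use the matrix representation $\mathbf{z} = W\mathbf{x} + \mathbf{b}$: the matrix product $W\mathbf{x}$ computes every neuron's weighted sum at once, and the bias vector $\mathbf{b}$ is added afterwards.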
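A minimal sketch of a single neuron that follows the drawing's split: first the weighted sum, then the bias, then the activation. ReLU is assumed as the activation, and the numbers are made up for illustration.

```python
def relu(z):
    """ReLU activation, max(0, z); chosen here only as an example non-linearity."""
    return max(0.0, z)

def neuron(inputs, weights, bias):
    # 1. Weighted sum of the inputs (the "sum" node in the drawing).
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    # 2. Add the bias (the "+ bias" node), giving the pre-activation z.
    z = weighted_sum + bias
    # 3. Apply the activation function.
    return relu(z)

# Illustrative values: 1.0*0.5 + 2.0*(-0.25) + 3.0*0.1 + 0.2
out = neuron(inputs=[1.0, 2.0, 3.0], weights=[0.5, -0.25, 0.1], bias=0.2)
print(out)  # ≈ 0.5
```

In the matrix form, each row of $W$ holds one neuron's `weights`, so computing $W\mathbf{x} + \mathbf{b}$ runs this function for every neuron in the layer at once.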

MLP vs. FFN?

All MLPs are FFNs (feedforward networks, in which data flows from input to output without cycles), but not all FFNs are standalone MLPs.

- A CNN is a type of FFN composed of convolution and pooling layers, typically followed by fully connected (MLP) layers.

A fully connected layer is one in which every node has a weight connecting it to every node in the previous layer. In an MLP every layer is fully connected, so each node is also connected to every node in the next layer.

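If your input is $\mathbf{x} \in \mathbb{R}^{n}$ and your hidden dimension is $h$, you need an $h \times n$ weight matrix $W$ and a bias $\mathbf{b} \in \mathbb{R}^{h}$:

$$\mathbf{z} = W\mathbf{x} + \mathbf{b}, \qquad W \in \mathbb{R}^{h \times n},\ \mathbf{b} \in \mathbb{R}^{h},\ \mathbf{z} \in \mathbb{R}^{h}$$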
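A plain-Python sketch of a fully connected layer that enforces these shapes. Row $i$ of `W` holds the weights from all $n$ inputs to hidden neuron $i$. The function name `dense_forward` and the example values are hypothetical.

```python
def dense_forward(W, b, x):
    """Fully connected layer: z = W x + b.

    W: h rows, each of length n (one row of weights per hidden neuron)
    b: length h (one bias per hidden neuron)
    x: length n (the input vector)
    """
    h, n = len(W), len(x)
    if any(len(row) != n for row in W):
        raise ValueError(f"every row of W must have length n={n}")
    if len(b) != h:
        raise ValueError(f"b must have length h={h}")
    # Every hidden neuron (row) sees every input, which is what
    # makes the layer "fully connected".
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]

# n = 3 inputs, h = 2 hidden neurons, so W is 2 x 3 and b has 2 entries.
W = [[1.0, 0.0, -1.0],
     [0.5, 0.5, 0.5]]
b = [0.0, 1.0]
z = dense_forward(W, b, [2.0, 4.0, 6.0])
print(z)  # [-4.0, 7.0]
```

The layer has $h \cdot n + h$ parameters (here $2 \cdot 3 + 2 = 8$), which is why fully connected layers get expensive as the input grows.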

Concepts

Types of Neural Networks

Steps

- Import the training set; each example's features are fed into the input layer.

- Forward propagate the data from the input layer through the hidden layer(s) to the output layer, where we get a predicted value $\hat{y}$. At each layer, forward propagation multiplies the inputs by the weights (initialized randomly before training), adds the bias, and applies the activation function.

- Measure the error between the predicted value and the real value with a loss function (e.g., mean squared error).

- Backpropagate the error to compute the gradient of the loss with respect to every weight and bias, then use gradient descent to update them.

- Repeat these steps until the error is sufficiently small or stops improving. Gradient descent finds good weights, but it is not guaranteed to find the optimal ones.
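The steps above can be sketched end to end as a tiny network trained on XOR, a toy data set chosen here for illustration. Assumptions that do not come from these notes: 2 inputs, 4 sigmoid hidden neurons, 1 sigmoid output, mean squared error, full-batch gradient descent with learning rate 0.5, and hand-derived gradients. Real code would normally use a framework with automatic differentiation instead.

```python
import math
import random

def sigmoid(z):
    """Logistic sigmoid activation."""
    return 1.0 / (1.0 + math.exp(-z))

# Import the training set (XOR); each x goes into the input layer.
X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]]
Y = [0.0, 1.0, 1.0, 0.0]

random.seed(42)
n_in, n_hidden = 2, 4
# Weights start random; biases start at zero.
W1 = [[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
b1 = [0.0] * n_hidden
W2 = [random.uniform(-1, 1) for _ in range(n_hidden)]
b2 = 0.0
lr = 0.5  # learning rate (arbitrary choice)

def forward(x):
    # Forward propagation: weights, bias, activation, layer by layer.
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W1, b1)]
    y_hat = sigmoid(sum(w * a for w, a in zip(W2, hidden)) + b2)
    return hidden, y_hat

def mse():
    # Measure the error: mean squared error over the whole data set.
    return sum((forward(x)[1] - y) ** 2 for x, y in zip(X, Y)) / len(X)

initial_loss = mse()
for _ in range(5000):  # repeat the steps
    # Backpropagation: accumulate gradients over the whole batch.
    gW1 = [[0.0] * n_in for _ in range(n_hidden)]
    gb1 = [0.0] * n_hidden
    gW2 = [0.0] * n_hidden
    gb2 = 0.0
    for x, y in zip(X, Y):
        hidden, y_hat = forward(x)
        # dL/dz at the output, for L = mean((y_hat - y)^2) and a sigmoid output.
        d_out = 2.0 * (y_hat - y) * y_hat * (1.0 - y_hat) / len(X)
        gb2 += d_out
        for j in range(n_hidden):
            gW2[j] += d_out * hidden[j]
            # Chain rule back through W2 and the hidden sigmoid.
            d_hid = d_out * W2[j] * hidden[j] * (1.0 - hidden[j])
            gb1[j] += d_hid
            for i in range(n_in):
                gW1[j][i] += d_hid * x[i]
    # Gradient descent: move every parameter against its gradient.
    for j in range(n_hidden):
        W2[j] -= lr * gW2[j]
        b1[j] -= lr * gb1[j]
        for i in range(n_in):
            W1[j][i] -= lr * gW1[j][i]
    b2 -= lr * gb2

final_loss = mse()
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

Depending on the random initialization, a network this small can settle in a poor local minimum on XOR, which illustrates the point that gradient descent is not guaranteed to find the optimal weights.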

Vocabulary

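The input layer: The features the network receives for each example (what the machine always knows). Ex: The banking behavior of a customer.

The hidden layer: The layer(s) between the input and output layers, where the network combines the inputs through weights, biases, and activation functions to learn intermediate features.

The output layer: What the machine will predict. Ex: Whether or not the customer will quit within the next 6 months.

Node/Neuron: A unit that holds a single number (its activation). Represented by a circle in the image.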

Gradient descent: The optimization algorithm that updates the weights of the connections in the direction that reduces the error (the negative gradient of the loss), so the model's predictions become more accurate with each iteration.

Weights: The values the model updates after every iteration to become more accurate. They are represented by the connections between neurons, and each connection has its own weight.
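A minimal sketch of the gradient descent update $w \leftarrow w - \eta \, \frac{\partial L}{\partial w}$ on a single weight. The toy loss $L(w) = (w - 3)^2$ and the learning rate $\eta = 0.1$ are arbitrary choices for illustration.

```python
def loss(w):
    # Toy loss with its minimum at w = 3 (arbitrary, for illustration).
    return (w - 3.0) ** 2

def grad(w):
    # Derivative of the toy loss: dL/dw = 2 (w - 3).
    return 2.0 * (w - 3.0)

w = 0.0              # starting weight
learning_rate = 0.1  # step size, eta (arbitrary)
for _ in range(100):
    w -= learning_rate * grad(w)  # step against the gradient

print(w, loss(w))  # w ends up very close to 3
```

Here each step shrinks the distance to the minimum by a factor of $1 - 2\eta = 0.8$. A learning rate above $1$ would make $|1 - 2\eta| > 1$, and the updates would overshoot and diverge.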