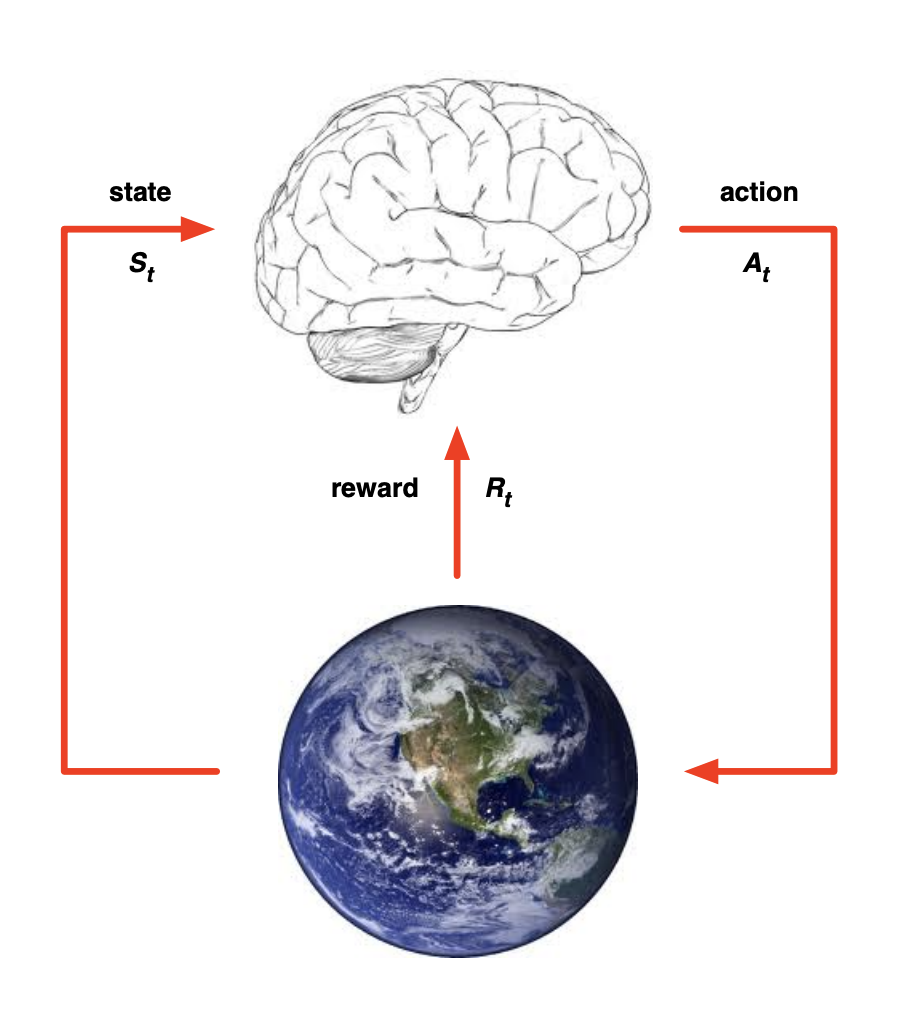

History and State

Definition

The history is the sequence of observations, actions, and rewards: $H_t = O_1, R_1, A_1, \ldots, A_{t-1}, O_t, R_t$

- i.e. all observable variables up to time $t$

- i.e. the sensorimotor stream of a robot or embodied agent

State is the information used to determine what happens next.

Formally, state is a function of the history: $S_t = f(H_t)$
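As a minimal sketch of these two definitions, the history can be stored as a list of steps and a state computed by any function over that list. The names `Step`, `History`, and `last_observation_state` are hypothetical and chosen only for illustration; using the most recent observation as the state is just one possible choice of function.

```python
from dataclasses import dataclass
from typing import Any, List, Optional


@dataclass(frozen=True)
class Step:
    """One time step of the history (hypothetical container)."""
    observation: Any
    reward: float
    action: Optional[Any] = None  # None if the agent has not yet acted at this step


History = List[Step]


def last_observation_state(history: History) -> Any:
    """One possible state function: keep only the most recent observation."""
    return history[-1].observation


# A two-step history: the agent saw a light, pressed a lever, then heard a bell.
history: History = [
    Step(observation="light", reward=0.0, action="press"),
    Step(observation="bell", reward=1.0),
]
state = last_observation_state(history)
```

Any other function of `history` would be an equally valid state under this definition; whether it is a useful one is a separate question.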

Information State / Markov State

An information state (a.k.a. Markov state) contains all useful information from the history.

- “The future is independent of the past given the present”
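Stated formally, and assuming the usual notation in which $S_t$ is the state at time $t$ and $H_{1:t}$ is the history up to time $t$ (the formula itself is missing from this section), a state $S_t$ is Markov if and only if

$$\mathbb{P}[S_{t+1} \mid S_t] = \mathbb{P}[S_{t+1} \mid S_1, \ldots, S_t]$$

Equivalently, the state screens off the past from the future:

$$H_{1:t} \rightarrow S_t \rightarrow H_{t+1:\infty}$$

Once such a state is known, the history can be discarded, because the state is a sufficient statistic of the future.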

Environment State

The environment state is the environment’s private representation

- i.e. whatever data the environment uses to pick the next observation/reward

The environment state is not usually visible to the agent. Even if it is visible, it may contain irrelevant information.

Agent State

The agent state is the agent’s internal representation

- i.e. whatever information the agent uses to pick the next action

- i.e. it is the information used by reinforcement learning algorithms

It can be any function of history: $S^a_t = f(H_t)$

What we think happens next depends on our representation of state. Our job is to build a state that is useful.
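To illustrate how the choice of representation changes what the agent "sees", here is a small sketch that builds three different agent states from the same observation sequence. The function names and the example observations are hypothetical, not taken from the source.

```python
from collections import Counter
from typing import List, Tuple

# The same stream of observations, summarized in three different ways.
observations: List[str] = ["light", "light", "lever", "bell"]


def last_k_state(obs: List[str], k: int = 3) -> Tuple[str, ...]:
    """Agent state = the last k observations, in order."""
    return tuple(obs[-k:])


def count_state(obs: List[str]) -> Tuple[Tuple[str, int], ...]:
    """Agent state = how often each observation occurred (order is discarded)."""
    return tuple(sorted(Counter(obs).items()))


def full_history_state(obs: List[str]) -> Tuple[str, ...]:
    """Agent state = the complete observation sequence."""
    return tuple(obs)


recent = last_k_state(observations)
counts = count_state(observations)
everything = full_history_state(observations)
```

An agent using `recent` and an agent using `counts` would treat different past situations as "the same", and so could reasonably make different predictions about what happens next.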

Fully Observable Environments

This is the best case.

Full observability: agent directly observes environment state

- Agent state = environment state = information state

- Formally, this is a Markov Decision Process
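A minimal sketch of full observability, assuming a toy one-dimensional corridor: the observation returned to the agent is exactly the environment's internal state. The class `CorridorEnv` and its `step` signature are hypothetical and do not follow any particular library's API.

```python
from typing import Tuple


class CorridorEnv:
    """Toy corridor with positions 0..length-1; reward 1.0 at the right end."""

    def __init__(self, length: int = 5) -> None:
        self.length = length
        self.position = 0  # the environment state

    def step(self, action: int) -> Tuple[int, float]:
        """Move by action (-1 for left, +1 for right); return (observation, reward)."""
        # Clamp the new position so the agent cannot leave the corridor.
        self.position = min(max(self.position + action, 0), self.length - 1)
        reward = 1.0 if self.position == self.length - 1 else 0.0
        # Full observability: the observation is the environment state itself.
        observation = self.position
        return observation, reward


env = CorridorEnv(length=3)
obs1, reward1 = env.step(+1)
obs2, reward2 = env.step(+1)
```

Because the next position and reward here depend only on the current position and action, the observed position is also a Markov state.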

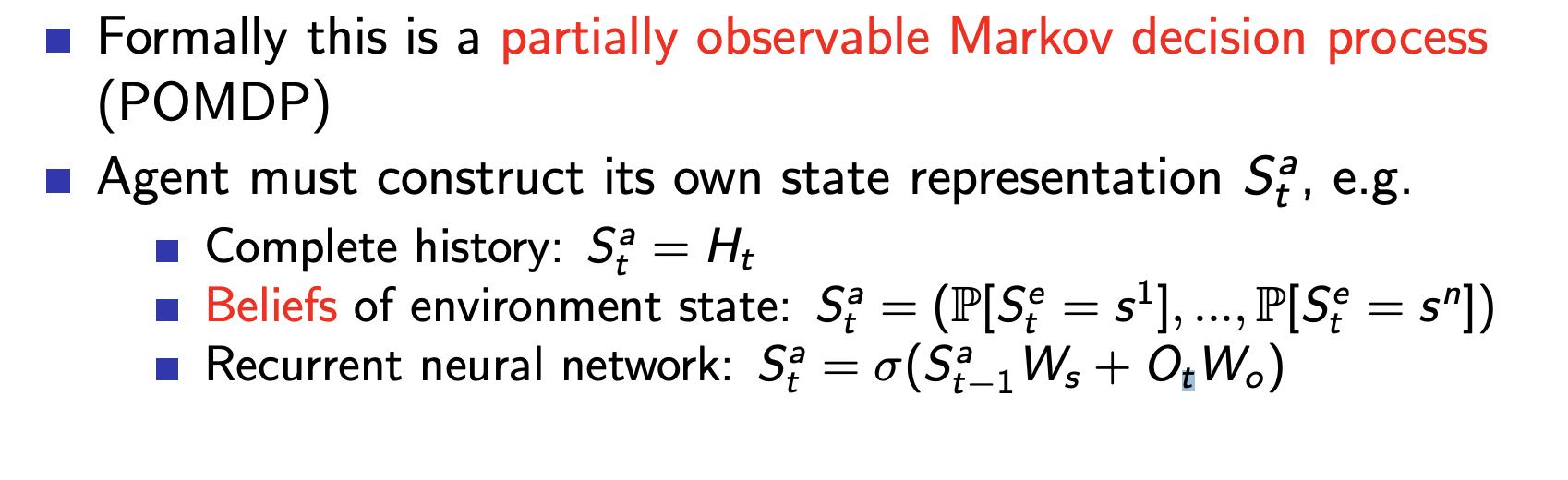

Partially Observable Environments

These are more difficult scenarios.

Partial observability: agent indirectly observes environment:

- A robot with camera vision isn’t told its absolute location

- A trading agent only observes current prices

- A poker playing agent only observes public cards

In this case, agent state ≠ environment state
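A minimal sketch of partial observability, assuming a toy corridor in which the agent is told only whether it is touching a wall, not where it is. The class `PartialCorridorEnv` is hypothetical and written only for illustration.

```python
from typing import List, Tuple


class PartialCorridorEnv:
    """Toy corridor whose true position is hidden from the agent."""

    def __init__(self, length: int = 5) -> None:
        self.length = length
        self.position = 0  # environment state, never returned to the agent

    def step(self, action: int) -> Tuple[bool, float]:
        """Move by action (-1 or +1); return (observation, reward)."""
        self.position = min(max(self.position + action, 0), self.length - 1)
        reward = 1.0 if self.position == self.length - 1 else 0.0
        # Partial observability: the agent only learns whether it is at a wall.
        at_wall = self.position in (0, self.length - 1)
        return at_wall, reward


env = PartialCorridorEnv(length=5)
observations: List[bool] = [env.step(+1)[0] for _ in range(3)]
```

After these three steps the environment has passed through three different positions, yet every observation is identical. A single observation therefore cannot serve as the state, and the agent has to construct its own state from the history.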