Unsupervised Environment Design

Unsupervised environment design refers to the process of creating an environment for artificial intelligence (AI) systems to operate in without explicit guidance or supervision.

- The AI system is allowed to explore and learn from the environment on its own, without any predefined goals or objectives.

The goal of UED is to create an environment that is rich in complexity and variability, so the AI can learn to become more adaptable and solve a wide range of tasks.

This is in contrast to supervised learning, where the AI system is trained on a labeled dataset with specific inputs and corresponding outputs. In unsupervised environment design, the AI system is provided with an environment and is expected to learn from it by observing and interacting with it.

RL Environment

This got me confused. Like does Chess have a lot of different environments, or does it simply have one single environment?

- States = specific configurations or situations that the agent may encounter within an environment

- Environments = a broader set of factors that can influence the agent’s behavior and performance. You have free parameters to set up the environment.

Environment is related to the setup conditions.
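To make the state/environment split concrete, here is a minimal sketch using a made-up grid world (`GridParams` and `all_states` are hypothetical names, not from any library). The parameters are fixed once at setup, while the states are everything the agent can run into inside that one setup. Read this way, the board positions in the chess question would be states, and the rules and starting setup would be the environment.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class GridParams:
    """Free parameters: chosen once, before an episode starts (hypothetical example)."""
    width: int
    height: int
    goal: Tuple[int, int]


def all_states(params: GridParams) -> List[Tuple[int, int]]:
    """States: every agent position that can occur inside one fixed environment."""
    return [(x, y) for x in range(params.width) for y in range(params.height)]


small = GridParams(width=2, height=2, goal=(1, 1))
large = GridParams(width=3, height=4, goal=(2, 3))

# Two environments, each with its own set of states.
print(len(all_states(small)), len(all_states(large)))
```

Changing `GridParams` gives a different environment; moving the agent around only changes the state.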

Different Environment Settings

- Video games: different levels, maps, and game modes with varying degrees of complexity, visual appearance, and gameplay mechanics.

- Robotics: different objects to grasp, surfaces to navigate, or obstacles to avoid.

- Finance: different market scenarios, such as bull and bear markets, changes in interest rates or economic policies, and different asset classes with varying levels of risk and return.

UPOMDP

UED is often formalized through an underspecified POMDP (UPOMDP), which adds an extra set $\Theta$ representing the free parameters of the environment. These parameters are incorporated into the state transition function.

A possible setting of the environment is given by some trajectory of environment parameters $\vec{\theta}$.
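A small sketch of what "free parameters in the transition function" could mean in code. Everything here is an assumption made for illustration: a one-dimensional walk where the parameter at each step is the probability that a move slips and the agent stays put. The names `transition` and `rollout` are hypothetical.

```python
import random
from typing import List


def transition(state: int, action: int, theta: float, rng: random.Random) -> int:
    """Hypothetical T(s, a, theta): with probability theta the move slips and the state is unchanged."""
    if rng.random() < theta:
        return state
    return state + action


def rollout(actions: List[int], thetas: List[float], seed: int = 0) -> List[int]:
    """Run one episode where thetas[t] is the environment parameter in effect at step t."""
    rng = random.Random(seed)
    state = 0
    visited = [state]
    for action, theta in zip(actions, thetas):
        state = transition(state, action, theta, rng)
        visited.append(state)
    return visited


actions = [1, 1, 1, 1]
print(rollout(actions, thetas=[0.0, 0.0, 0.0, 0.0]))  # never slips: [0, 1, 2, 3, 4]
print(rollout(actions, thetas=[1.0, 1.0, 1.0, 1.0]))  # always slips: [0, 0, 0, 0, 0]
```

The same agent actions lead to different state sequences depending on the parameter trajectory, which is the sense in which one parameter trajectory picks out one concrete environment.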

Example to make this more concrete

Suppose we wish to train a robot in simulation to pick up objects from a bin in a real-world warehouse. There are many possible configurations of objects, including objects being stacked on top of each other. We may not know a priori the typical arrangement of the objects, but can naturally describe the simulated environment as having a distribution over the object positions. These can be specified with the parameters $\vec{\theta}$.

The state captures the information about the environment, but the

Formalization

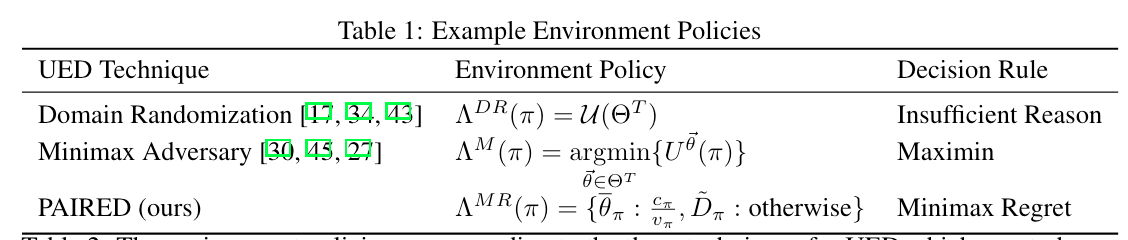

So to generate the different environments, we define an environment policy

$$\Lambda : \Pi \to \Delta(\Theta^T)$$

where $\Pi$ is the set of possible policies and $\Theta^T$ is the set of possible sequences of environment parameters.
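As a rough sketch of the shape of an environment policy, the code below takes an agent policy and returns something you can sample parameter sequences from. It uses the simplest choice I know of, usually called domain randomization, which ignores the agent policy entirely and samples each parameter uniformly. The type aliases, the `[0, 1)` parameter range, and the horizon of 3 are all assumptions made for the example.

```python
import random
from typing import Callable, List

# Hypothetical stand-ins: an agent policy maps an observation to an action, and a
# sampler plays the role of a distribution over sequences of environment parameters.
Policy = Callable[[int], int]
ParamSampler = Callable[[random.Random], List[float]]


def domain_randomization(policy: Policy, horizon: int = 3) -> ParamSampler:
    """Environment policy that ignores the agent policy and samples uniformly from [0, 1)."""
    def sample(rng: random.Random) -> List[float]:
        return [rng.random() for _ in range(horizon)]
    return sample


def always_right(observation: int) -> int:
    """Toy agent policy used only to show the call signature."""
    return 1


sampler = domain_randomization(always_right)
thetas = sampler(random.Random(0))
print(thetas)  # one sampled sequence of environment parameters
```

An environment policy that actually looks at the agent policy, for example to pick parameters the agent currently struggles with, would have the same signature but use `policy` inside `sample`.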