Masked Autoencoders Are Scalable Vision Learners (MAE)

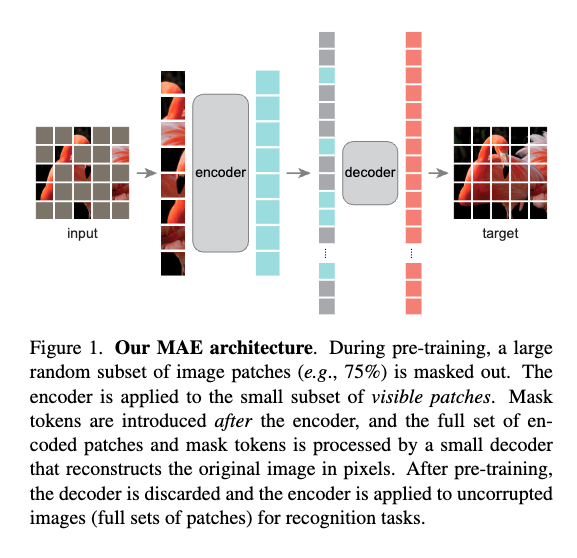

The idea is actually very basic: take an autoencoder architecture, mask a large part of the input image, and force the autoencoder to reconstruct the original image.

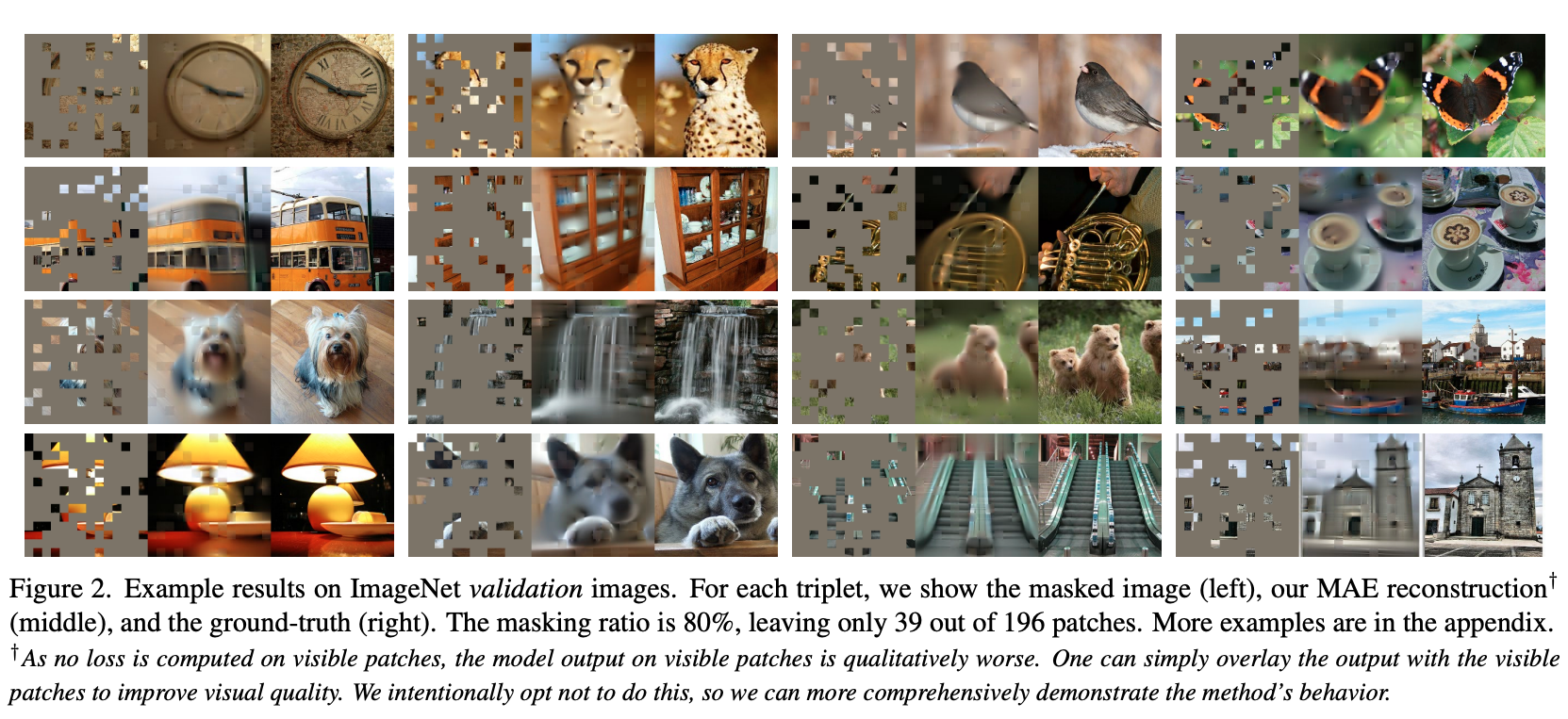

- In these results, the reconstructions are still quite blurry, because the model effectively learns the mean of the plausible completions
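
The "learns the mean" remark can be made precise under one assumption: that the reconstruction loss is a pixel-wise mean squared error on the masked patches. The symbols below are introduced only for illustration: $x_v$ is the set of visible patches, $x_m$ the true masked pixels, and $\hat{x}$ a candidate prediction.

$$
\hat{x}^{*}(x_v) \;=\; \arg\min_{\hat{x}} \; \mathbb{E}\!\left[\, \lVert x_m - \hat{x} \rVert_2^2 \;\middle|\; x_v \right] \;=\; \mathbb{E}\!\left[\, x_m \mid x_v \right]
$$

So when several sharp completions are equally plausible given the visible patches, the loss-minimizing output is their average, and an average of sharp images looks blurry.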

The masked autoencoding idea did not originate in this paper; it was popularized by BERT in 2018.

How can masked autoencoding work so well without diffusion?

MAEs don’t need to generate an entire image from pure noise (as diffusion models do).

They start with a partially observed image: a small set of visible patches already gives a huge amount of structure (object shapes, colors, layout).

The model’s job is to fill in the missing patches so the whole image is coherent, which is a much lower-entropy task than unconditional generation.

The paper reports good scaling properties: larger encoders such as ViT-Large and ViT-Huge keep benefiting from MAE pre-training.

“we mask random patches of the input image and reconstruct the missing pixels”
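
A minimal sketch of the random patch masking described in the quote, assuming a 75% mask ratio and a 224x224 image cut into 16x16 patches. The function name `random_masking` and its parameters are hypothetical, chosen for illustration; this is not the authors' code.

```python
import random

def random_masking(num_patches, mask_ratio=0.75, seed=None):
    """Split patch indices into visible and masked sets via a random shuffle."""
    rng = random.Random(seed)
    ids = list(range(num_patches))
    rng.shuffle(ids)                      # random permutation of patch indices
    num_keep = int(num_patches * (1 - mask_ratio))
    keep_ids = sorted(ids[:num_keep])     # patches the encoder will see
    mask_ids = sorted(ids[num_keep:])     # patches the model must reconstruct
    return keep_ids, mask_ids

# Assumed sizes: 224x224 image, 16x16 patches -> 14 * 14 = 196 patches
keep_ids, mask_ids = random_masking(196, mask_ratio=0.75, seed=0)
print(len(keep_ids), len(mask_ids))       # prints "49 147"
```

Shuffling and slicing guarantees an exact mask ratio per image, rather than masking each patch independently with some probability.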

They found that the MAE encoder does better without mask tokens. Only the decoder has access to mask tokens:

“(c) Mask token. An encoder without mask tokens is more accurate and faster” (Masked Autoencoders Are Scalable Vision Learners, p.5)
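
A rough back-of-the-envelope sketch of why dropping mask tokens from the encoder helps speed. It only counts query-key pairs in one self-attention layer, and it assumes a 224x224 image, 16x16 patches, and a 75% mask ratio. The helper `attention_pairs` is hypothetical, and the real speedup will differ because other layers scale linearly with token count.

```python
def attention_pairs(num_tokens):
    """Number of query-key pairs in one full self-attention layer."""
    return num_tokens * num_tokens

num_patches = (224 // 16) ** 2         # 196 patches (assumed ViT-style patching)
num_visible = num_patches // 4         # 25% visible at a 75% mask ratio -> 49

full = attention_pairs(num_patches)            # encoder that also sees mask tokens
visible_only = attention_pairs(num_visible)    # encoder that sees visible patches only
print(full, visible_only, full // visible_only)  # prints "38416 2401 16"
```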

Is there a single mask token, or multiple mask tokens, one for each area that can be masked?

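There is a single learned mask-token vector (one set of parameters), and it is copied into every masked position. The copies are identical, but the positional embedding added to each one makes them unique and tells the decoder which patch to reconstruct.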
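
A small sketch of how the decoder input could be assembled from one shared mask token plus positional embeddings. All names (`build_decoder_input`, `mask_token`, `pos_embed`) and the tiny sizes are assumptions for illustration. It uses plain Python lists instead of tensors and skips details such as projecting encoder outputs to the decoder width.

```python
import random

def build_decoder_input(encoded_visible, keep_ids, num_patches, mask_token, pos_embed):
    """Put encoder outputs at visible positions and the shared mask token
    everywhere else, then add a per-position embedding to every token."""
    visible = dict(zip(keep_ids, encoded_visible))
    tokens = []
    for i in range(num_patches):
        base = visible.get(i, mask_token)   # same vector for every masked slot
        tokens.append([b + p for b, p in zip(base, pos_embed[i])])
    return tokens

dim, num_patches = 4, 6                     # toy sizes, assumed for the demo
rng = random.Random(0)
mask_token = [rng.gauss(0, 1) for _ in range(dim)]   # one shared vector
pos_embed = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(num_patches)]
keep_ids = [1, 4]                           # indices of the visible patches
encoded_visible = [[rng.gauss(0, 1) for _ in range(dim)] for _ in keep_ids]

decoder_in = build_decoder_input(encoded_visible, keep_ids, num_patches,
                                 mask_token, pos_embed)
# Positions 0 and 2 are both masked: same mask token, different position term
print(decoder_in[0] != decoder_in[2])
```

Without the positional term, every masked slot would present the exact same input vector to the decoder, so it could not tell which patch each slot stands for.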