Generative Model

GANs and diffusion models can both generate data. So can autoregressive language models such as GPT-3.

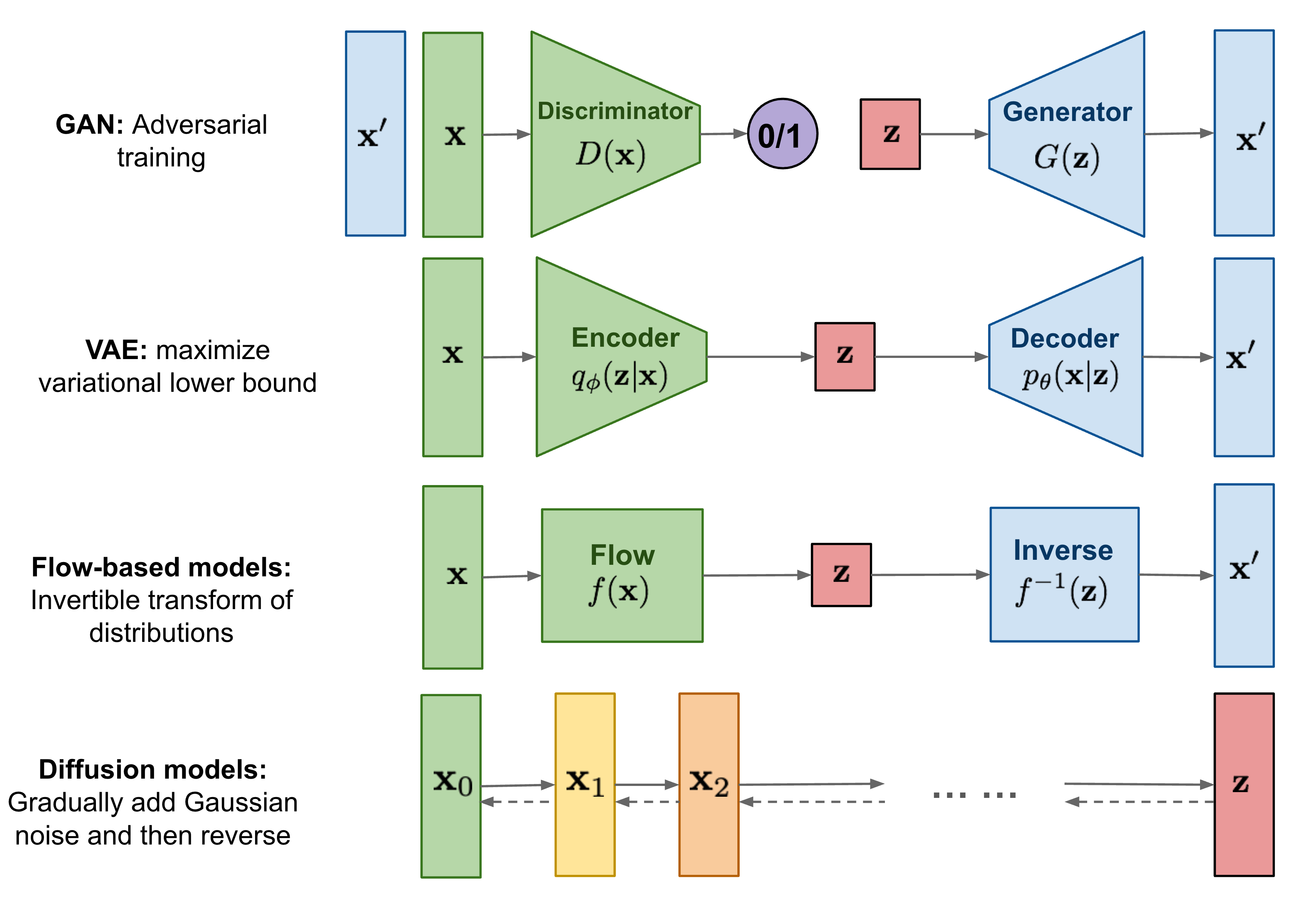

Types of generative models (source):

- Likelihood-based models: approximate the probability distribution p(x). Ex:

- Implicit / Score-Based Models: do not model p(x) explicitly, or use alternative objectives to generate samples. Ex:

- Generative Adversarial Network (GAN):

- Diffusion Models:

- Energy-Based Model (EBM):
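To make the explicit vs. implicit split concrete for myself, here is a minimal toy sketch (not how any real GAN or diffusion model is built). `GaussianModel` and `ImplicitSampler` are hypothetical names I made up: the first exposes a density you can evaluate, the second only pushes noise through an arbitrary hand-picked function and gives you samples.

```python
import math
import random


class GaussianModel:
    """Likelihood-based toy model: an explicit 1-D Gaussian density."""

    def __init__(self, mean: float, std: float):
        self.mean = mean
        self.std = std

    def log_prob(self, x: float) -> float:
        # Closed-form log density of N(mean, std^2).
        z = (x - self.mean) / self.std
        return -0.5 * z * z - math.log(self.std) - 0.5 * math.log(2 * math.pi)

    def sample(self, rng: random.Random) -> float:
        return rng.gauss(self.mean, self.std)


class ImplicitSampler:
    """Implicit toy model: maps latent noise to a sample, like a GAN generator.

    It can produce samples, but it has no method for evaluating p(x).
    """

    def sample(self, rng: random.Random) -> float:
        z = rng.gauss(0.0, 1.0)  # latent noise
        # Arbitrary transform chosen for illustration only (an assumption).
        return math.tanh(2.0 * z) + 0.1 * z * z


rng = random.Random(0)
explicit = GaussianModel(mean=0.0, std=1.0)
implicit = ImplicitSampler()

print("explicit sample:", explicit.sample(rng))
print("explicit log p(0):", explicit.log_prob(0.0))
print("implicit sample:", implicit.sample(rng))
```

Both objects can generate, but only the first one can answer "how likely is this x?".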

I still don't fully get the difference.

The difference is not just about starting from noise and then denoising.

What is p(x)?

- p(x) is the probability density (or mass) of a data point x under your model.

- It tells you how likely your model thinks that x is.
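A small worked example of evaluating p(x), assuming the "model" is just a 1-D Gaussian fit by maximum likelihood. The data values and the helper names `fit_gaussian` and `log_p` are made up for illustration.

```python
import math


def fit_gaussian(data):
    """Maximum-likelihood fit of a 1-D Gaussian: sample mean and (biased) std."""
    n = len(data)
    mean = sum(data) / n
    var = sum((v - mean) ** 2 for v in data) / n
    return mean, math.sqrt(var)


def log_p(x, mean, std):
    """Log density of x under N(mean, std^2)."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std) - 0.5 * math.log(2 * math.pi)


data = [4.8, 5.1, 5.0, 4.9, 5.2]  # made-up training data
mean, std = fit_gaussian(data)

typical = log_p(5.0, mean, std)  # a point that looks like the training data
unusual = log_p(8.0, mean, std)  # a point far from the training data

print("log p(5.0) =", typical)
print("log p(8.0) =", unusual)
print("model finds 5.0 more likely than 8.0:", typical > unusual)
```

Note that log p(5.0) comes out positive here, so the density itself is above 1. That is fine for a density (it only has to integrate to 1), whereas a probability mass can never exceed 1.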

Does this matter anymore?

Can't you just slap a transformer on it and feed it data? The architecture is becoming standardized (transformers), but the generative modeling paradigm (diffusion vs. autoregressive vs. GAN) still shapes what the model does and how it learns.

- https://lilianweng.github.io/posts/2021-07-11-diffusion-models/

- https://yang-song.net/blog/2021/score/

- From Lilian Weng's blog, it seems that all of these approaches are really similar.

Generative Video Models

Do generative video models understand physics?

- https://arxiv.org/pdf/2501.09038 this paper argues NO

The models are just learning to correlate frames; they have no real understanding of the world's physics.