Normalizing Flow

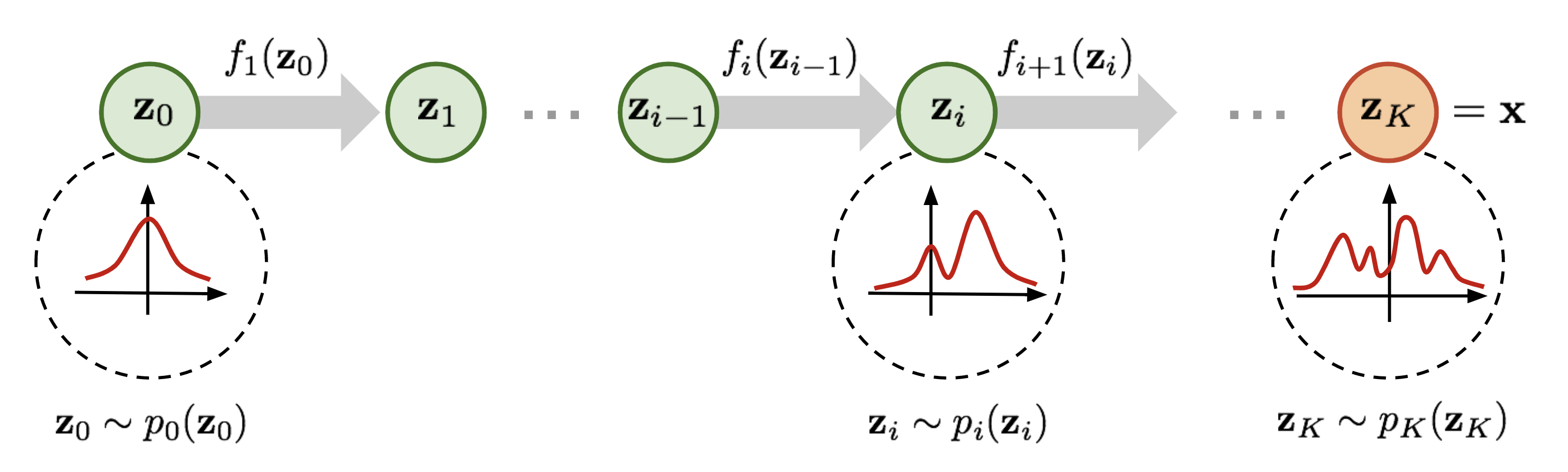

A normalizing flow transforms a simple distribution (generally a unit Gaussian) into a complex, data-like distribution using a sequence of invertible and differentiable functions.

This is an idea that I saw Billy Zheng write about https://hongruizheng.com/2020/03/13/normalizing-flow.html

Resources

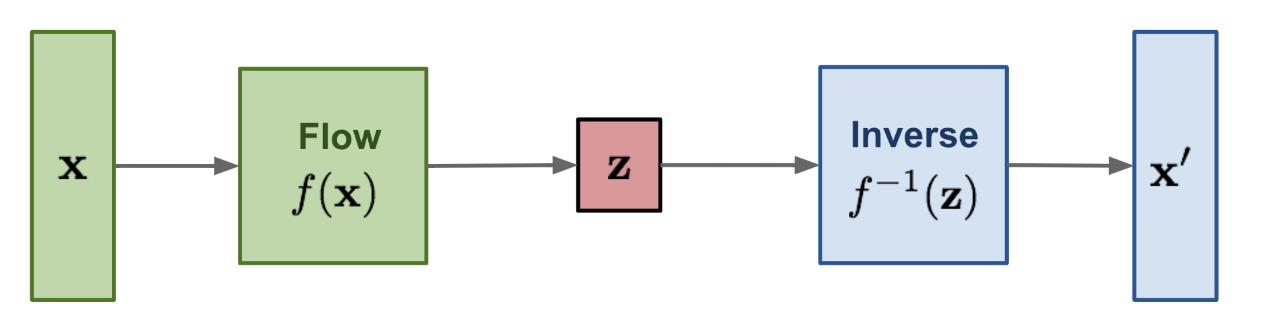

Normalizing flow learns an invertible transformation between data and latent variables:

$$x = f(z), \qquad z = f^{-1}(x)$$

- $x$ is a data sample

- $z$ is a latent variable sampled from a simple distribution

You can't just have $z$ be any learned encoding of $x$:

- that would basically be an Autoencoder

The function $f$ in normalizing flows is perfectly invertible. In normalizing flows, we care about density estimation, not reconstruction. The loss is based on the log-likelihood of the data under the model (more below).

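We can write this in terms of probability density functions (this comes from the change-of-variables formula in probability):

$$p_X(x) = p_Z\big(f^{-1}(x)\big)\,\left|\det\left(\frac{\partial f^{-1}(x)}{\partial x}\right)\right|$$

(I don't understand where $\det\left(\frac{\partial f^{-1}}{\partial x}\right)$ comes from?)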
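One way to see where the determinant term comes from, sketched in 1-D and assuming $f$ is smooth and invertible: probability mass has to be preserved when changing variables, so a small interval around $x$ must hold the same mass as the matching interval around $z = f^{-1}(x)$.

```latex
p_X(x)\,|dx| = p_Z(z)\,|dz|
\quad\Longrightarrow\quad
p_X(x) = p_Z\big(f^{-1}(x)\big)\,\left|\frac{d f^{-1}(x)}{dx}\right|
```

In $D$ dimensions the scalar derivative becomes the Jacobian matrix of $f^{-1}$, and the absolute value of its determinant plays the same role: it measures how much a small volume around $x$ shrinks or stretches when mapped back to $z$.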

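- In training, data flows from $x \to z$: use data $x$ and apply the inverse flow $z = f^{-1}(x)$. We minimize the negative log-likelihood over the parameters $\theta$ of $f$. Compute the log-likelihood using the change-of-variables formula: $\log p_X(x) = \log p_Z\big(f^{-1}(x)\big) + \log\left|\det\left(\frac{\partial f^{-1}(x)}{\partial x}\right)\right|$

- Notice the magic: for sampling below, we can then use $f$ directly! All thanks to the fact that $f$ is invertible.

- In sampling / generation, data flows from $z \to x$: sample $z \sim p_Z(z)$ from the base distribution, and apply the forward flow: $x = f(z)$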
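The two directions can be sketched with a toy flow. This is only an illustration: the 1-D affine map `x = A * z + B` and the names `forward`, `inverse`, `log_prob`, and `sample` are assumptions made for this example, not part of any particular library.

```python
import math
import random

# Hypothetical 1-D flow for illustration: x = f(z) = A * z + B, with A != 0.
A, B = 2.0, 3.0

def forward(z):
    """Sampling direction, z -> x."""
    return A * z + B

def inverse(x):
    """Training direction, x -> z."""
    return (x - B) / A

def log_standard_normal(z):
    """Log-density of the unit Gaussian base distribution p_Z."""
    return -0.5 * (z * z + math.log(2.0 * math.pi))

def log_prob(x):
    """log p_X(x) = log p_Z(f^{-1}(x)) + log |d f^{-1}(x) / dx|."""
    z = inverse(x)
    log_det_inverse = -math.log(abs(A))  # d f^{-1}/dx = 1 / A
    return log_standard_normal(z) + log_det_inverse

def sample(rng):
    """Draw z from the base distribution, then push it through f."""
    return forward(rng.gauss(0.0, 1.0))

def log_normal(x, mean, std):
    """Closed-form log-density of N(mean, std^2), used only as a sanity check."""
    return (-0.5 * ((x - mean) / std) ** 2
            - math.log(std) - 0.5 * math.log(2.0 * math.pi))
```

For this toy flow the pushed-forward distribution is just N(B, A²), so `log_prob(x)` should agree with `log_normal(x, B, abs(A))`, which makes it a convenient check of the formula.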

Difference with VAE?

It’s how we formulate the loss. In VAE, the encoder and decoder are separate networks. Also:

- Normalizing flow is deterministic: no randomness is added when transforming between $x$ and $z$. It’s exact, we know what happens.

- VAE is stochastic: it uses a stochastic encoder that samples $z$ from a learned distribution $q_\phi(z \mid x)$. It outputs a distribution.

So it seems that they both model Gaussians:

- In flows, the Gaussian is transformed through exact, invertible functions to match the data.

- In VAEs, the model learns to approximate the mapping between data and latent Gaussian through separate encoder/decoder networks.

Sampling:

We apply a chain of invertible transformations:

$$x = f_K \circ f_{K-1} \circ \dots \circ f_1(z)$$

- $z$: latent variable sampled from a standard Gaussian

- Each $f_i$: an invertible transformation (e.g., affine coupling layer)

- Output $x$: a realistic-looking data sample
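A rough sketch of chaining, assuming each layer is a hypothetical 1-D affine map given as a `(scale, shift)` pair. The point it tries to show is that the inverse walks the layers in reverse order and that the log-determinants of the layers simply add up.

```python
import math

# Each hypothetical layer is a 1-D affine map f_i(h) = scale * h + shift.
LAYERS = [(2.0, 1.0), (0.5, -3.0), (-1.5, 0.25)]

def flow_forward(z, layers=LAYERS):
    """Apply f_1, then f_2, ..., then f_K; also return log|det| of the chain."""
    h, log_det = z, 0.0
    for scale, shift in layers:
        h = scale * h + shift
        log_det += math.log(abs(scale))  # log-determinants add across layers
    return h, log_det

def flow_inverse(x, layers=LAYERS):
    """Undo the chain by inverting the layers in reverse order."""
    h, log_det = x, 0.0
    for scale, shift in reversed(layers):
        h = (h - shift) / scale
        log_det -= math.log(abs(scale))
    return h, log_det
```

Calling `flow_inverse` on the output of `flow_forward` should recover the original `z`, and the two log-determinants should cancel.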

What does $f_i$ look like? Depends on the model. They’re generally affine transforms, since those are differentiable, easy to invert, and have a cheap Jacobian determinant.

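Forward pass ($z \to x$), with the input split into two parts $z_1, z_2$:

$$x_1 = z_1, \qquad x_2 = z_2 \odot \exp\big(s(z_1)\big) + t(z_1)$$

Inverse pass ($x \to z$):

$$z_1 = x_1, \qquad z_2 = \big(x_2 - t(x_1)\big) \odot \exp\big(-s(x_1)\big)$$

- $s$ and $t$ are neural networks (often small CNNs or MLPs).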

- Same parameters are reused in both directions.
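A minimal sketch of one affine coupling layer on a 2-D input, in the RealNVP style. The functions `s` and `t` here are hypothetical hand-written stand-ins for the scale and shift networks; in a real model they would be small neural nets. They are only ever evaluated, never inverted, which is why the same parameters serve both directions.

```python
import math

# Hypothetical stand-ins for the scale and shift networks.
def s(h):
    return math.tanh(0.8 * h)

def t(h):
    return 0.5 * h * h - 1.0

def coupling_forward(z):
    """z -> x. The first part passes through; the second is scaled and shifted."""
    z1, z2 = z
    x1 = z1
    x2 = z2 * math.exp(s(z1)) + t(z1)
    log_det = s(z1)  # triangular Jacobian, so log|det| is just the scale output
    return (x1, x2), log_det

def coupling_inverse(x):
    """x -> z, reusing the same s and t."""
    x1, x2 = x
    z1 = x1
    z2 = (x2 - t(x1)) * math.exp(-s(x1))
    log_det = -s(x1)
    return (z1, z2), log_det
```

Because `x1` equals `z1`, the inverse can recompute exactly the same scale and shift that the forward pass used, so the round trip is exact up to floating-point error.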

How Weight Updates Work in Flow-Based Models

Training is done via maximum likelihood estimation (MLE) using the change-of-variables formula.

Change of Variables

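Given $z \sim p_Z(z)$ and $x = f(z)$:

$$p_X(x) = p_Z\big(f^{-1}(x)\big)\,\left|\det\left(\frac{\partial f^{-1}(x)}{\partial x}\right)\right|$$

Rewriting using the forward Jacobian:

$$\log p_X(x) = \log p_Z(z) - \log\left|\det\left(\frac{\partial f(z)}{\partial z}\right)\right|$$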
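The switch from the inverse Jacobian to the forward Jacobian can be sketched as follows, assuming $x = f(z)$ with $f$ differentiable and invertible. Differentiating the identity $f^{-1}(f(z)) = z$ with the chain rule gives:

```latex
\frac{\partial f^{-1}(x)}{\partial x}\,\frac{\partial f(z)}{\partial z} = I
\quad\Longrightarrow\quad
\det\left(\frac{\partial f^{-1}(x)}{\partial x}\right)
  = \left(\det\left(\frac{\partial f(z)}{\partial z}\right)\right)^{-1}
```

Taking the log of the absolute value turns the reciprocal into a minus sign, which is why the forward-Jacobian form subtracts the log-determinant instead of adding it.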

The fundamental difference

This loss uses a Jacobian; it’s derived from the change-of-variables formula.

- We’re not just doing $L = x - f(z)$

Training Steps

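- Inverse pass: given data $x$, compute $z = f^{-1}(x)$

- Compute the log-likelihood loss: $\mathcal{L} = -\log p_X(x) = -\log p_Z(z) - \log\left|\det\left(\frac{\partial f^{-1}(x)}{\partial x}\right)\right|$

- Backpropagate through:

  - the inverse transformations

  - the neural nets $s$ and $t$

  - the log-determinant term (structured for easy computation in RealNVP/Glow)

- Gradient descent:

  - Use Adam/SGD to update the parameters in $s$ and $t$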
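The training steps can be sketched end to end on a toy problem. Everything here is an assumption made for illustration: the flow is a single 1-D affine map `x = exp(s) * z + t` with two scalar parameters instead of neural nets, the gradients are derived by hand instead of using autograd, and plain full-batch gradient descent stands in for Adam/SGD.

```python
import math
import random

def mean_nll(data, s, t):
    """Mean negative log-likelihood of data under x = exp(s) * z + t."""
    total = 0.0
    for x in data:
        z = (x - t) * math.exp(-s)
        # -log p_Z(z) - log|dz/dx|, where dz/dx = exp(-s)
        total += 0.5 * z * z + 0.5 * math.log(2.0 * math.pi) + s
    return total / len(data)

def train_affine_flow(data, lr=0.1, steps=2000):
    """Fit the log-scale s and shift t by gradient descent on the mean NLL."""
    s, t = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        inv_scale = math.exp(-s)
        grad_s = grad_t = 0.0
        for x in data:
            z = (x - t) * inv_scale         # 1. inverse pass
            # 2. per-sample loss is 0.5*z**2 + 0.5*log(2*pi) + s
            grad_s += (1.0 - z * z) / n     # 3. d(loss)/ds, using dz/ds = -z
            grad_t += (-z * inv_scale) / n  #    d(loss)/dt, using dz/dt = -exp(-s)
        s -= lr * grad_s                    # 4. gradient descent update
        t -= lr * grad_t
    return s, t

# Toy data from a Gaussian with mean 3 and standard deviation 2 (fixed seed).
rng = random.Random(0)
data = [rng.gauss(3.0, 2.0) for _ in range(500)]
s_fit, t_fit = train_affine_flow(data)
```

For this model the maximum-likelihood solution is known in closed form: `t_fit` should approach the sample mean and `exp(s_fit)` the sample standard deviation, which gives a simple way to check that the loss and its gradients are wired up correctly.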

Normalizing flows give you an explicit representation of the density function.

Used a lot in generative modeling.