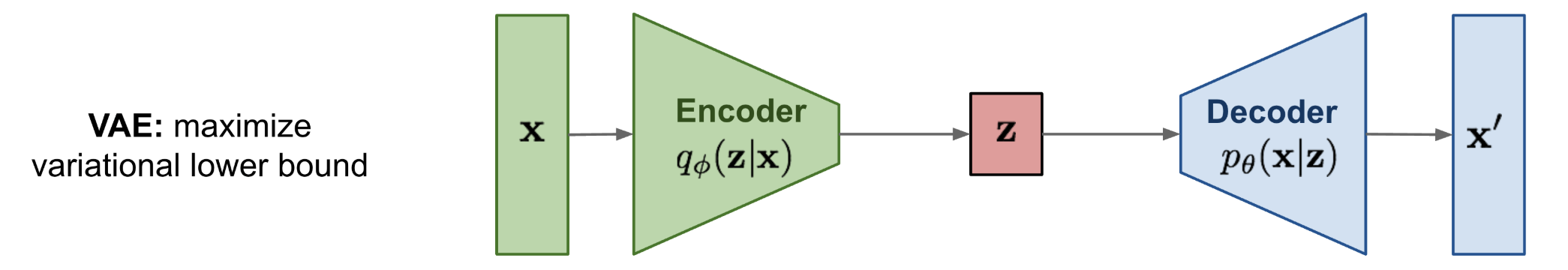

Variational Autoencoder (VAE)

Latent variable model trained with variational inference:

I got the intuition for VAEs here https://chatgpt.com/share/693a58cf-e2e4-8002-9fea-eb7fad7817b1

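- A variational autoencoder tries to map the original data distribution to a Gaussian and also to map back from that Gaussian to the original distribution. Each goal is encoded as a term in the loss function (the KL term and the reconstruction term, respectively).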

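This is a variant of the autoencoder that represents each input in its bottleneck as a probability distribution instead of a single point, which turns it into a generative model. Sampling from that distribution is not differentiable, so backpropagation cannot pass through it directly; the reparameterization trick overcomes this by moving the randomness into an external noise variable.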

Resources

It's basically an autoencoder, but we add Gaussian noise to the latent variable $z$.

Key difference:

- Regular autoencoder
  - Input → Encoder → Fixed latent representation → Decoder → Reconstruction
- VAE
  - Input → Encoder → Latent distribution → Sample from distribution (adds Gaussian noise via the reparameterization trick) → Decoder → Reconstruction
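To make the key difference concrete, here is a minimal sketch in plain Python. It assumes a hypothetical 1-D encoder with made-up parameters `W_MU` and `LOG_VAR` (illustration only, not a trained model): the regular autoencoder returns the same code every time, while the VAE draws a different $z$ on each call.

```python
import math
import random

# Hypothetical toy encoder parameters for a 1-D input (illustration only).
W_MU = 0.8      # the latent mean is W_MU * x
LOG_VAR = -1.0  # fixed log-variance of the latent distribution

def ae_encode(x: float) -> float:
    """Regular autoencoder: one fixed latent code per input."""
    return W_MU * x

def vae_encode(x: float, rng: random.Random) -> float:
    """VAE: the encoder defines N(mu, sigma^2) and z is a sample from it."""
    mu = W_MU * x
    sigma = math.exp(0.5 * LOG_VAR)
    eps = rng.gauss(0.0, 1.0)   # Gaussian noise
    return mu + sigma * eps     # reparameterized sample

rng = random.Random(0)
print(ae_encode(2.0), ae_encode(2.0))              # same code both times
print(vae_encode(2.0, rng), vae_encode(2.0, rng))  # two different samples near W_MU * 2.0
```

Averaged over many calls, the VAE samples center on the autoencoder's code `W_MU * x`; the spread is controlled by `LOG_VAR`.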

Variational autoencoders provide a principled framework for learning deep latent-variable models and corresponding inference models.

Process

Forward Pass (Encoding → Sampling → Decoding)

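- Encoder: takes input data $x$ and outputs the parameters (mean and variance) of the latent distribution $q_\phi(z \mid x)$:

  $$q_\phi(z \mid x) = \mathcal{N}\big(z;\ \mu_\phi(x),\ \operatorname{diag}(\sigma^2_\phi(x))\big)$$

- Reparameterization trick: differentiably sample the latent variable $z$:

  $$z = \mu_\phi(x) + \sigma_\phi(x) \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)$$

- Decoder: reconstruct the data from the sampled latent vector $z$:

  $$\hat{x} = g_\theta(z)$$

  where $g_\theta$ is the decoder network and $\hat{x}$ parameterizes $p_\theta(x \mid z)$ (e.g. as its mean).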
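The three forward-pass steps can be sketched in plain Python. This is a minimal sketch assuming hypothetical linear maps (`ENC_MU`, `ENC_LOGVAR`, `DEC`) in place of real neural networks, a 2-D input, and a 1-D latent. The encoder outputs a log-variance rather than a variance, a common parameterization that keeps $\sigma$ positive.

```python
import math
import random

# Hypothetical linear encoder/decoder weights: 2-D input, 1-D latent.
# A real VAE would use neural networks; linear maps keep the sketch readable.
ENC_MU = [0.5, -0.3]     # mu(x) = ENC_MU . x
ENC_LOGVAR = [0.1, 0.2]  # log sigma^2(x) = ENC_LOGVAR . x
DEC = [1.2, -0.7]        # x_hat = DEC * z (one output per input dimension)

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def encode(x):
    """Encoder q_phi(z|x): return the mean and log-variance of z."""
    return dot(ENC_MU, x), dot(ENC_LOGVAR, x)

def reparameterize(mu, log_var, eps):
    """z = mu + sigma * eps, with eps ~ N(0, 1) drawn outside this function.

    z is a deterministic function of (mu, sigma) plus external noise, which is
    what lets gradients flow back into the encoder in an autodiff framework."""
    return mu + math.exp(0.5 * log_var) * eps

def decode(z):
    """Decoder: map z to x_hat, the mean of p_theta(x|z)."""
    return [w * z for w in DEC]

def forward(x, rng):
    mu, log_var = encode(x)              # 1. encoding
    eps = rng.gauss(0.0, 1.0)            # noise from N(0, 1)
    z = reparameterize(mu, log_var, eps) # 2. sampling
    return mu, log_var, z, decode(z)     # 3. decoding

rng = random.Random(42)
mu, log_var, z, x_hat = forward([1.0, 2.0], rng)
print(mu, log_var, z, x_hat)
```

Returning `mu` and `log_var` alongside the reconstruction matters because the KL term of the loss needs them.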

Loss Function (Negative ELBO):

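Optimize the encoder parameters $\phi$ and decoder parameters $\theta$ by minimizing:

$$\mathcal{L}(\theta, \phi; x) = -\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] + D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big)$$

The first term is the reconstruction loss; the second keeps the approximate posterior close to the prior $p(z) = \mathcal{N}(0, I)$.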
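A minimal sketch of the negative ELBO for a single input, assuming a diagonal Gaussian encoder, a standard normal prior $p(z) = \mathcal{N}(0, I)$, and (as a hypothetical choice) a Gaussian decoder with fixed unit variance; many implementations use a Bernoulli decoder with binary cross-entropy instead. The KL term uses the closed form $D_{\mathrm{KL}} = \tfrac{1}{2}\sum_j(\mu_j^2 + \sigma_j^2 - \log\sigma_j^2 - 1)$, and the expectation is estimated with a single sample of $z$. The helper names are made up for this sketch.

```python
import math

def kl_diag_gaussian_to_std_normal(mu, log_var):
    """Closed-form KL( N(mu, diag(exp(log_var))) || N(0, I) )."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, log_var))

def gaussian_nll(x, x_hat, sigma=1.0):
    """-log N(x; x_hat, sigma^2 I): reconstruction term for a Gaussian decoder."""
    d = len(x)
    sq = sum((xi - xh) ** 2 for xi, xh in zip(x, x_hat))
    return 0.5 * sq / sigma**2 + d * math.log(sigma) + 0.5 * d * math.log(2 * math.pi)

def negative_elbo(x, x_hat, mu, log_var):
    """Single-sample estimate: x_hat is the decoder output for one z ~ q_phi(z|x)."""
    return gaussian_nll(x, x_hat) + kl_diag_gaussian_to_std_normal(mu, log_var)

# Usage: 2-D input, its reconstruction, and the encoder outputs for a 1-D latent.
print(negative_elbo([1.0, 0.5], [0.9, 0.4], [0.2], [-0.1]))
```

With unit decoder variance, the reconstruction term reduces to half the squared error plus a constant, which is why many VAE implementations simply use MSE for it.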

Notes from the guide

The VAE can be viewed as two coupled, but independently parameterized models:

- encoder (recognition model)

- decoder (generative model)

Motivation

We want to maximize the log-likelihood $\log p_\theta(x)$. To make this a generative process, we condition $x$ on a latent variable $z$ drawn from a known, simple distribution $p(z)$, so the model becomes a mapping from $p(z)$ to the data distribution. Otherwise it is just like an autoencoder, which is always deterministic.

We expand out $p_\theta(x)$ by marginalizing over $z$:

$$p_\theta(x) = \int p_\theta(x \mid z)\, p(z)\, dz$$

- However, this is NOT tractable: the integral has no closed-form solution because $p_\theta(x \mid z)$ is implemented as a neural net, and trying every single value of $z$ is not feasible.
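To see why the integral is the bottleneck, consider a hypothetical 1-D linear-Gaussian model, $z \sim \mathcal{N}(0, 1)$ and $x \mid z \sim \mathcal{N}(Wz, s^2)$ with made-up values `W` and `S2` $= s^2$, where $p(x) = \mathcal{N}(x; 0, W^2 + s^2)$ happens to have a closed form. The sketch estimates $p(x) = \mathbb{E}_{z \sim p(z)}[p(x \mid z)]$ by naive Monte Carlo and compares. It works here only because $z$ is 1-D and the decoder is linear; with a neural-net decoder and high-dimensional $z$, almost all prior samples explain $x$ poorly, so this estimator typically needs impractically many samples.

```python
import math
import random

def normal_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

# Hypothetical toy model: z ~ N(0, 1), x | z ~ N(W * z, S2).
W, S2 = 2.0, 0.25

def exact_marginal(x):
    """Linear-Gaussian case only: p(x) = N(x; 0, W^2 + S2) in closed form."""
    return normal_pdf(x, 0.0, W * W + S2)

def mc_marginal(x, n, rng):
    """Naive Monte Carlo: p(x) = E_{z~p(z)}[p(x|z)], estimated as (1/n) sum p(x|z_i)."""
    total = 0.0
    for _ in range(n):
        z = rng.gauss(0.0, 1.0)           # sample from the prior
        total += normal_pdf(x, W * z, S2) # evaluate the "decoder" likelihood
    return total / n

rng = random.Random(0)
print(exact_marginal(1.0), mc_marginal(1.0, 100_000, rng))
```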

I was confused about what is tractable and what is not. Breaking it down:

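- $p(z)$ is tractable: a simple prior to sample from (e.g. a unit Gaussian)

- $p_\theta(x \mid z)$ is tractable: a simple neural net that generates $x$ conditioned on $z$

- $p_\theta(x)$ is NOT tractable: we need to integrate over all $z$

- $p_\theta(z \mid x)$ is NOT tractable: Bayes' rule gives $p_\theta(z \mid x) = p_\theta(x \mid z)\, p(z) / p_\theta(x)$, and the denominator $p_\theta(x)$ is itself intractable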
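A sketch of Bayes' rule on a hypothetical 1-D linear-Gaussian toy model ($z \sim \mathcal{N}(0,1)$, $x \mid z \sim \mathcal{N}(Wz, s^2)$, with made-up `W` and `S2` $= s^2$). Computing $p(z \mid x)$ requires the normalizer $p(x)$, approximated here by a Riemann sum over a grid of $z$ values. The number of grid points grows exponentially with the dimension of $z$, which is why this approach fails for realistic latent spaces. For this model the exact posterior mean is $Wx/(W^2 + s^2)$, printed as a check.

```python
import math

def normal_pdf(x, mean, var):
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2 * math.pi * var)

# Hypothetical toy model: z ~ N(0, 1), x | z ~ N(W * z, S2).
W, S2 = 2.0, 0.25

def grid_posterior(x, lo=-6.0, hi=6.0, n=2001):
    """Bayes' rule on a 1-D grid: p(z|x) = p(x|z) p(z) / p(x).

    The normalizer p(x) is an integral over z; a grid is only feasible because z is 1-D."""
    dz = (hi - lo) / (n - 1)
    zs = [lo + i * dz for i in range(n)]
    joint = [normal_pdf(x, W * z, S2) * normal_pdf(z, 0.0, 1.0) for z in zs]
    p_x = sum(joint) * dz            # Riemann-sum estimate of p(x)
    post = [j / p_x for j in joint]  # normalized posterior density on the grid
    return zs, post, p_x

def posterior_mean(zs, post):
    dz = zs[1] - zs[0]
    return sum(z * p for z, p in zip(zs, post)) * dz

zs, post, p_x = grid_posterior(1.0)
# Closed form for this linear-Gaussian model: mean = W x / (W^2 + S2)
print(posterior_mean(zs, post), W * 1.0 / (W * W + S2))
```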

So what do we do? We approximate the intractable posterior $p_\theta(z \mid x)$ with $q_\phi(z \mid x)$ and maximize a tractable lower bound (the ELBO) on the true log-likelihood.

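Concretely, we jointly optimize $\theta$ and $\phi$ by maximizing the ELBO:

$$\mathrm{ELBO}(\theta, \phi; x) = \mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] - D_{\mathrm{KL}}\big(q_\phi(z \mid x)\,\|\,p(z)\big) \le \log p_\theta(x)$$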
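For a hypothetical 1-D linear-Gaussian toy model ($z \sim \mathcal{N}(0,1)$, $x \mid z \sim \mathcal{N}(Wz, s^2)$, with made-up `W` and `S2` $= s^2$), every quantity is available in closed form, so the sketch below checks the identity $\log p(x) = \mathrm{ELBO} + D_{\mathrm{KL}}\big(q(z)\,\|\,p(z \mid x)\big)$ numerically for a few Gaussian choices $q = \mathcal{N}(m, v)$. Because the KL term is non-negative, the ELBO is a lower bound, and it is tight exactly when $q$ equals the true posterior. The model and the values of `m` and `v` are illustrative assumptions.

```python
import math

# Hypothetical toy model: z ~ N(0, 1), x | z ~ N(W * z, S2).
W, S2 = 2.0, 0.25

def log_marginal(x):
    """Exact log p(x) = log N(x; 0, W^2 + S2) for this linear-Gaussian model."""
    var = W * W + S2
    return -0.5 * math.log(2 * math.pi * var) - 0.5 * x * x / var

def elbo(x, m, v):
    """ELBO for q(z) = N(m, v): E_q[log p(x|z)] - KL(q || p(z)), both in closed form."""
    # E_q[(x - W z)^2] = (x - W m)^2 + W^2 v
    expected_ll = -0.5 * math.log(2 * math.pi * S2) - ((x - W * m) ** 2 + W * W * v) / (2 * S2)
    kl_prior = 0.5 * (m * m + v - math.log(v) - 1.0)
    return expected_ll - kl_prior

def kl_to_posterior(x, m, v):
    """KL(q || p(z|x)) using the exact posterior N(W x / (W^2 + S2), S2 / (W^2 + S2))."""
    mp = W * x / (W * W + S2)
    vp = S2 / (W * W + S2)
    return 0.5 * (math.log(vp / v) + (v + (m - mp) ** 2) / vp - 1.0)

x = 1.0
post_m, post_v = W * x / (W * W + S2), S2 / (W * W + S2)
for m, v in [(0.0, 1.0), (0.3, 0.1), (post_m, post_v)]:
    # log p(x) = ELBO + KL(q || p(z|x)), so ELBO <= log p(x), with equality iff q is the posterior
    e = elbo(x, m, v)
    print(f"m={m:.3f} v={v:.3f}  ELBO={e:.4f}  "
          f"ELBO+gap={e + kl_to_posterior(x, m, v):.4f}  log p(x)={log_marginal(x):.4f}")
```

In a real VAE neither $\log p_\theta(x)$ nor the gap can be computed, which is exactly why the ELBO, built only from tractable pieces, is the quantity that gets optimized.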