Linear Classification

Originally, I wondered why I was learning this from CS231N, since I need to work on neural networks. However, I am realizing that Neural Networks are just stacked linear classifiers (+ Activation Functions), since each layer takes on the form (called the Score Function):

$$f(x_i, W, b) = W x_i + b$$

So this will be used as a foundation to understand more complex algorithms. You can think of the matrix $W$ as assigning a weight to each (pixel, class) pair, i.e. the importance of a particular pixel for a particular class.

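Say you want to classify an image of size $32 \times 32 \times 3$ (rolled out = $3072$) into one of $10$ possible classes. You have the following dimensions:

- $x_i$ is $[3072 \times 1]$, or more generally $[D \times 1]$

- $W$ are the parameters/weights, $[10 \times 3072]$ or more generally $[K \times D]$

- $b$ is the bias term, $[10 \times 1]$ or more generally $[K \times 1]$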
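To make the shapes concrete, here is a minimal NumPy sketch of the score function. The sizes `D = 3072` and `K = 10` and the random values are assumptions chosen for illustration, and `score` is a hypothetical helper name, not something from the course code.

```python
import numpy as np

D, K = 3072, 10                          # assumed sizes: flattened image, number of classes
rng = np.random.default_rng(0)

x_i = rng.random((D, 1))                 # one image as a column vector, [D x 1]
W = 0.01 * rng.standard_normal((K, D))   # weights, [K x D]
b = np.zeros((K, 1))                     # biases, [K x 1]

def score(x, W, b):
    """Linear score function f(x, W, b) = W x + b."""
    return W @ x + b

f = score(x_i, W, b)                     # class scores, [K x 1]
predicted_class = int(np.argmax(f))      # index of the highest-scoring class
print(f.shape, predicted_class)
```

Each row of `W` acts as a separate classifier for one class, so the whole matrix multiply evaluates all `K` classifiers at once.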

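In practice, we use the Bias Trick to simplify the above expression to $f(x_i, W) = W x_i$ by extending the vector $x_i$ with one additional dimension that always holds the constant $1$ - a default bias dimension. With the extra dimension,

- $x_i$ is now $[3073 \times 1]$ (in general $[(D+1) \times 1]$)

- $W$ is now $[10 \times 3073]$ (in general $[K \times (D+1)]$), with $b$ absorbed as its last column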

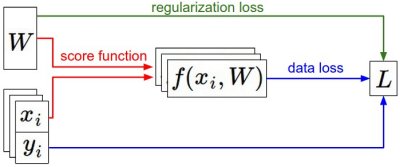
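A quick way to convince yourself that the Bias Trick changes nothing is to check it numerically. This is a small sketch with assumed sizes and random values; `x_ext` and `W_ext` are names introduced here for the extended vector and matrix.

```python
import numpy as np

D, K = 3072, 10                              # assumed sizes for illustration
rng = np.random.default_rng(0)
x_i = rng.random((D, 1))                     # [D x 1]
W = 0.01 * rng.standard_normal((K, D))       # [K x D]
b = rng.standard_normal((K, 1))              # [K x 1]

# Append a constant 1 to x_i and append b as an extra column of W.
x_ext = np.vstack([x_i, np.ones((1, 1))])    # [(D+1) x 1]
W_ext = np.hstack([W, b])                    # [K x (D+1)]

# The single matrix multiply reproduces W x + b.
same = np.allclose(W @ x_i + b, W_ext @ x_ext)
print(x_ext.shape, W_ext.shape, same)
```

The extra column of `W_ext` is multiplied by the constant 1, so it contributes exactly `b` to every score.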

Summary of the information flow

- The dataset of pairs of (x,y) is given and fixed.

- The weights start out as random numbers and can change.

- During the forward pass the score function computes class scores, stored in vector f.

- The Loss Function contains two components. The data loss measures the compatibility between the scores f and the labels y.

- The regularization loss is only a function of the weights.

- During Gradient Descent, we compute the gradient of the loss with respect to the weights (and optionally with respect to the data, if we wish) and use it to perform a parameter update.

- We want to keep updating $W$ until we find a set of weights that minimizes the loss function; we can then use those weights to make predictions.
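The flow above can be sketched end to end on a toy problem. Everything specific here is an assumption for illustration: the three-blob dataset, the softmax cross-entropy data loss, the L2 regularization, and the hyperparameters `reg` and `lr`. The notes do not commit to a particular loss, so treat this as one possible instance rather than the method.

```python
import numpy as np

rng = np.random.default_rng(0)
N, D, K = 90, 2, 3                               # toy sizes (assumed)

# Fixed dataset: three Gaussian blobs, one per class.
centers = np.array([[0.0, 3.0], [3.0, -2.0], [-3.0, -2.0]])
y = np.repeat(np.arange(K), N // K)              # labels, [N]
X = (centers[y] + 0.5 * rng.standard_normal((N, D))).T   # data, [D x N]
X = np.vstack([X, np.ones((1, N))])              # bias trick, [(D+1) x N]

W = 0.01 * rng.standard_normal((K, D + 1))       # weights start out random
reg, lr = 1e-3, 0.1                              # hypothetical hyperparameters

def loss_and_grad(W, X, y, reg):
    n = X.shape[1]
    f = W @ X                                    # forward pass: scores, [K x N]
    f = f - f.max(axis=0, keepdims=True)         # shift for numerical stability
    p = np.exp(f)
    p /= p.sum(axis=0, keepdims=True)            # softmax probabilities
    data_loss = -np.log(p[y, np.arange(n)]).mean()   # compares scores with labels
    reg_loss = reg * np.sum(W * W)               # depends on the weights only
    dscores = p.copy()
    dscores[y, np.arange(n)] -= 1.0              # gradient of data loss w.r.t. scores
    dW = dscores @ X.T / n + 2.0 * reg * W       # gradient w.r.t. the weights
    return data_loss + reg_loss, dW

losses = []
for step in range(200):
    loss, dW = loss_and_grad(W, X, y, reg)
    losses.append(loss)
    W -= lr * dW                                 # parameter update

accuracy = np.mean(np.argmax(W @ X, axis=0) == y)
print(losses[0], losses[-1], accuracy)
```

The dataset `X, y` never changes inside the loop; only `W` does, which is the point of the summary.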

Hard Cases for a Linear Classifier

- When no single line (or hyperplane) can separate the classes, e.g. when one class appears in multiple regions of space
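The classic small example of this is an XOR layout, where one class sits on two opposite corners of a square. The sketch below brute-forces a grid of candidate linear classifiers (the grid range and resolution are arbitrary choices made here) and records the best number of points any of them gets right.

```python
import itertools
import numpy as np

# XOR layout: class 1 occupies two opposite corners, class 0 the other two.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 1, 1, 0])

grid = np.linspace(-2.0, 2.0, 21)        # candidate values for w1, w2 and b
best = 0
for w1, w2, b in itertools.product(grid, repeat=3):
    pred = (X @ np.array([w1, w2]) + b > 0).astype(int)   # linear decision rule
    best = max(best, int((pred == y).sum()))

print(best)  # 3: no line classifies all four points correctly
```

The grid only samples candidates, but the limit is real: classifying all four points would require $b \le 0$, $w_1 + b > 0$, $w_2 + b > 0$ and $w_1 + w_2 + b \le 0$ at once, and adding the two middle inequalities gives $w_1 + w_2 + b > -b \ge 0$, which contradicts the last one.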