SE464: Software Design and Architectures

don’t really go to this class, but there seems some good guest lectures.

Taking notes because I have no idea what there is on the exam

Things I need to master for Final:

- The 5 SOLID principles and examples for each

- Two kinds of partitioning: functional and data partitioning

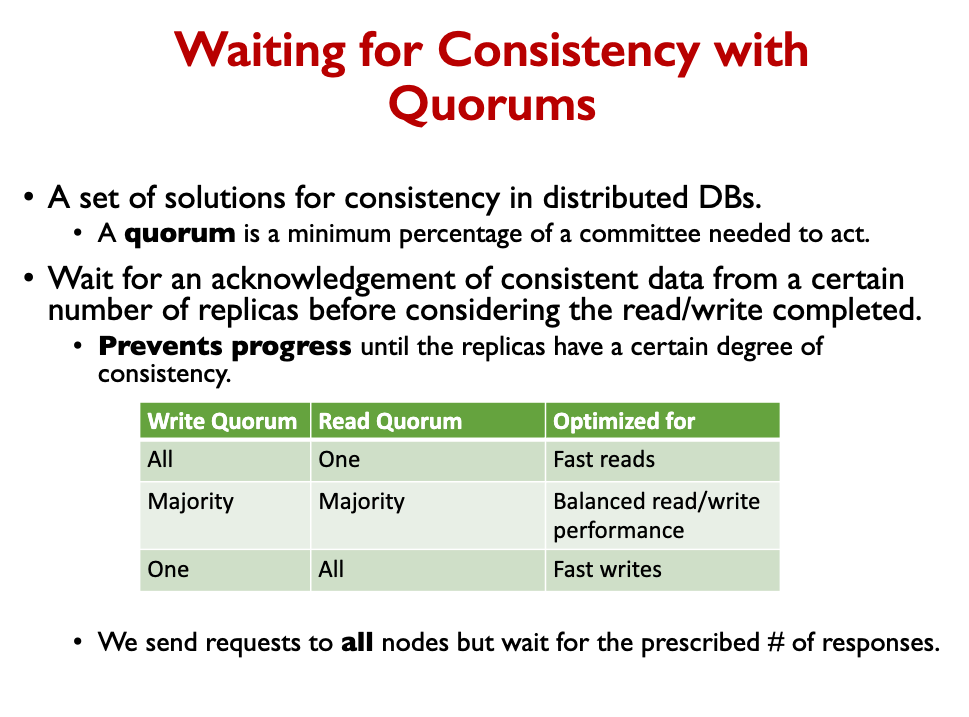

- The different quorum levels (week 4)

- What happens when DHT quorum fails?

Calculations for software architecture modelling??

My writeup

- Sharding is to remove bottleneck in writing to DB

- Read replicas is must making copies of the DB, and let them be read only

- Replication is to avoid data loss, you can replicate the entire DB or you replicate shards based on the setup, so replication is not about sharding

Notes

Codebases are exploding in size (1M to 1B lines of code for a given codebase). Software Architecture is the Key Ingredient in Managing Complexity and Risk.

What is Software Architecture?

The conceptual fabric that defines a system. All architecture is design but not all design is architecture.

Terminology: System Architecture

- Structure: Several computers, networks, databases, etc. connected together

- Analogy: Plan of city

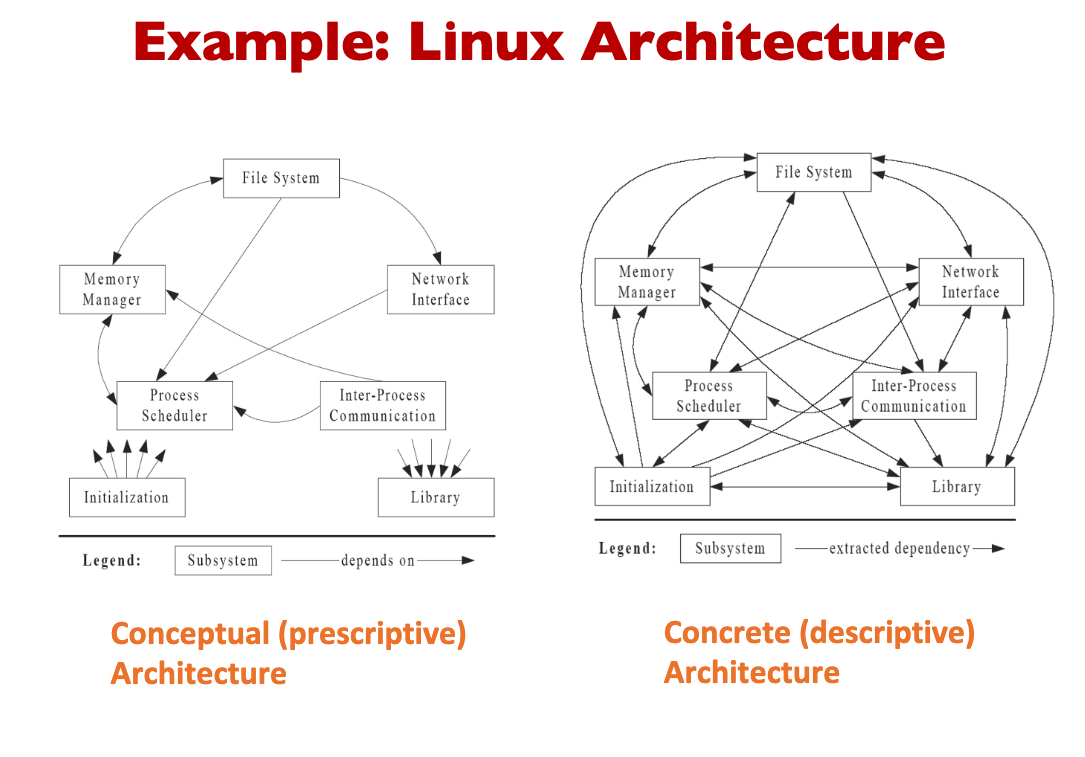

Conceptual (prescriptive) Software Architecture

- Abstract structure: Large piece of software with many parts and interconnections

- Analogy: Blueprint of house

Concrete (descriptive) Software Architecture

- Actual structure: Large piece of software with many parts and interconnections

- Analogy: Actual structure of house

Architectural Degradation

Architectural drift is the introduction of principal design decisions into a system’s descriptive architecture that

- are not included in, encompassed by, or implied by the prescriptive architecture

- but which do not violate any of the prescriptive architecture’s design decisions Architectural erosion is the introduction of architectural design decisions into a system’s descriptive architecture that violate its prescriptive architecture

Architectural Recovery

If architectural degradation is allowed to occur, one will be forced to recover the system’s architecture sooner or later.

Architectural recovery is the process of determining a software system’s architecture from its implementation-level artifacts Implementation-level artifacts can be

- Source code

- Executable files

- Java.class files

The teacher shows three kinds of web architectures:

- Logical web architecture (how the different html files are related to each other)

- Dynamic web architecture (chrome and the various get and post requests)

- Physical web architecture (the actual computers and servers talking to each other)

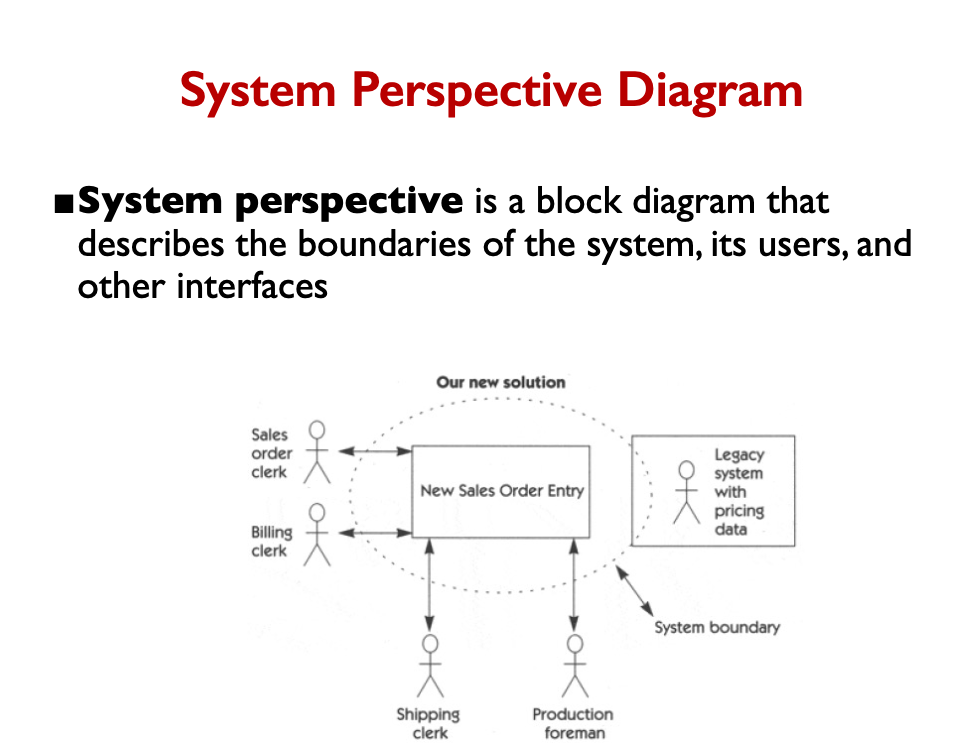

System architecture needs to express:

- The decomposition into subsystems (how the code depends on each other)

- Process interactions (dynamically how code calls each other)

- The distribution of subsystems across networked devices (where to put them)

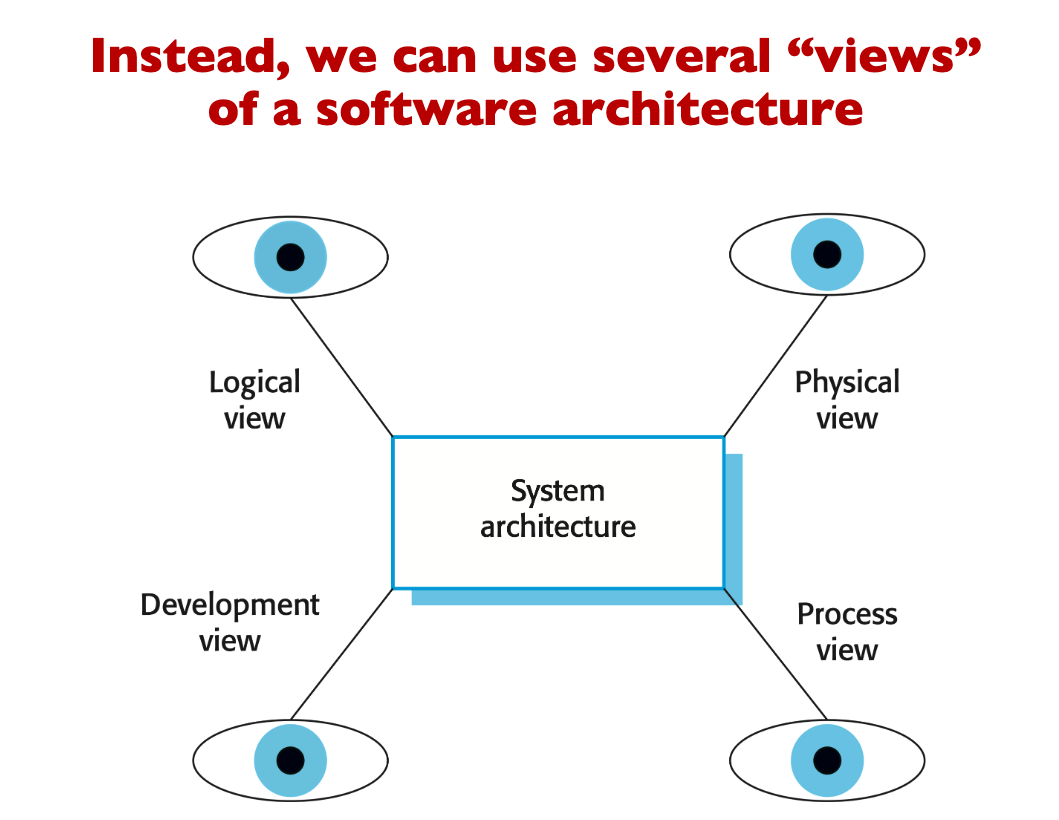

Too many perspectives for one diagram:

- If we attempt to model everything in one diagram, it becomes a mess

So we have a 4 + 1 view model of software architecture

- A logical view, which shows the key abstractions in the system as objects or object classes.

- A process view, which shows how, at run-time, the system is composed of interacting processes.

- A development view, which shows how the software is decomposed for development.

- A physical view, which shows the system hardware and how software components are distributed across the processors in the system.

- Related using use cases or scenarios (+1)

More terminology Architectural Style

- Form of structure, e.g.,

- “Pipes” between components, or

- “Layered” system, or

- “Bulletin board” system

- Analogy: Style of a building

Reference Architecture

- General architecture for an application domain

- Example: Common structure for compilers or for operating systems

Product Line Architecture (PLA)

- Architecture for a line of similar software products

- Example: Software structure for a family of computer games

Architecture vs. Design Architecture

- Structure of system (components and connectors)

- High level and hard to change (better get it right!)

- Concerned with technical and non technical requirements (e.g., Security, Legal, Outsourcing)

- Makes sense for systems with MLOCs (million lines of code)

- Very early in life cycle

Design

- Inner structure of the components

- Low level (information hiding and interfaces help it change )

- Mostly technical concerns

- Makes sense for systems with KLOCs

- Late in life cycle

Week 2.2 Design Principles

We review UML:

- Unified Modeling Language (UML) is a general purpose modeling language for object-oriented software systems

- Provides a graphical notation for describing system models

- Supports 14 different diagrams covering the structure and behavior of systems

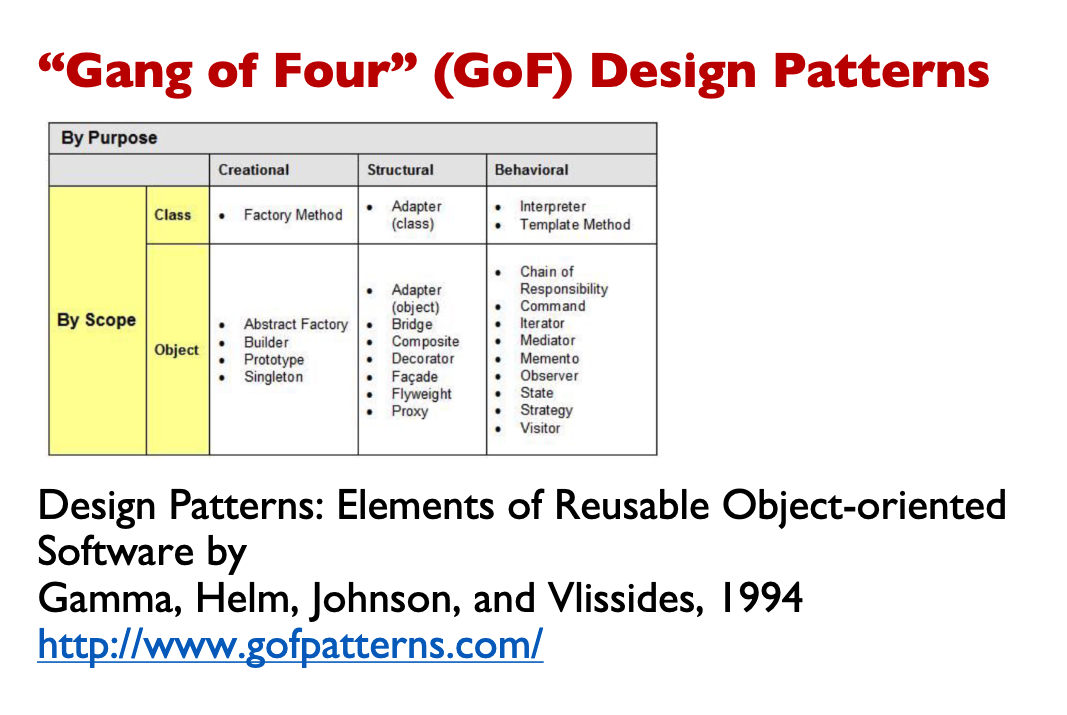

Design Patterns book: The first systematic catalog recording 23 design patterns

For each design pattern:

- Name

- Problem

- Solution

- Consequences

- Example

The 5 SOLID principles:

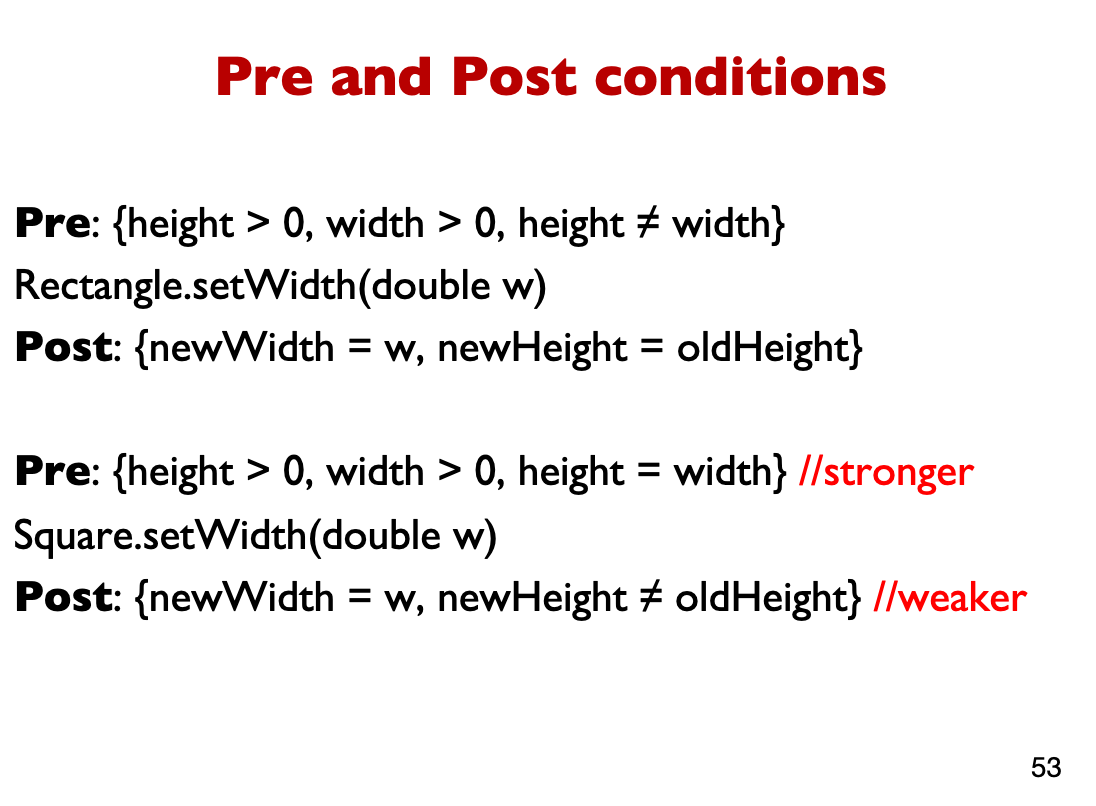

References to the Base class must be completely substitutable by references of any of the Derived classes.

SuperType reference = new SubType();- The Invariants of the SuperType MUST NOT be violated or broken by the SubType.

- The preConditions on an overridden method for the SubType must be a ‘SUBSET’ of the preconditions on the same method for the SuperType. In other words, Preconditions must not be strengthened by the SubType.

- The PostConditions on an overridden method for the SubType must be a ‘SUPERSET’ of the postconditions on the same method for the SuperType. In other words, PostConditions must not be weakened by the SubType.

The square inheriting from a rectangle is used as an example of LSP violation.

How to find violations of LSP

- If an overridden method does nothing or just throws an exception, then you’re probably violating the LSP.

- If a subtype method modifies a field of the superclass, which is not exposed through a setter, then you’re probably violating the LSP.

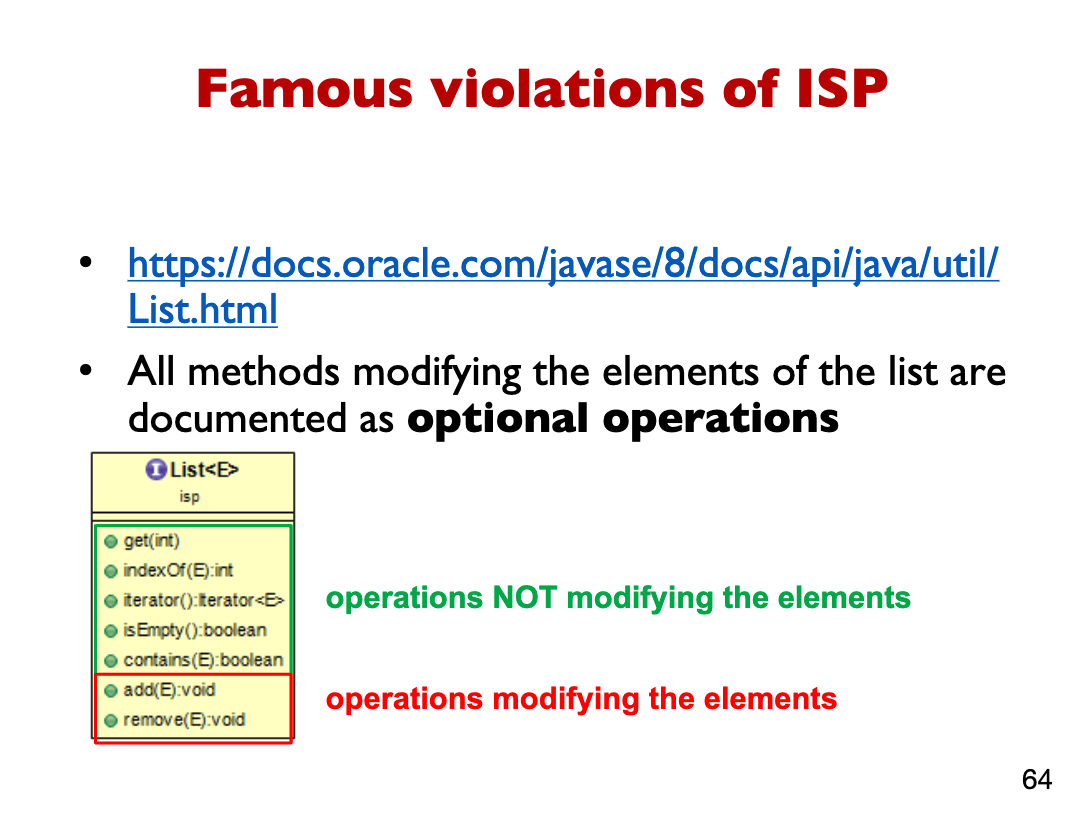

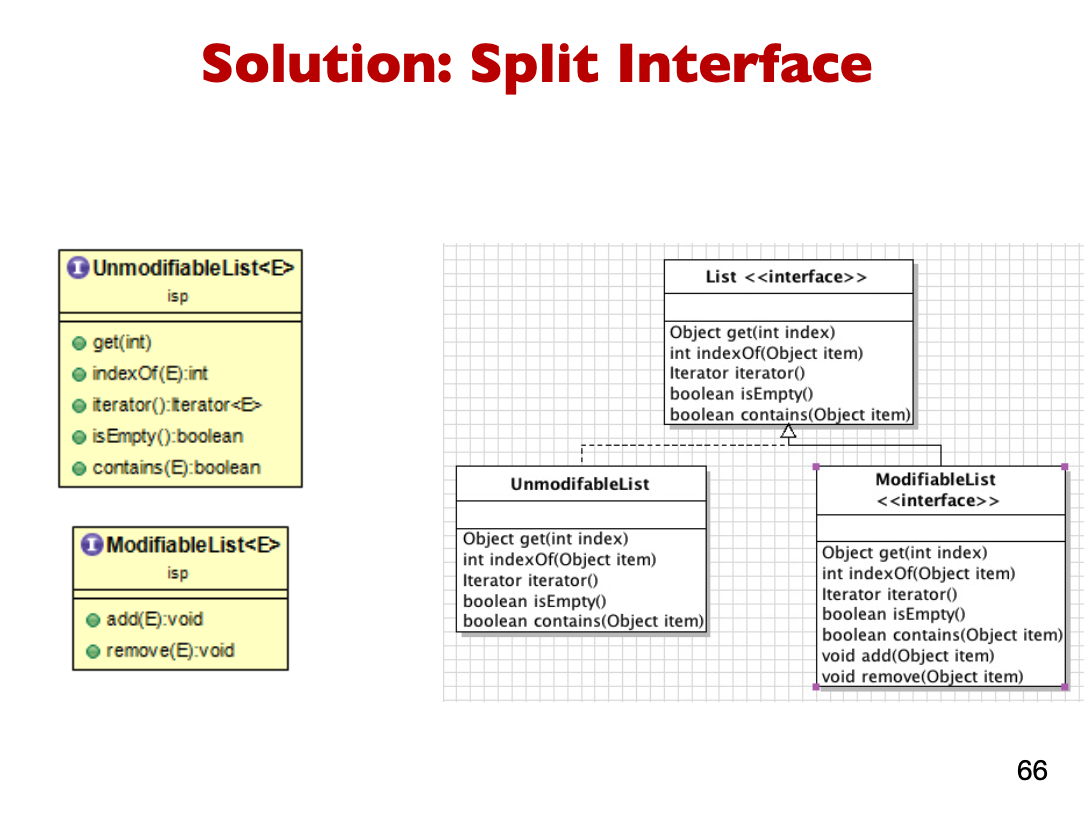

- Interface Segregation Principle

- Large interfaces with many methods of varying behavior should be split into smaller interfaces

How to find violations of ISP

A class implements an interface, but some of the interface methods are not required and are implemented with empty body or return null, or throw an exception.

Each method implemented must certainly define a concrete behavior for the class.

Why is the above bad? It violates ISP violation

- All subclasses that require an unmodifiable list behavior, need to implement all methods modifying the elements of the list by throwing UnsupportedOperationException

- This is similar to having an empty body (i.e., no behavior)

-

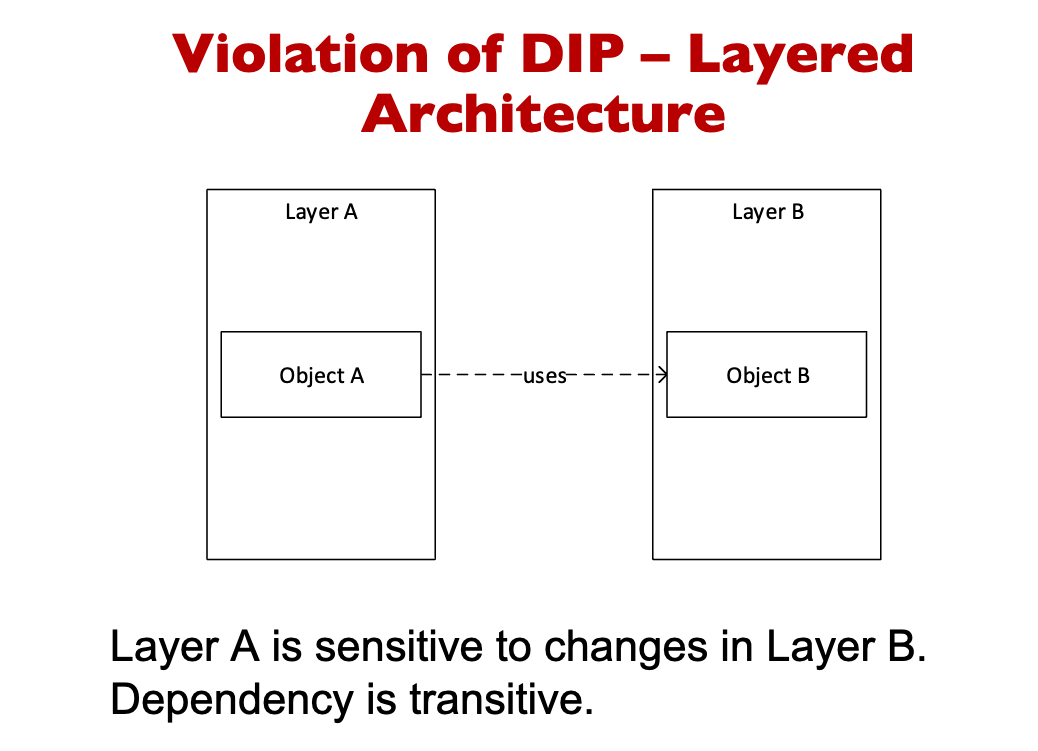

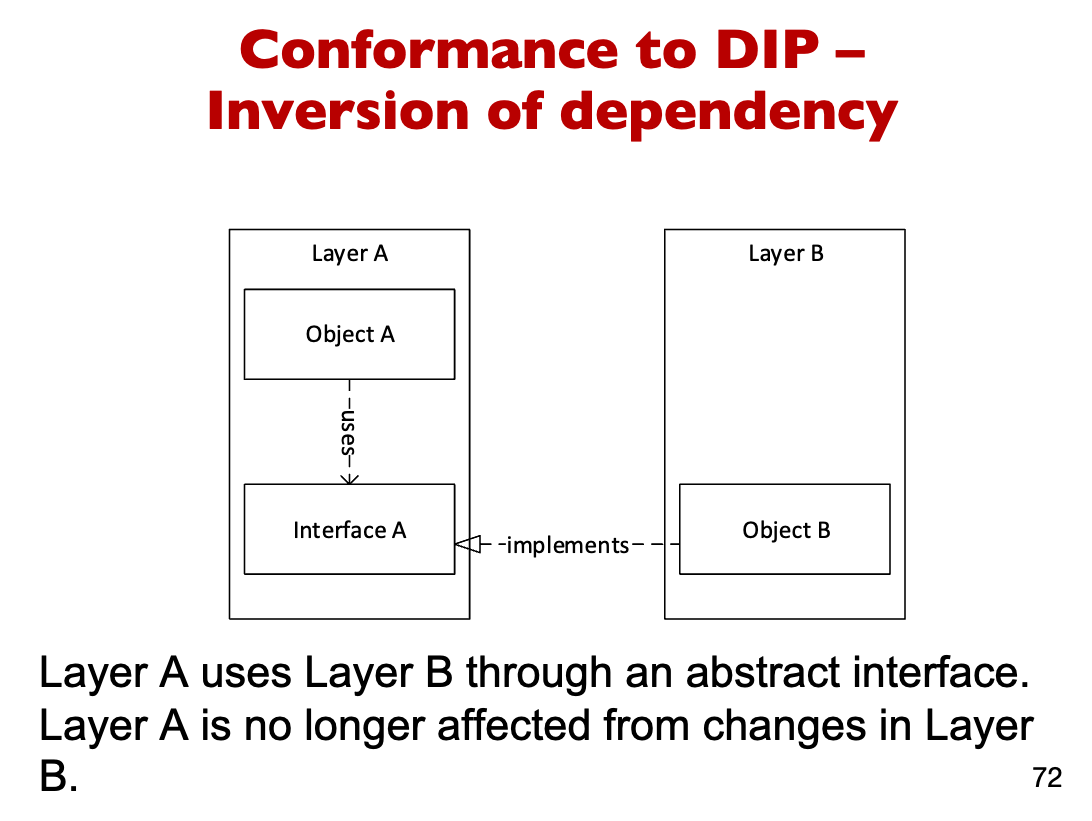

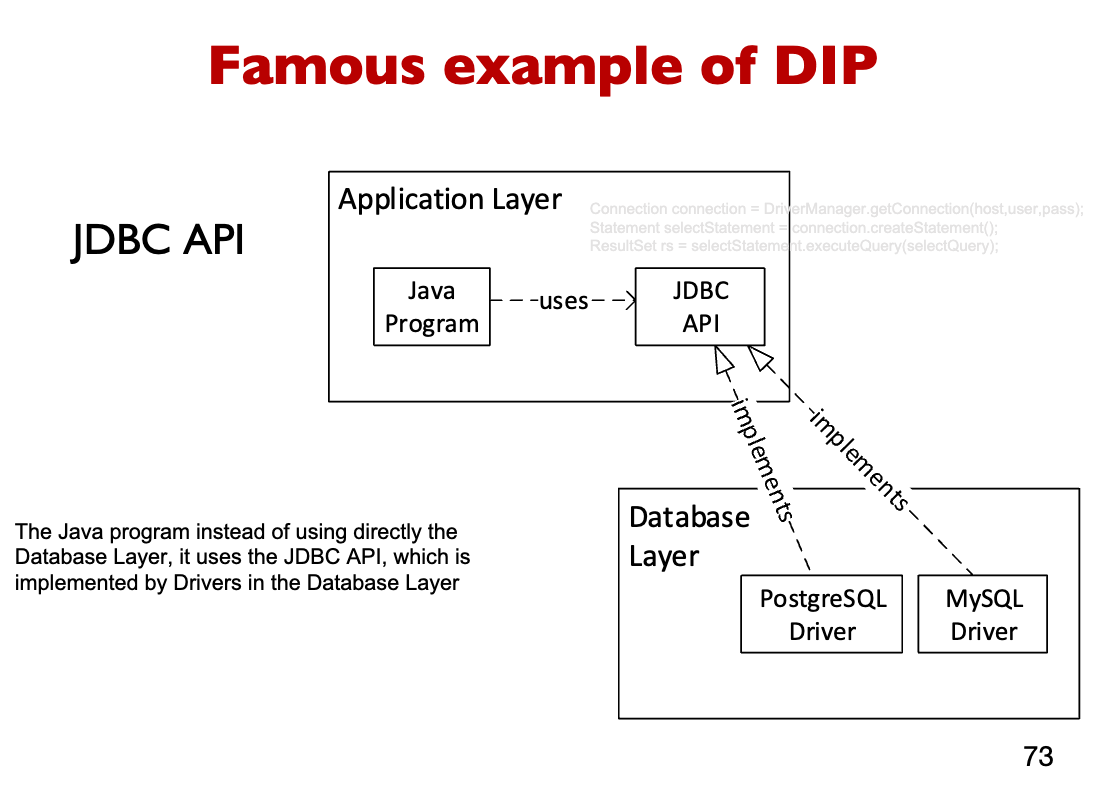

High Level modules should not depend on Low Level modules. Both should depend upon abstractions.

-

Abstraction should not depend upon details. Details should depend upon abstraction

Implementing DIP example

[Week2.2]NFR

We need to work with requirements!

Requirements come from users and stakeholders who have demands/needs.

Questions that Arise During Requirement Gathering

- Is this a business need or a requirement?

- Is this a nice-to-have vs. must-have?

- Is this the goal of the system or a contractual requirement?

- Do we have to program in Java? Why?

Types of requirements: Functional Requirements

- Specify the function of the system

- F(input, system state) → (output, new state) Non-Functional Requirements (Constraints) (NFR)

- Quality Requirements

- Specify how well the system performs its intended functions

- Performance, Usability, Maintenance, Reliability, Portability

- Managerial Requirements

- When will it be delivered

- Verification (how to check if everything is there)

- What happens if things go wrong (legal responsibilities)

- Context / Environment Requirements

- Range of conditions in which the system should operate

Software Specification Specification acts as a bridge between the real-world environment (demands and needs of stakeholders) and the software system.

Non Functional Requirements (NFR)

- NFRs are often called “quality attributes”

- NFRs specify how well the system performs its functions:

- How fast must it respond?

- How easy must it be to use?

- How secure does it have to be against attacks?

- How easy should it be to maintain?

Functional requirements are like verbs

- The system should have a secure login NFRs are like attributes for these verbs

- The system should provide a highly secure login

NFRs require special consideration during the software architecture/high level design phase. They affect the various high level subsystem.

It is very hard to modify an NFR once you pass the architecture phase: • Consider making an already implemented system more secure, more reliable, etc

[Week3.1]scalability_web_http

A service listens for requests from clients/users, and may handle multiple requests concurrently.

Scalability Metrics

Scalability metrics are measures of work throughput:

- Requests/queries per second

- Concurrent users

- Monthly-active user

Scaling Challenges

- Why is it difficult to make services big, even if money ain’t a thang?

- Programs run on one machine, which has limited speed

- Coordinating multiple machine can be difficult (who does what?)

- Sharing data among multiple machines is difficult (where is the data? how do we manage competing requests to change the same data?)

- More machines means there is high probability one will fail (die)

- Users can be distributed worldwide (communication latency is high)

- Service components must trust each other but ignore interference from attackers (authentication)

- Software updates must be deployed without downtime

Cloud Computing makes scaling easier

- Vertical scaling: change the instance type of a virtual machine.

Horizontal and vertical scaling Eg., upgrade from:

- t3.nano (<2 cores, 0.5GB RAM, remote SSD disk) $.0052/hour …to…

- m5d.24xlarge (96 cores, 386GB RAM, local NVMe SSD disk) $5.424/hour

- Vertical scaling (up or down) just requires a reboot of the VM.

- Horizontal scaling: purchase more VM instances.

- The new instance will be available to use in just a few minutes.

- We call cloud computing resources “elastic” because you can quickly change the size and quantity of the computing resources you are using.

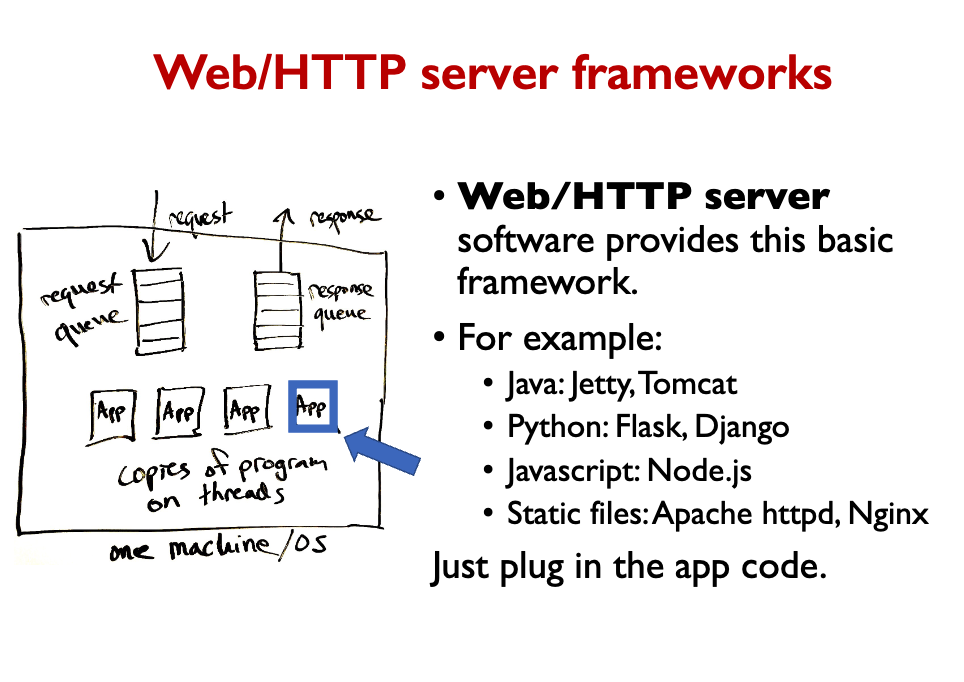

In practice, you have multithreading to handle multiple concurrrent requests. Each thread has a copy of the program that it executes.

Recap

- A software service is a program that runs continuously, giving responses to requests.

- Scalability is the ability of a service to grow to handle many concurrent users (ideally an arbitrarily large number).

Two approaches to scaling that are useful in different scenarios:

- Vertical scaling is upgrading your machine(s).

- The simplest and most efficient way of scaling… but there is a ceiling.

- Horizontal scaling is adding more machines.

- Coordinating a cluster of machines is complicated, but it’s necessary for global scale and massive throughput.

[Week3.2]cache_proxie

It’s important to identify that there are 2 types of workers:

- Stateless and Stateful worker threads

A stateless thread/process/service remembers nothing from past requests.

- Behavior is determined entirely by two things: <input request, request handling code>.

- Different copies of the service are running the same code, so they will give the exact same response for a given request.

- Has no local state.

A stateful thread (or service) changes over time, as a side effect of handling requests.

- Persistent, global variables are modified by the request processing code.

SMTP maintains a state for the duration of a single mail transaction (i.e., a session between the client and the server).

- The protocol requires maintaining state to track the sequence of commands (e.g., HELO/EHLO, MAIL FROM, RCPT TO, DATA) and responses during the session.

The first idea would be to do something stateful, where the instances store the session. but that’s not super effective. You want your servers to be stateless. So you’ll have the backend store values for you.

The first idea would be to do something stateful, where the instances store the session. but that’s not super effective. You want your servers to be stateless. So you’ll have the backend store values for you.

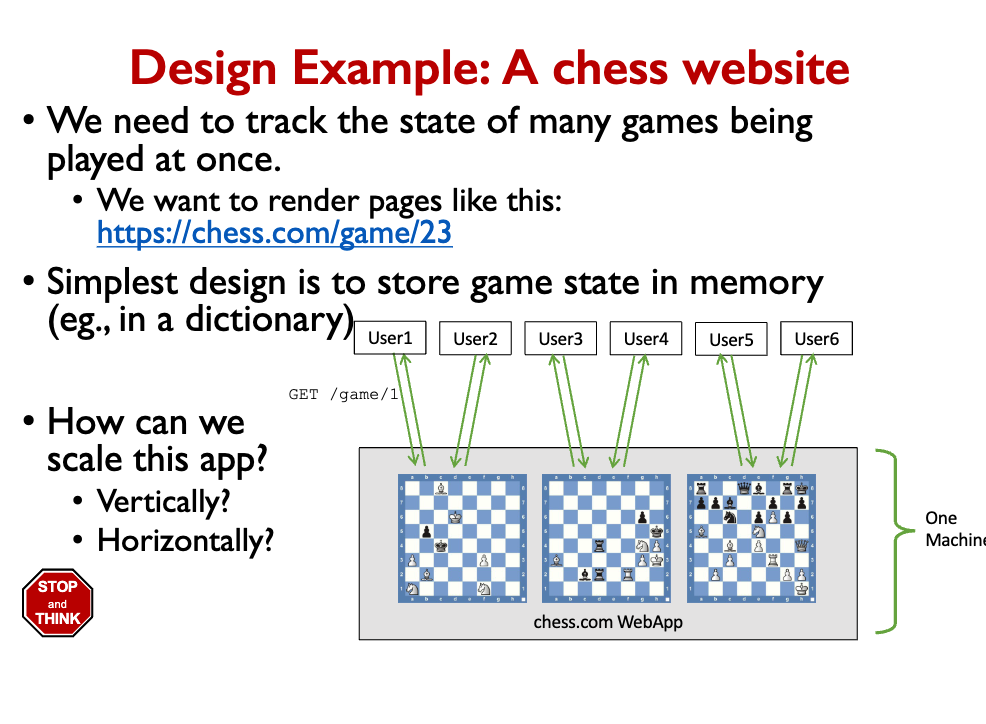

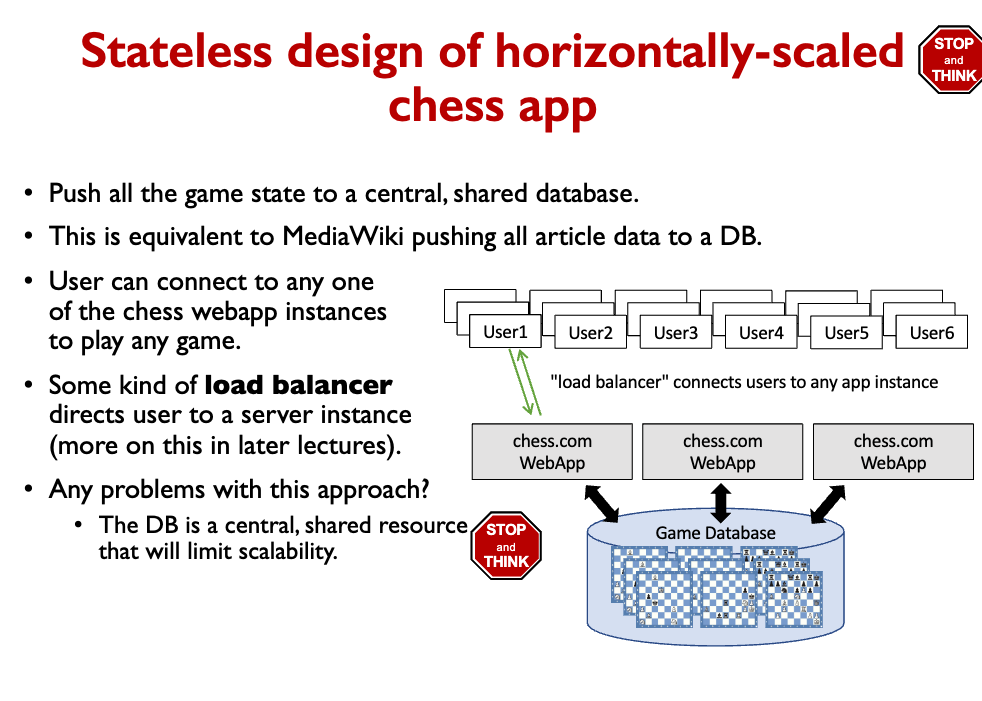

Our first attempt will run the same simple code on multiple servers.

- Each game runs on one of many servers. Each server handles a fraction of games.

- Can you see any problems with this scaling approach?

- User must connect to exact same server to continue their game. How to direct user?

- If a server fails, 1/n games are lost (or at least interrupted).

- These are stateful web apps

There’s still a problem which is that the DB is a central, shared resource that will limit scalability.

How to avoid bottleneck in database?

Just do Database Sharding.

First lesson of scalability: Don’t share!

Unsolved problems:

- How to direct users to an instance of a service (load balancing)?

- How to avoid a performance bottleneck in the database?

Proxies

Proxy

A proxy server is an intermediary router for requests.

The proxy does not know how to answer requests, but it knows who to ask.

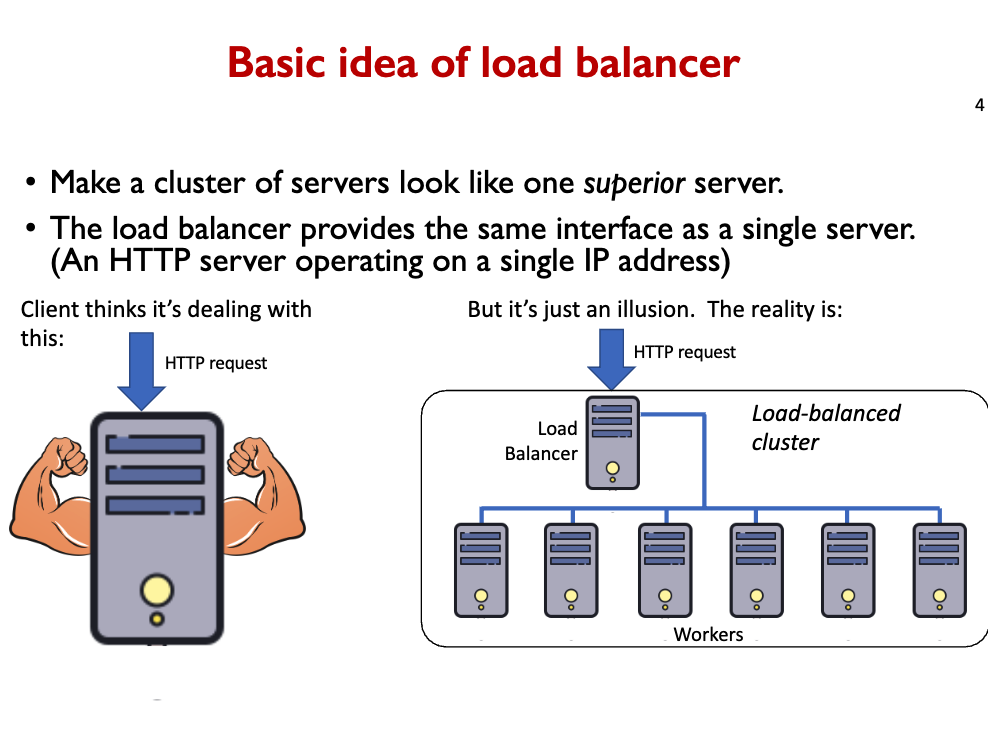

A load balancer is a type of proxy that connects to many app servers.

- The work done by the load balancer is very simple, so it can handle much more load than an application server.

- Creates a single point of contact for a large cluster of app servers.

Then the teacher talks about caching. The most common eviction policy is LRU: least recently used.

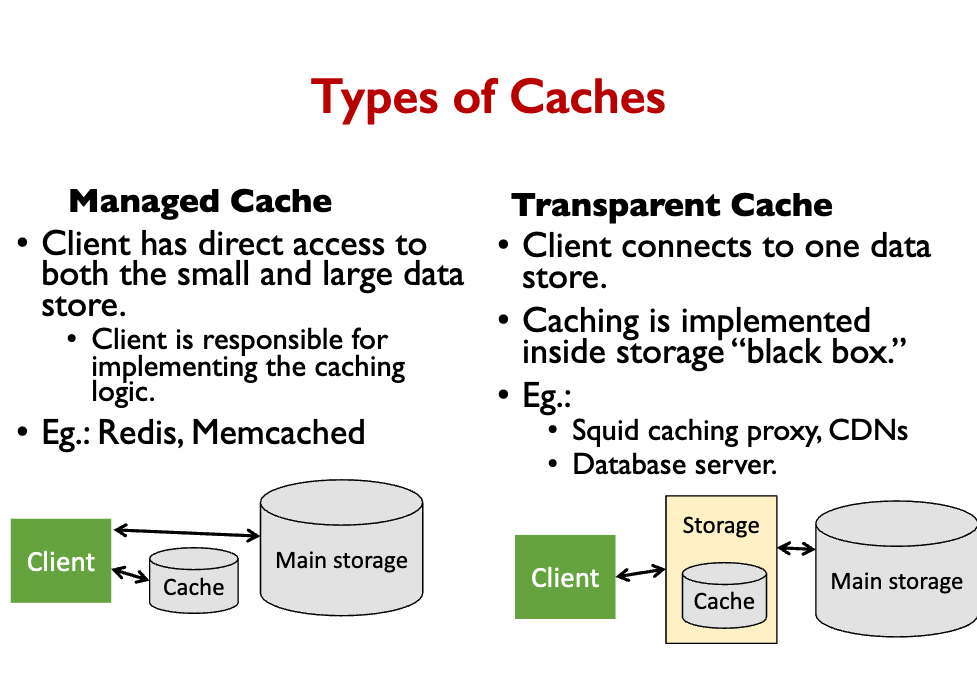

There are two types of cache:

- Managed Cache

- Transparent Cache

Managed Cache

- Client has direct access to both the small and large data store.

- Client is responsible for implementing the caching logic.

- Eg.: Redis, Memcached

Transparent Cache

- Client connects to one data store.

- Caching is implemented inside storage “black box.”

- Eg.: Squid caching proxy, CDNs, Database server

Data writes cause cache to be out of date!

- Remember that we can have many clients, each with its own cache.

- When data changes, out-of-date copies of data may be cached and returned to clients. Eg., a Wiki article is edited. What to do?

This is about Cache Coherency! Three basic solutions:

- Expire cache entries after a certain TTL (time to live)

- After writes, send new data or an invalidation message to all caches

- This creates a coherent cache. But it adds performance overhead.

- Don’t every change your data! For example, create a new filename every time you add new data. This is called versioned data.

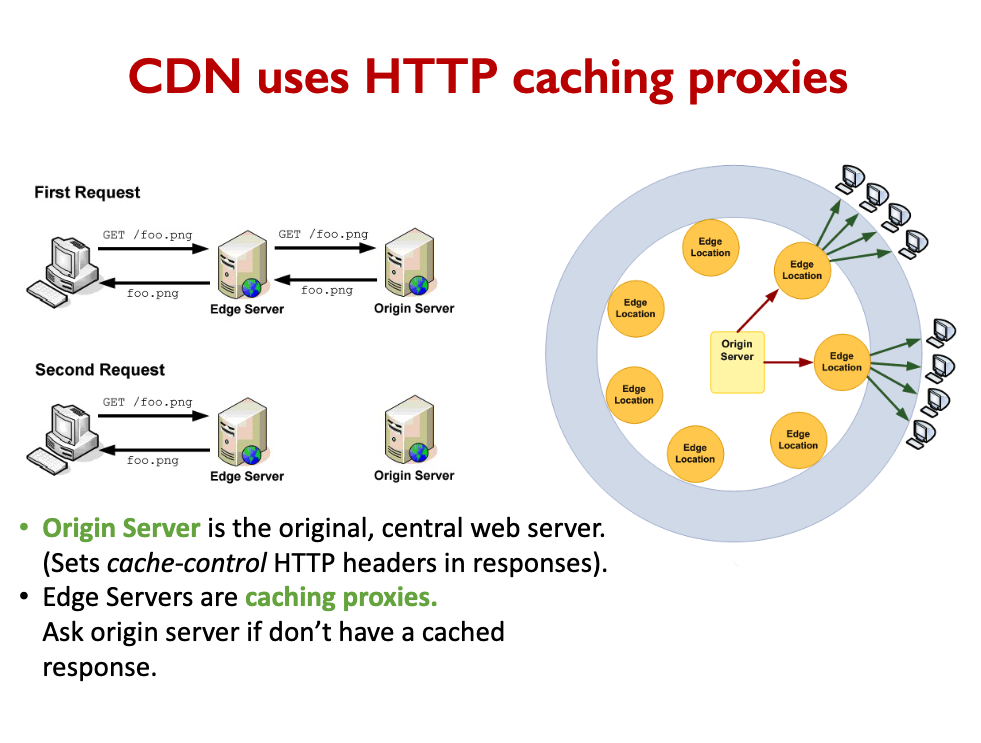

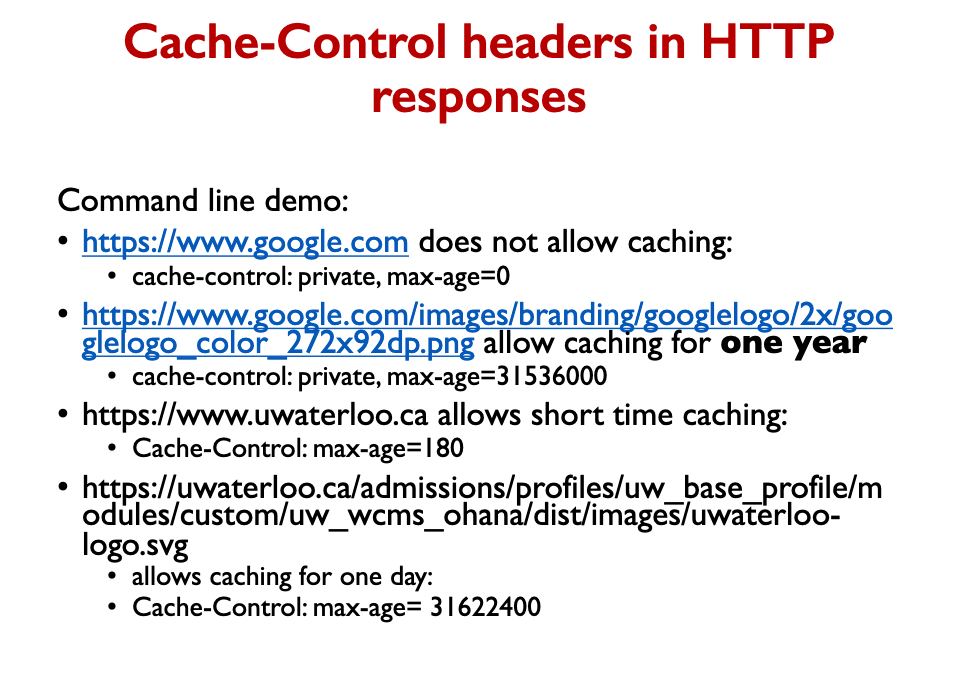

HTTP support caching well

- HTTP is stateless, so the same response can be saved and reused for repeats of the same request.

- HTTP has different methods GET/PUT/POST/DELETE.

- GET requests can be cached, others may not because they modify data.

- HTTP has Cache-Control headers for both client and server to enable/disable caching and control expiration time.

- These features allow a web browser to skip repeated requests.

- Also, an HTTP caching proxy, like Squid, is compatible with any web server and can be transparently added.

[Week4]DB 1

How to scale a database?

Why a Relational Database? These are the goals/properties that we are looking for:

- Scalability – work with data larger than computer’s RAM.

- Persistence – keep data around after your program finishes.

- Indexing – efficiently sort & search along various dimensions.

- Concurrency – multiple users or applications can read/write.

- Analysis – SQL query language is concise yet powerful. And also:

- Integrity – restrict data type, disallow duplicate entries, transactions.

- Deduplication – save space, keep common data consistent.

- Security – different users can have access to specific data.

Why not just use filesystems?

Filesystems don’t give us Analysis, integrity and deduplication properties. Also, shortcomings:

- Indexing – efficient access in just one dimension – the path/filename.

- Concurrency – multiple apps can read/write, but lacks transaction

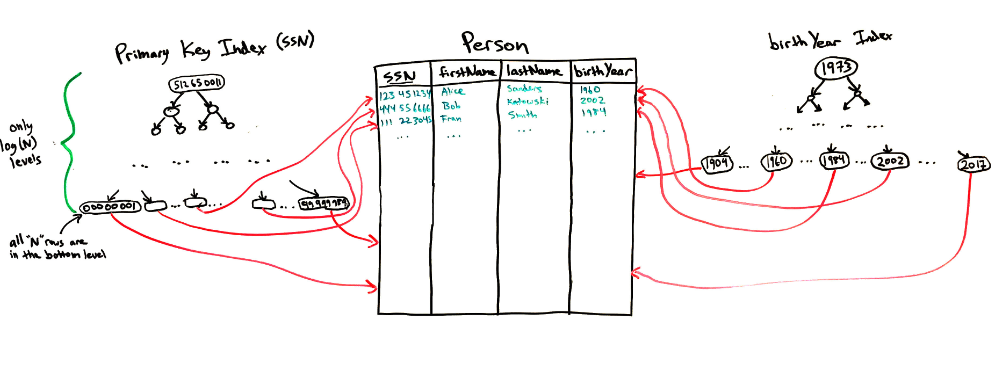

How to index into DB?

- DB indexes use a tree or hashtable instead of sorting

- Self-balanced binary trees give the log(N) speed of a binary search, while also allowing entries to be quickly added and deleted.

Multiple indexes in one table are possible

- Allow finding rows quickly based on multiple criteria

- Need two indexes to quickly get results for both:

- SELECT * FROM Person WHERE SSN=543230921

- SELECT * FROM Person WHERE birthYear BETWEEN 1979 AND 1983

DB Indexes are not free!

- Don’t add indexes unless you need them.

- Rookie mistake is to index every column “just in case.”

- Indexes consume storage space (storage overhead),

- Indexes must be updated when data is modified (performance overhead).

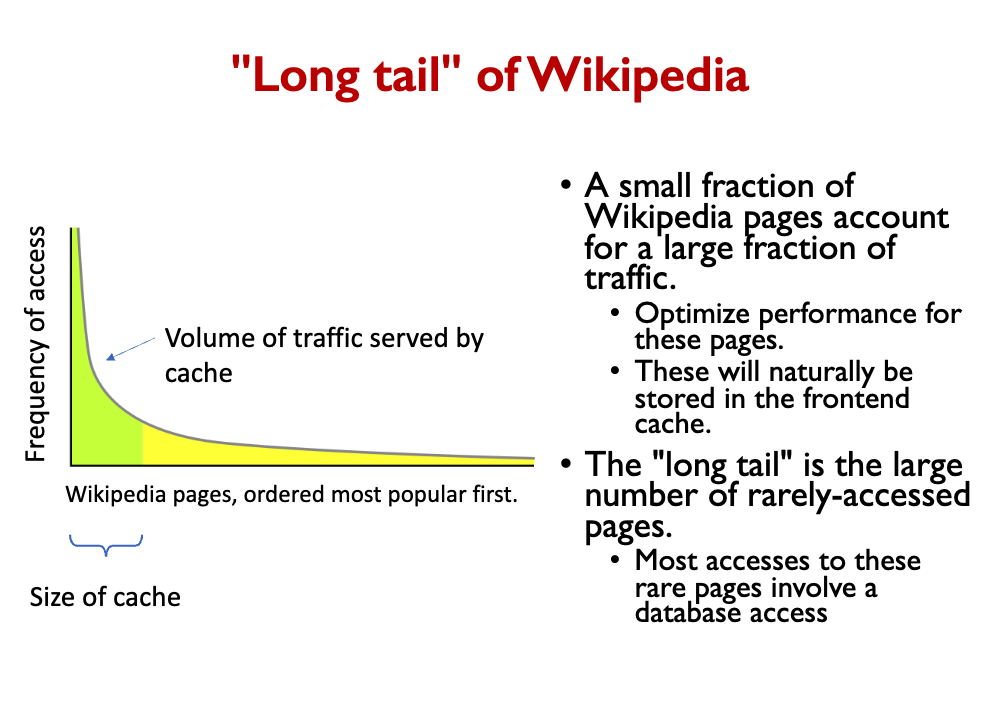

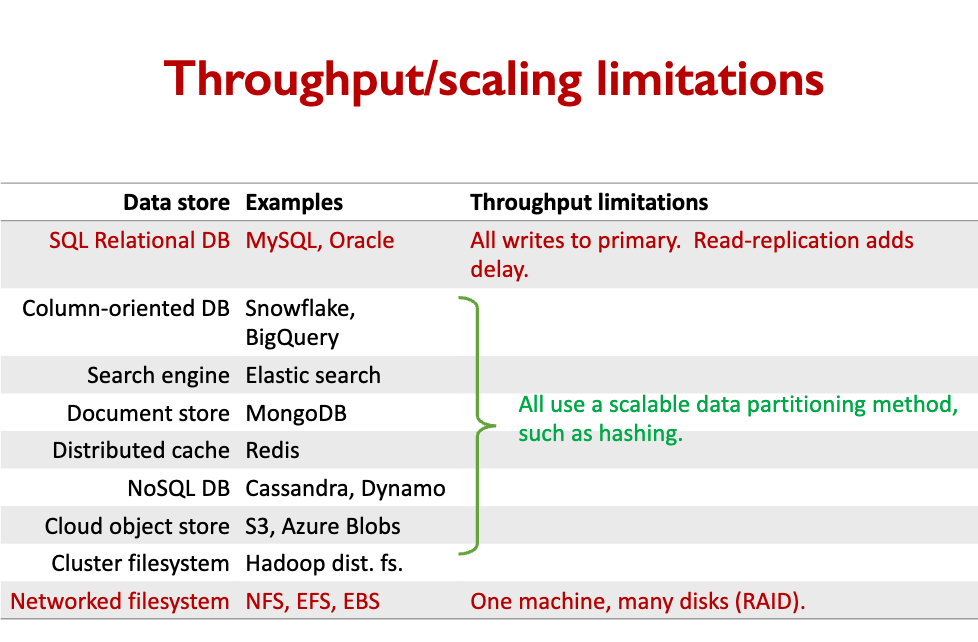

Databases are performance bottlenecks.

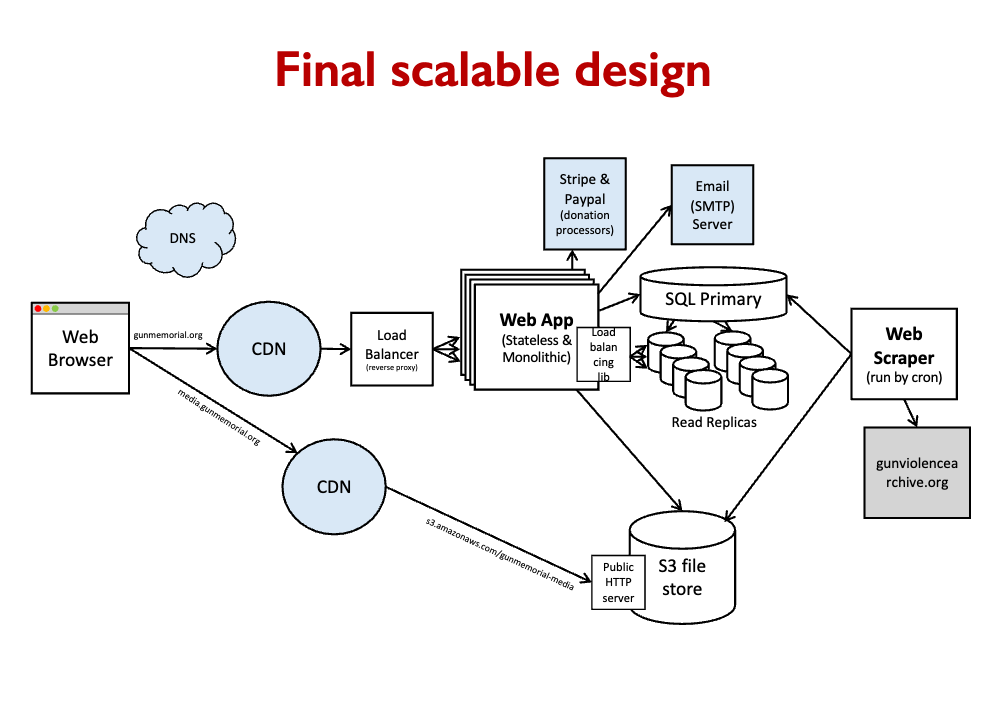

To avoid a database bottleneck, do horizontal and vertical scaling:

Vertical scaling approaches:

- Avoid unnecessary queries (cache data in the frontend).

- Buy a really fast machine, with plenty of RAM for caching.

- Use the fastest possible disks (SSDs, RAID). Horizontal Scaling:

- Use read replicas or sharding

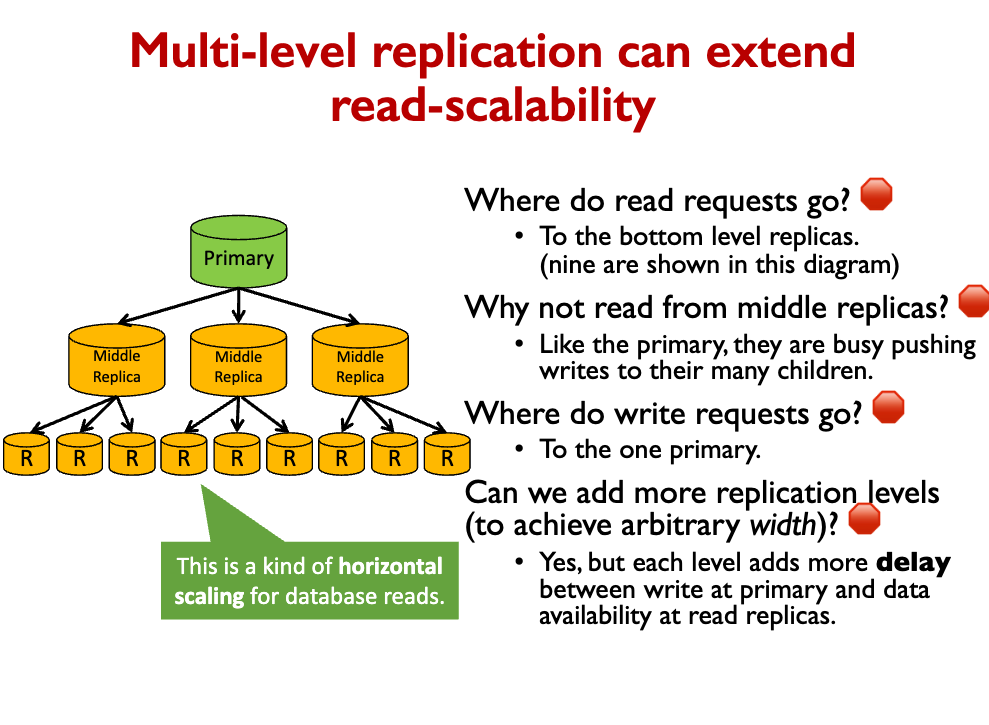

This design is not infinitely scalable.

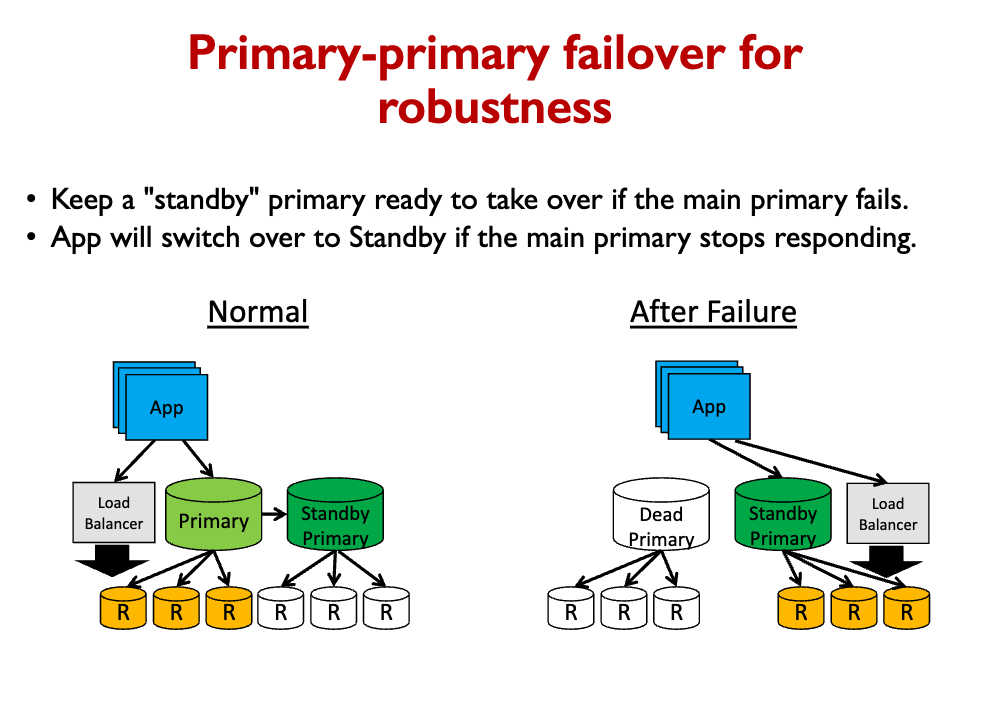

- The Primary is a central bottleneck and single point of failure.

- If there are N replicas, Primary must send N copies of each write.

- If there are R times as many reads as writes, and we want to equalize load on Primary and Replicas (to the max machine capacity), we get:

rimary_load = repl_load primary_reads + primary_writes + data_xfer = repl_reads + repl_writes + data_xfer 0 + 1 + N = R + 0 + 1 N = R

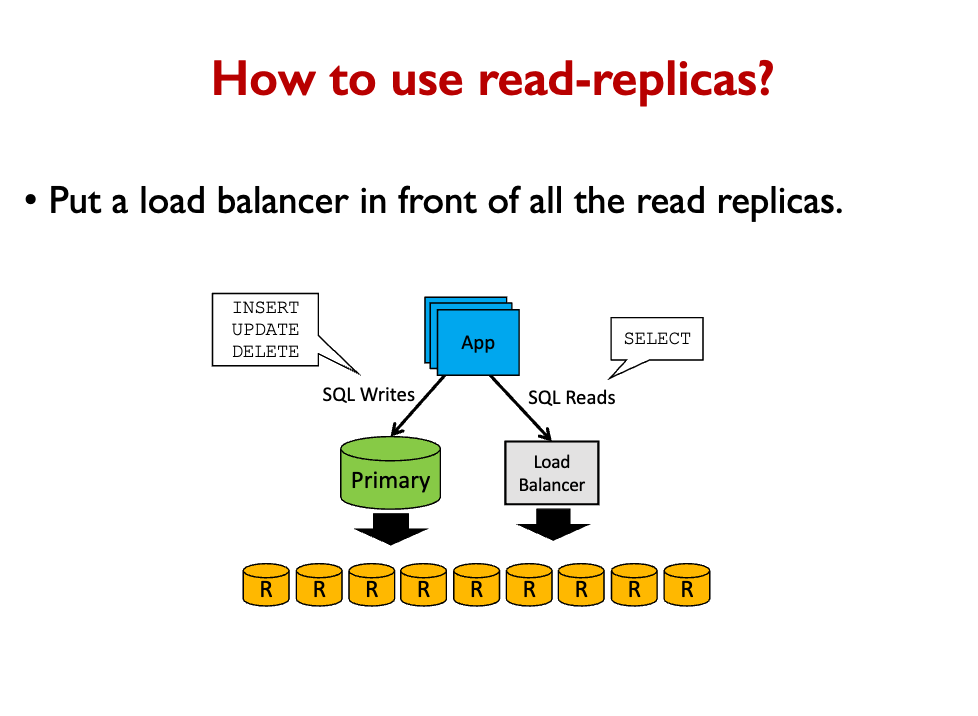

Read replicas are used by putting a load balancer in between.

- When the primary DB gets updated, it updates all the read replicas.

Isn't that still super slow, fi you have lots of read replicas?

Ya..

Can we allow writes to multiple primaries?

Each Primary still must handle all the writes, though indirectly. Thus, the same performance bottleneck remains. Also, data can become inconsistent if operations happen concurrently.

How to scale writes and storage capacity horizontally? We do partitioning!

Some kind of partitioning is needed, 2 partition ways:

- Functional partitioning divides by tables.

- Data partitioning divides by rows → this is Database Sharding

Functional partitioning:

- Create multiple databases storing different categories/types of data.

- Eg.: three separate databases for: accounts, orders, and customers.

- Cons:

- Limits queries joining rows in tables in different DBs

- Only a few functional partitions are possible. It’s not highly scalable.

Data partitioning is a more general approach.

Pros of sharding

Because each row is stored once:

- ✓Capacity scales.

- ✓Data is consistent.

If sharding key is chosen carefully:

- ✓Data will be balanced.

- ✓Many queries will involve only one or a few shards. There is no central bottleneck for these.

Cons

- ✘Cannot use plain SQL.

- ✘Queries must be manually adapted to match sharding.

- ✘If sharding key is chosen poorly, shard load will be imbalanced, either by capacity or traffic.

- ✘Some queries will involve all the shards. The capacity for handling such queries is limited by each single machine’s speed.

Some simple scaling math:

- N nodes

- R total request rate (requests per second or another time frame)

- Each node has the capacity to handle a maximum rate of requests C.

Scalable

- If each request is sent to one node: R_max = NC

- If each request is sent to a constant k number of nodes: R_max = NC/k = 𝒪(NC)

Not Scalable

- If each request is sent to all nodes: R_max = C

[Week4]DB 2

NoSQL

Review of Sharding:

- Splits data among many machines.

- Accept writes on all machines.

- But the data partitioning is done manually.

- Programmer chooses a sharding key or rule, and to write code that joins results from the different shards.

- Works well for queries that can be handled within a single shard.

- If we keep the relational model, with normalized data, many queries will involve all the nodes, so scaling is limited.

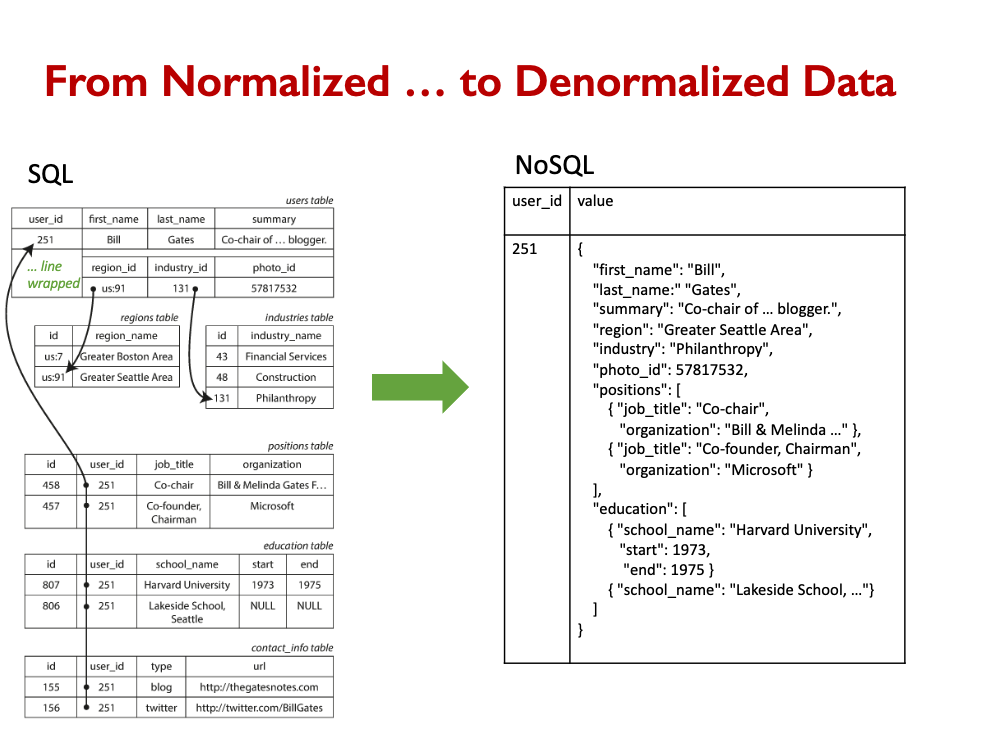

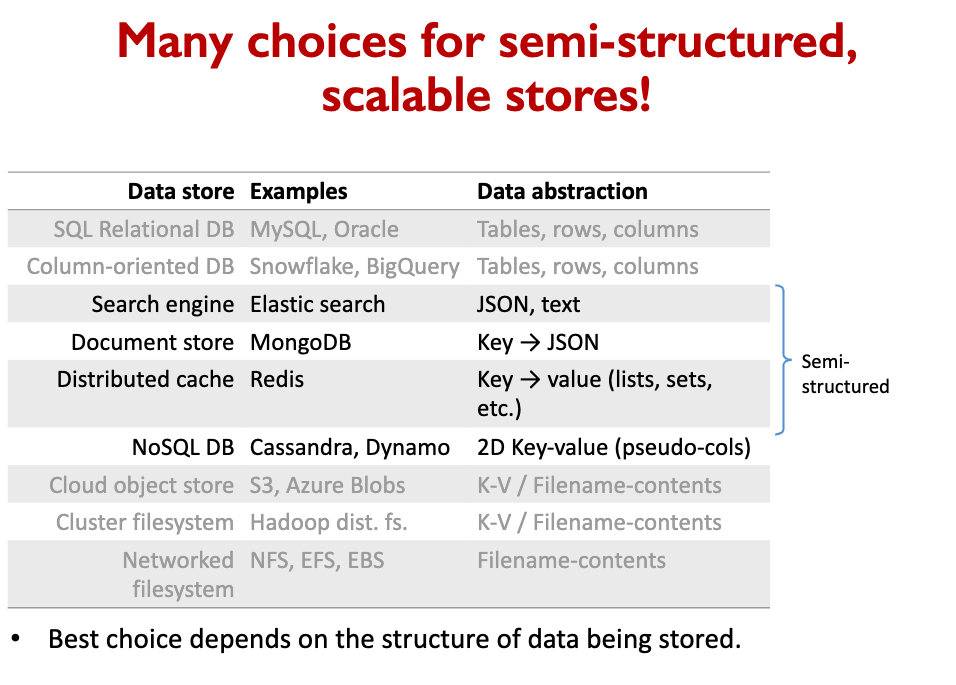

NoSQL databases all solve this problem by denormalizing data, meaning that data is duplicated to isolate queries to one node.

Data Normalization

A normalized relational database has no duplication of data.

- References (foreign keys) point to shared data

- In effect, many users are related to each other by all being linked to that industry

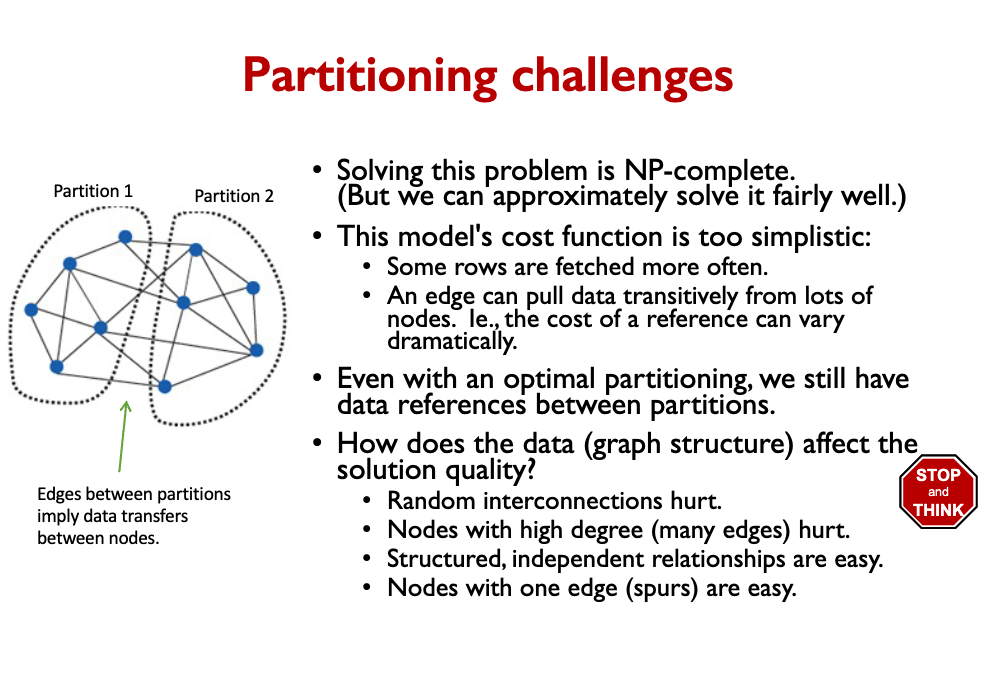

- To optimally partition the rows into shards, we could solve a balanced graph partitioning problem.

SQL uses normalized data. NoSQL uses denormalized data.

So essentially, the problem with sharding is that we need to find a partitioning scheme that works. But because we are working with relational databases, tables reference other tables, and it becomes a big mess.

This is where NoSQL databases come into the picture!

NoSQl

Removing the ability to create references gives us a NoSQL database. Instead of following references with JOINs, we store denormalized data, with copies of referenced data.

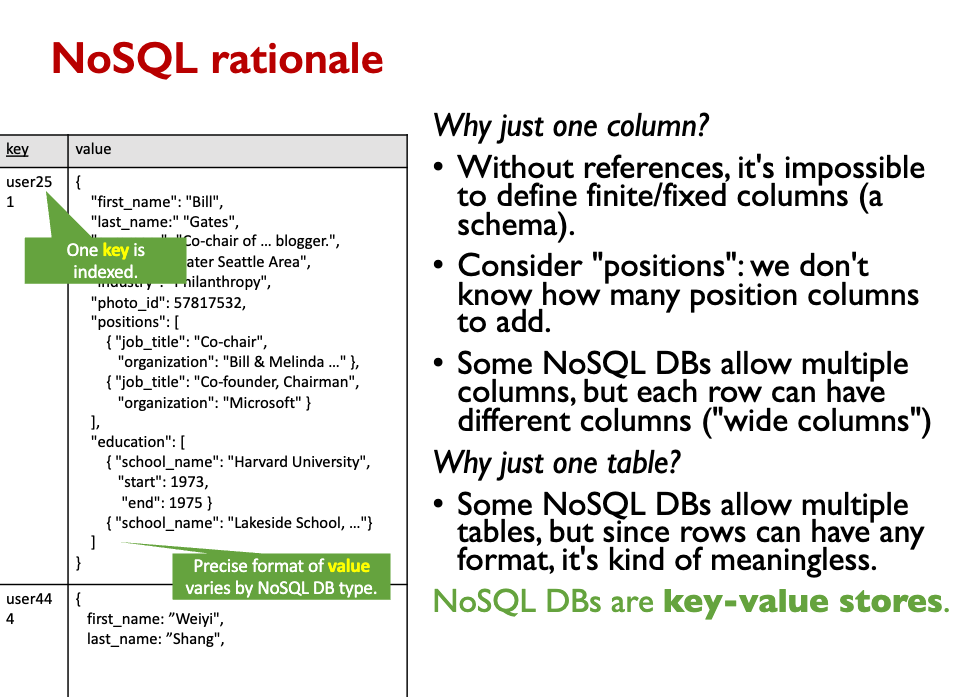

NoSQL DBs are key-value stores.

- Hashing is the basis of distributed NoSQL DBs.

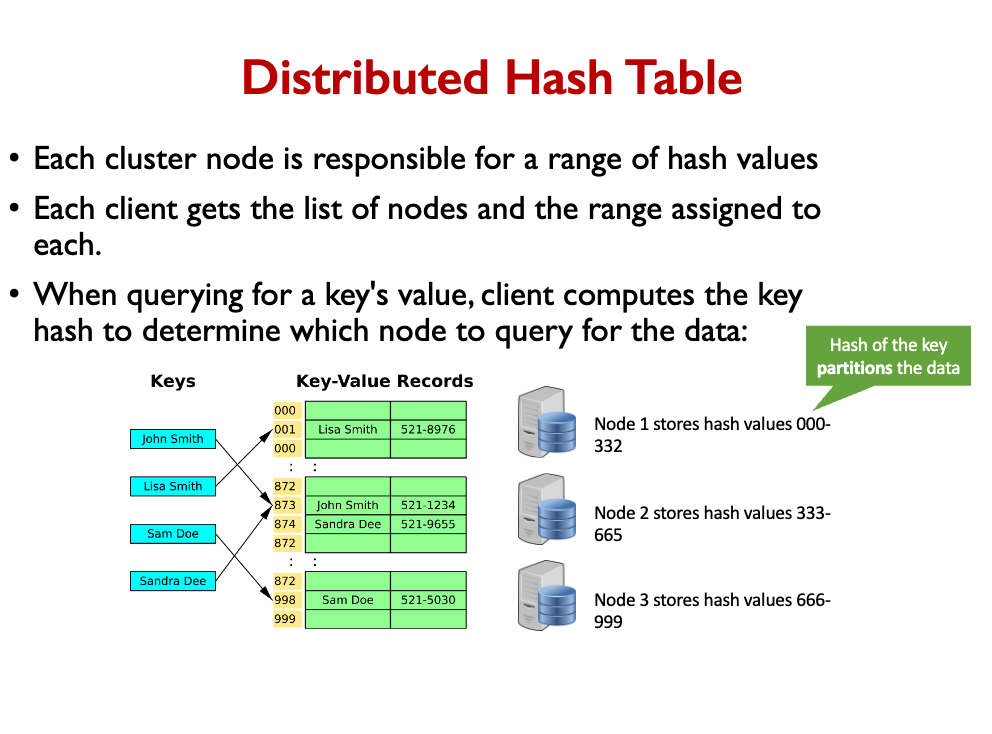

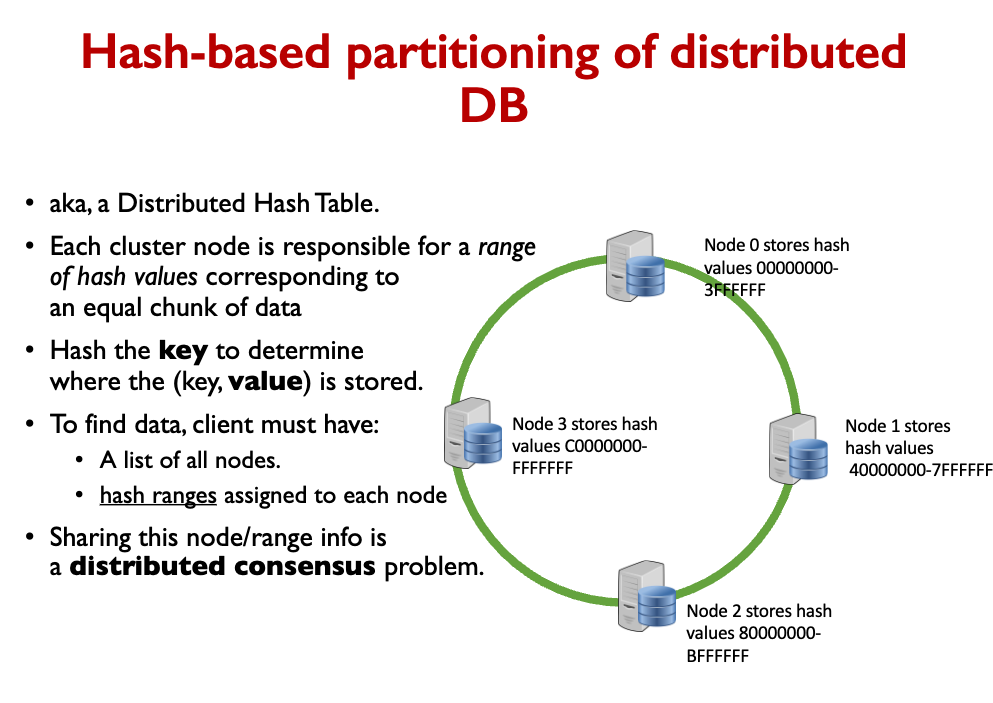

There’s this thing called a distributed hash table.

NoSQL Downsides

- Just one indexed column (the key)

- Because index is built with hash-based partitioning.

- Denormalized data is duplicated

- Wastes space.

- Cannot be edited in one place

- Eg., “Greater Seattle Area” is repeated in many user profiles instead of “region:91”

- References are possible, but:

- Following the reference requires another query, probably to another node.

- There is no constraint checking (refs can become invalid after delete).

Without references, data becomes denormalized. • Duplicated data consumes more space, can become inconsistent

Recap

- Data partitioning is necessary to divide write load among nodes.

- Should minimize references between partitions.

- Can be treated as a graph partitioning problem.

- SQL sharding was a special case of data partitioning, done in app code.

- NoSQL databases make partitioning easy by eliminating references.

- Without references, data becomes denormalized.

- Duplicated data consumes more space, can become inconsistent.

- NoSQL databases are very scalable, but they provide only a very simple key-value abstraction. One key is indexed.

- Distributed Hash Table can implement a NoSQL database.

- The hash space is divided evenly between storage nodes.

- Client computes hash of key to determine which node should store data.

Distributed DB Consistency

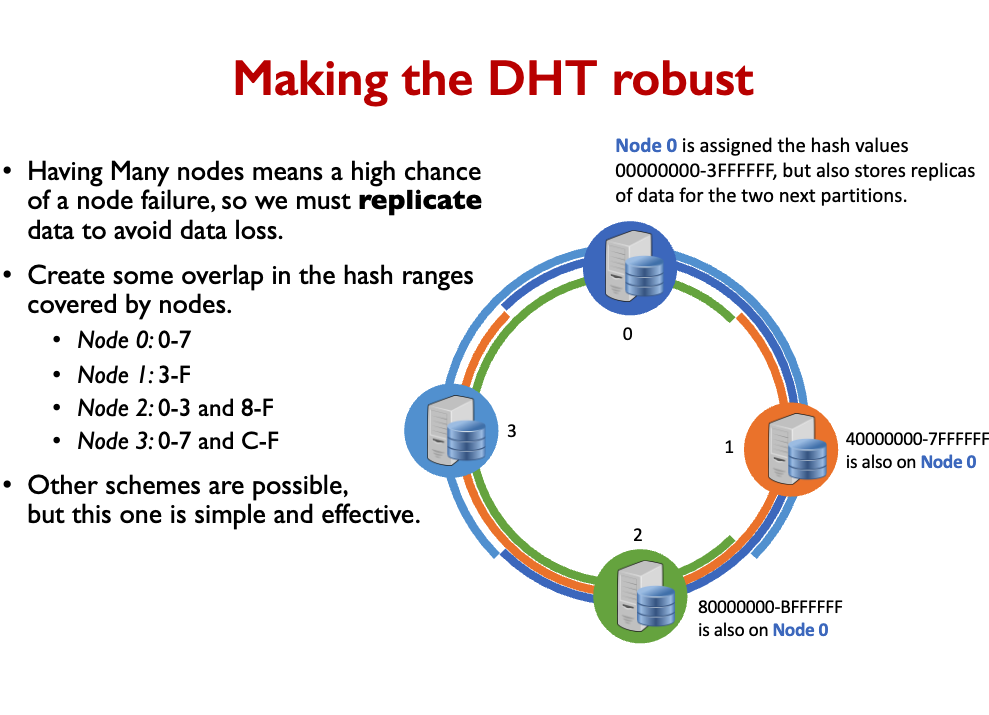

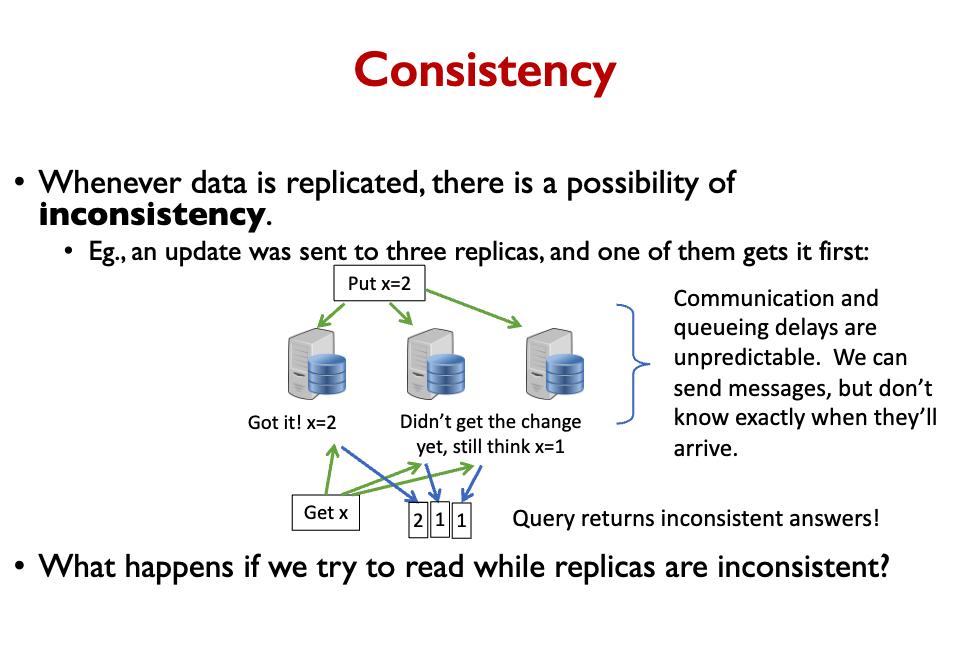

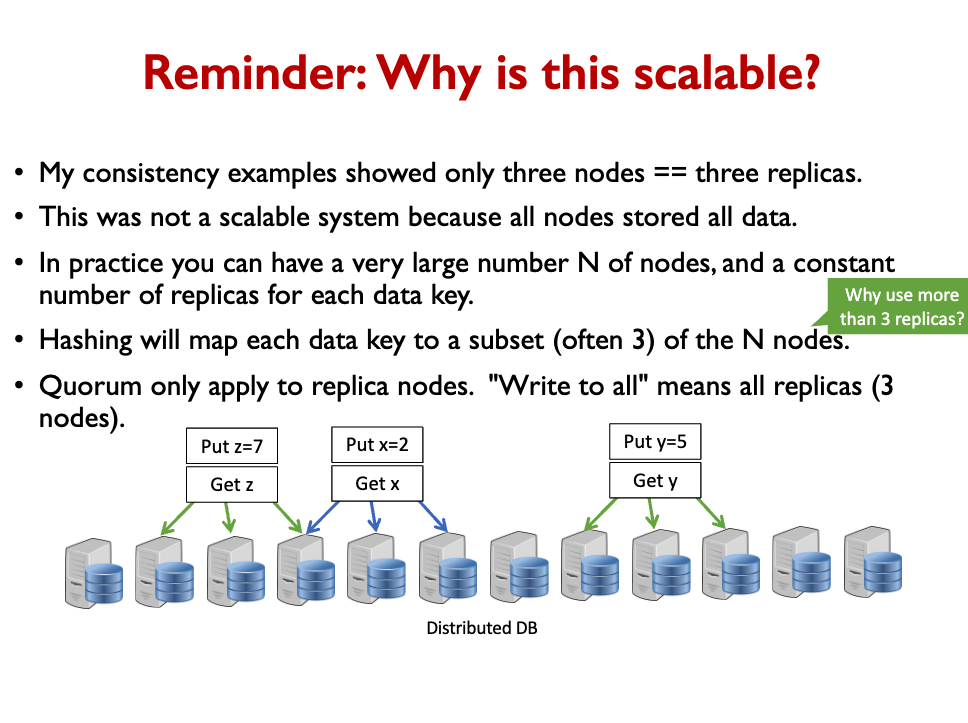

We also introduce replication, which is not the same as sharding.

- Replication to avoid data loss

However, once we start introducing replicas, we have the problem of inconsistency.

The CAP thereom is the most famous result in distributed systems theory.

CAP Theorem

It says that a distributed system cannot achieve all three of the following (you can only pick two)

- Consistency: reads always get the most recent write (or an error).

- Availability: every request received a non-error response.

- Partition tolerance: an arbitrary number of messages between nodes can be dropped (or delayed).

In other words:

- When distributed DB nodes are out-of-sync (partitioned), we must either accept inconsistent responses or wait for the nodes to resynchronize.

- To build a distributed DB where every request immediately gets a response that is globally correct, we need a network that is 100% reliable and has no delay.

Client-centric consistency models

- The CAP theorem gives us a tradeoff between consistency & delay.

- Inconsistency is bothersome. It can cause weird bugs.

- Fortunately, delay is usually something our apps can handle.

- If we really need both consistency and timeliness, then we must go back to a centralized database (probably a SQL relational DB).

- Distributed (NoSQL) DB designs give different options for handling the consistency/delay tradeoff.

- We’ll consider a client connecting to the DB cluster.

- What consistency properties might we want to ensure?

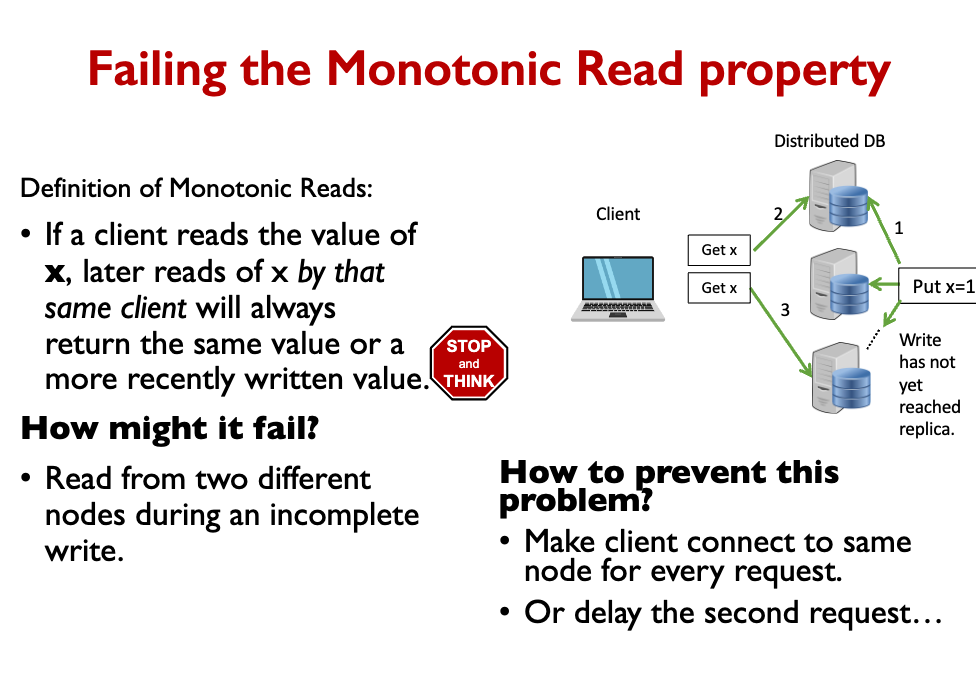

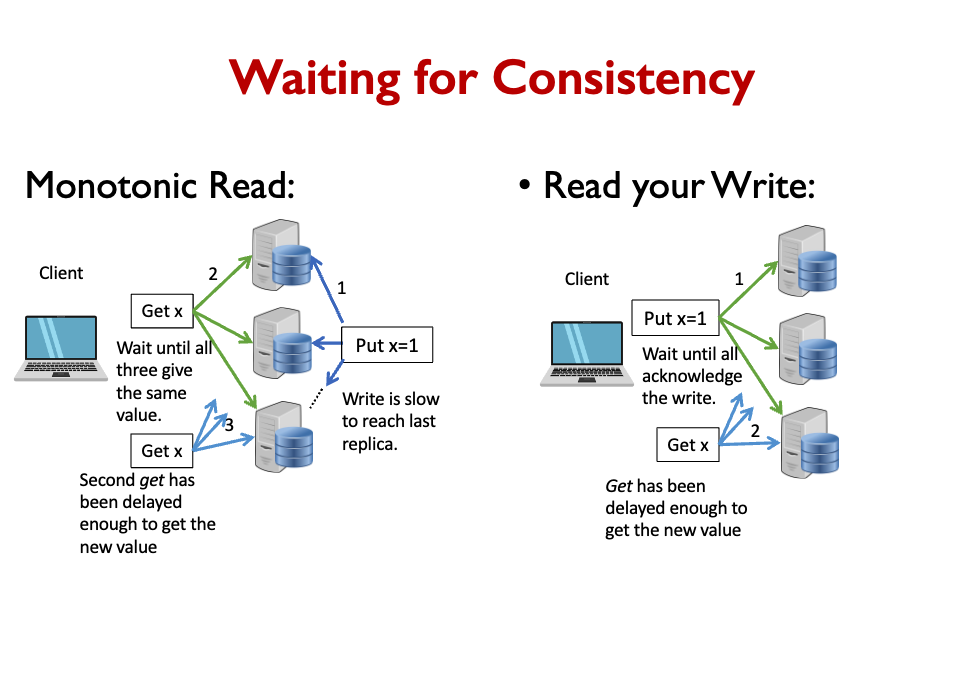

Client-centric consistency properties Monotonic Reads

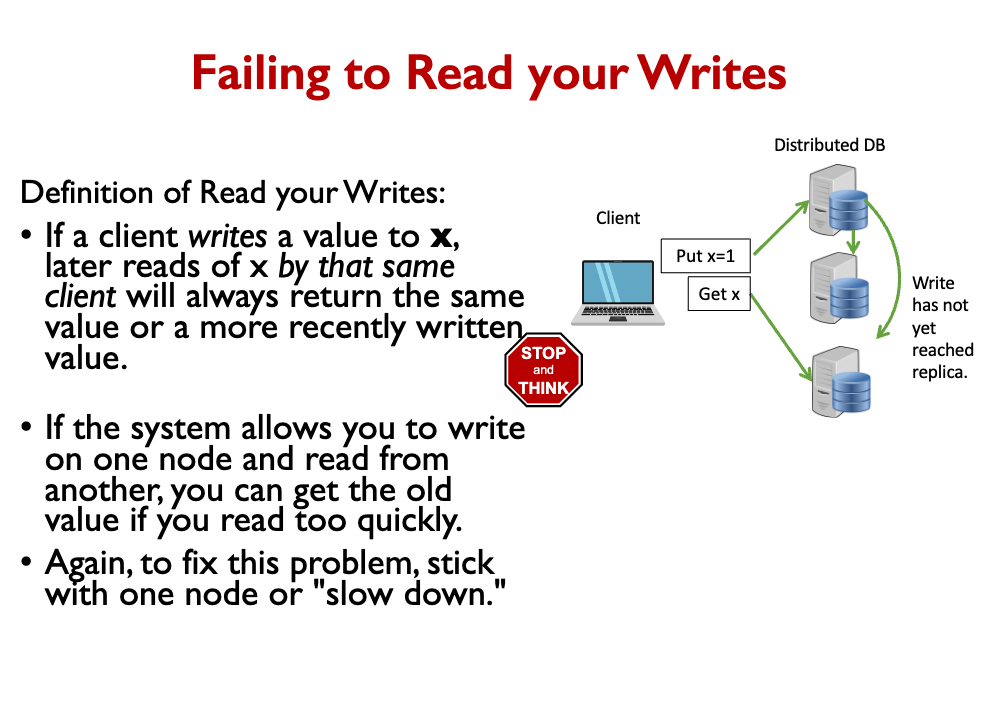

- If a client reads the value of x, later reads of x by that same client will always return the same value or a more recently written value. Read your Writes

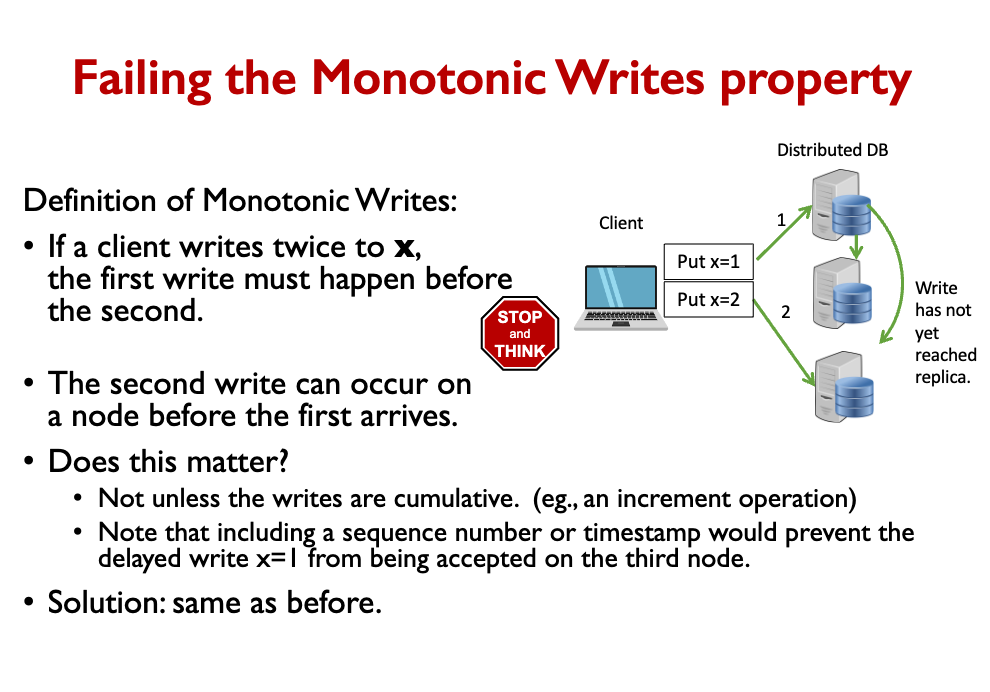

- If a client writes a value to x, later reads of x by that same client will always return the same value or a more recently written value. Monotonic Writes

- If a client writes twice to x, the first write must happen before the second

So how do we achieve consistency? We Set some rules for client and replication behavior to achieve consistency.

Two ways to do this:

- Make client send all requests to one replica node.

- Pro: Simplicity.

- Con: Consistency problems arise when a node fails.

- Client must switch to another node, and the consistency problems are again possible.

- Note: if don’t care about fault tolerance, then avoid replication to get consistency. MongoDB does not replicate data and thus has Consistency and Partition Tolerance but lacks Availability because a failed node causes downtime (CAP).

- Make client wait until the the read or write is synchronized across the whole system.

- For efficiency, we only care about the single key/value being synchronized.

- How do we know when the value is synchronized?

- Simplest approach is for the client to send the request to all nodes and wait!

The teacher introduces the ideas of quorum, which is like voting.

Quorum

A quorum is a minimum percentage of a committee needed to act.

To ensure consistency, the DB will

- Wait for an acknowledgement of consistent data from a certain number of replicas before considering the read/write completed

- Prevents progress until the replicas have a certain degree of consistency

I'm still a little confused

On how the DB gets consistency at the very end. There’s related stuff for CS348.

- It’s like a voting mechanism, where you wait on the majority

Question: What happens if a DHT replica fails?

See two examples below.

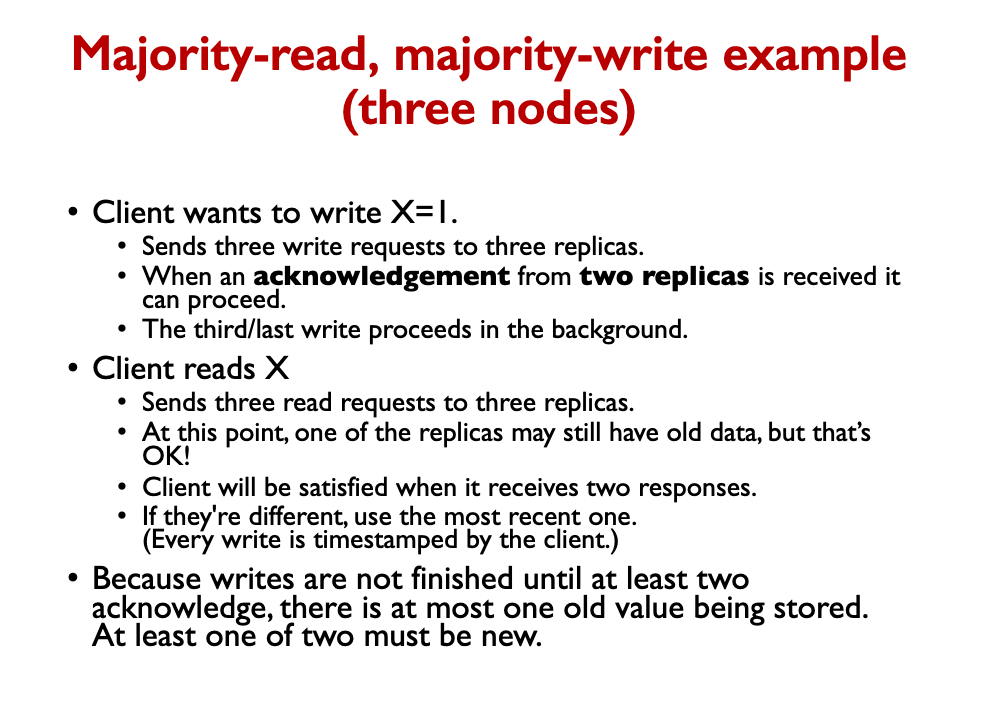

Example 1: write and read quorum of two (of three replicas).

- Client performs a write, gets two ACKs and proceeds.

- At this point, replicas store two new values, and one old value.

- Now one of the written-to replicas fails!

- Can read and writes proceed?

- Yes. Two different values will be read, but client can choose the most recent one.

- The 3rd write will eventually be received, and two copies made available.

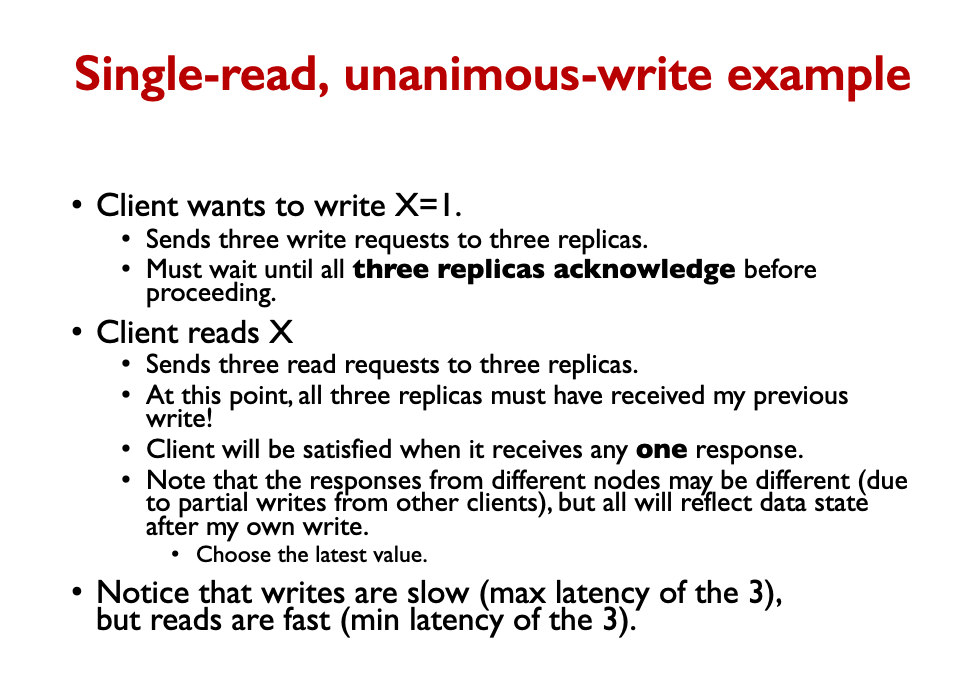

Example 2: write quorum of three (read quorum of one)

- A replica fails!

- Can reads and writes proceed?

- Client performs a write, and cannot get three ACKs.

- Write is impossible! (but reads can proceed)

- Part of the system is stalled, temporarily.

- The write can be retried after a replacement joins the DHT and gets copies of all the data.

So this is how the real world DB works

They have sharding + replicas for each shard. You write to all replicas.

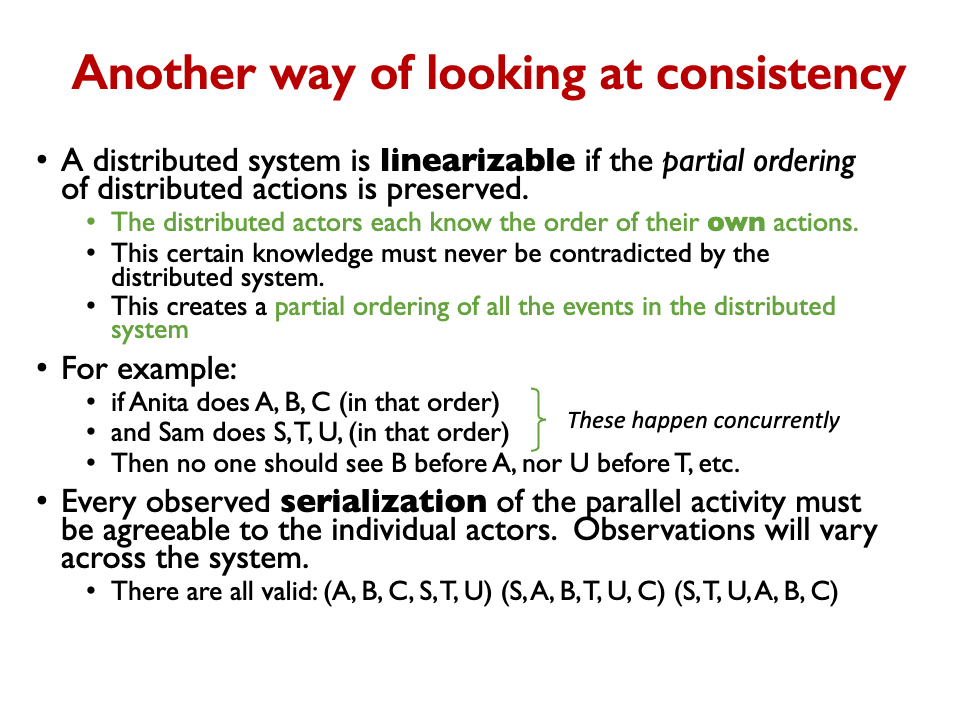

A distributed system is linearizable if the partial ordering of distributed actions is preserved.

Recap So we introduced replication to ensure that a single failure does not lose data (also some parallelization).

- The more nodes you have, the more likely a failure!

- However, replication introduces consistency problems.

- Tradeoff: must choose 2 of Consistency, Availability and Partition Tolerance (CAT)

- A distributed DB client, at very least, would want to achieve:

- Montonic reads, monotonic writes, read your writes (together: linearizability).

- Ensure consistency by waiting for responses from multiple replicas.

- Different quorum levels (all, majority, one) trade delay of reads/writes and determine whether reads or writes are unavailable during recovery.

- Cassandra DB lets programmer choose the quorum level for each read/write.

- Other NoSQL databases are designed to use just one read/write strategy.

[Week5.1]Choossing DB

Recall the goals of a Database → See Week 2.

- Scalability

- Persistence

- Indexing

- Concurrency

- Analysis

- NEW Separation of concerns – decouples app code from storage engine. Also, allows apps to be stateless, allowing parallelism

Less important

- Integrity

- Deduplication

- Security

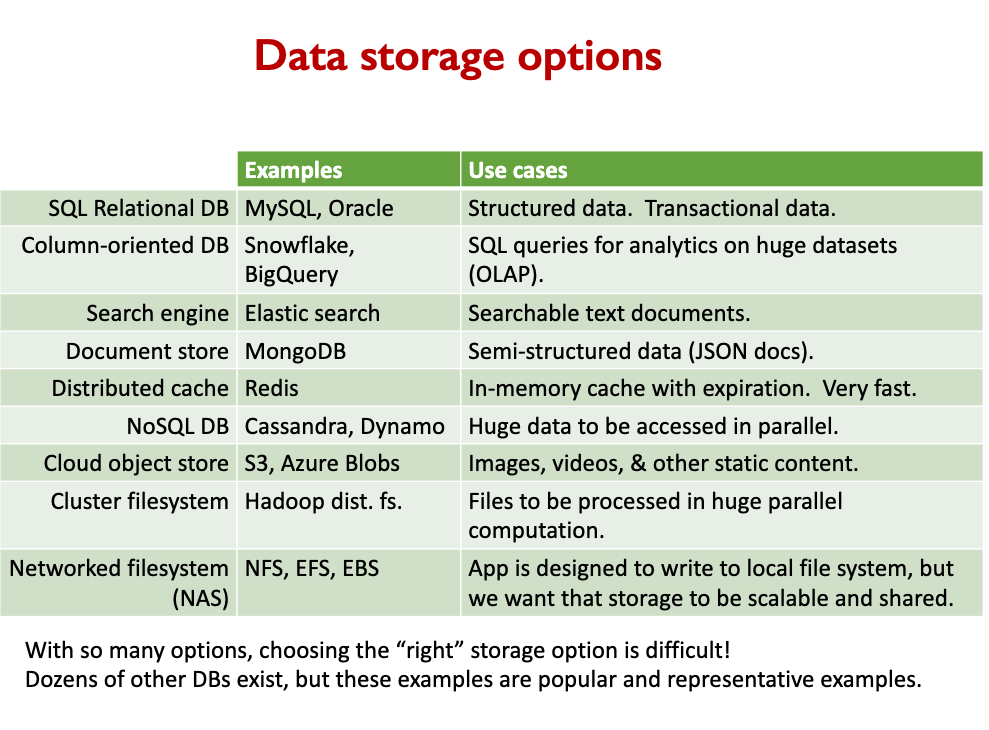

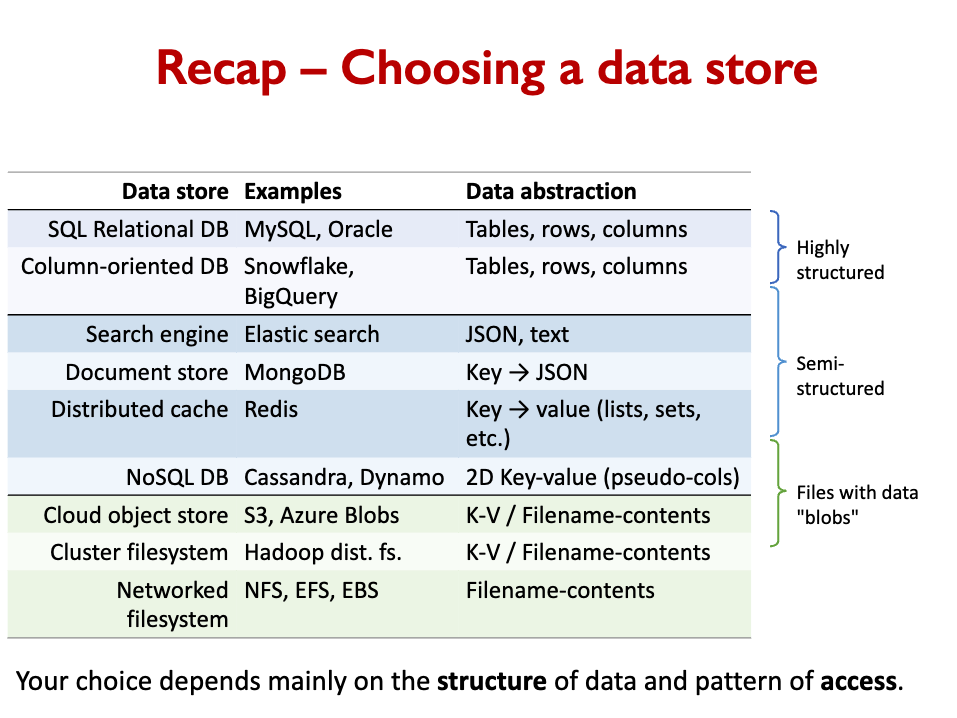

Lots of data storage options

Previously, we saw that:

- Read-Replication and Sharding allow lots of parallel reads and writes.

- This is useful for OLTP applications (Online Transaction Processing)

OLAP (Online Analytics Processing) involves just a few huge queries

- Eg., Over the past three years, in which locations have customers been most responsive to our mailed-to-home coupons?

- Analytics queries involve scanning tables, not using indexes.

- Must be parallelized over many nodes.

- The workload is mostly reads, with occasional importing of new data.

Column-oriented DBs are optimized for SQL analytics workloads

The teacher explains:

- Elasticsearch

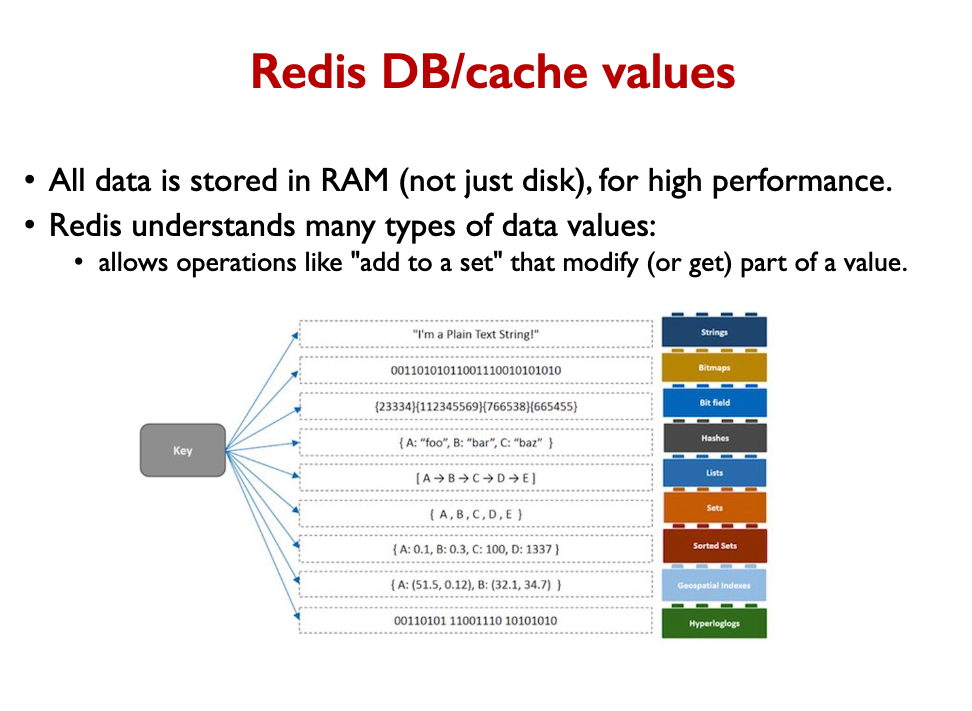

- Redis

- CassandraDB

Distributed Caches

- For example: Redis, Memcached, ElastiCache, Riak

- Originally developed in order to reduce load on relational databases.

- Cache responses to frequent DB requests or other materialized application data.

- Always support timed expiration of data.

- Use the same basic key-value abstraction as NoSQL distributed DBs.

- Store data across many nodes.

- Have the same data consistency issues as NoSQL databases.

- Often optimized to do everything in-memory,

- but most also store data persistently to disk

So… distributed caches and NoSQL databases are very similar

Comparison

NoSQL Database

- Items are permanent/persistent

- All items are stored on disk (some are cached in RAM).

- Scale is the primary goal

Distributed Cache

- Items expire

- Items are stored in RAM (though maybe persisted to disk)

- Speed is the primary goal

- RAM capacity is limited

- Once capacity is reached, start evicting oldest/least-used items.

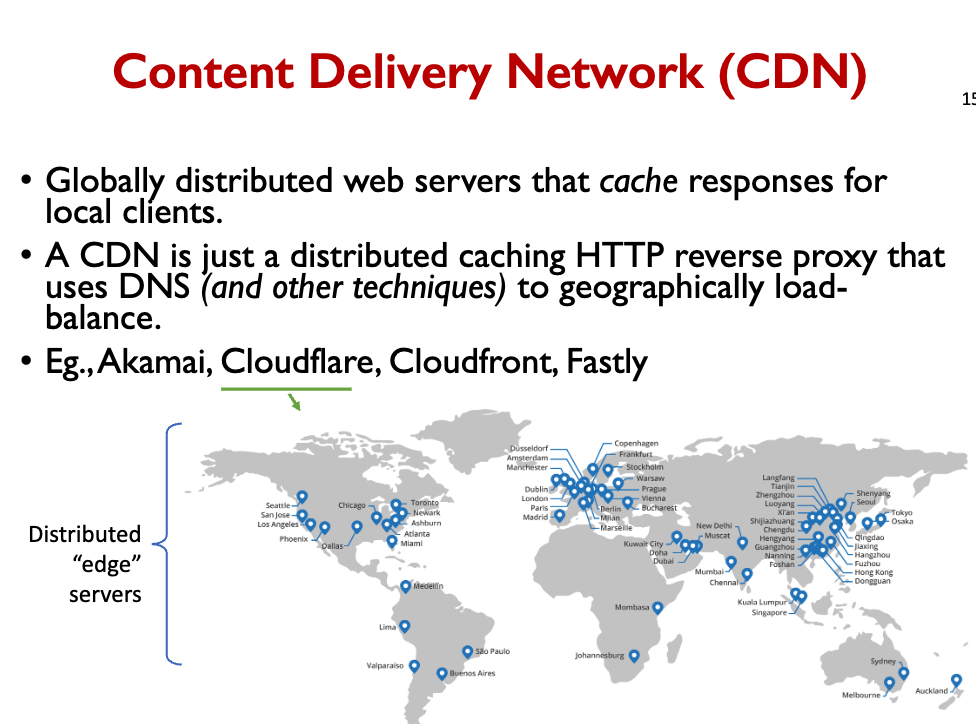

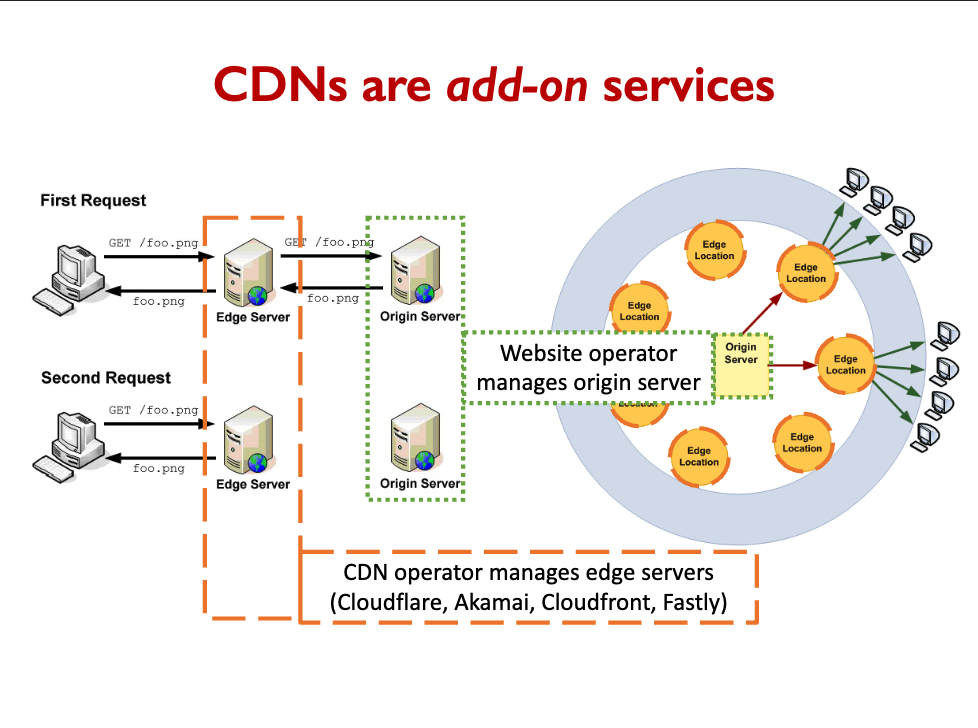

CDN / Reverse Proxy Cache

- Cache common HTTP responses.

- Transparent to the application.

- Just configure the cache’s origin

Redis is a super fast cache

- Example use cases: To store real-time stock prices. Real-time analytics. Leaderboards. Real-time communication. And wherever you used memcached before.

Now, we explore file structures with arbitrary data.

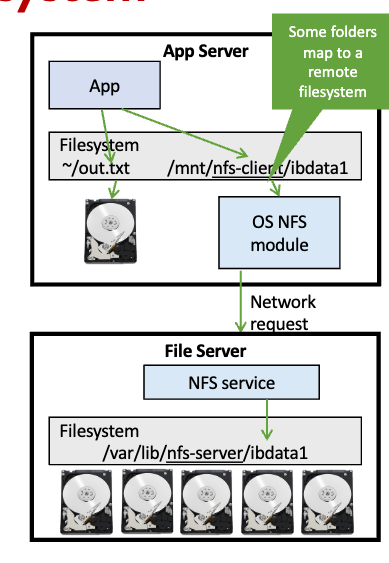

Networked file system

- Eg., NFS (unix), SMB (Windows).

- Managed by the OS.

- Provides a regular filesystem interface to applications by mounting the remote drive.

- Not too useful in modern applications, but may be necessary if your app is built to work directly with a local file system.

- Modern apps should instead interact with cloud-based storage services

Cloud object store (S3)

- A flexible general-purpose file store for cloud apps.

- Managed by cloud provider. Capacity available is “unlimited.”

- Provides a network API for accessing files (maybe REST).

- In other words, app accesses files like a remote database.

- Often provides a public HTTP GET interface to access files:

- Can be easily connected to CDN or urls used directly

Hadoop File System (HDFS)

- When you need to use Hadoop/Spark to do distributed processing.

- Data is too big to move it for analysis.

- Allows data to reside on the same machines where computation happens, thus making processing efficient.

- Hadoop distributed filesystem and its distributed processing tools were designed to work together.

[week5.2]REST API

An API defines how software can be used by other software.

Methods

- GET: to request a data

- POST: to post data to the server to be processed, and perhaps get data back, too.

Less commonly:

- PUT: to create or replace a new document on the server.

- DELETE: to delete a document.

- HEAD: like GET, but just return headers Response codes

- 200 OK: success

- 301 Moved Permanently: redirects to another URL

- 403 Forbidden: lack permission

- 404 Not Found: URL is bad

- 500 Internal Server Error

RESTful API Design style: Paths represent “resources” – data or objects in your system.

- GET reads data

- PUT/POST creates or modifies data

- DELETE deletes data

XML is older than JSON, and now is less common than JSON because many people think XML is unnecessarily complicated.

HTML is an XML document that defines a web page.

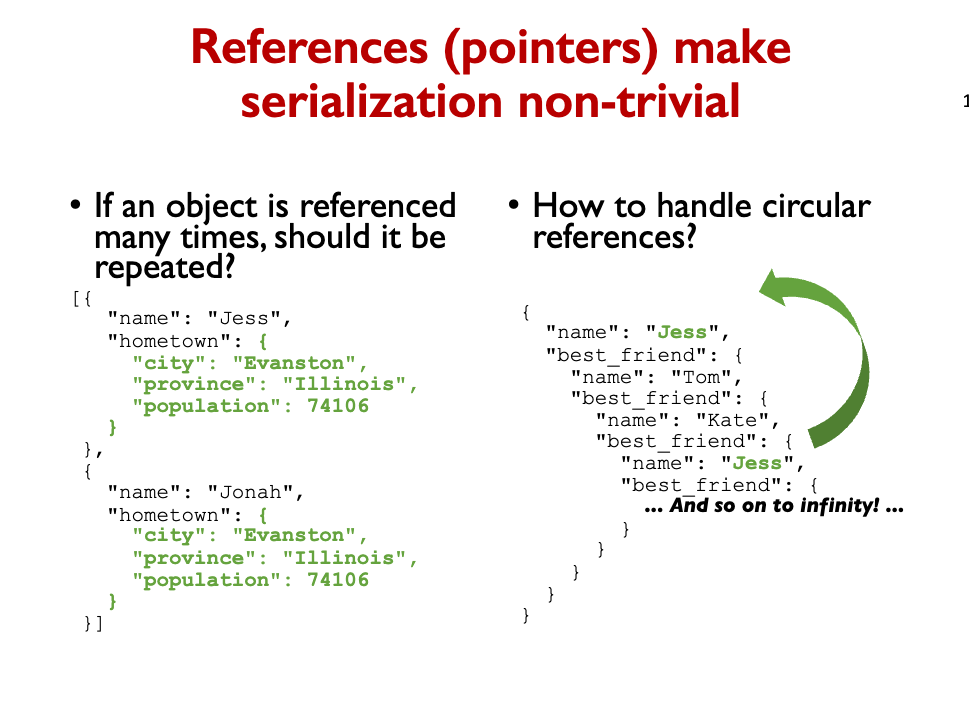

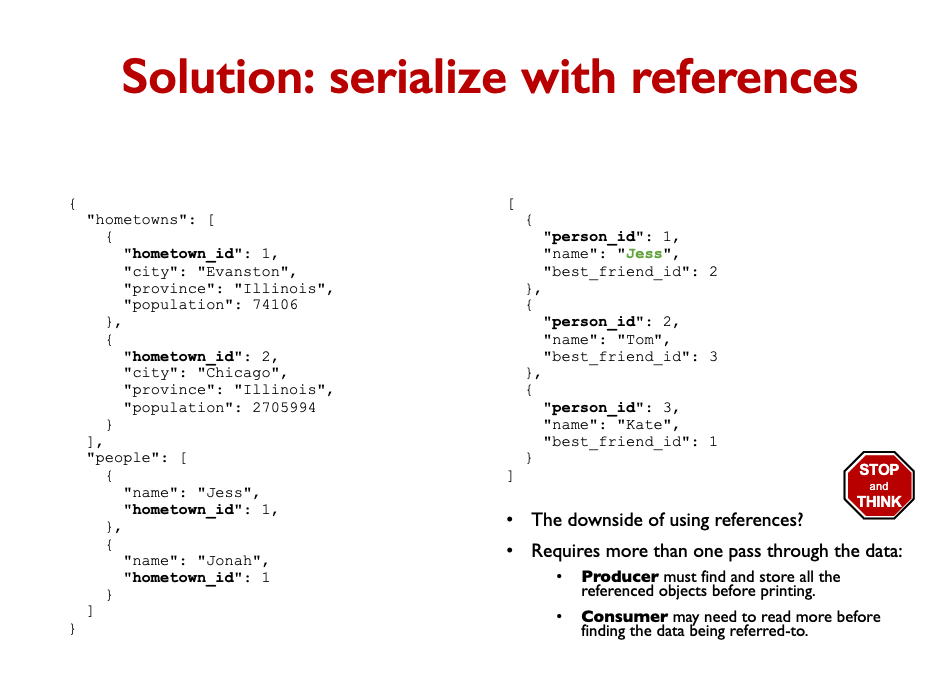

Computer memory is one big array, but programs and databases use references to organize data into complex structures.

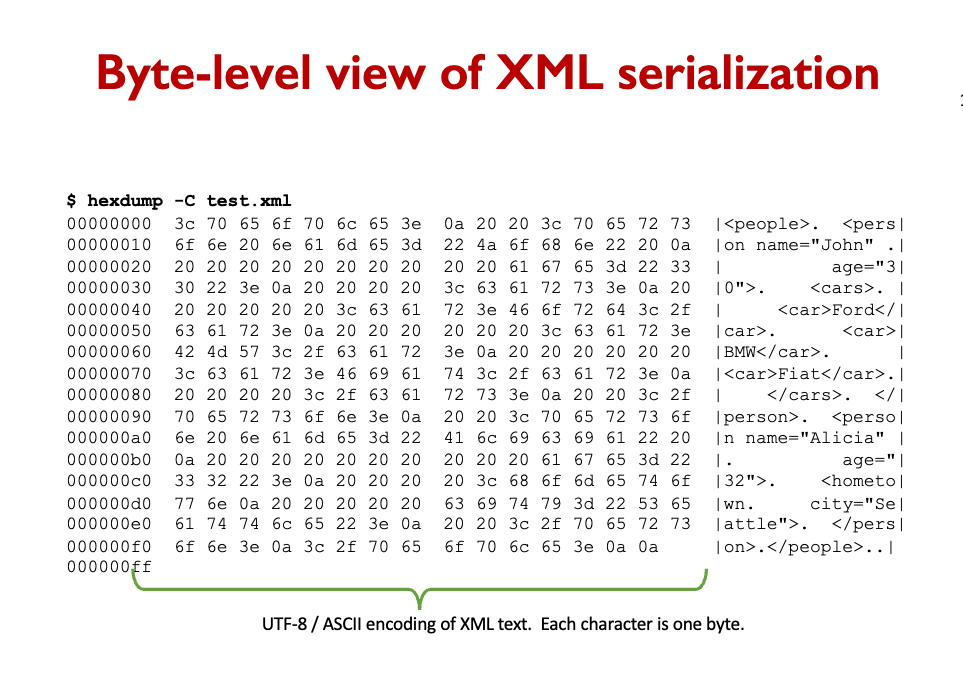

Data files are arrays of bytes. Messages sent over the network are serial streams of bytes. Serialization is converting a data object into a sequence of bytes.

Services are black boxes, exposing network APIs.

- Decouples development of different parts of the system.

- Network APIs define the format and meaning of requests and responses.

- REST is the most popular format for network APIs

- Based on HTTP and uses url, method, response codes, usually JSON bodies.

- JSON is a common data serialization format. XML is also used

[week6] Load Balancing

Last week introduced microservices as an alternative to monolithic design.

We treat each service as a black box.

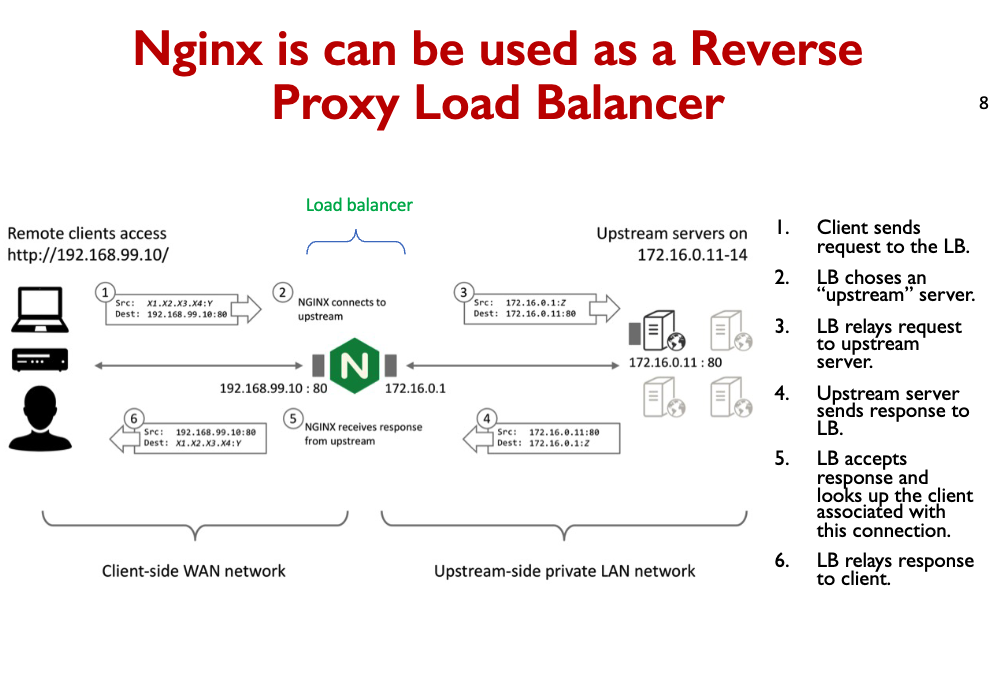

Load balancer is a single point of contact for a service.

- Requests are proxied to a cluster of workers.

- Load balancer does very little work:

- Just forward request/response and remember the request source.

- Load balancer can relay requests for 10s-100s of application servers.

- Makes one IP address appear like one huge machine, but it’s actually a cluster

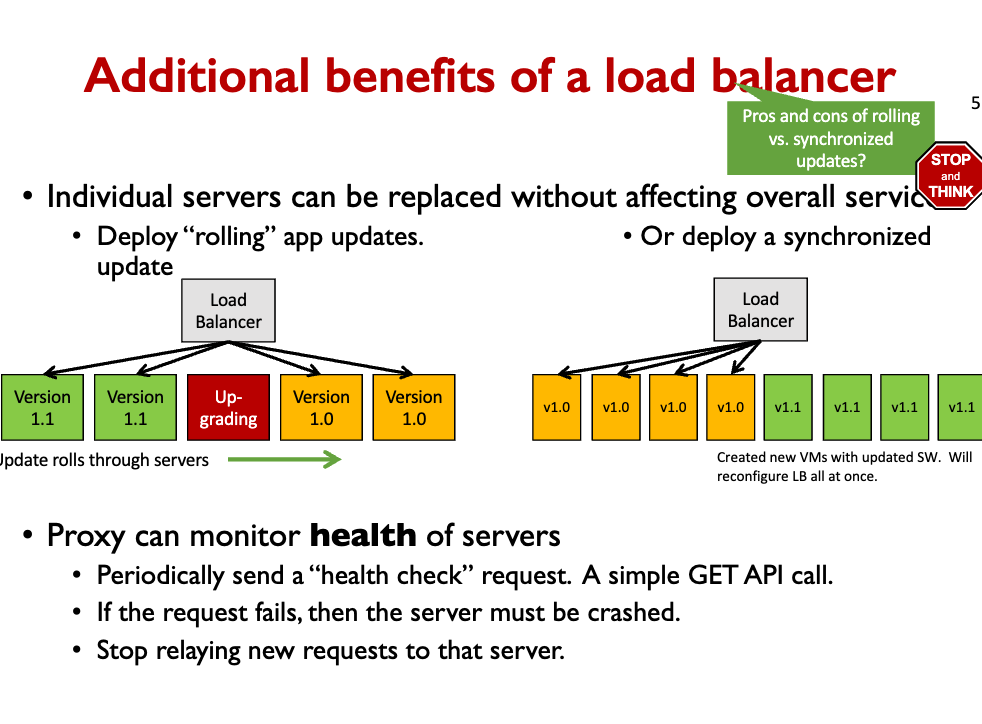

- With a load balancer, you can deploy rolling app updates!!

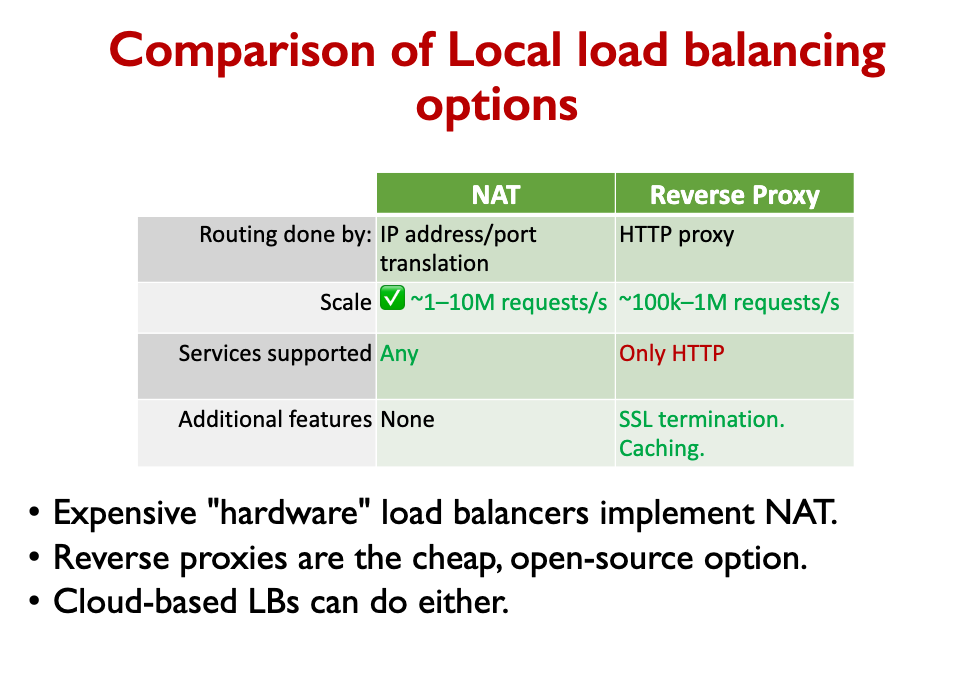

There are 2 types of load balancers:

- Network Address Translation (NAT)

- Works at the TCP/IP layer

- Reverse Proxy

- Works at the HTTP layer

- Ex: Nginx

Main difference between NAT and Reverse Proxy

- NAT forwards packets one-by-one, but remembers which server was assigned to each client.

- Reverse proxies store full requests/response before forwarding.

Load balancer machine is a single point of failure.

- Can only handle ~1M requests/sec.

- Resides in one data center, thus:

- The data center is also a single point of failure.

- Huge services need more than just local load balancers.

- Can clients find a service replica without contacting a central bottleneck?

- We have a distributed service discovery problem.

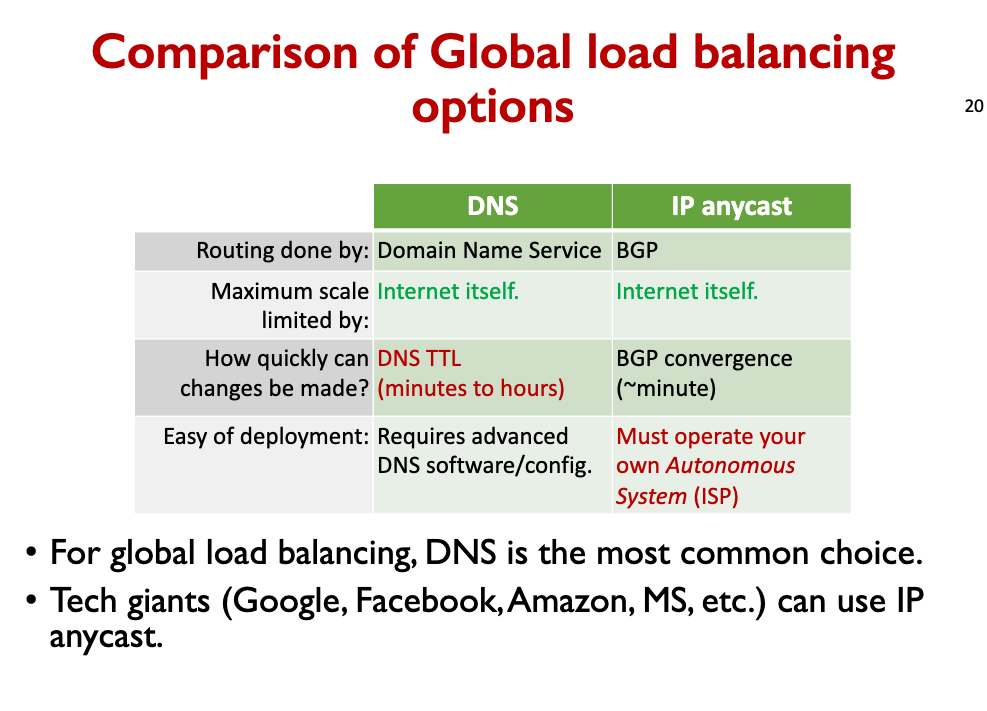

We have the Domain Name Service (DNS) that comes to the rescue.

DNS allows multiple multiple IP addresses per domain name.

- Client can then randomly choose one of the IP addresses.

- Even better, DNS server can store multiple answers, but give different responses to different users (either randomly, or cyclically).

- Remember that DNS is a cached, distributed system.

- UW’s DNS resolver may have been told google.com = 172.217.9.78.

- UofT’s DNS resolver may have been told google.com = 172.217.9.80.

- Different answers are cached and relayed to all the users on the 3 networks.

- Each of those three IP addresses are different reverse-proxying load balancers sitting in front of hundreds of app servers.

Question: Is there a limit to scaling by DNS?

Geographic load balancing with DNS

- More than just balancing load, DNS can also connect user to the closest replica of a service.

- Clever DNS server examines IP address of requester and resolves to the server that it thinks is closest to the client (IP address geolocation).

- In other words, the IP address answers are not just different, but customized for the particular client

Cache control headers

Geographic load balancing with IP Anycast

- 8.8.8.8 is the one IP address for Google’s huge public DNS service.

- Handles >400 billion DNS requests per day! Anyone here use it?

- Cannot rely on DNS for load balancing, because it is the DNS server!

- IP Anycast load balancing is implemented with BGP. (Details in networking class)

- Basic idea is that many of Google’s routers (around the world) all advertise to their neighbors that they can reach 8.8.8.8 in just one hop

- Thus, traffic destined for 8.8.8.8 is sent to whichever of these Googlerouters are closest to the customer.

- Technically, this violates the principle that an IP address is a particular destination, but for DNS this doesn’t matter because it’s UDP and stateless.

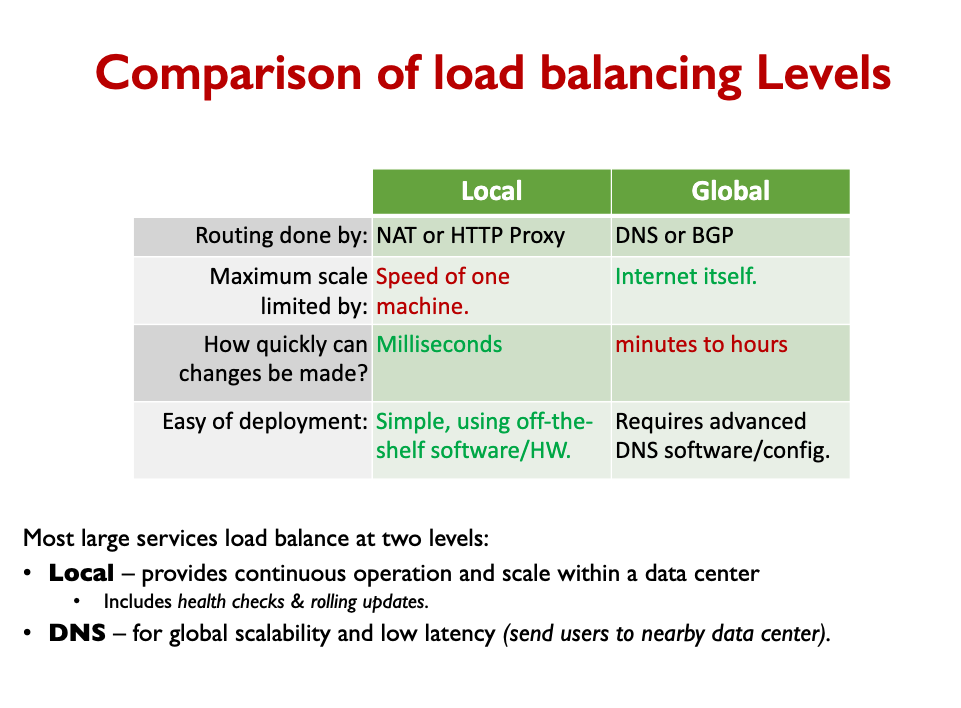

Recap: Load balancers

We have most of the view of a basic scalable architecture! (for services, at least)

- Frontend: Client connects to “the service” via a load balancer.

- Really, the client is being directed to one of many copies of the service.

- Global LBs (DNS and IP anycast) have no central bottlenecks.

- Local LBs (Reverse Proxy or NAT) provide mid-level scaling and continuous operation (health checks & rolling updates).

- Services: Implemented by thousands of clones.

- If the code is stateless then any worker can equally handle any request.

- Data Storage:

- Scaling SQL DB, NoS

[week6] microservices

Nothing much worthy of notetaking here. Understand monolithic architecture vs. microservices architecture.

[Week 6] Basic Architecture Design

[Week8] Architecture modeling

Basic architectural elements

- Components

- Connectors

- Interfaces

- Configurations

- Rationale – reasoning behind decision

Software Performance Models

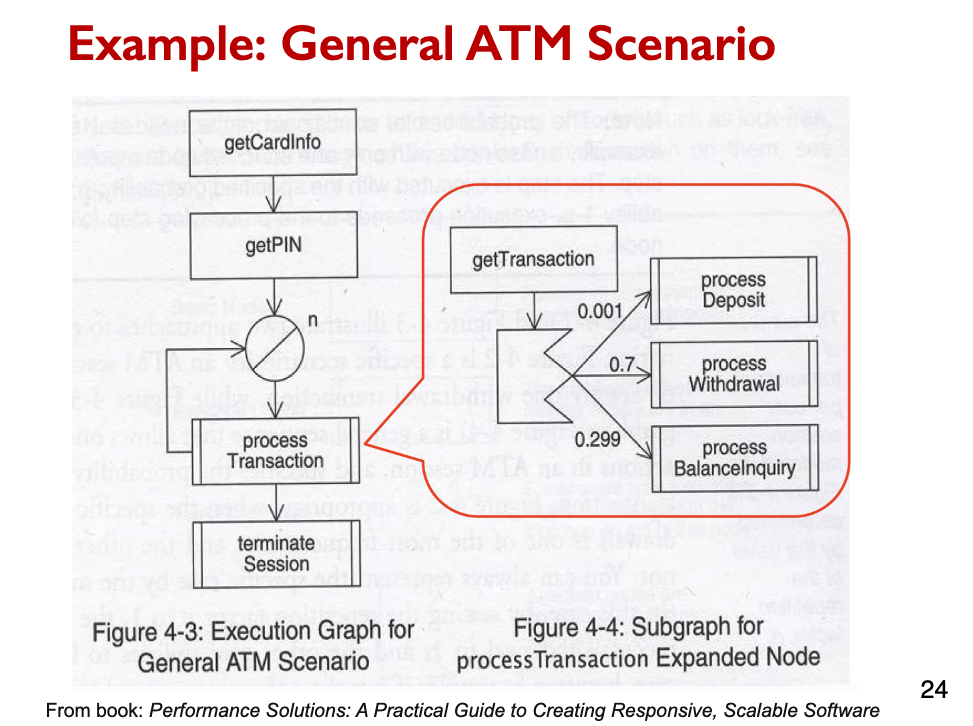

- Software Performance Modeling (SPM)

- Execution graphs

- Queuing Networks

- Machine learning based performance models

What are software performance models?

They are formal representations of the software to capture aspects of performance.

Software performance models can be categorized:

- Software Execution Models (e.g., Execution Graphs)

- System Execution Models (Queuing Networks)

- Machine learning based models

- Others (e.g., Stochastic Petri Nets)

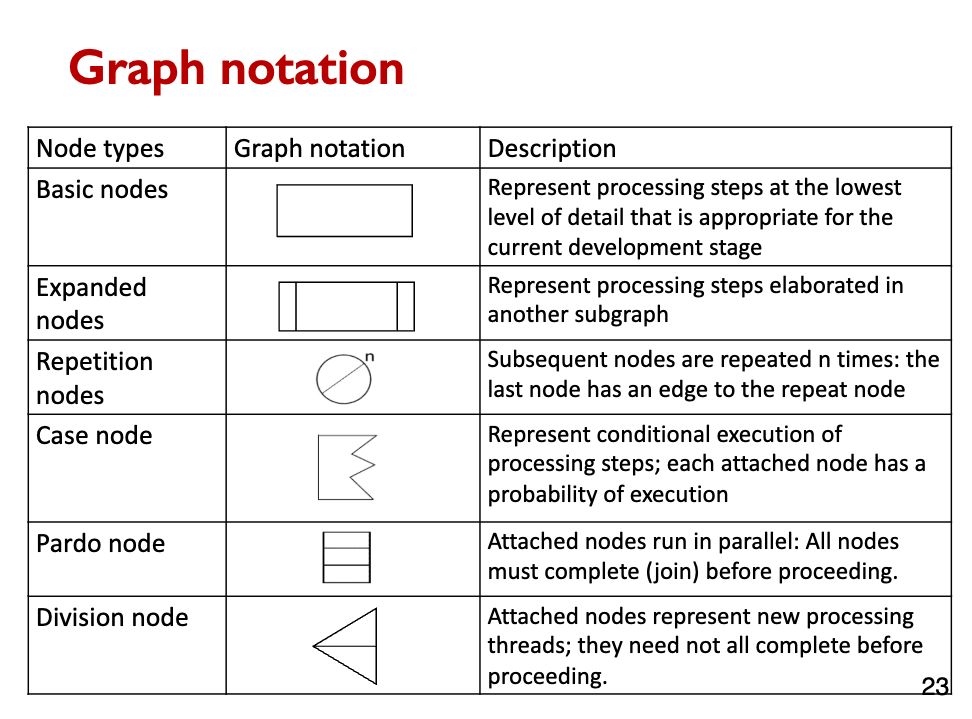

You need to know these symbols

[Week8] Architecture modeling 2

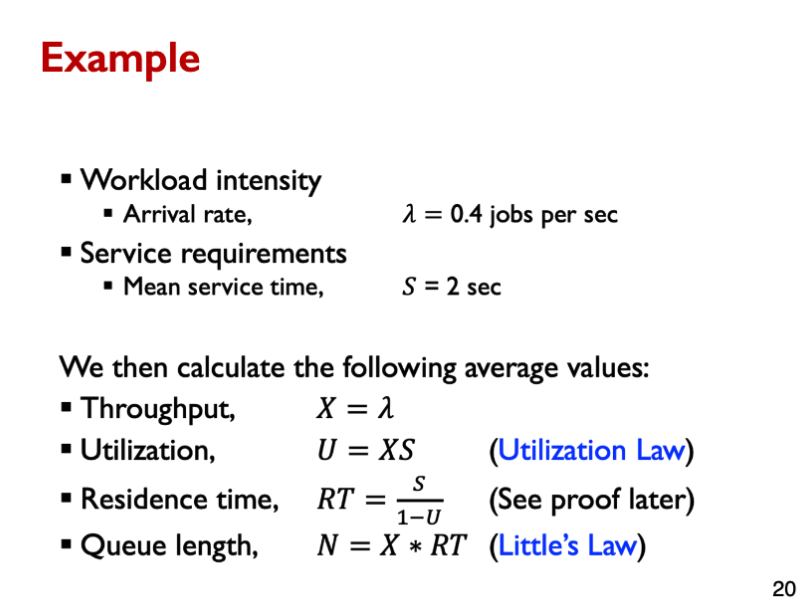

Queuing Network Models (System Execution Models)

Queuing network models (QNM) characterize the software’s performance in the presence of dynamic factors, such as other work loads or multiple users aim to solve the contention for resources.

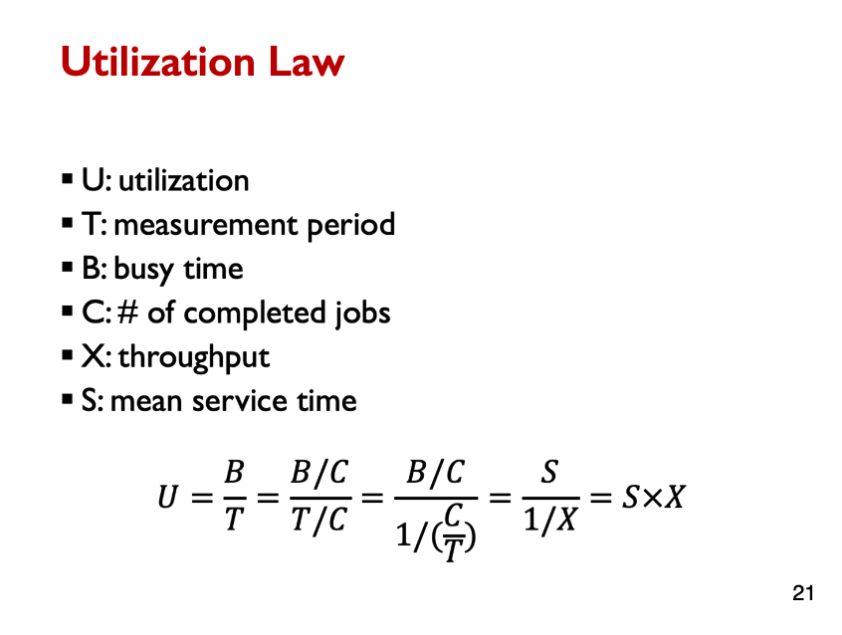

Performance metrics of interest for each server are:

- Residence time, RT: the average time jobs spend in the server, in service and waiting

- Utilization, U: the average percentage of the time the server is busy

- Throughput, X: the average rate at which jobs complete service

- Queue length, N: the average numbers of jobs at the server (receiving service and waiting)

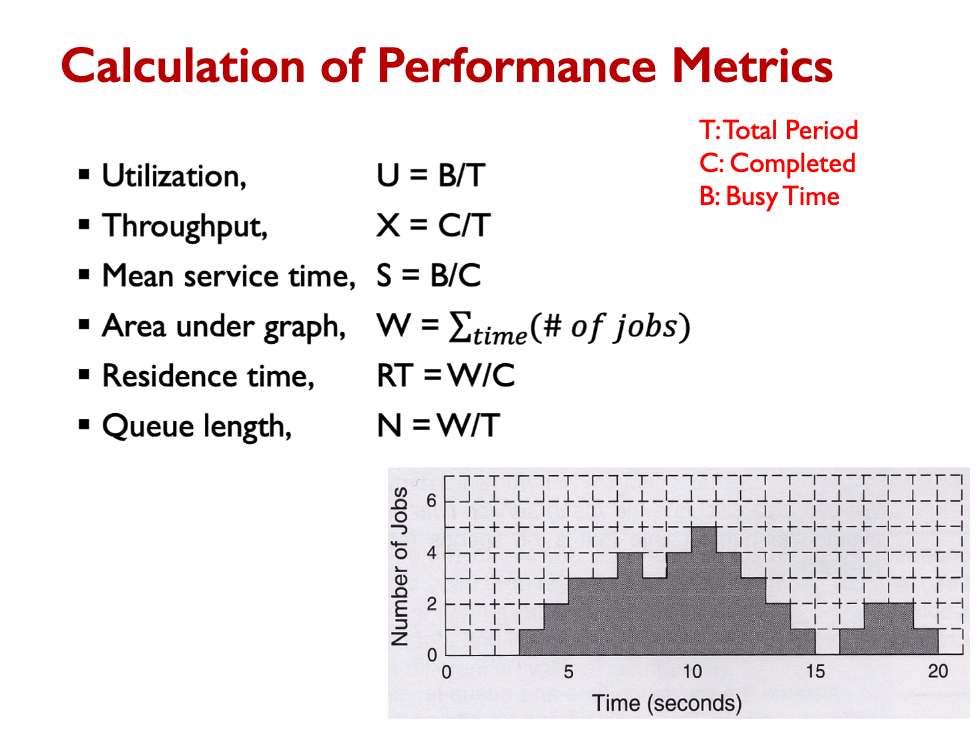

W = area under graph graph throughput = C/ T utilization = B / T mean service time = B / C Residence time = W/C Queue length = W/T

We also have .

[Week9]Twitter Design Exercise

Relational Design:

- Writes are fast/simple.

- Cannot handle lots of data/users.

- Reads are slower. Pre-built Feeds:

- Can use NoSQL, so much more scalable.

- Duplicates tweets.

- Very wasteful for celebrities with millions of followers.

- Writes are slow.

- Celebrities’ tweets may not reach all user feeds within 5 seconds.

- Lots of publication work is done

We’ve denormalized because now there are duplicates. Keys are users, value includes the latest feed data and other items that are commonly needed.

- Hash the key (user) to assign each user’s data to set of replicated storage node

Hybrid Design – Twitter 3.0

- Pre-build feeds for most users.

- But celebrity tweets are stored in a small relational database.

- Fetch a user feed in two steps:

- Get normal-user tweets from pre-built NoSQL feed.

- Query relational database read-replica to get recent tweets from any celebrities that the user is following.

- Celebrity tweets are relatively rare, so a single primary SQL database can handle these writes.

- Many read-replicas handle the reads.

Relational DB: space-efficient, fast writes, but slow reads. NoSQL DB: duplicative, slow writes, but fast reads.

[Week9]ICDE_CaseStudy

Quality attributes that we are looking for:

- Reliability

- Availability

- Portability

- Scalability

- Performance

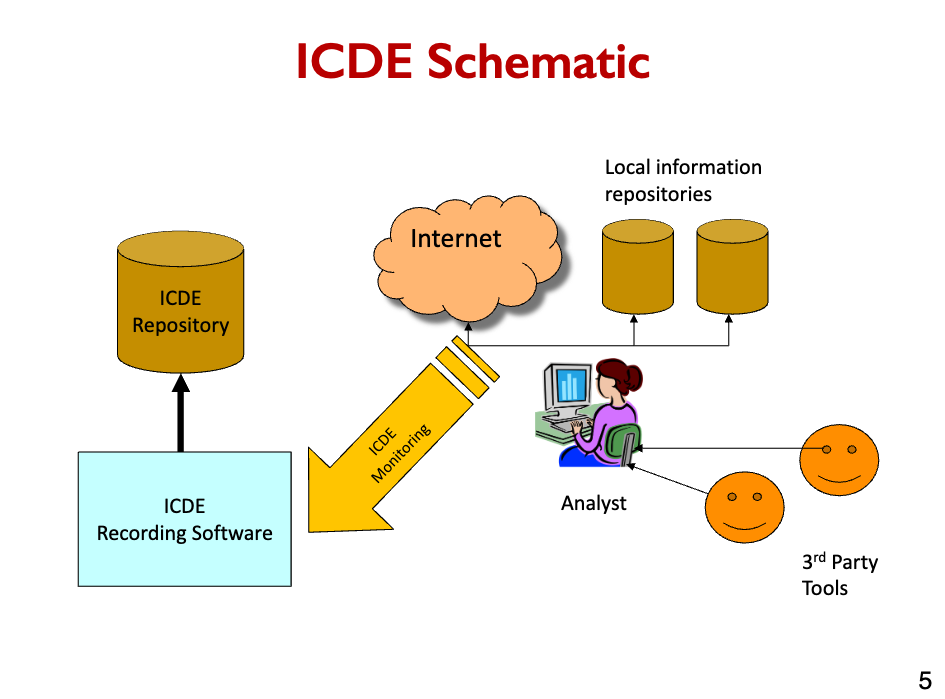

Information Capture and Dissemination Environment (ICDE) is a software system for providing intelligent assistance to

- financial analysts

- scientific researchers

- intelligence analysts

- analysts in other domains

4 common scalability issues in IT systems:

- Request load

- Connections

- Data size

- Deployments

[Week10] Push notification

Yea, how does this stuff actually work? Like your iPhone, how do the notifications work?

Email is a simple way to send notifications.

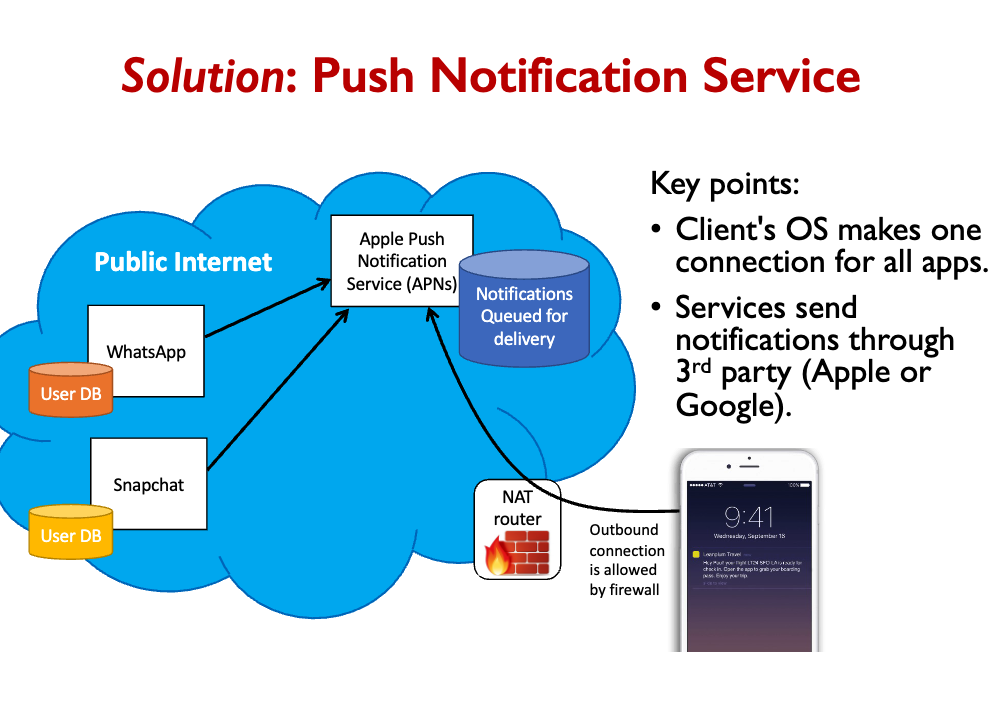

Client cannot implement a REST API because it is not easily reachable. Why?

- IP addresses change when devices move.

- IP addresses are usually private (NATed).

- Device or network may have a firewall.

- Client does not control a DNS server.

- May be powered off, or out of radio contact.

- App may not always be running

So, most services rely on clients initiating all requests themselves, sent to always-listening services with well-known hostnames.

- Server actions are synchronous with client.

- But this is not always sufficient!

- Push Notifications: hacks to send msgs to clients.

PNS: Push Notification Service.

Web browsers were designed to pull data from servers.

- Server implements REST api, browser makes REST request to fetch data.

- Modern applications also desire pushed updates from the service.

- Eg., there is a new message for you, an edit occurred on a shared document, …

- Client can make repeated requests for new data (polling), but this is a poor solution. Requires a tradeoff between latency and network overhead.

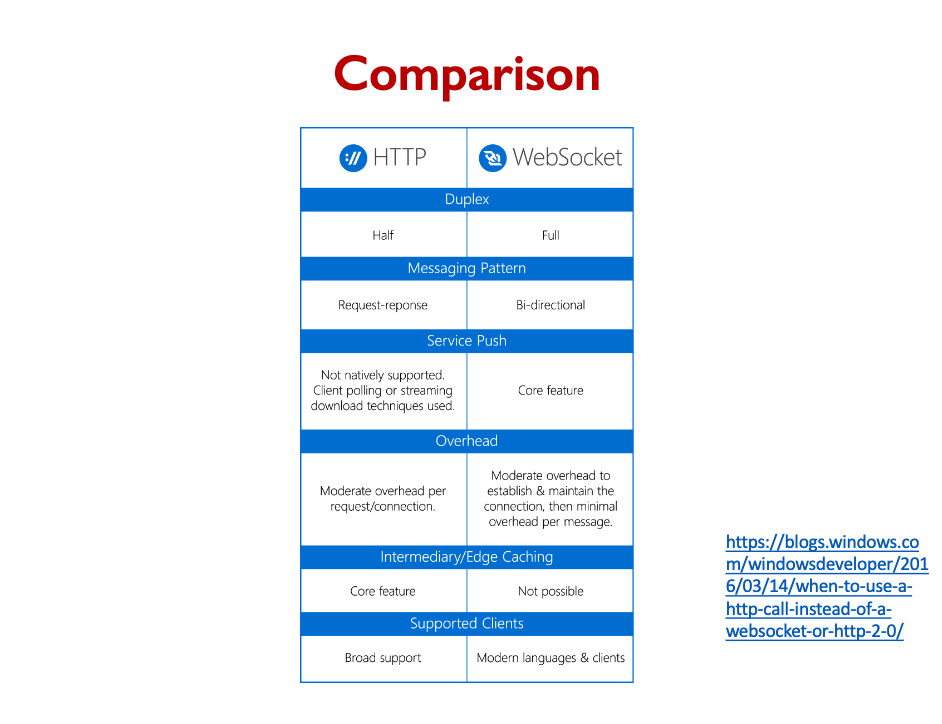

- Websockets are the preferred modern solution.

- Long-polling was the solution prior to websockets.

- Both present some architectural challenges (similar to smartphone PNS).

Websockets

- A Websocket is a long-lived, bi-directional network connection.

- It’s similar to a TCP socket, but it’s available to Javascript code in a browser.

- JS app creates a websocket connection to server.

- Client can send API requests through the websocket.

- Responses comes back through the websocket.

- The connection remains open!

- Server can send messages at any time, independent of client requests.

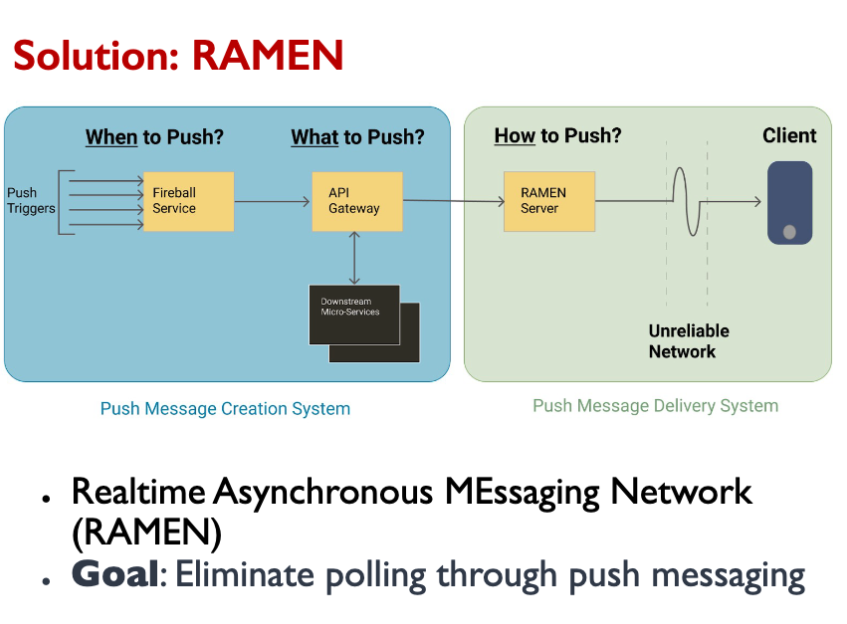

• Traditional web/app design uses a client-server model, but sometimes we want to push data to client instead of client always pulling.

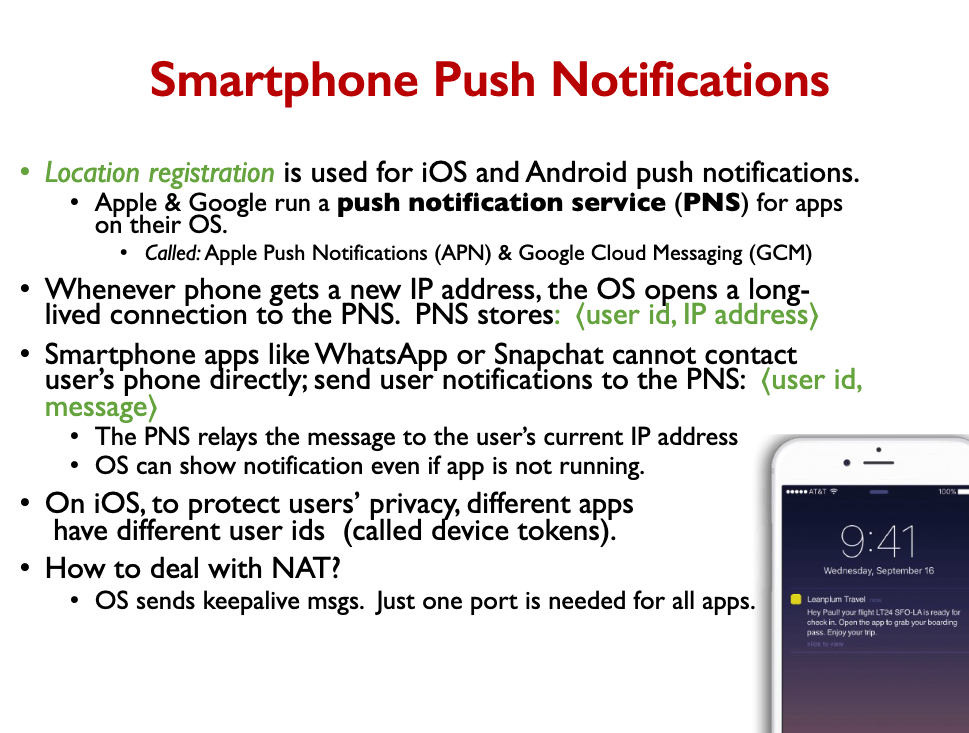

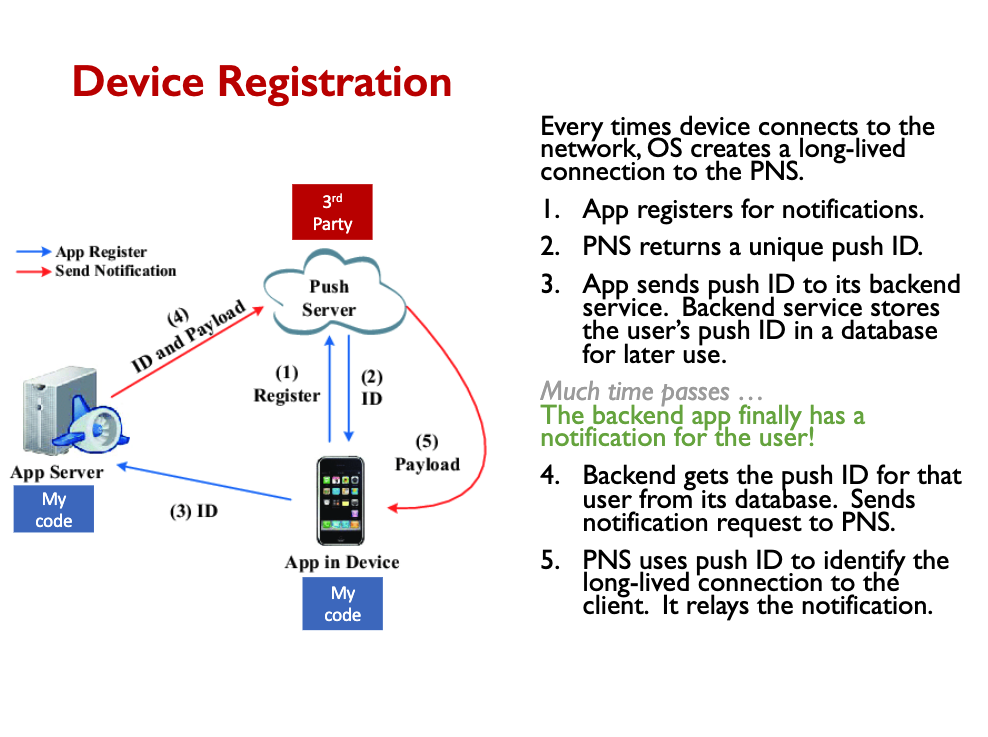

- Asynchronously sending data to clients can be a challenge.

- Mobile OSes have special push notification services.

- Allows a single connection to be shared by all apps on the phone.

- Allows notifications to be delivered even if app is not running.

- Web browsers can use Websockets or Long-polling.

- In both cases, client is connected to one machine and service must somehow relay messages to that connection

[Week10] Push notification case study

With Uber, polling drained battery faster and made app sluggish.

[week11.2]Async

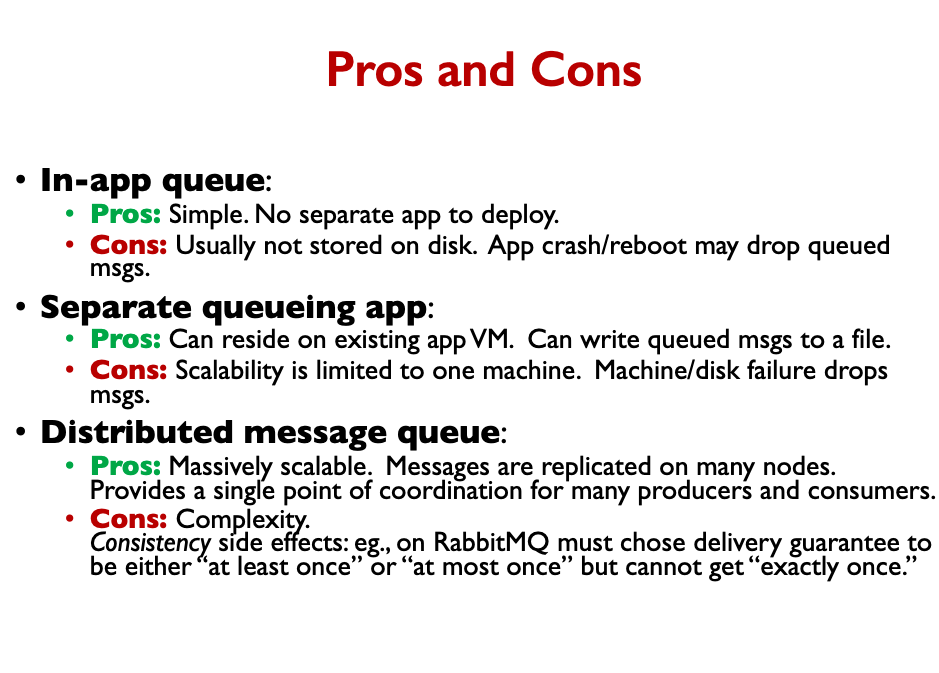

Client provides a callback function (webhook) where it expects to receive a response.

Passive Queues • The queue accepts and stores messages until they are requested

Active Queues

- Queue knows where to send messages.

- Queue actively pushes messages out to subscribers.

- Subscribers must listen for messages

Distributed queue cannot guarantee strict FIFO ordering of message.

Tip

Tip: If multiple messages must be ordered, send one big message

Services can be tightly or loosely coupled (synchronous or async).

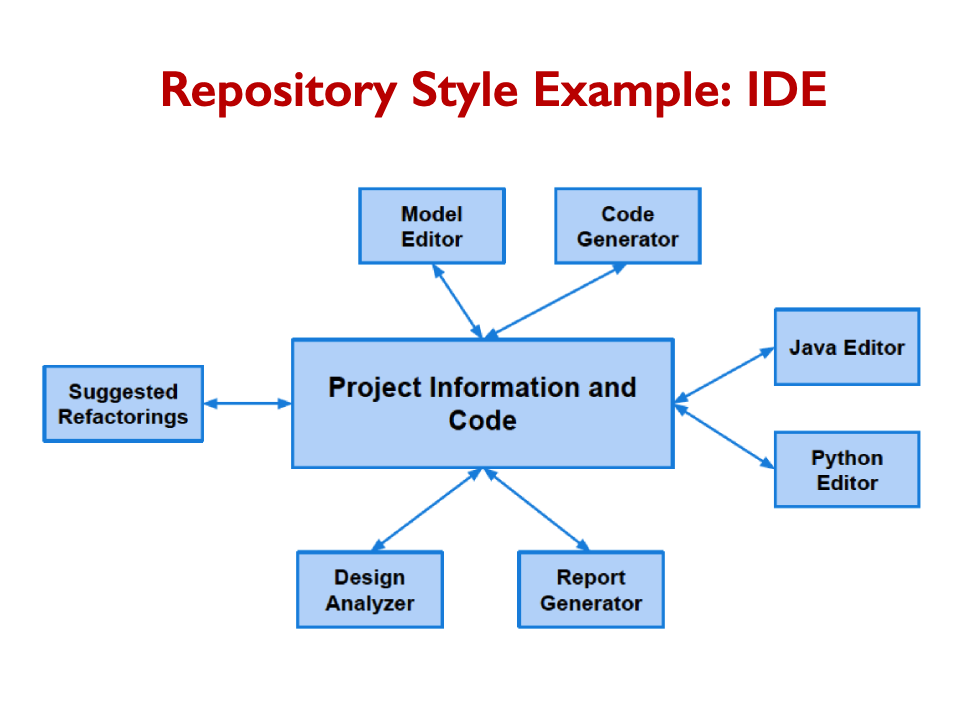

[Week11.1]Arch_styles

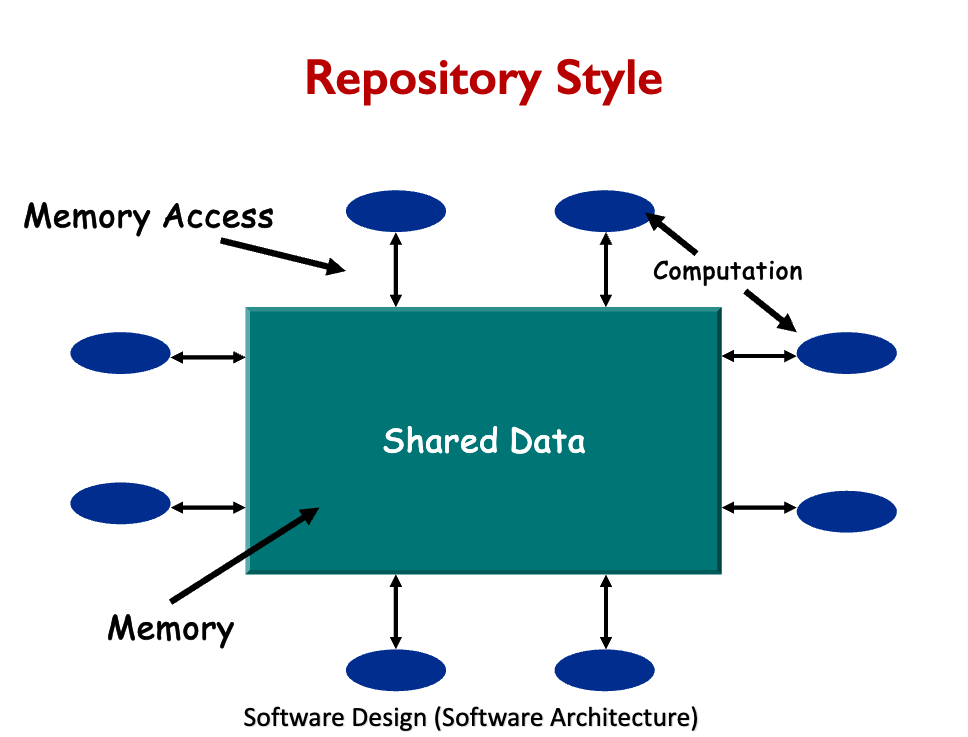

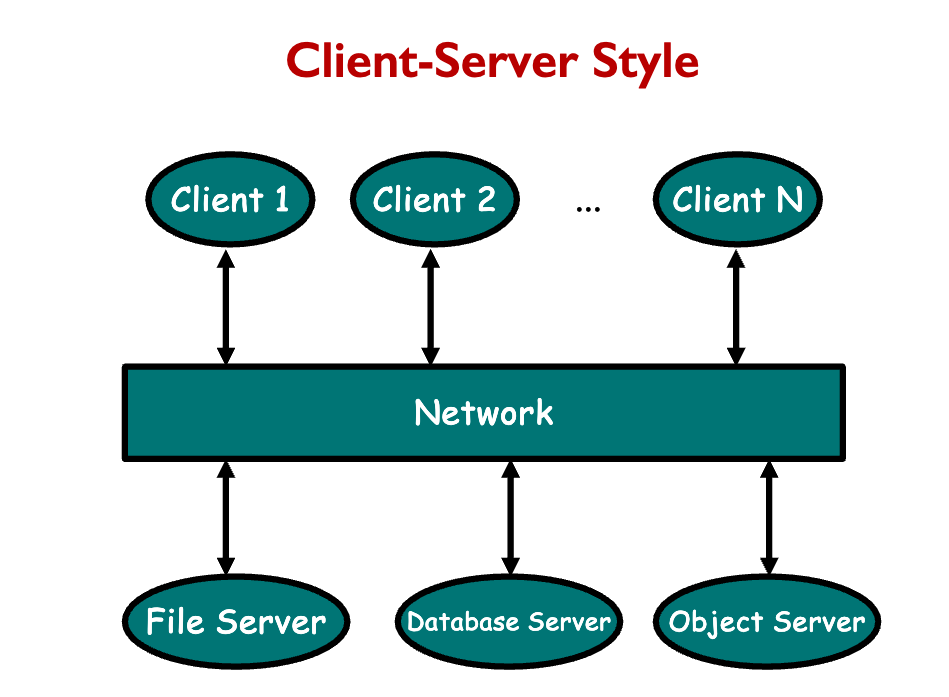

“Abstraction layering and system decomposition provide the appearance of system uniformity to clients, yet allow Helix to accommodate a diversity of autonomous devices. The architecture encourages a client-server model for the structuring of applications.

Repository style disadvantages:

- Must agree on a data model a priority

- Difficult to distribute data.

- Data evolution is expensive

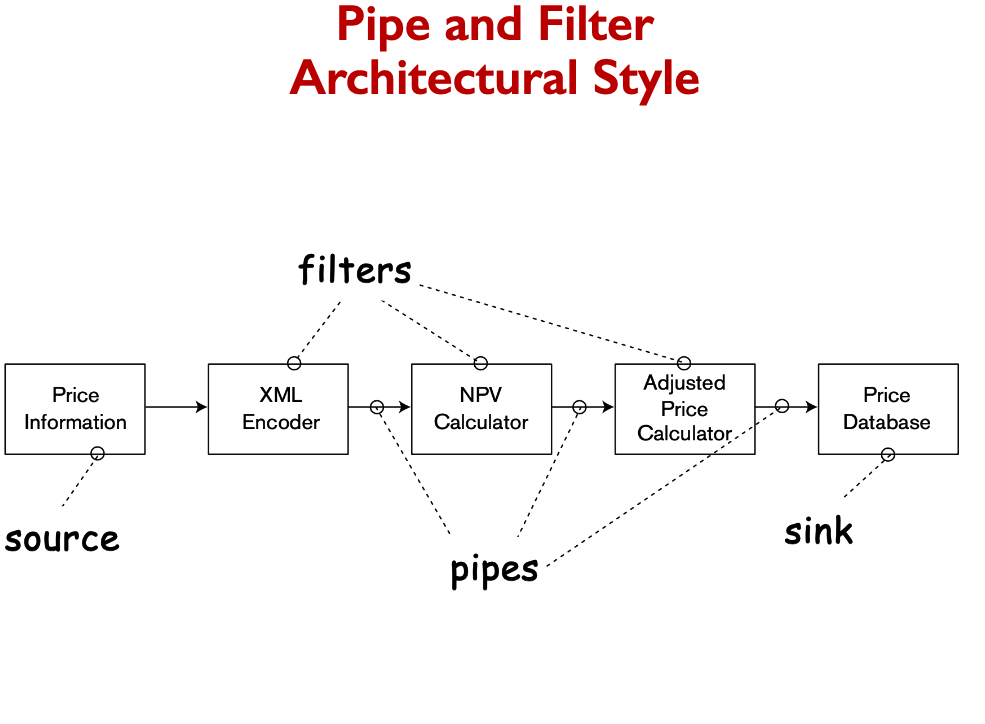

Pipe and Filter Architectural Style

- Filters do not share state with other filters.

- Filters do not know the identity of their upstream or downstream filters

Examples

- Unix Shell Scripts: Provides a notation for connecting Unix processes via pipes.

cat file | grep Erroll | wc -l

Compilers that do things in stages.

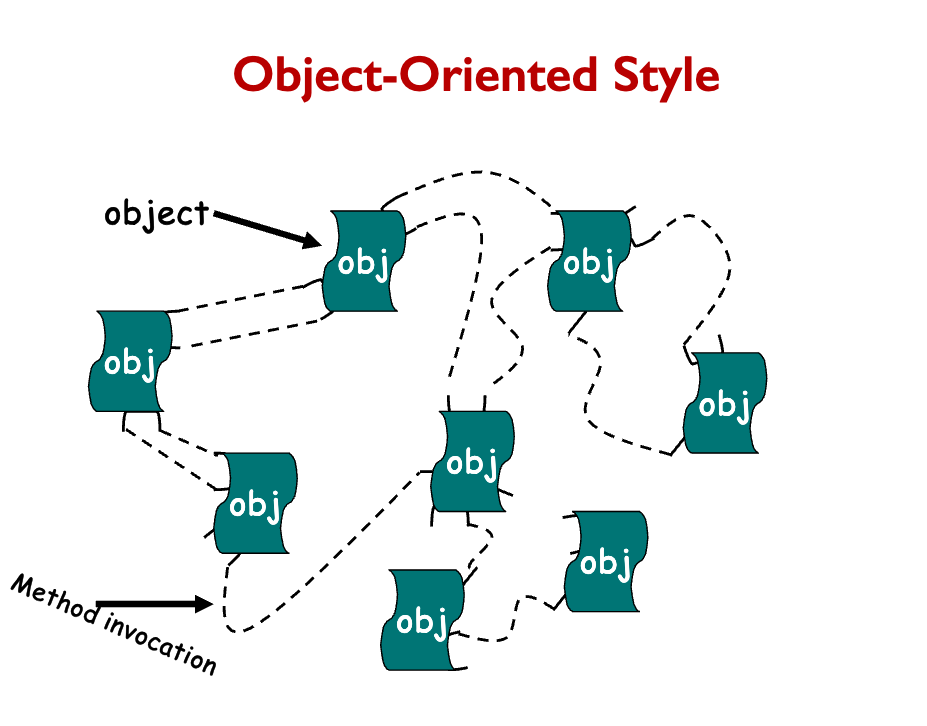

Object-oriented style

- Objects are responsible for preserving the integrity (e.g., some invariant) of the data representation.

- The data representation is hidden from other objects.

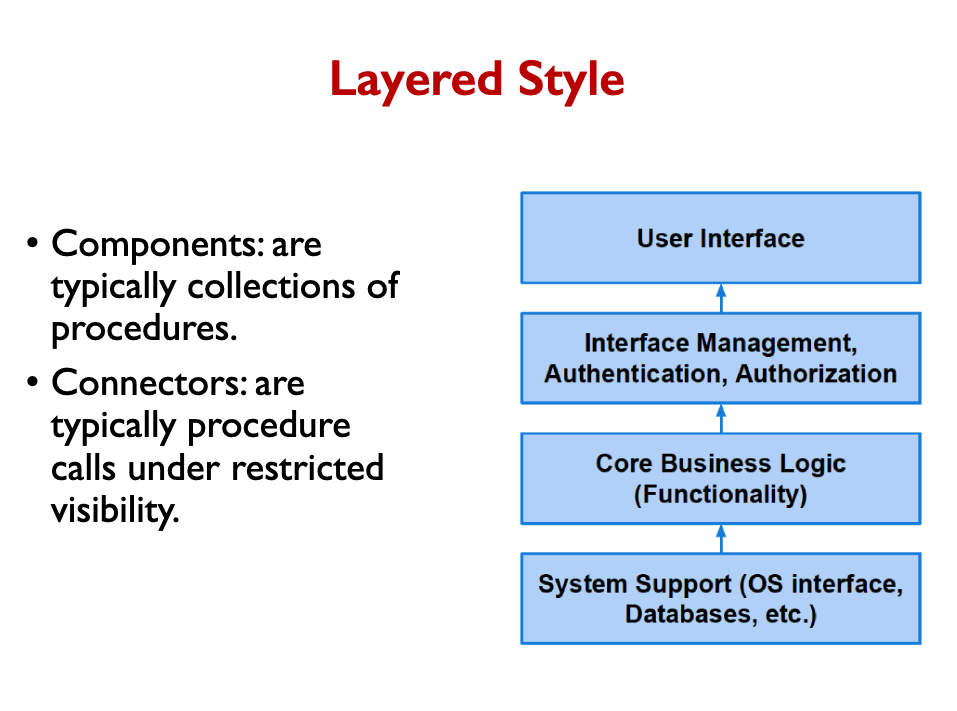

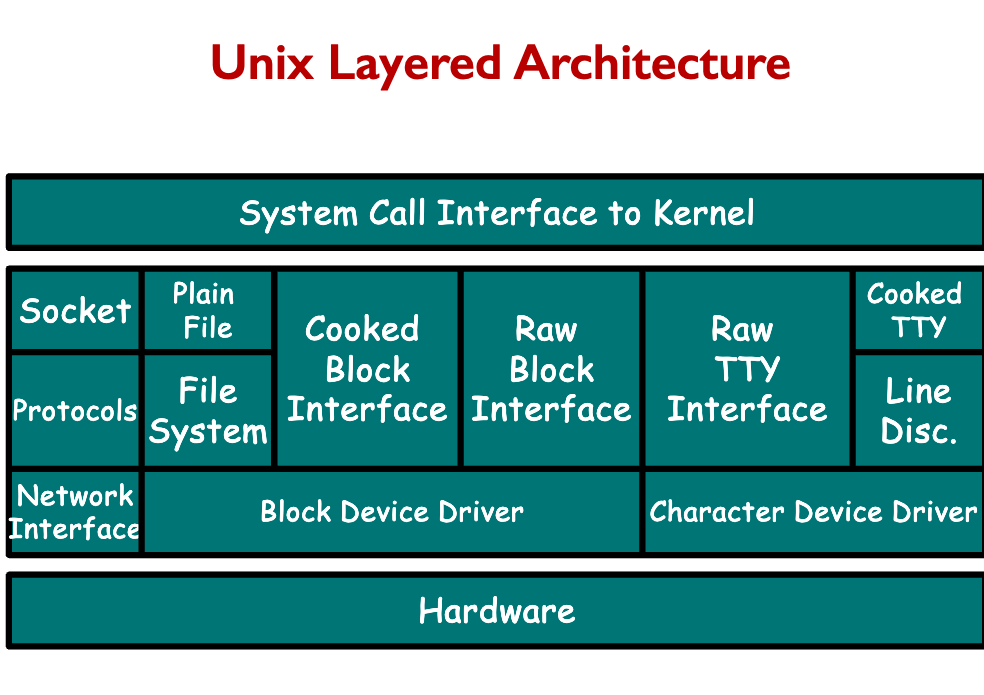

Layered-style

For example

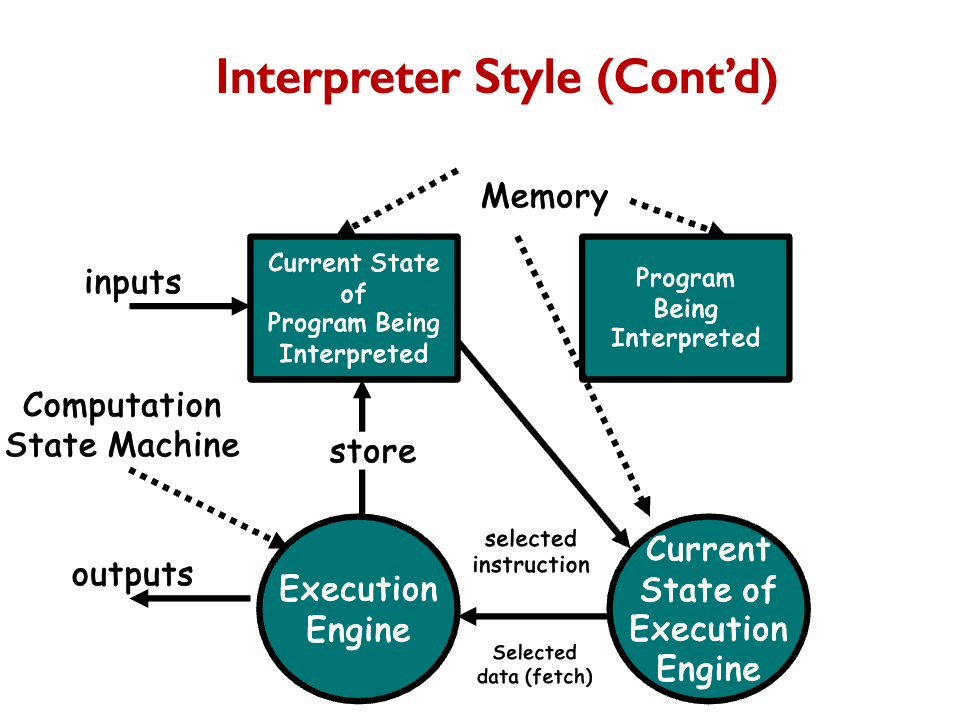

There’s also the interpreter style

- Suitable for applications in which the most appropriate language or machine for executing the solution is not directly available.

Interpreter Style Disadvantages

- Defining, implementing and testing interpreter components non-trivial

- Extra level of indirection slows down execution.

- Java has a JIT (Just In Time) compiler

- emacs 28 has (experimental) support for native compilation

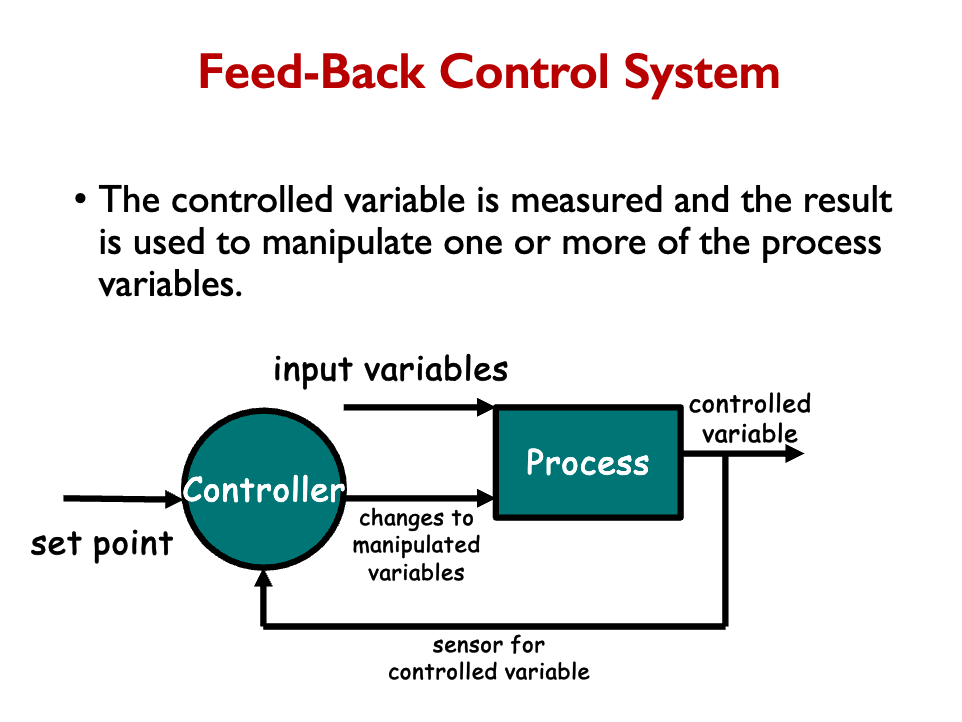

Process-Control Style

[Week12.1]security

Three questions to check before performing the requested action:

- Authenticity

- Integrity

- Authorization

Design Principles for security

- Least Privilege: give each component only the privileges it requires

- Fail-safe Defaults: deny access if explicit permission is absent

- Economy of Mechanism: adopt simple security mechanisms

- Complete Mediation: ensure every access is permitted

- Open Design: do not rely on secrecy for security Separation of Privilege: introduce multiple parties to avoid exploitation of privileges

Least Common Mechanism: limit critical resource sharing to only a few mechanisms Psychological Acceptability: make security mechanisms usable

Defense in Depth: have multiple layers of countermeasures Examples:

- Website protection

- Network security

- Account security

- Physical security

- Administrative controls

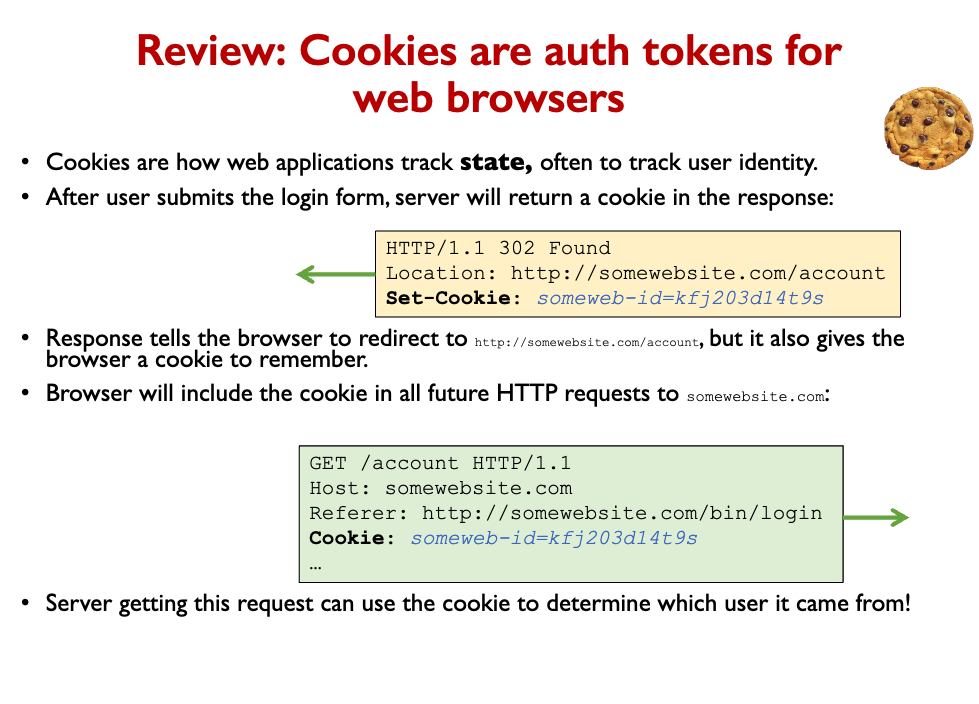

Cookies are auth tokens for web browsers.

[Week12.2]secure_micro_services

TLS Certificate and Verification Process

Network Segmentation in Kubernetes Use of Namespaces:

- Groups cluster into smaller, manageable units

- Each namespace acts like a distinct segment Network Policies in Kubernetes:

- Regulate traffic between namespaces

- Define rules for inbound and outbound traffic

Input Data Validation Risks

SQL Injection Risk:

- Risk from string concatenation in SQL queries Remote Code Execution (RCE) Vulnerability:

- Example: Unsanitized input strings in .NET process invocation

- Potential for attacker-controlled process execution or reverse shell creation Cross-Site Scripting (XSS) and Other Risks:

- Data passed between services without sanitization

- Risks in front-end usage leading to XSS vulnerabilities

Summary Initial Protection Measures:

- Secure cloud environment with a virtual private cloud

- Restrict access through a single, well-guarded entry point Communication Security:

- Implement TLS for protected communication channels

- Prevent unauthorized data reading or modification within the cluster Cluster Segmentation:

- Divide the cluster into smaller segments for controlled communication

- Apply strict pathways for inter-service communication Service-Level Protection:

- Use JWT-based authentication and access-control lists (JSON Web tokens)

- Middleware implementation for reusability and uniformity Hardening and Validation Techniques:

- Docker container hardening

- Essential input data validation and sanitizing

Three Main Simulation Methods

- Tabletop Exercises (TTX)

- Red Team Operations

- Atomic Simulations

[Week13]streaming_batch_leader_follower

Streaming vs Batch Processing

Easy vs. Hard scalability problems Easy:

- Handle independent requests from millions of customers.

- Assume the data is already available to service the requests.

- Trivially parallelizable – use horizontal scaling to divide and conquer. Hard:

- Perform a calculation using all the data from millions of customers.

- Eg., build a recommendation system from Amazon order history.

- Requires lots of coordination, housing data on the compute nodes.

- Must use MapReduce, Spark, etc.

- Luckily, it’s OK if the calculation takes several hours.

In batch processing, the data is already there, and you process it in batches.

Stream Processing is real-time data processing.

Comparing Batch vs Stream

- Key differentiators:

- Purpose

- Data handling

- Processing requirements

- Batch for large data volumes

- Stream for real-time analytics, low latency

- Large data in batches vs streams of continuous data