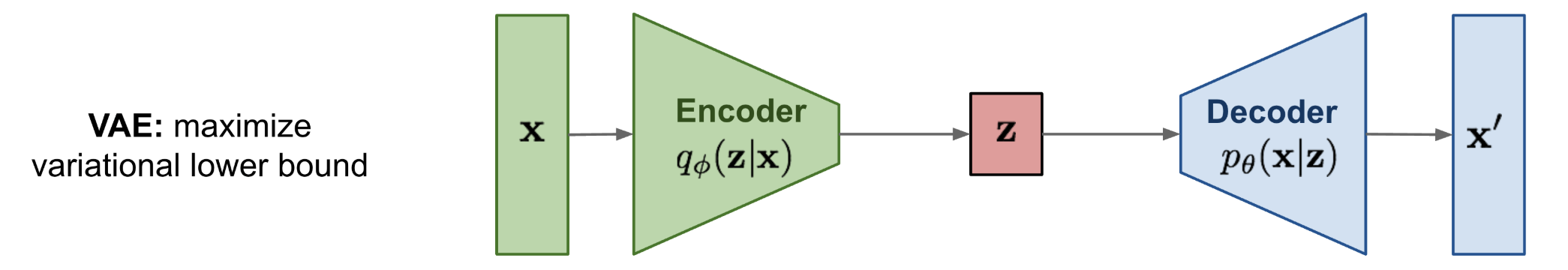

Variational Autoencoder (VAE)

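Latent variable model trained with variational inference.

This is a variant of the Autoencoder whose bottleneck represents each input as a probability distribution (usually a Gaussian) rather than a single fixed vector. That makes it a generative model: sampling from the latent space and decoding produces new data. Sampling is not differentiable, so backprop cannot pass gradients through it directly. The reparameterization trick overcomes this.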

Resources

“VAE is deeply rooted in the methods of variational bayesian and graphical model.”

- #todo I don’t fully understand this

It's basically an Autoencoder, but we add Gaussian noise to the latent variable $z$.

Key difference:

- Regular Autoencoder
  - Input → Encoder → Fixed latent representation → Decoder → Reconstruction
- VAE
  - Input → Encoder → Latent distribution → Sample from distribution (adds Gaussian noise via the reparameterization trick) → Decoder → Reconstruction
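A minimal sketch of the difference, using hand-written 1-D toy encoders in place of neural networks. The functions `ae_encode`, `vae_encode`, `vae_sample` and their constants are hypothetical, chosen only for illustration: the regular autoencoder maps an input to the same latent point every time, while the VAE returns a mean and standard deviation and draws a different $z$ on each call.

```python
import random

# Hypothetical toy "encoders" on a 1-D input, for illustration only.
# A real encoder would be a neural network; these are hand-written functions.

def ae_encode(x: float) -> float:
    """Regular autoencoder: maps x to one fixed latent point."""
    return 2.0 * x  # deterministic: the same x always gives the same z

def vae_encode(x: float):
    """VAE: maps x to the parameters (mean, std) of a Gaussian over z."""
    mu = 2.0 * x
    sigma = 0.5  # assumed constant here; a real encoder predicts it per input
    return mu, sigma

def vae_sample(x: float, rng: random.Random) -> float:
    """Sample z ~ N(mu, sigma^2) as mu + sigma * eps with eps ~ N(0, 1)."""
    mu, sigma = vae_encode(x)
    eps = rng.gauss(0.0, 1.0)
    return mu + sigma * eps

rng = random.Random(0)
x = 1.5
print([ae_encode(x) for _ in range(3)])  # [3.0, 3.0, 3.0]
print([round(vae_sample(x, rng), 3) for _ in range(3)])  # three different values spread around 3.0
```

Averaged over many draws, the VAE samples centre on the predicted mean, so the noise spreads each input over a region of latent space instead of a single point.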

Process

Forward Pass (Encoding → Sampling → Decoding)

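- Encoder:

  Takes input data $x$ and outputs the parameters (mean $\mu_\phi(x)$ and variance $\sigma_\phi^2(x)$) of the latent distribution $q_\phi(z \mid x)$:

  $$q_\phi(z \mid x) = \mathcal{N}\big(z;\ \mu_\phi(x),\ \operatorname{diag}(\sigma_\phi^2(x))\big)$$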

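- Reparameterization Trick:

  Differentiably sample the latent variable $z$ by moving the randomness into an auxiliary noise variable $\epsilon$:

  $$z = \mu_\phi(x) + \sigma_\phi(x) \odot \epsilon, \qquad \epsilon \sim \mathcal{N}(0, I)$$

  Since $\epsilon$ does not depend on the encoder parameters $\phi$, $z$ is a deterministic, differentiable function of $\mu_\phi(x)$ and $\sigma_\phi(x)$, so gradients can flow back into the encoder.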

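- Decoder:

  Reconstruct the data $\hat{x}$ from the sampled latent vector $z$ using the decoder distribution $p_\theta(x \mid z)$:

  $$\hat{x} \sim p_\theta(x \mid z)$$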
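The three forward-pass steps can be sketched end to end in plain Python. This is a toy under stated assumptions, not a reference implementation: the weights are made-up numbers, the encoder is a single linear layer per output, it predicts the log-variance $\log \sigma^2$ rather than $\sigma^2$ (a common convention that keeps $\sigma$ positive), and the decoder is one linear layer followed by a sigmoid. The helper names (`encode`, `reparameterize`, `decode`, `forward`) are hypothetical.

```python
import math
import random

# Toy VAE forward pass in pure Python: 2-D input x, 1-D latent z.
# All weights are made-up numbers for illustration (hypothetical).
# Predicting log-variance instead of variance is an assumed, common convention.

W_MU, B_MU = [0.8, -0.4], 0.1            # encoder head for the mean
W_LOGVAR, B_LOGVAR = [0.2, 0.3], -1.0    # encoder head for log(sigma^2)
W_DEC, B_DEC = [1.5, -0.7], [0.0, 0.2]   # decoder: z -> 2-D output

def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))

def encode(x):
    """Encoder: return (mu, logvar) of q(z | x) = N(mu, exp(logvar))."""
    mu = dot(W_MU, x) + B_MU
    logvar = dot(W_LOGVAR, x) + B_LOGVAR
    return mu, logvar

def reparameterize(mu, logvar, eps):
    """z = mu + sigma * eps. For a fixed eps, z is differentiable in
    mu and logvar: dz/dmu = 1 and dz/dlogvar = 0.5 * sigma * eps."""
    sigma = math.exp(0.5 * logvar)
    return mu + sigma * eps

def decode(z):
    """Decoder: map z to a reconstruction in (0, 1) with a sigmoid."""
    return [1.0 / (1.0 + math.exp(-(w * z + b))) for w, b in zip(W_DEC, B_DEC)]

def forward(x, rng):
    mu, logvar = encode(x)
    eps = rng.gauss(0.0, 1.0)  # eps ~ N(0, 1), drawn outside the "network"
    z = reparameterize(mu, logvar, eps)
    return decode(z), mu, logvar, z

x = [1.0, 0.5]
x_hat, mu, logvar, z = forward(x, random.Random(42))
print("mu =", mu, "logvar =", logvar, "z =", z, "x_hat =", x_hat)
```

Because `eps` is drawn outside `reparameterize`, $z$ is an ordinary deterministic function of `mu` and `logvar` once `eps` is fixed, which is what lets backprop compute gradients for the encoder. In a framework such as PyTorch these derivatives would come from autograd rather than being written by hand.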

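Loss Function (Negative ELBO):

Optimize the encoder parameters $\phi$ and decoder parameters $\theta$ by minimizing:

$$\mathcal{L}(\theta, \phi; x) = -\mathbb{E}_{q_\phi(z \mid x)}\big[\log p_\theta(x \mid z)\big] + D_{\mathrm{KL}}\big(q_\phi(z \mid x) \,\|\, p(z)\big), \qquad p(z) = \mathcal{N}(0, I)$$

where $q_\phi(z \mid x)$ is the encoder's distribution, $p_\theta(x \mid z)$ the decoder's, and $p(z)$ the prior. The first term is the reconstruction error; the second keeps the latent distributions close to the prior.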
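A sketch of the negative ELBO for a single data point, with the expectation estimated from one sample of $z$. The Bernoulli decoder, which turns the reconstruction term into binary cross-entropy, is an assumption suited to data in $[0, 1]$; for real-valued data a Gaussian decoder (which, with a fixed variance, gives a squared-error term up to constants) is the usual alternative. The KL term uses the closed form for a diagonal Gaussian against $\mathcal{N}(0, I)$: $\tfrac{1}{2}\sum_j \big(\mu_j^2 + \sigma_j^2 - \log \sigma_j^2 - 1\big)$. The function names are hypothetical.

```python
import math

# Negative ELBO for one data point, estimated with a single sample of z.
# Assumptions (not fixed by the notes): prior p(z) = N(0, I),
# encoder q(z|x) = N(mu, diag(exp(logvar))), and a Bernoulli decoder,
# so -log p(x|z) is the binary cross-entropy between x and x_hat.

def bce(x, x_hat, tiny=1e-7):
    """Reconstruction term: -log p(x | z) for a Bernoulli decoder."""
    total = 0.0
    for xi, pi in zip(x, x_hat):
        pi = min(max(pi, tiny), 1.0 - tiny)  # clamp to avoid log(0)
        total -= xi * math.log(pi) + (1.0 - xi) * math.log(1.0 - pi)
    return total

def kl_to_standard_normal(mu, logvar):
    """Closed-form KL( N(mu, diag(exp(logvar))) || N(0, I) ), summed over dims."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0 for m, lv in zip(mu, logvar))

def negative_elbo(x, x_hat, mu, logvar):
    """Loss = reconstruction (BCE) + KL regularizer. Minimize this."""
    return bce(x, x_hat) + kl_to_standard_normal(mu, logvar)

# Usage with made-up numbers: x is binary data, x_hat the decoder output.
x = [1.0, 0.0, 1.0]
x_hat = [0.9, 0.2, 0.7]
mu, logvar = [0.5, -0.3], [-0.2, 0.1]
print(negative_elbo(x, x_hat, mu, logvar))
```

The KL term is zero only when `mu` and `logvar` are all zeros, i.e. when the encoder outputs exactly the prior. It pulls every latent distribution toward $\mathcal{N}(0, I)$, while the reconstruction term pushes them apart so that different inputs stay distinguishable.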