Unsupervised Learning, Dimensionality Reduction

Autoencoders

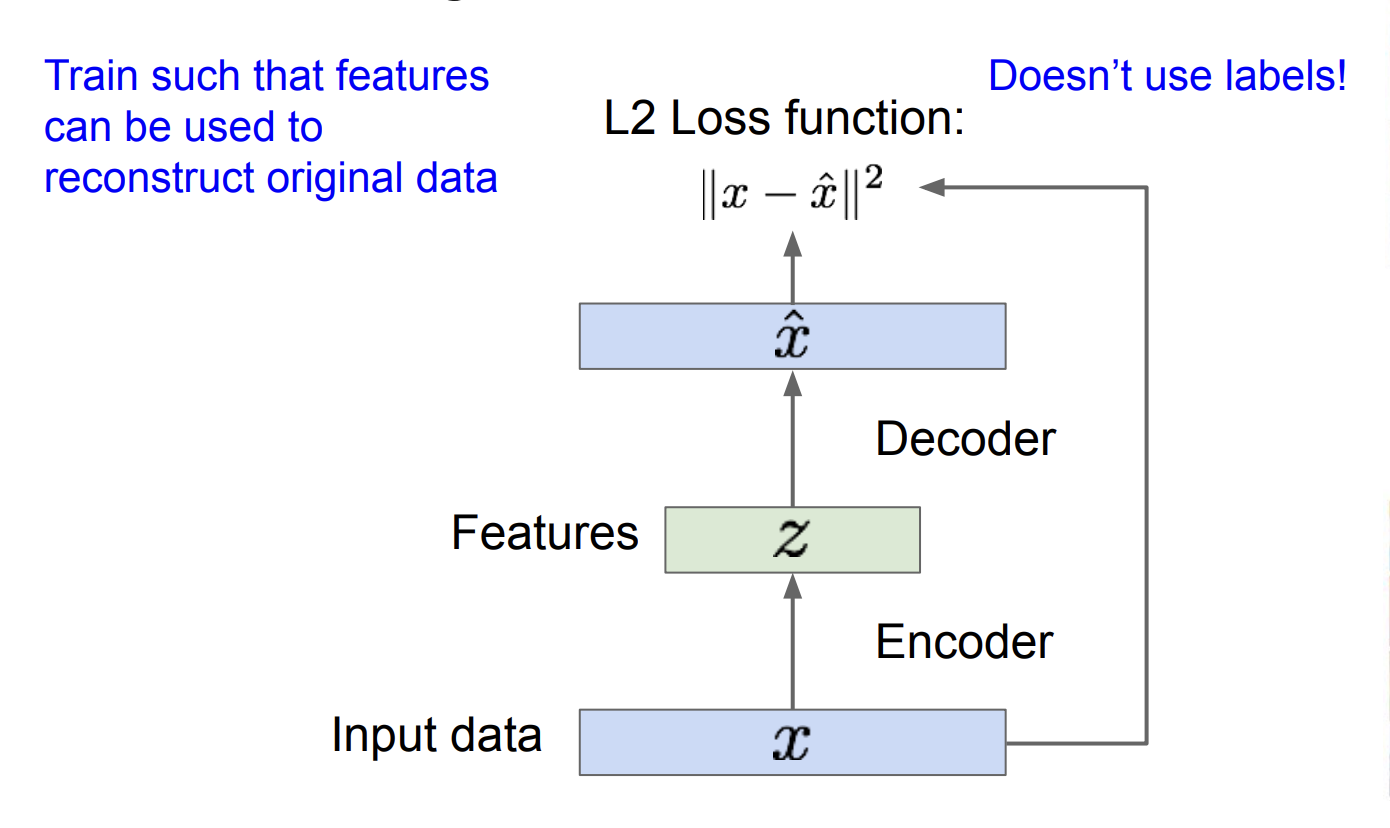

An autoencoder is a type of neural network used to learn data encodings (compact representations) in an unsupervised manner: no labels are needed, because the training target is the input itself.

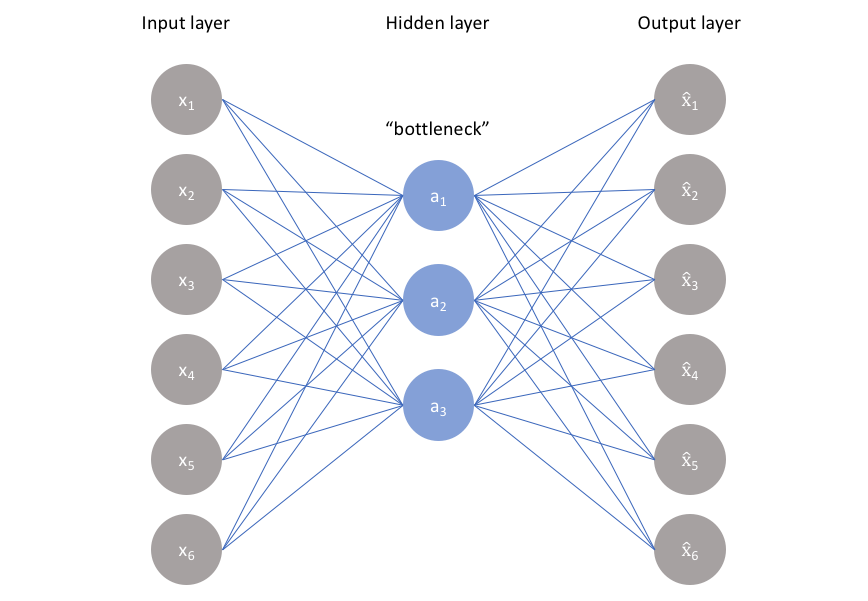

In its simplest form, it is an MLP that learns to compress and then reconstruct its input by passing the data through a lower-dimensional bottleneck.
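To make the bottleneck idea concrete, here is a minimal NumPy sketch of an untrained MLP autoencoder's forward pass. The layer sizes (784, 128, 32, 128, 784 units), the ReLU activations and the random weights are illustrative assumptions, not values taken from the resources below; the point is only how the data is squeezed into a 32-value code and then expanded back to its original size.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical layer sizes: a 784-dim input (e.g. a flattened 28x28 image),
# one 128-unit hidden layer on each side, and a 32-unit bottleneck.
INPUT_DIM, HIDDEN_DIM, CODE_DIM = 784, 128, 32

def dense(n_in, n_out):
    """Randomly initialised weights and zero biases for one fully connected layer."""
    return rng.normal(scale=0.05, size=(n_in, n_out)), np.zeros(n_out)

enc1, enc2 = dense(INPUT_DIM, HIDDEN_DIM), dense(HIDDEN_DIM, CODE_DIM)
dec1, dec2 = dense(CODE_DIM, HIDDEN_DIM), dense(HIDDEN_DIM, INPUT_DIM)

def relu(x):
    return np.maximum(x, 0.0)

def encode(x):
    """Compress x from INPUT_DIM down to CODE_DIM values (linear bottleneck layer)."""
    h = relu(x @ enc1[0] + enc1[1])
    return h @ enc2[0] + enc2[1]

def decode(z):
    """Expand a CODE_DIM code back to INPUT_DIM values."""
    h = relu(z @ dec1[0] + dec1[1])
    return h @ dec2[0] + dec2[1]

x = rng.random((16, INPUT_DIM))       # a batch of 16 random stand-in inputs
z = encode(x)                         # bottleneck codes
x_hat = decode(z)                     # reconstruction (untrained, so not yet accurate)
print(x.shape, z.shape, x_hat.shape)  # (16, 784) (16, 32) (16, 784)
```

Training would then adjust these weights so that `x_hat` moves closer to `x`.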

Resources

- https://lilianweng.github.io/posts/2018-08-12-vae/

- https://www.v7labs.com/blog/autoencoders-guide

- Video: https://www.youtube.com/watch?v=bIaT2X5Hd5k&ab_channel=DigitalSreeni

Autoencoders consist of 3 parts:

- Encoder: Compresses the input data into a lower-dimensional representation.

- Bottleneck (contains the “features”): Holds the compressed feature representation; it is the most important part of the network.

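- Decoder: Tries to reconstruct the input data from the bottleneck. The output is then compared with the ground truth, which is the original input itself, using a reconstruction loss such as mean squared error.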
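The three parts are trained together by minimising the gap between the decoder's output and the original input. The sketch below assumes the simplest possible case: a linear autoencoder (one weight matrix for the encoder, one for the decoder, no activations, and no biases because the data is centred), trained with plain gradient descent on a mean-squared-error loss. The synthetic data (20-D points lying near a 3-D subspace), the bottleneck width `k = 3`, the learning rate and the step count are all hypothetical choices.

```python
import numpy as np

rng = np.random.default_rng(42)

# Synthetic data: 500 samples in 20 dimensions that really live near a 3-D subspace.
n, d, k = 500, 20, 3
latent = rng.normal(size=(n, k))
mixing = rng.normal(size=(k, d))
X = latent @ mixing + 0.05 * rng.normal(size=(n, d))
X = (X - X.mean(axis=0)) / X.std(axis=0)   # centre and scale each feature

# Encoder (d -> k) and decoder (k -> d) weights; k is the bottleneck width.
W_enc = rng.normal(scale=0.1, size=(d, k))
W_dec = rng.normal(scale=0.1, size=(k, d))

lr, steps = 0.1, 3000
losses = []
for step in range(steps):
    Z = X @ W_enc                  # encoder: compress to the bottleneck
    X_hat = Z @ W_dec              # decoder: reconstruct the input
    err = X_hat - X                # the input itself is the ground truth
    loss = np.mean(err ** 2)       # mean squared reconstruction error
    losses.append(loss)

    # Hand-written backpropagation through the two linear layers.
    grad_X_hat = 2.0 * err / err.size
    grad_W_dec = Z.T @ grad_X_hat
    grad_Z = grad_X_hat @ W_dec.T
    grad_W_enc = X.T @ grad_Z

    W_dec -= lr * grad_W_dec
    W_enc -= lr * grad_W_enc

print(f"initial loss {losses[0]:.3f}, final loss {losses[-1]:.4f}")
```

Because the loss compares `X_hat` with `X` itself, no labels are involved. A real model would typically add nonlinear hidden layers and rely on a framework's automatic differentiation instead of hand-written gradients.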

One practical application of autoencoders is feature extraction: after training, we remove the decoder and simply use the encoder's output (the compressed features) as the input to a standard CNN.
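A rough sketch of this workflow, under several assumptions: the autoencoder is a small linear model trained by gradient descent, the labelled two-class data is synthetic, and, to keep the example short and dependency-free, a nearest-centroid classifier stands in for the CNN mentioned above. Only `W_enc` is kept after training; the decoder weights are thrown away, and the downstream model sees only the bottleneck codes.

```python
import numpy as np

rng = np.random.default_rng(7)

# Hypothetical labelled data: two classes that differ along one latent direction,
# embedded in 20-D through an orthonormal 3-D subspace, plus a little noise.
n, d, k = 400, 20, 3
y = rng.integers(0, 2, size=n)                     # class labels 0/1
latent = rng.normal(size=(n, k))
latent[:, 0] += np.where(y == 1, 3.0, -3.0)        # separate the classes
basis, _ = np.linalg.qr(rng.normal(size=(d, k)))   # (d, k), orthonormal columns
X = latent @ basis.T + 0.05 * rng.normal(size=(n, d))
X -= X.mean(axis=0)

def train_linear_autoencoder(X, k, lr=0.1, steps=3000):
    """Fit encoder/decoder weights by gradient descent on the MSE reconstruction loss."""
    d = X.shape[1]
    W_enc = rng.normal(scale=0.1, size=(d, k))
    W_dec = rng.normal(scale=0.1, size=(k, d))
    for _ in range(steps):
        Z = X @ W_enc
        grad_X_hat = 2.0 * (Z @ W_dec - X) / X.size
        grad_W_dec = Z.T @ grad_X_hat
        grad_W_enc = X.T @ (grad_X_hat @ W_dec.T)
        W_dec -= lr * grad_W_dec
        W_enc -= lr * grad_W_enc
    return W_enc, W_dec

W_enc, _ = train_linear_autoencoder(X, k)   # the decoder is discarded after training
features = X @ W_enc                        # the encoder alone produces the bottleneck codes

# Stand-in downstream model (instead of a CNN): a nearest-centroid classifier on the codes.
train_idx, test_idx = np.arange(300), np.arange(300, n)
centroids = np.stack([features[train_idx][y[train_idx] == c].mean(axis=0) for c in (0, 1)])
dists = np.linalg.norm(features[test_idx][:, None, :] - centroids[None, :, :], axis=2)
pred = dists.argmin(axis=1)
accuracy = np.mean(pred == y[test_idx])
print(f"downstream accuracy on held-out codes: {accuracy:.2f}")
```

In practice the encoder would usually be a trained neural network (often convolutional for images), and the downstream model would be trained on its 3-value codes instead of the raw 20-value inputs.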