Importance Sampling

Almost all off-policy methods utilize Importance Sampling.

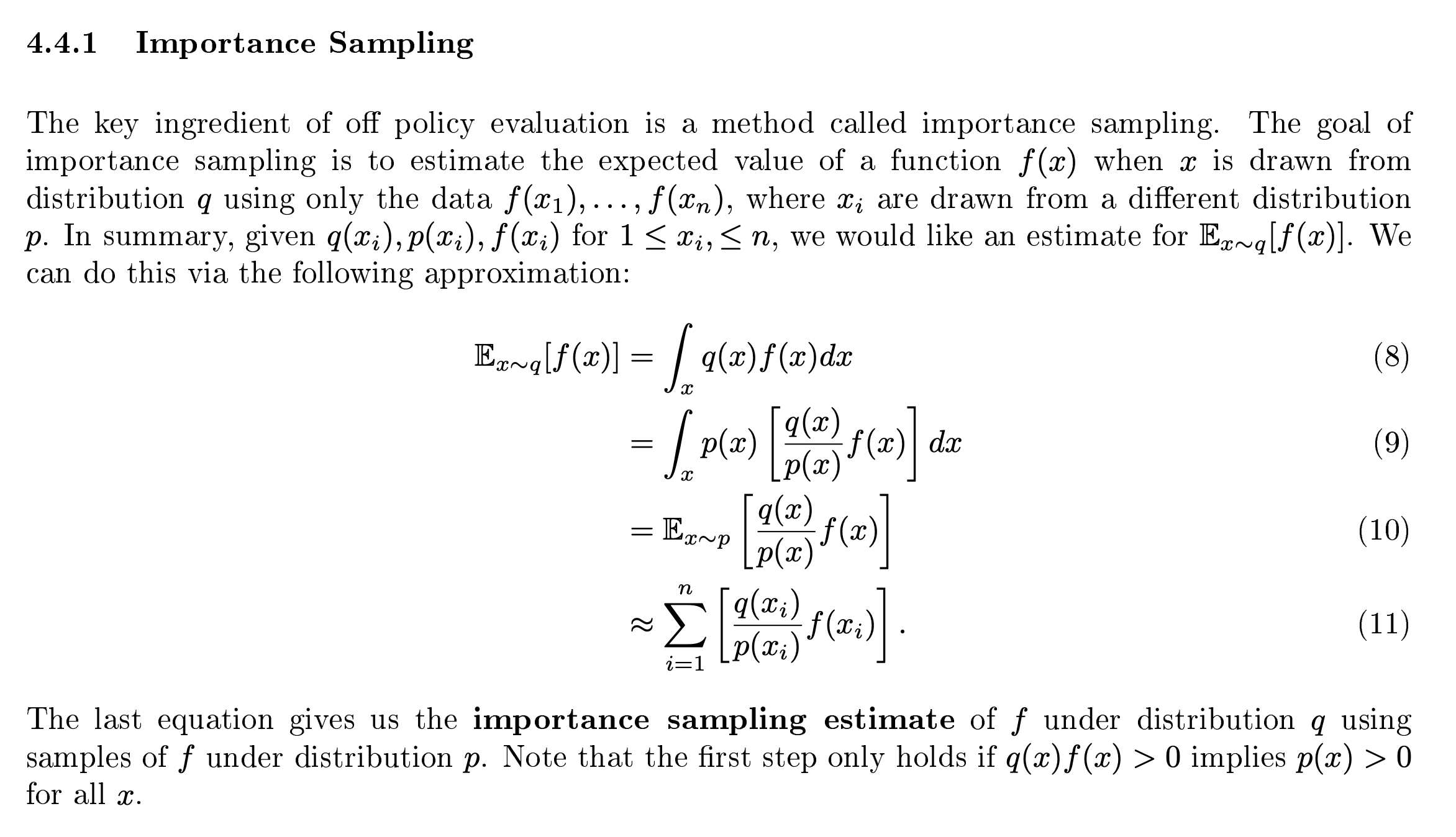

Importance Sampling is a general technique for estimating expected values under one distribution given samples from another.

We can use the following approximation

We can use the following approximation

Why does it kind of look like Advantage Weighted Regression.

From ChatGPT

In off-policy reinforcement learning (RL), we use data generated by a behavior policy (say, ) to improve a target policy ().

- The issue is that the distribution of data (state-action pairs) comes from , but we’re evaluating or improving

- This distribution mismatch needs to be corrected

This is what we do with importance sampling! For each sample , we weight its contribution by the importance ratio:

Suppose:

- β always takes action A.

- π takes action A only half the time and action B the other half.

Without importance sampling, we would overestimate the frequency of action A in π.

F1TENTH

Learning this under the Particle Filter lecture. Basically, if the distribution you want to sample from is normal, you know what values to use. However, how would you do it for any distribution?

We can use the importance sampling principle.