Q-Learning

Q-Learning is the Off-Policy implementation of TD Control. I first went through this with Flappy Bird Q-Learning.

See Deep Q-Learning for continuous state case.

Tabular Q-Learning

Even though it’s off-policy, we don’t need Importance Sampling!

- We now consider off-policy learning of action-values

- Next action is chosen using behavior policy

- But we consider alternative successor action

- As you’ll see below, we let

- And update towards value of alternative action

- Learned from here

Q-Learning Properties (Off-policy learning)

Q-Learning converges to optimal policy - even if you’re acting suboptimally.

- Sarsa is the on-policy version of q-learning

The above uses the 1-step Bellman Optimality Equation

Q-learning generally uses the 1-step bellman optimality backup. You might wonder if there is a -step version, and indeed there is :)

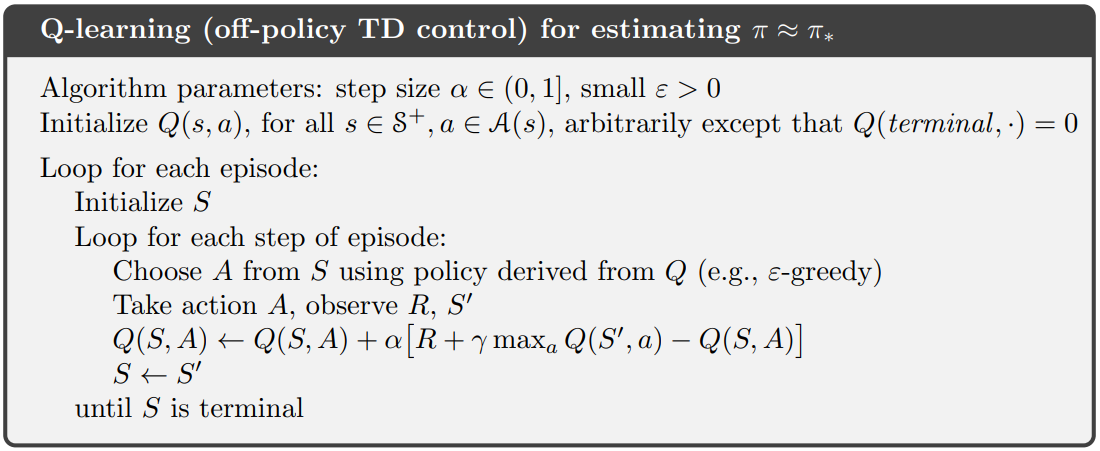

Off-Policy Control with Q-Learning

- We now allow both behaviour and target policies to improve

- The target policy is greedy with respect to

Q-Learning Control Algorithm