Memory Coalescing

Memory coalescing is a technique where multiple memory requests from different threads or processes are combined into a single, larger request. I first came across it in PMPP (Programming Massively Parallel Processors).

Also see Heap (Memory), where they talk about coalescing memory in the context of freed memory blocks (seen in CS241E).

Memory Coalescing vs. DRAM Bursting

Memory coalescing and DRAM bursting are very similar ideas. Both aim to make memory access more efficient, but they differ in the level at which they happen:

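- Memory Coalescing occurs at the level of the threads' memory requests: the GPU hardware combines them, and in CUDA the programmer enables this by arranging the access pattern

- DRAM Bursting occurs at the hardware level, inside the DRAM itself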

Memory coalescing reduces the number of memory accesses required by aligning them efficiently, while DRAM bursting speeds up the process once a memory access is initiated.
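To make "reduces the number of memory accesses" concrete, here is a minimal sketch that models it on the host. It only counts how many aligned segments a group of threads touches. The warp size of 32, the 4-byte element, the 128-byte segment, and the name `count_transactions` are all assumptions for illustration, not values taken from any specific GPU.

```python
# Assumed figures for illustration only; real values depend on the GPU.
WARP_SIZE = 32        # threads whose accesses are considered together
ELEM_BYTES = 4        # one float
SEGMENT_BYTES = 128   # size of one combined memory transaction


def count_transactions(byte_addresses, segment_bytes=SEGMENT_BYTES):
    """Number of distinct aligned segments the addresses fall into."""
    return len({addr // segment_bytes for addr in byte_addresses})


# Adjacent threads read adjacent floats: everything fits in one segment.
adjacent = [t * ELEM_BYTES for t in range(WARP_SIZE)]

# Adjacent threads read floats 1024 elements apart: one segment per thread.
strided = [t * 1024 * ELEM_BYTES for t in range(WARP_SIZE)]

print(count_transactions(adjacent))  # 1
print(count_transactions(strided))   # 32
```

Under these assumptions the adjacent pattern needs one request where the strided pattern needs 32, which is the saving coalescing is after.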

CUDA

Memory coalescing is combined with Tiling to make memory access faster.

It is made possible by DRAM Bursting.

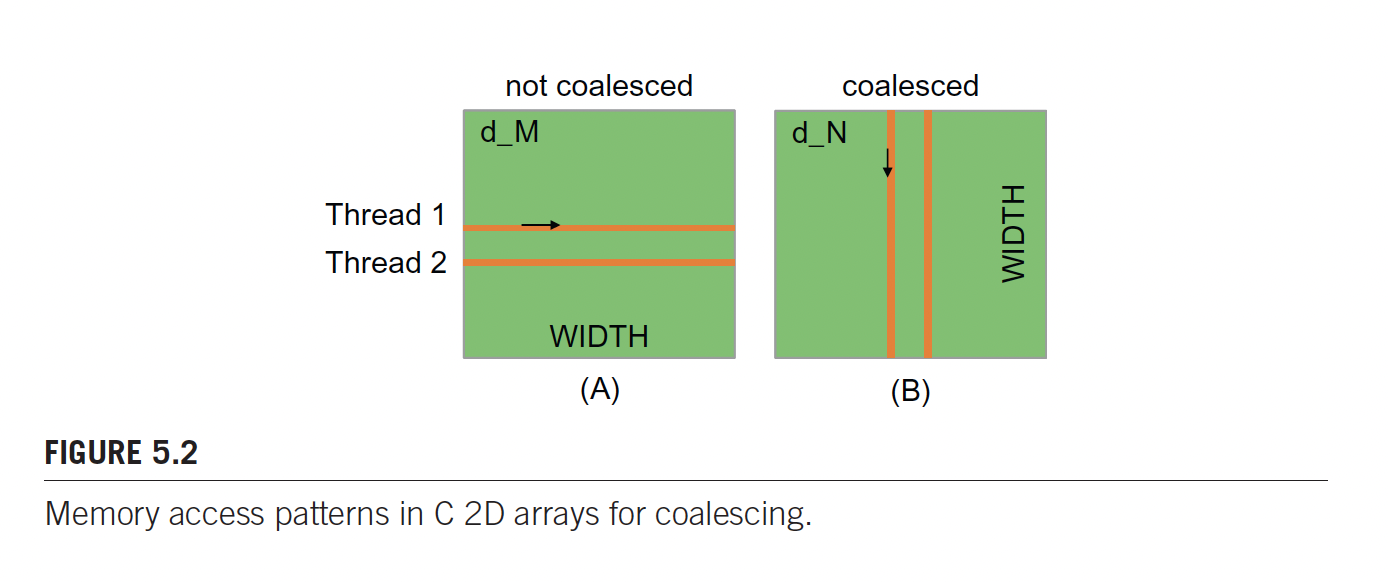

Good pattern:

- On each iteration, the threads are requesting memory locations adjacent to each other, so the hardware knows it can combine them into a single request

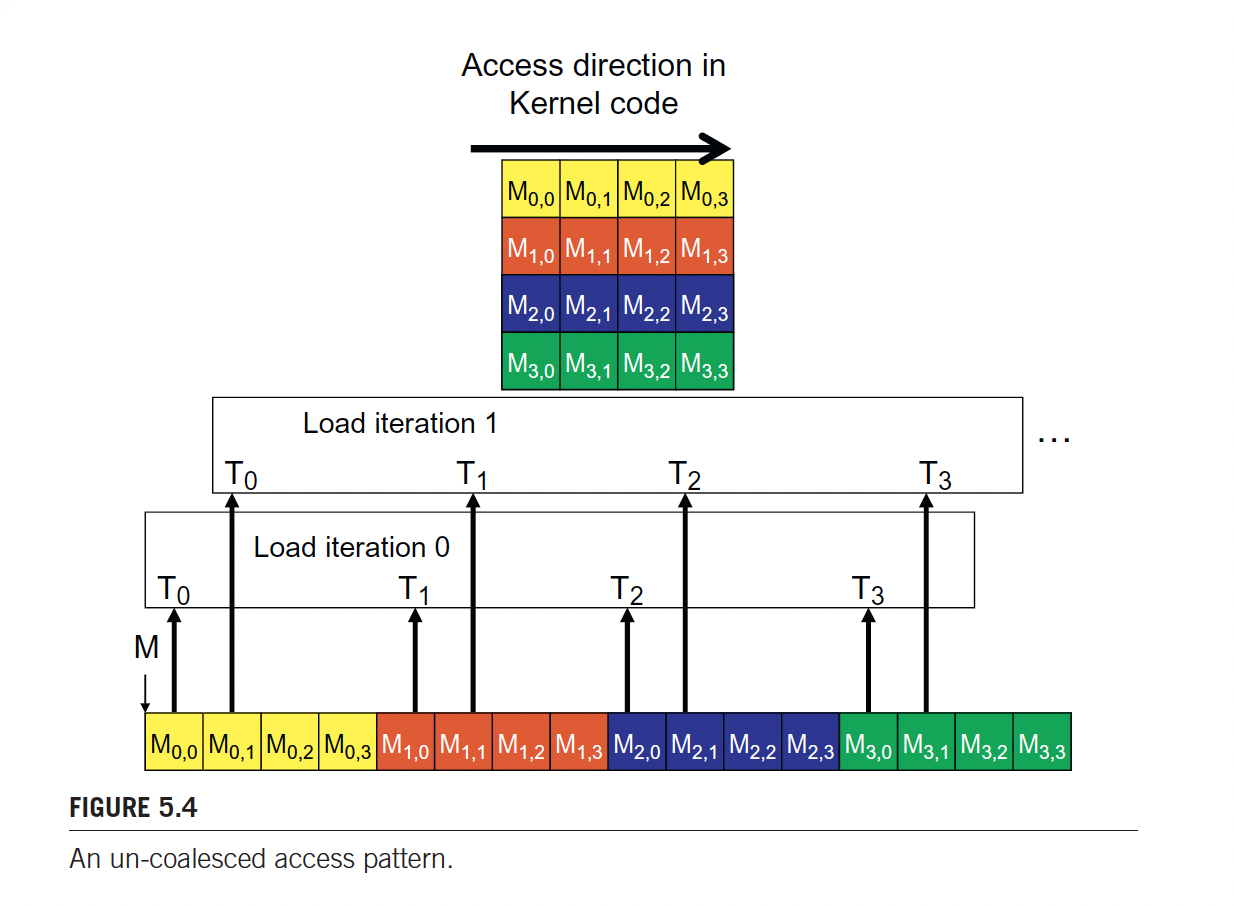

Bad pattern:

- On each iteration, the threads are requesting data at locations that are very far from each other
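The two patterns can be sketched by listing which elements the threads touch on each iteration. This assumes the figures show the usual case of a matrix stored in row-major order. The function names and the sizes (4 threads, width 8) are hypothetical and chosen only to keep the output small.

```python
def element_index(row, col, width):
    """Linear index of (row, col) in a row-major matrix."""
    return row * width + col


def down_columns(k, n_threads, width):
    """Iteration k: thread t reads row k, column t (neighbours are adjacent)."""
    return [element_index(k, t, width) for t in range(n_threads)]


def along_rows(k, n_threads, width):
    """Iteration k: thread t reads row t, column k (neighbours are `width` apart)."""
    return [element_index(t, k, width) for t in range(n_threads)]


WIDTH = 8  # hypothetical matrix width
for k in range(2):
    print("good", k, down_columns(k, 4, WIDTH))
    print("bad", k, along_rows(k, 4, WIDTH))
```

In the good pattern, iteration 0 touches elements 0, 1, 2, 3 and iteration 1 touches 8, 9, 10, 11. In the bad pattern, iteration 0 touches 0, 8, 16, 24, so each thread's request lands a full row away from its neighbour's.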

Who is responsible for detecting that there is consecutive memory access?

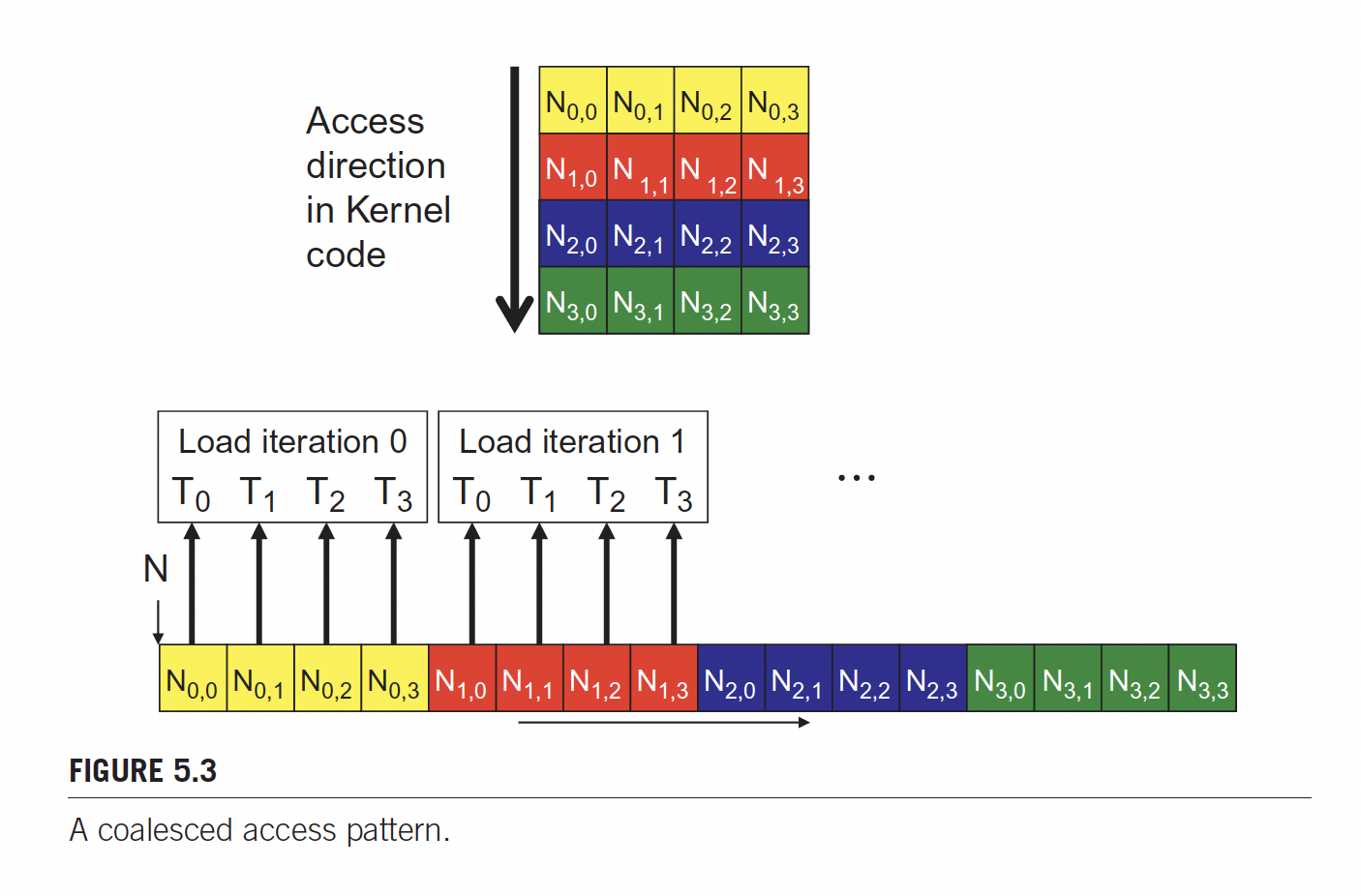

The hardware (GPU memory management system…?) can detect consecutive global memory locations.

Because the requests from multiple threads fall in the same DRAM row, the DRAM can serve them in a single burst.
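A toy model of the burst itself, as I understand it: asking for one location brings back the whole aligned section around it, so neighbouring requests come along for free. The burst size of 4 elements and the name `read_burst` are made up for this sketch; real burst sizes depend on the DRAM.

```python
BURST_ELEMS = 4  # hypothetical number of elements delivered per burst


def read_burst(memory, index, burst_elems=BURST_ELEMS):
    """Return the whole aligned burst section that contains `index`."""
    start = (index // burst_elems) * burst_elems
    return memory[start:start + burst_elems]


memory = list(range(100, 116))  # 16 elements, values 100..115

# Threads 0..3 ask for elements 4, 5, 6, 7: one burst covers all four.
burst = read_burst(memory, 4)
print(burst)  # [104, 105, 106, 107]
```

A request for element 7 maps to the same section as the request for element 4, so in this model the four threads share one burst instead of triggering four.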

Personal thoughts: Ahh, I see why it is better. Essentially, you have multiple threads running at the same time, and each of them is iterating through the matrix. Because the threads do things at approximately the same time, you want them to be accessing adjacent elements on each iteration. If each thread instead walks horizontally along its own row, the simultaneous accesses are a whole row apart and cannot be combined.