Proximal Policy Optimization (PPO)

PPO is very similarly motivated as TRPO, except that instead of using second-order methods, it uses first-order methods to constrain the policy update.

Resources

- https://spinningup.openai.com/en/latest/algorithms/ppo.html

- Implementation here

- Lecture 4: TRPO, PPO from Deep RL Foundations, slides here

- https://lilianweng.github.io/posts/2018-04-08-policy-gradient/

- https://openai.com/blog/openai-baselines-ppo/

- https://arxiv.org/pdf/1707.06347.pdf

Other (Explained well from the spiderman video)

- https://huggingface.co/blog/deep-rl-ppo#the-clipped-part-of-the-clipped-surrogate-objective-function

- Towards Delivering a Coherent Self-Contained Explanation of Proximal Policy Optimization (explanation paper)

- https://iclr-blog-track.github.io/2022/03/25/ppo-implementation-details/

Good reasons for using PPO:

- its comparatively high data efficiency

- its ability to cope with various kinds of action spaces

- its robust learning performance

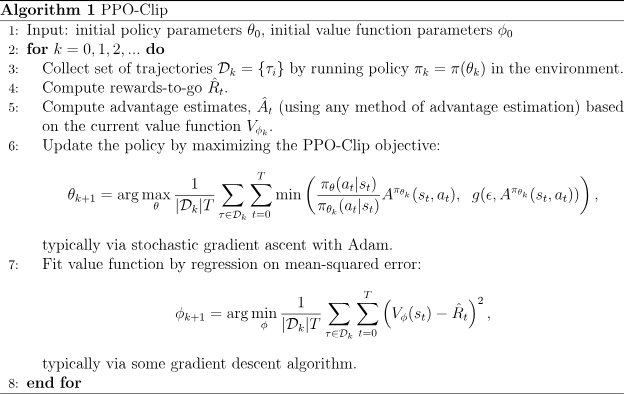

PPO updates its policies via And we do gradient ascent to maximize this objective

- is a (small) hyperparameter which roughly says how far away the new policy is allowed to go from the old.

Why couldn't TRPO do this gradient ascent thingy?

Because TRPO requires Constrained Optimization, which is quite computationally expensive.

PPO gets around this by using the clipped surrogate objective