Policy Gradient Methods

Class of Reinforcement Learning methods that is widely used in practice where we directly optimize the policy, as opposed to learning a Q-function (Value-Based Methods).

- That’s not fully true, because it seems like they still use the poilcy

Classes of policy gradient methods:

Did the derivation during this livestream: https://www.youtube.com/watch?v=rCyMJFKv_qA

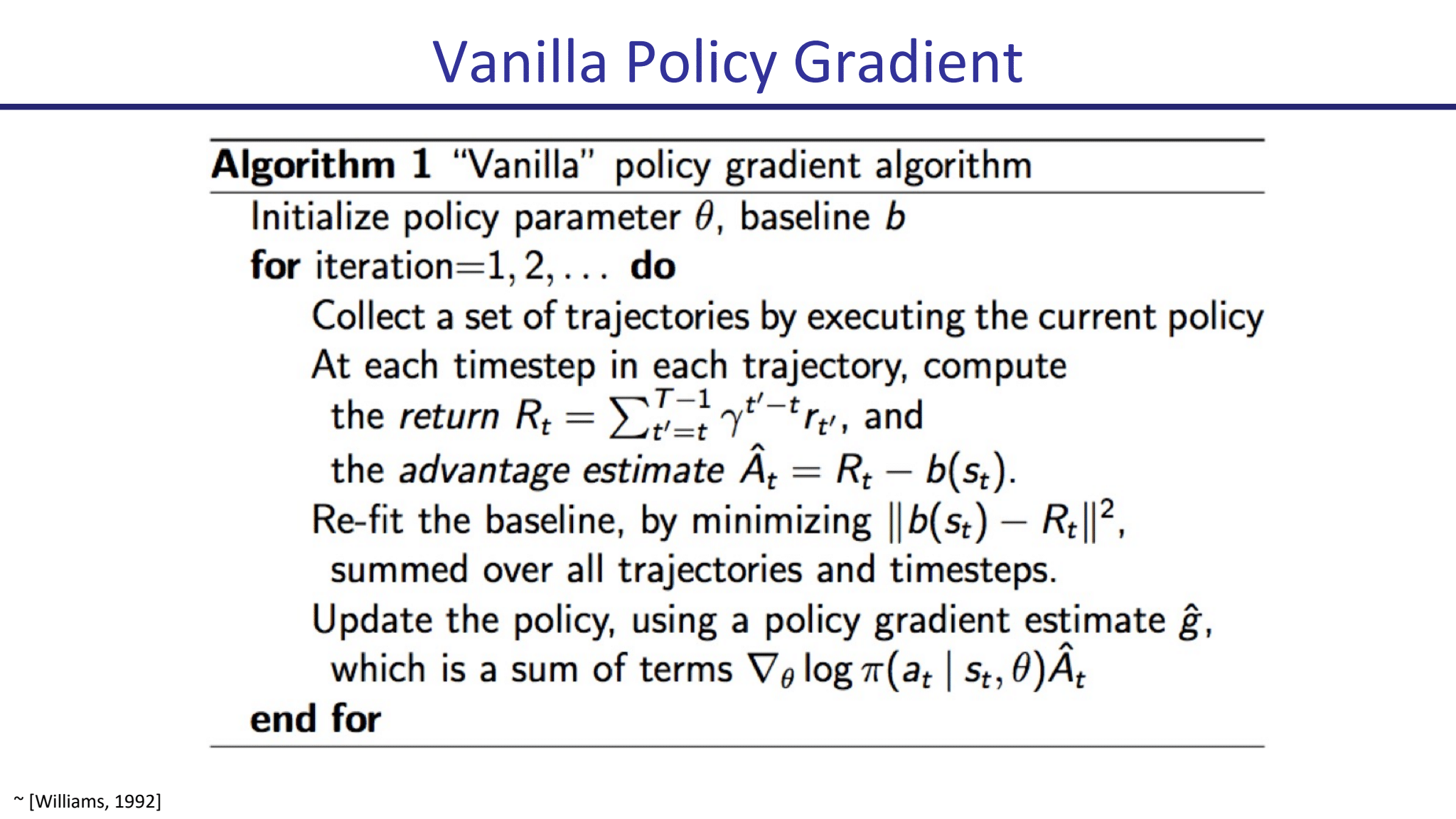

Make sure to understand this derivation (refer to more steven gong): We go from where:

- is a rollout

- is a parameterized policy

- is some advantage estimator, comes from Generalized Advantage Estimation

We can then update our parameters

Resources:

- Spinning up part 3

- Lecture 3: Policy Gradient and Advantage Estimation from Deep RL Foundation Series, slides here

“Policy gradient methods work by directly computing an estimate of the gradient of policy parameters in order to maximize the expected return using stochastic gradient descent”.

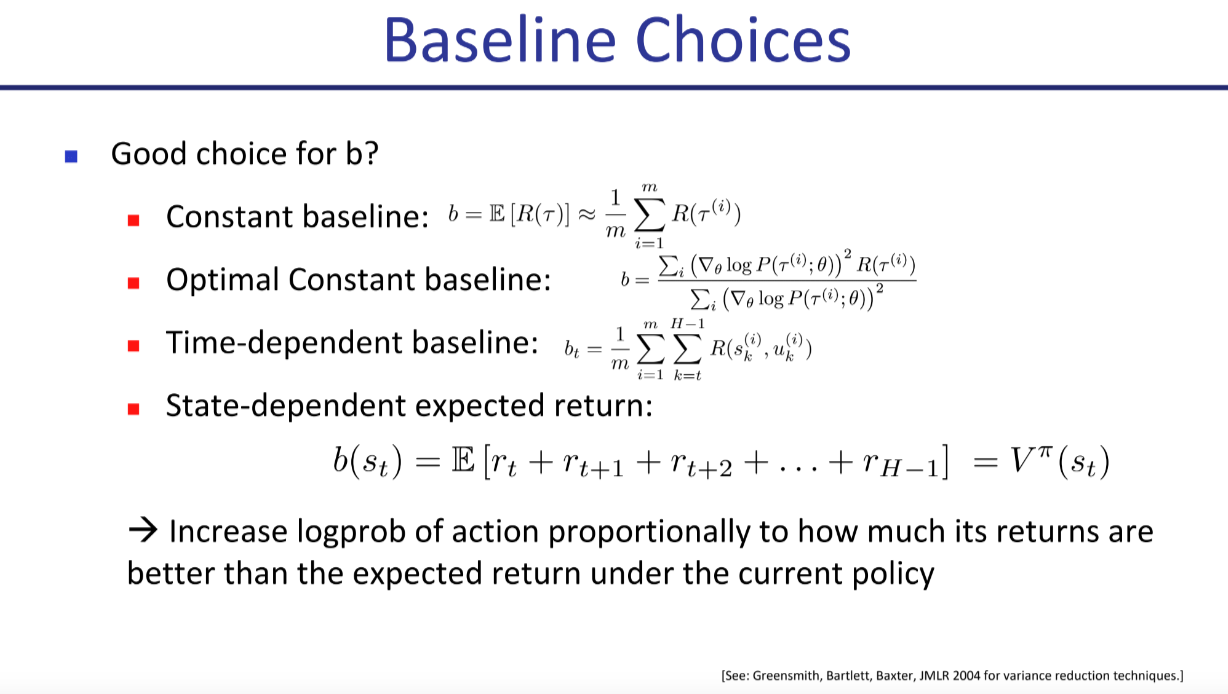

As talked about above, the value network helps with reducing variance. Pieter Abbeel calls it the baseline, but it’s essentially the value function (your current estimate of how much expected reward you will get at this state).

From the Lecture 3 slides:

- So you have two neural networks which both take in states . One generates policy parameters , and the baseline generates the value function

Instead of Value Function Approximation, and then generating a policy directly from the value function, we directly parameterize the policy.

Advantages:

- Better convergence properties

- Effective in high-dimensional or continuous action spaces

- Can learn stochastic policies

Disadvantages:

- Typically converge to a local rather than global optimum

- Evaluating a policy is typically inefficient and high variance

Sometimes, stochastic policies are the best.

For example, in rock-paper-scissors, if your policy was deterministic, your opponent would eventually figure it out, and you would keep losing.

Score Function

Policy Gradient Theorem

For any differentiable policy , for any of the policy objective functions , , or , the policy gradient is

Critic Monte-Carlo policy gradient still has high variance We use a critic to estimate the action-value function, Qw (s, a) ≈ Q πθ (s, a) Actor-critic algorithms maintain two sets of parameters

- Critic Updates action-value function parameters w

- Actor Updates policy parameters θ, in direction suggested by critic