Central Limit Theorem

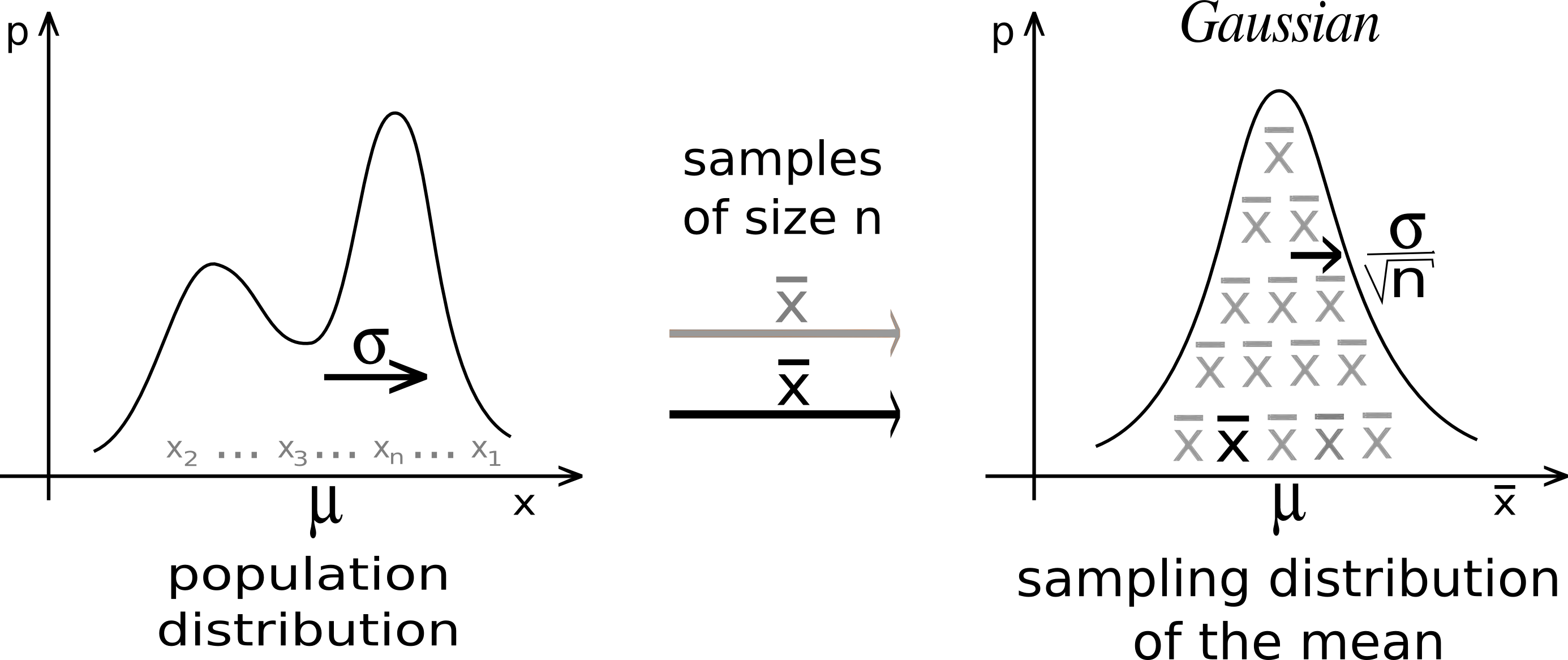

The Central Limit Theorem states that if you take sufficiently large random samples from a population (with replacement, so the draws are independent) and compute their sums or means, then the distribution of those sample means (or sums) will be approximately normal, whatever the shape of the population, provided its variance is finite.

title: The Sampling Distribution of the Sample Mean (Theorem)

Let $X_1, X_2, \dots , X_n$ be i.i.d. $N(\mu, \sigma^2)$, then

$$S_n = \sum_{i=1}^{n} X_i \sim N(n\mu, n\sigma^2)$$

$$\overline{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i \sim N\left(\mu, \frac{\sigma^2}{n}\right)$$

I know that this works, but I don't know the theory behind why it works. The teacher says the proof is done with Moment-Generating Functions.
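A sketch of how the MGF argument goes in this normal case, relying on two standard facts: the MGF of $N(\mu, \sigma^2)$ is $M(t) = e^{\mu t + \sigma^2 t^2/2}$, and an MGF that exists near $t = 0$ uniquely determines the distribution. For $S_n = \sum_{i=1}^{n} X_i$:

$$
\begin{aligned}
M_{S_n}(t) &= E\left[e^{t\sum_{i=1}^{n} X_i}\right] = \prod_{i=1}^{n} E\left[e^{tX_i}\right] && \text{(independence)} \\
&= \left(e^{\mu t + \sigma^2 t^2/2}\right)^n = e^{n\mu t + n\sigma^2 t^2/2} && \text{(identically distributed)}
\end{aligned}
$$

which is the MGF of $N(n\mu, n\sigma^2)$. For the sample mean $\overline{X}_n = S_n / n$, we get $M_{\overline{X}_n}(t) = M_{S_n}(t/n) = e^{\mu t + \frac{\sigma^2}{n}\frac{t^2}{2}}$, the MGF of $N\left(\mu, \frac{\sigma^2}{n}\right)$.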

title: CLT

The [[Law of Large Numbers]] tells us that the r.v. $\overline{X}_n$ approaches $\mu$ as $n$ approaches $\infty$. But how is $\overline{X}_n$ distributed around $\mu$ for large $n$?

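The Central Limit Theorem tells us that for i.i.d. $X_i$ with mean $\mu$ and finite variance $\sigma^2$, for large $n$ (formally, as $n \to \infty$):

$$\overline{X}_n \overset{\text{approx.}}{\sim} N\left(\mu, \frac{\sigma^{2}}{n}\right) \quad \text{or equivalently} \quad Z = \frac{\overline{X}_n - \mu}{\sigma / \sqrt{n}} \overset{\text{approx.}}{\sim} N(0, 1)$$

This is so powerful because the $X_i$ can come from ANY distribution with finite variance, not only a normal one. For example, if …, then … → wait, is this the CLT?

If …, then …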
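A quick simulation sketch of the statement above. The Exponential population is an assumption chosen only because it is clearly skewed and non-normal; the rate, $n$, repetition count, and seed are arbitrary illustrative values. Each sample mean is standardized as $Z = (\overline{X}_n - \mu)/(\sigma/\sqrt{n})$ using the true $\mu$ and $\sigma$ of the population.

```python
import math
import random
import statistics


def standardized_mean(n: int, rate: float, rng: random.Random) -> float:
    """Draw n i.i.d. Exponential(rate) values and return Z = (xbar - mu) / (sigma / sqrt(n))."""
    mu = 1.0 / rate      # true mean of Exponential(rate)
    sigma = 1.0 / rate   # true standard deviation of Exponential(rate)
    xbar = statistics.fmean(rng.expovariate(rate) for _ in range(n))
    return (xbar - mu) / (sigma / math.sqrt(n))


def simulate_z(n: int = 50, reps: int = 20_000, rate: float = 2.0, seed: int = 0) -> list[float]:
    """Repeat the standardization reps times with a seeded generator."""
    rng = random.Random(seed)
    return [standardized_mean(n, rate, rng) for _ in range(reps)]


if __name__ == "__main__":
    zs = simulate_z()
    inside = sum(abs(z) <= 1.96 for z in zs) / len(zs)
    print(f"mean of Z      = {statistics.fmean(zs):.3f}")
    print(f"sd of Z        = {statistics.stdev(zs):.3f}")
    print(f"P(|Z| <= 1.96) = {inside:.3f}  (N(0, 1) gives about 0.95)")
```

If the CLT applies, the mean of $Z$ should land near 0, its standard deviation near 1, and roughly 95% of the values inside $\pm 1.96$, even though a single exponential draw looks nothing like a bell curve.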

Proof

I asked in class for a proof of the CLT, which the teacher gave, but I don't understand it…

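Let $X_1, X_2, \dots, X_n$ be i.i.d. r.v.s with $E[X_i] = \mu$, $\operatorname{Var}(X_i) = \sigma^2 < \infty$.

Let $Z_n = \frac{S_n - n\mu}{\sigma\sqrt{n}}$, where $S_n = \sum_{i=1}^{n} X_i$ (this equals $\frac{\overline{X}_n - \mu}{\sigma/\sqrt{n}}$). We will show that $Z_n \xrightarrow{d} N(0, 1)$: Let $Y_i = \frac{X_i - \mu}{\sigma}$; the $Y_i$ are i.i.d. with $E[Y_i] = 0$, $\operatorname{Var}(Y_i) = 1$, and $Z_n = \frac{1}{\sqrt{n}}\sum_{i=1}^{n} Y_i$.

Then, we use the Moment-Generating Function (assuming $M_Y(s) = E[e^{sY}]$ exists near $s = 0$):

$$M_{Z_n}(t) = E\left[e^{tZ_n}\right] = \left[M_Y\left(\frac{t}{\sqrt{n}}\right)\right]^n = \left[1 + \frac{t^2}{2n} + o\left(\frac{1}{n}\right)\right]^n$$

Taking the limits, we have

$$\lim_{n \to \infty} M_{Z_n}(t) = e^{t^2/2},$$

which is the MGF of $N(0, 1)$; since convergence of MGFs near $0$ implies convergence in distribution, $Z_n \xrightarrow{d} N(0, 1)$. (The 2nd step to 3rd step jump is by Taylor approximation, and it seems like magic.) The missing step: expand $M_Y$ around $0$, $M_Y(s) = 1 + sE[Y] + \frac{s^2}{2}E[Y^2] + o(s^2) = 1 + \frac{s^2}{2} + o(s^2)$, then plug in $s = t/\sqrt{n}$. The limit then follows from $\left(1 + \frac{a}{n}\right)^n \to e^{a}$ with $a = t^2/2$.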
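A numeric check of the Taylor step, under an assumed example variable: take $Y = \pm 1$ with probability $1/2$ each (mean 0, variance 1), whose MGF is $M_Y(s) = \cosh(s)$. For the standardized sum $Z_n = \frac{1}{\sqrt{n}}\sum_{i=1}^{n} Y_i$ of i.i.d. copies, $M_{Z_n}(t) = [M_Y(t/\sqrt{n})]^n$, and the Taylor step replaces $M_Y(s)$ by $1 + s^2/2$. Both versions should approach $e^{t^2/2}$; the value $t = 1.5$ is arbitrary.

```python
import math


def mgf_of_zn(t: float, n: int) -> float:
    """M_{Z_n}(t) = [M_Y(t / sqrt(n))]^n for Y = +/-1 with prob 1/2, where M_Y(s) = cosh(s)."""
    return math.cosh(t / math.sqrt(n)) ** n


def taylor_version(t: float, n: int) -> float:
    """Same expression after the Taylor step M_Y(s) ~ 1 + s^2/2 (higher-order terms dropped)."""
    return (1 + t**2 / (2 * n)) ** n


if __name__ == "__main__":
    t = 1.5
    target = math.exp(t**2 / 2)  # MGF of N(0, 1) evaluated at t
    for n in (1, 10, 100, 10_000):
        print(f"n={n:>6}: exact={mgf_of_zn(t, n):.5f}  "
              f"taylor={taylor_version(t, n):.5f}  e^(t^2/2)={target:.5f}")
```

The dropped terms are of order $s^4 = t^4/n^2$, so after raising to the $n$-th power they contribute only about $t^4/n$, which vanishes; that is why the "magic" step is harmless in the limit.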

The central limit theorem is super interesting! I learned it while at Ericsson. It builds on the Law of Large Numbers.

In layman's terms: take any distribution (with finite variance) and randomly sample from it, say at least 30 times, then take the sum of those sampled values. Repeat that process, say, 1000 times. If you plot a histogram of those sums, it will approximately follow a Normal Distribution.
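The recipe above as a small Python simulation. The fair six-sided die is an assumption for illustration (any population with finite variance would do). A single roll has mean $3.5$ and variance $35/12$, so the CLT predicts that sums of 30 rolls are roughly $N(105, 87.5)$.

```python
import math
import random
import statistics
from collections import Counter


def sample_sums(n_draws: int = 30, n_repeats: int = 1000, seed: int = 0) -> list[int]:
    """Repeat n_repeats times: roll a fair six-sided die n_draws times and record the sum."""
    rng = random.Random(seed)
    return [sum(rng.randint(1, 6) for _ in range(n_draws)) for _ in range(n_repeats)]


def text_histogram(values: list[int], bin_width: int = 5) -> str:
    """Crude text histogram: one row per bin, one '#' per 5 observations."""
    bins = Counter((v // bin_width) * bin_width for v in values)
    rows = []
    for start in sorted(bins):
        rows.append(f"{start:>4}-{start + bin_width - 1:<4} {'#' * (bins[start] // 5)}")
    return "\n".join(rows)


if __name__ == "__main__":
    n = 30
    sums = sample_sums(n_draws=n)
    mu, var = 3.5, 35 / 12  # mean and variance of a single fair die roll
    print(f"sample mean of sums = {statistics.fmean(sums):.2f} (CLT: n*mu = {n * mu})")
    print(f"sample sd of sums   = {statistics.stdev(sums):.2f} "
          f"(CLT: sqrt(n*var) = {math.sqrt(n * var):.2f})")
    print(text_histogram(sums))
```

The printed histogram should look like a rough bell centred near 105, and about 68% of the sums should fall within one predicted standard deviation ($\sqrt{87.5} \approx 9.35$) of the centre.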

The Continuity Correction

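When approximating a discrete distribution with a continuous one (e.g. a Binomial with a Normal via the CLT), we can apply a continuity correction to get a better approximation: treat each integer value $k$ as the interval $[k - 0.5, k + 0.5]$, so $P(X \le k) \approx P(Y \le k + 0.5)$, where $Y$ is the approximating normal r.v.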
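A small comparison sketch, assuming $X \sim \text{Binomial}(20, 0.5)$ and the event $X \le 8$ as an arbitrary example: the exact probability is computed directly, and the normal approximation $N(np, np(1-p))$ is evaluated with and without the $+0.5$ correction.

```python
import math


def normal_cdf(x: float, mu: float, sigma: float) -> float:
    """CDF of N(mu, sigma^2) via the error function."""
    return 0.5 * (1 + math.erf((x - mu) / (sigma * math.sqrt(2))))


def binom_cdf(k: int, n: int, p: float) -> float:
    """Exact P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def compare(k: int, n: int, p: float) -> tuple[float, float, float]:
    """Return (exact, normal without correction, normal with continuity correction)."""
    mu = n * p
    sigma = math.sqrt(n * p * (1 - p))
    exact = binom_cdf(k, n, p)
    no_correction = normal_cdf(k, mu, sigma)
    with_correction = normal_cdf(k + 0.5, mu, sigma)  # integer k covers [k - 0.5, k + 0.5]
    return exact, no_correction, with_correction


if __name__ == "__main__":
    exact, plain, corrected = compare(k=8, n=20, p=0.5)
    print(f"exact P(X <= 8)         = {exact:.4f}")
    print(f"normal, no correction   = {plain:.4f}")
    print(f"normal, with correction = {corrected:.4f}")
```

For this example the uncorrected normal value is off by several percentage points, while the corrected one agrees with the exact binomial probability to about three decimal places.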

To generate a Random Normal Number

Obtained from Xixian at Ericsson. I still don’t know enough to understand why this works.

Suppose $U_1, U_2, \dots, U_n$ are independent samples chosen from a uniform distribution with mean $\mu$ and variance $\sigma^2$. Let

$$Z = \frac{\sum_{i=1}^{n} U_i - n\mu}{\sqrt{n\sigma^2}}$$

where

- $\sigma^2$ is the real variance of the distribution

- $\mu$ is the real mean of the distribution, NOT of the sample. (I made this fatal mistake in the implementation.)

Then $Z$ is approximately a standard normal random variable if $n$ is sufficiently large; e.g., $n = \dots$ is usually the number used.
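A minimal sketch of this generator, assuming Uniform$(a, b)$ samples from Python's `random` module. The helper name `approx_standard_normal` and the default $n = 12$ with Uniform$(0, 1)$ are my assumptions; $n = 12$ is a commonly cited choice because then $n\sigma^2 = 12 \cdot \frac{1}{12} = 1$ and $Z$ is simply the sum minus 6. Note that $\mu$ and $\sigma^2$ are the true values for the uniform distribution, not sample estimates.

```python
import math
import random


def approx_standard_normal(rng: random.Random, n: int = 12, a: float = 0.0, b: float = 1.0) -> float:
    """Approximate N(0, 1) draw by standardizing the sum of n Uniform(a, b) samples (CLT).

    mu and var are the TRUE mean and variance of Uniform(a, b), not sample estimates.
    """
    mu = (a + b) / 2         # true mean of Uniform(a, b)
    var = (b - a) ** 2 / 12  # true variance of Uniform(a, b)
    total = sum(rng.uniform(a, b) for _ in range(n))
    return (total - n * mu) / math.sqrt(n * var)


if __name__ == "__main__":
    rng = random.Random(42)
    draws = [approx_standard_normal(rng) for _ in range(10_000)]
    mean = sum(draws) / len(draws)
    sd = math.sqrt(sum((z - mean) ** 2 for z in draws) / (len(draws) - 1))
    print(f"mean = {mean:.3f}, sd = {sd:.3f}")
```

One design caveat: with $n$ uniforms the output can never exceed $\pm\sqrt{3n}$ (that is, $\pm 6$ for $n = 12$), so the extreme tails of a true normal are missing. For real use, Python's standard library already provides `random.gauss` and `random.normalvariate`.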