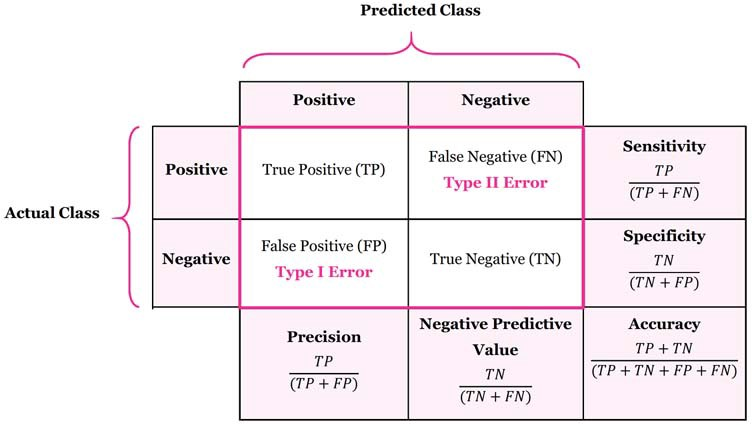

Confusion Matrix

I learned about this a while ago.

- https://www.v7labs.com/blog/mean-average-precision

- https://blog.paperspace.com/deep-learning-metrics-precision-recall-accuracy/

To build a confusion matrix, we need four counts, each comparing the model's predictions against the ground truth:

- True Positives (TP): The model predicted a label, and that label is part of the ground truth.

- True Negatives (TN): The model did not predict a label, and that label is not part of the ground truth.

- False Positives (FP): The model predicted a label, but it is not part of the ground truth (Type I error).

- False Negatives (FN): The model did not predict a label, but it is part of the ground truth (Type II error).
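The four counts above can be tallied directly from paired labels. This is a minimal sketch for the binary case only; the function name `confusion_counts`, the `positive` parameter, and the sample labels are made up for illustration and do not come from the linked articles.

```python
from typing import Sequence, Tuple


def confusion_counts(
    y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1
) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN) for a binary problem (hypothetical helper)."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")

    tp = tn = fp = fn = 0
    for truth, pred in zip(y_true, y_pred):
        if pred == positive and truth == positive:
            tp += 1  # predicted, and in the ground truth
        elif pred != positive and truth != positive:
            tn += 1  # not predicted, and not in the ground truth
        elif pred == positive:
            fp += 1  # predicted, but not in the ground truth (Type I)
        else:
            fn += 1  # not predicted, but in the ground truth (Type II)
    return tp, tn, fp, fn


# Made-up labels, for illustration only.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
tp, tn, fp, fn = confusion_counts(y_true, y_pred)
print(f"TP={tp} TN={tn} FP={fp} FN={fn}")
```

The four counts always sum to the number of samples, which is a quick sanity check when filling in the matrix by hand.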

However, accuracy alone cannot tell you whether a model is good; see the Accuracy (ML) page for more details.
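A small worked example of why accuracy can mislead, assuming an imbalanced dataset that I made up for illustration: 100 samples, 5 of them positive, and a model that always predicts the negative class. The helper names `accuracy` and `recall` are my own; the formulas are the standard ones, accuracy = (TP + TN) / (TP + TN + FP + FN) and recall = TP / (TP + FN).

```python
def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Fraction of all predictions that were correct."""
    return (tp + tn) / (tp + tn + fp + fn)


def recall(tp: int, fn: int) -> float:
    """Fraction of actual positives the model found (0.0 if there are none)."""
    return tp / (tp + fn) if (tp + fn) else 0.0


# Hypothetical counts: the model predicts "negative" for every sample,
# so it never produces a true positive or a false positive.
tp, tn, fp, fn = 0, 95, 0, 5

acc = accuracy(tp, tn, fp, fn)
rec = recall(tp, fn)
print(f"accuracy={acc:.2f} recall={rec:.2f}")
```

Here accuracy comes out at 0.95 even though the model misses every positive case (recall is 0), which is why the confusion matrix counts are worth looking at individually.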

Curves:

More: https://www.dataschool.io/simple-guide-to-confusion-matrix-terminology/