Dynamic Programming

Dynamic programming is a technique that combines the correctness of complete search and the efficiency of greedy algorithms.

Dynamic Programming is “Smart Brute-Forcing”.

There are two uses for dynamic programming:

- Finding an optimal solution: We want to find a solution that is as large as possible or as small as possible.

- Counting the number of solutions: We want to calculate the total number of possible solutions.

Dynamic programming can be applied if the problem can be divided into overlapping subproblems that can be solved independently.

Simple. most of the Dynamic Programming problems are solved in two ways:

- Tabulation: Bottom Up

- Memoization: Top Down

- I think I’ve gotten relatively good at DP thanks to CS341, forced me to think about formulating the subproblems fast

More advanced concepts are explored for Competitive Programming https://codeforces.com/blog/entry/8219

- Top-Down DP

- Bottom-Up DP

- Divide and Conquer DP

- Convex Hull DP

- Knuth’s Optimization DP

- Bitmask DP

- Knapsack DP

- Range DP

DP on trees: https://codeforces.com/blog/entry/20935

Applications

- Coin Problem

- Interval Scheduling

- Knapsack DP

- Longest Increasing Subsequence

- Longest Common Subsequence

- Longest Palindromic Sequence

- Optimal BST

Other:

- Reinforcement Learning

- Grid problems

- Backtracking

Recursive vs. Iterative DP

- Errichto says this iterative, bottom-up approach, is preferred in Competitive Programming

- The Competitive Programmer’s Handbook also says the same, because it is shorter and has lower constant factors.

- However, its often easier to think about DP solutions in terms of Recursive Algorithms

Older Notes

These were taken from the freecodecamp DP video.

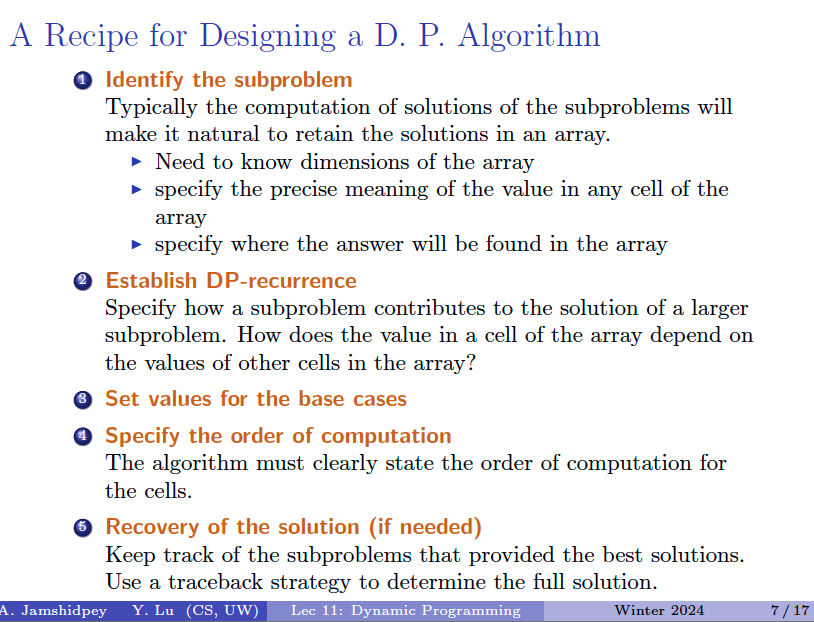

Steps (more application to top-down DP)

- Recursion

- Define Subproblems count # subproblems

- Guess (part of solution) count # choices

- Relate Subproblem Solutions compute time/subproblem

- Apply Memoization (top-down DP), OR

- Apply Tabulation (Iterative / Bottom-Up DP)

- Make sure to check Topological Order

Dynamic programming vs. Divide-and-Conquer?

- dynamic programming usually deals with all input sizes

- DAC may not solve “subproblems”

- DAC algorithms not easy to rewrite iteratively

Direction of updates (forward/backward)

- Sometimes, you do updates like

dp[i][j] = dp[i-1][j](this is what you are used to), a Backward (pull) Update - However, other times, you’ll find yourself doing

dp[i+1][j] = dp[i][j], a Forward (push) Update

When do you know which one to use? Both are somewhat equivalent. Push is almost never “mathematically required.” If you can list predecessors cheaply, you can always write a pull recurrence.

Use push when predecessors are annoying/expensive to enumerate, while successors are given naturally.

Default to backward

When each state has O(1) predecessors you can write down immediately.

- grids (top/left)

- edit distance / LCS

- interval DP (

dp[l][r]from smaller intervals)- many tree DPs (“combine children”)

- most “classic recurrence” problems

- when you want 1D/2D memory compression (pull is usually the cleanest)

Default to forward

When successors are the natural representation or push enables a known optimization.

- graph/DAG DP given as outgoing edges

- “from i you can go to a range/set of j” (difference array / prefix sums)

- state machines / actions where “next” is easy but “prev” is messy

- when you’re essentially doing shortest-path relaxations over states

A little bit ugly:

// dp[i][j] is best up to cell (i, j)

for (int i = 0; i < n; i++) {

for (int j = 0; j < m; j++) {

if (i == 0 && j == 0) {

dp[i][j] = grid[i][j];

} else if (i == 0) {

dp[i][j] = dp[i][j-1] + grid[i][j];

} else if (j == 0) {

dp[i][j] = dp[i-1][j] + grid[i][j];

}

else {

dp[i][j] = max(dp[i-1][j], dp[i][j-1]) + grid[i][j];

}

}

}This looks ugly because of base case handling

So if we used 1-indexing, we can call the recurrence equation anywhere, and have the value default to 0.

You might be tempted to just use forward dp since you don’t really need to avoid pulling from -1

You can clean this up by initializing the borders outside the branch

dp[0][0] = grid[0][0];

for (int j = 1; j < m; j++)

dp[0][j] = dp[0][j-1] + grid[0][j];

for (int i = 1; i < n; i++)

dp[i][0] = dp[i-1][0] + grid[i][0];

for (int i = 1; i < n; i++) {

for (int j = 1; j < m; j++) {

dp[i][j] = max(dp[i-1][j], dp[i][j-1]) + grid[i][j];

}

}

The most clean is the use 1-indexing for dp (and keep 0-indexing for original input)

dp[n+1][m+1]; //initialized to zero

// dp[i][j] is best up to cell (i-1, j-1)

for (int i = 1; i <= n; i++) {

for (int j = 1; j <= m; j++) {

dp[i][j] = max(dp[i-1][j], dp[i][j-1]) + grid[i-1][j-1];

}

}Older

I don’t think you should do this distinction, it makes you confused.

Forward solve

// dp[n+1][m+1] is initialized to INF

dp[0][0] = 0;

for (int i = 0; i < n + 1; i++) {

for (int j = 0; j < m + 1; j++) {

int idx = i + j;

if (i < n) {

int cost = dp[i][j] + (a[i] != c[idx]);

dp[i + 1][j] = min(cost, dp[i + 1][j]);

}

if (j < m) {

int cost = dp[i][j] + (b[j] != c[idx]);

dp[i][j + 1] = min(cost, dp[i][j + 1]);

}

}

}

cout << dp[n][m] << endl;Backward solve

// dp[n+1][m+1] is initialized to INF

dp[0][0] = 0;

for (int i = 0; i < n + 1; i++) {

for (int j = 0; j < m + 1; j++) {

int idx = i + j;

if (i == 0 && j == 0) {

continue;

}

if (i == 0) {

dp[i][j] = dp[i][j - 1] + (b[j - 1] != c[idx - 1]);

} else if (j == 0) {

dp[i][j] = dp[i - 1][j] + (a[i - 1] != c[idx - 1]);

} else {

int left = dp[i][j - 1] + (b[j - 1] != c[idx - 1]);

int top = dp[i][j] = dp[i - 1][j] + (a[i - 1] != c[idx - 1]);

dp[i][j] = min(left, top);

}

}

}

cout << dp[n][m] << endl;But when do you know when to use forward or backward solve??

Like this problem: https://leetcode.com/problems/count-partitions-with-max-min-difference-at-most-k/description/?envType=daily-question&envId=2025-12-06