Value Iteration

I am learning value iteration through two different sets of notations, from David Silver and CS287 by Pieter Abbeel.

It is confusing because value iteration propagates from the terminal states (I guess this is pretty standard, this is how Dynamic Programming works).

Unlike Policy Iteration, there is no explicit policy, and intermediate value functions may not correspond to any policy.

You should first familiarize with Value Function and Optimal Value Function.

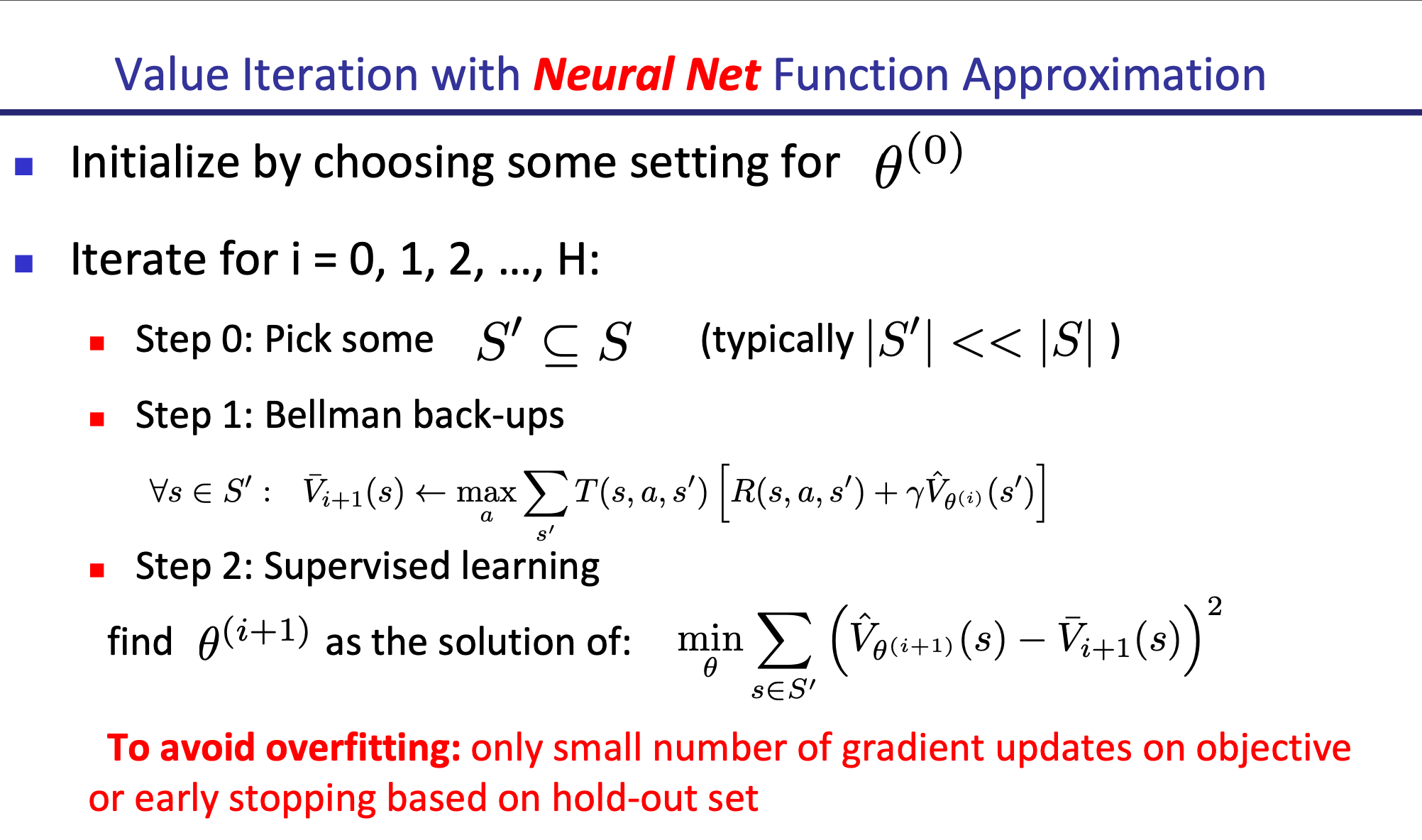

Problem: find optimal policy Solution: Iteratively apply the Bellman Equation Optimality Backup:

- We initialize for all states.

- We use to update , therefore we can get from .

- Do this for as many time steps as you need. As , we see that converges to .

Intuition

#gap-in-knowledge Understand why value iteration works (I understand the algorithm)

Think about this intuitively as a sort of lookahead. The more iterations you have, the more the values can propagate, and the better the value functions are going to be represent the optimal function, since you are taking the max every time.

Ok, this is still not intuitive to me, see proof below.

Using this Optimal Value Function, we then get an optimal policy

The algorithm is described by:

- In here, we generate the policy only at the very end, since the Bellman Optimality Backup does not depend on a policy, we only need to look at the value function. This is not the case for Value Iteration

And so you just build a table and iteratively update the table using Iterative DP. Still need to implement this from scratch, working through it through the homework.

In CS287, a good run through of the grid example is 23:03.

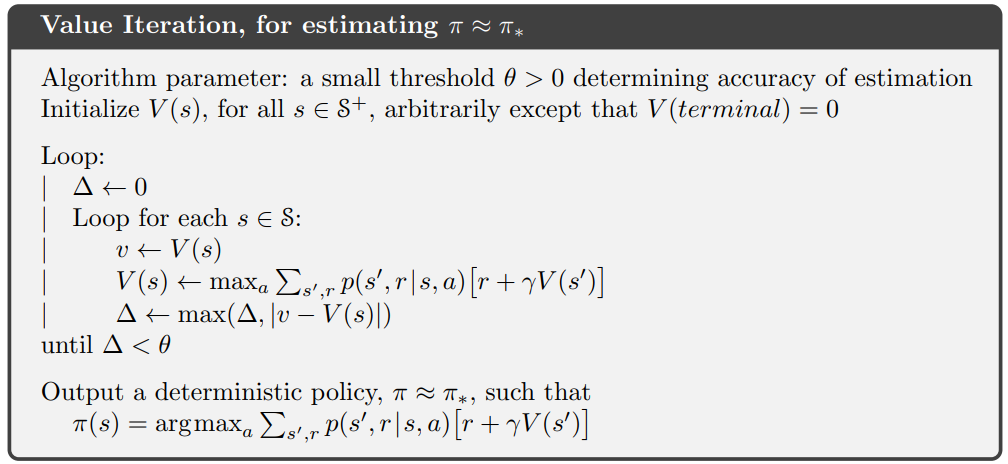

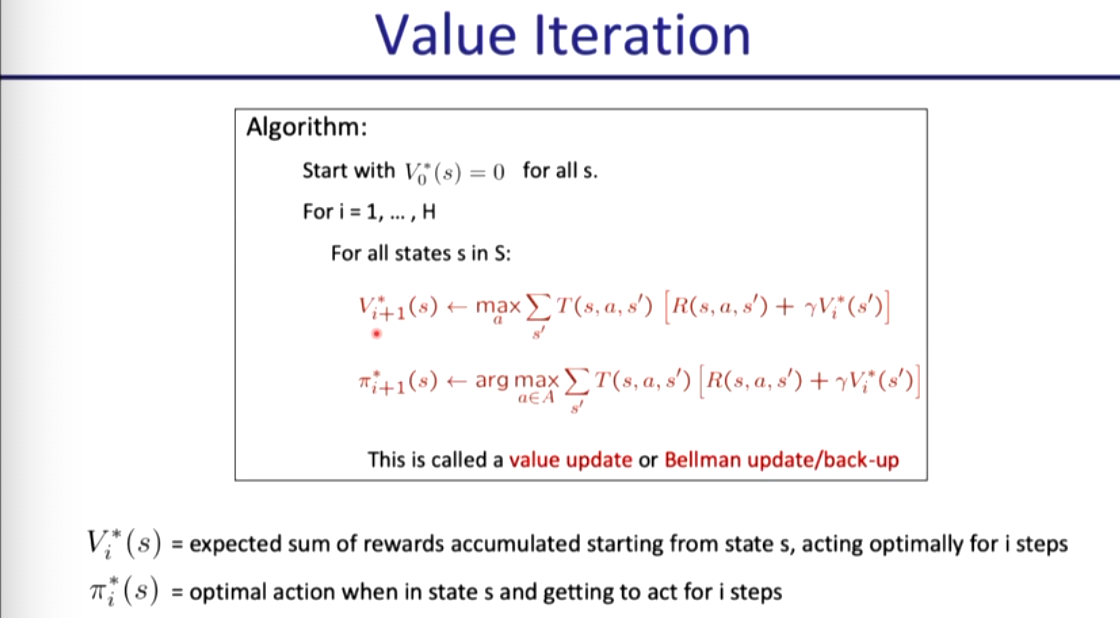

Alternative Notation

This is notation from the CS287 lecture. From an implementation perspective, it makes more sense to use and instead of and because it’s just easier as a vectorized implementation in code.

Value update:

And so the optimal policy is just taking the instead of the

#todo Convert into LabML notes

Implementation in Python

# Value Iteration, vectorized implementation

for i in range(H):

v= self.value_fun.get_values()

next_v = np.max(np.sum(self.transitions * (self.rewards + self.discount * v), axis=2), axis=1) # Bellman Optimality Backup

self.value_fun.update(next_v) # Update value function

pi_next_idx = np.argmax(np.sum(self.transitions * (self.rewards + self.discount * v), axis=2), axis=1)

pi = np.zeros(shape=self.policy._policy.shape)

pi[np.arange(pi.shape[0]), pi_next_idx] = 1

self.policy.update(pi) # Update policy

Intuition / Visualization

Okay, so I did the assignment for value iteration, so on each iteration, the value of the cells propagate backwards. Okay, you need to understand at an implementation level how the value functions and rewards are defined, i.e. Reward Engineering.

So you can see for your rollouts in the assignment, as your iteration increases, you the cells farther and farther from the reward learn to propagate.

#gap-in-knowledge I still need to build Intuition on this convergence. I know that the algorithm works if I apply it correctly, but now, it’s about getting the proof.

I think maybe the key in understand is this idea of being able to act for steps, and this is how the values propagate.

Proof of Convergence

The intuition is to show that as

Proof 2: Thinking of it as a Contraction, isn’t that the same thing? Fact: The value iteration update is a Gamma Contraction in max-norm.

Corollary: Value iteration converges to a unique fixed point.

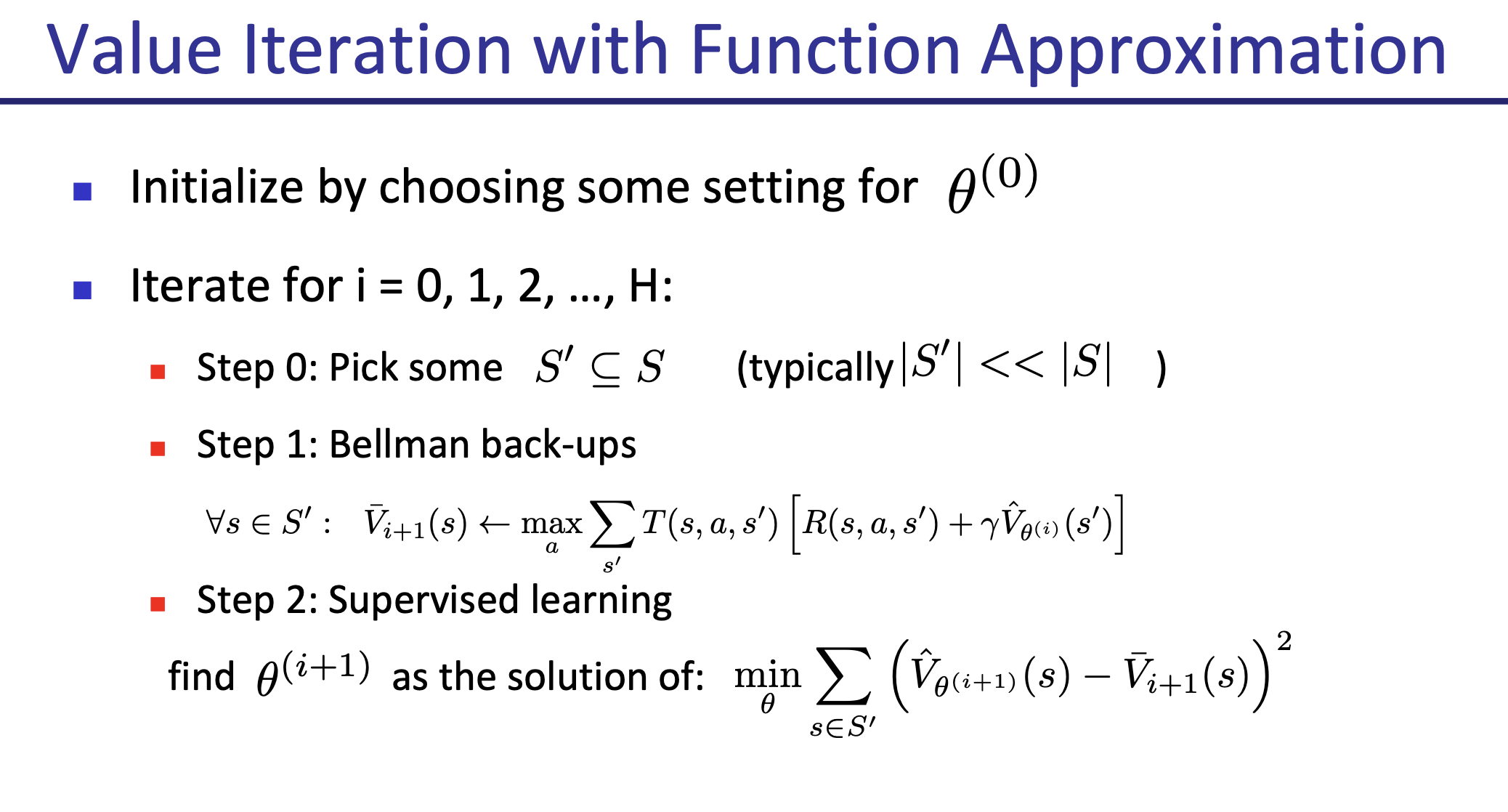

Value Iteration with Value Function Approximation

If there are very large state spaces (or you work in a continuous state space), you can first do Discretization.

Another approach is to do VFA.

For example, you can use a Neural Net.