Nonlinear Optimization Least Squares

Non-Linear Least Squares

A non-linear version of the least squares problem, where the error term is non-linear.

Options to solve:

- Ceres Solver http://ceres-solver.org/

- G2o https://github.com/RainerKuemmerle/g2o

- Eigen?

- Sophus

- CuSOLVER https://docs.nvidia.com/cuda/cusolver/index.html#using-the-cusolver-api

- Found from a quick google search https://github.com/Rookfighter/least-squares-cpp

Notes from Cyrill Stachniss course.

I find the notes from the SLAM Textbook to be really good.

The goal is to minimize the function

We solve it iteratively in the following steps:

Abstract

- Give an initial value .

- For -th iteration, we find an incremental value of, such that the object function reaches a smaller value.

- If is small enough, stop the algorithm.

- Otherwise, let and return to step 2.

- This feels very much like Gradient Descent. How do we find ? In gradient descent, it’s just some step size multiplied by the gradient of the function

Take the Taylor Polynomial expansion of :

- is the first derivative of (called the Jacobian Matrix)

- is the second derivative of (called the Hessian Matrix)

Note

Depending on whether you take or , you end up with first-order method (Gradient Descent) or second-order method (Newton’s Method).

- Gradient descent is volatile due to having to determine the step size correctly, does not guarantee convergence

- Newton’s method is computationally expensive

The book introduces two quasi-Newton methods to avoid calculating directly:

Practical advice

We usually choose one of the Gauss-Newton or Levenberg-Marquardt methods as the gradient descent strategy in practical problems.

- When the problem is well-formed, Gauss-Newton is used

- Otherwise, in ill-formed problems, we use the Levenberg-Marquardt method

In SLAM Context

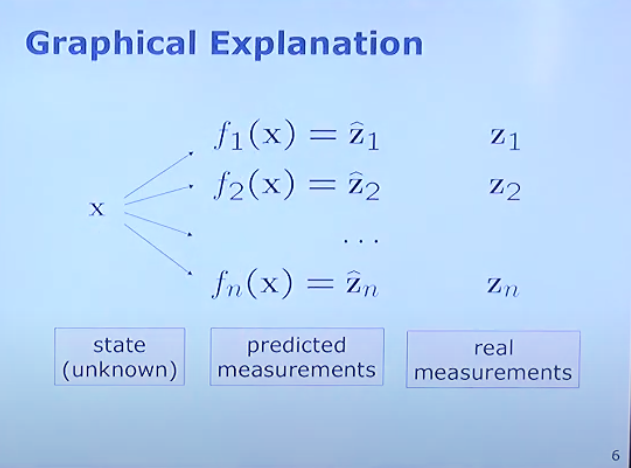

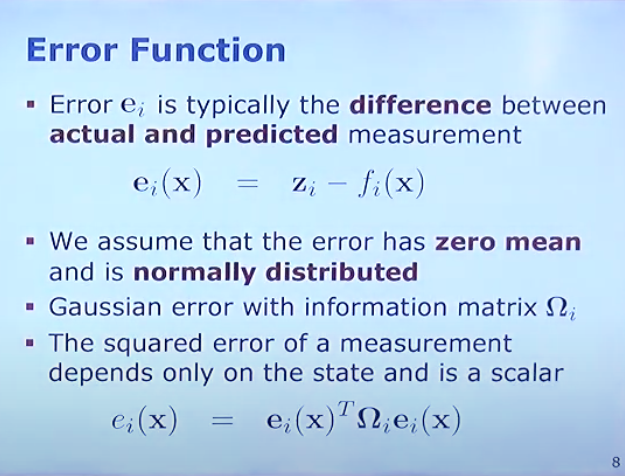

In the context of SLAM, we want to minimize the error between the current observation and the prediction.

So how is F(x) defined?

where

-

so how are those values actually computed?

-

is the 3d coordinate of the point point example

-

is the project of the 2d coordinate onto the 3d plane

What is this equation?

- Depends only on the state