WATonomous Logs

Some goals

- Occupancy networks for world modelling

2024-07-26

- Build the new computer (motherboard + GPU), and set up software on it

- Power with GPU, else won’t provide enough wattage when we run the perception stuff

- Configure other cameras on the car

- Recheck the calibration

- Have DBW set up, so we can control the car with a joystick (this is arguably most important, and then circuit breakers, and really make sure everything is good)

2024-07-22

Alright, go the cameras working, needed to factory reset (i didn’t do it correctly the first time)

As soon as multiple cameras are started, in ROS, there is big problem. so we need to use the multicc-amera setup.

Things:

- [] Mechanically, set up the rest of the 4 cameras + mount + covers

- [] Publish camera_info (intrinsics of the camera)

- [] Electrical (aryan task): Get the car powered by the main battery

2024-07-21

So we were trying to configure the cameras. Got it visualized in spinview but could not see it in ROS.

Then, I used the default launch file provided by spinview and it worked. And there were some extra settings that I did not use. And that was the fix. Always refer back to your baseline.

So i get these interesting errors in terms of the frame rate

2024-06-20

https://www.youtube.com/watch?v=2ILFU-QIc5U

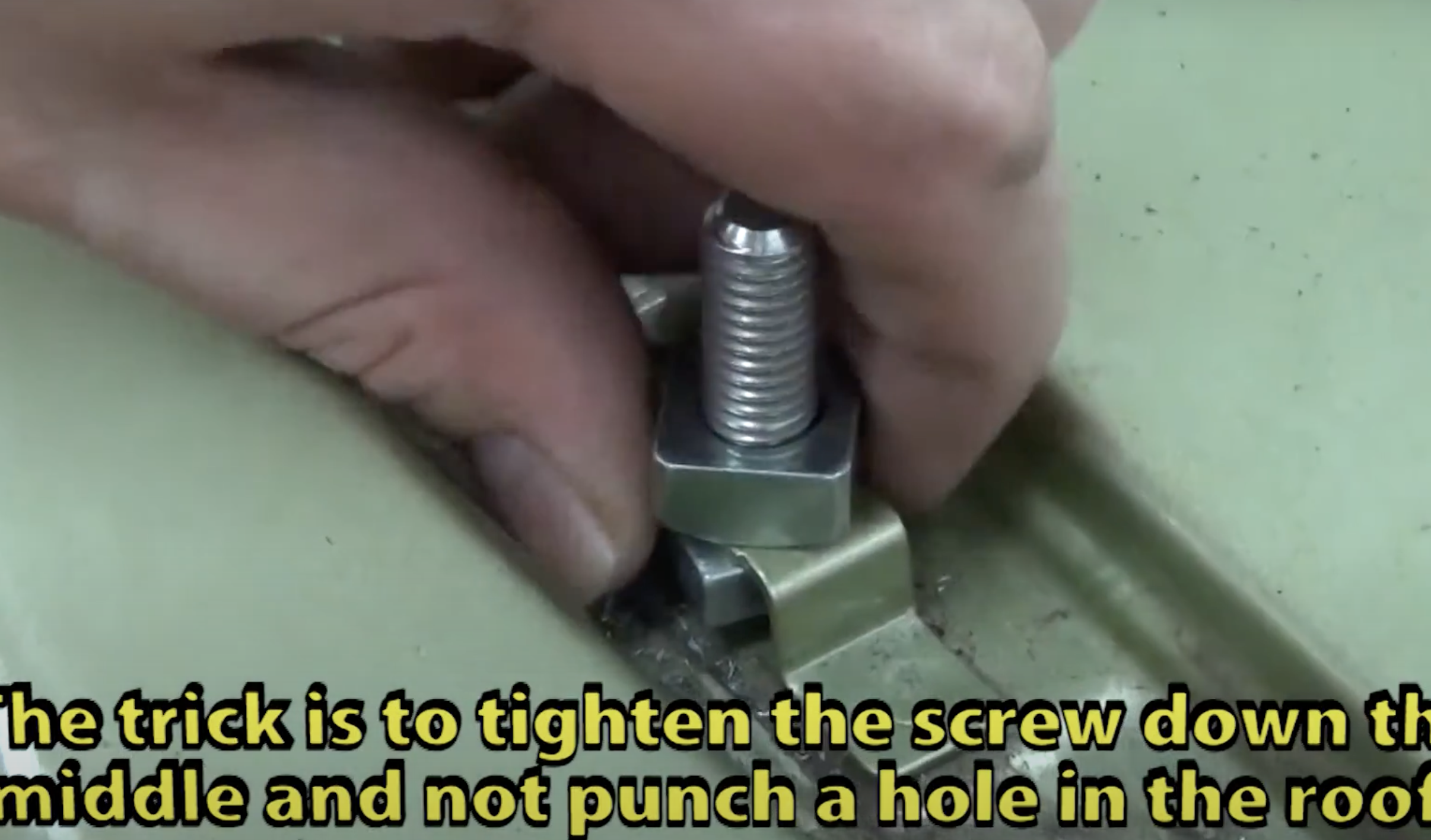

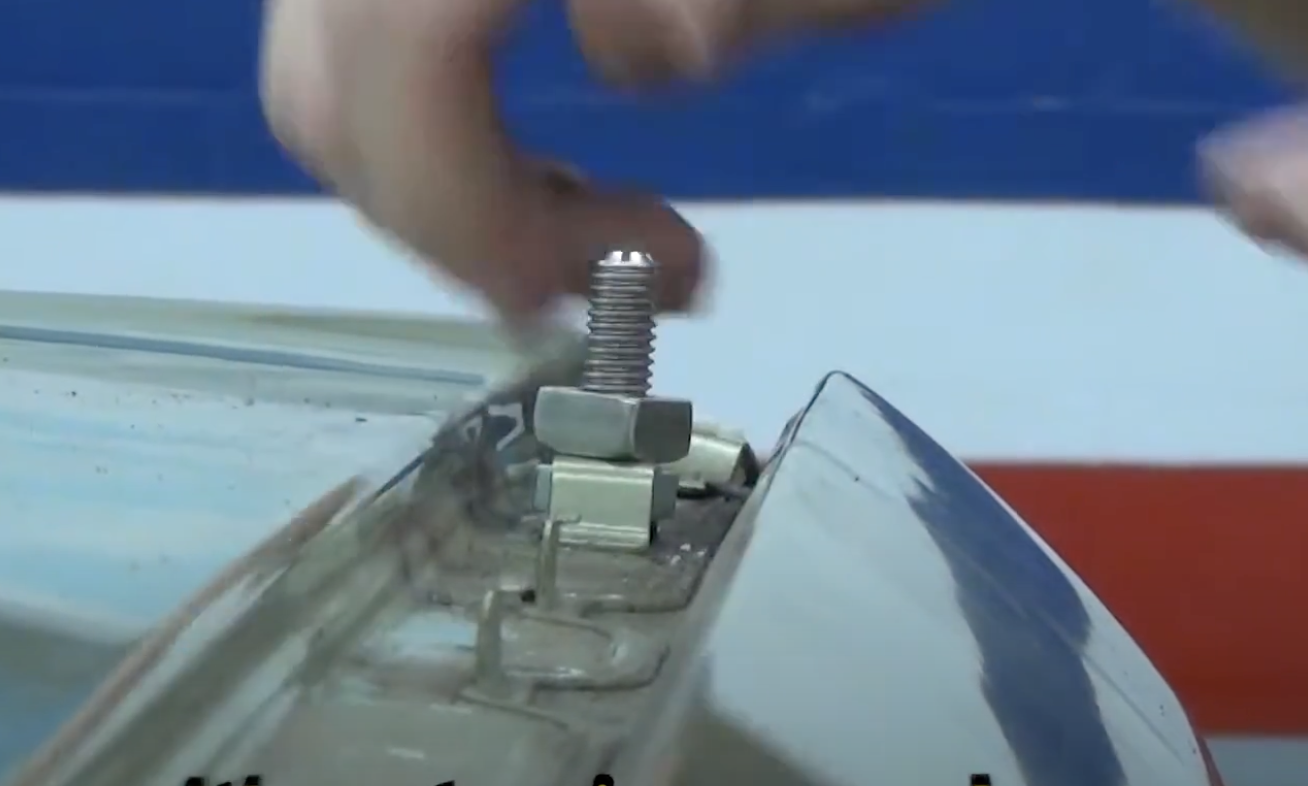

7mm screw

- 4x 10CM 4040

- 4x 8cm M7 screws

- 8x nuts

To f

2024-03-19

Working on the tracking node

ros2 topic pub /augmented_camera_detections vision_msgs/msg/Detection3DArray2024-03-18

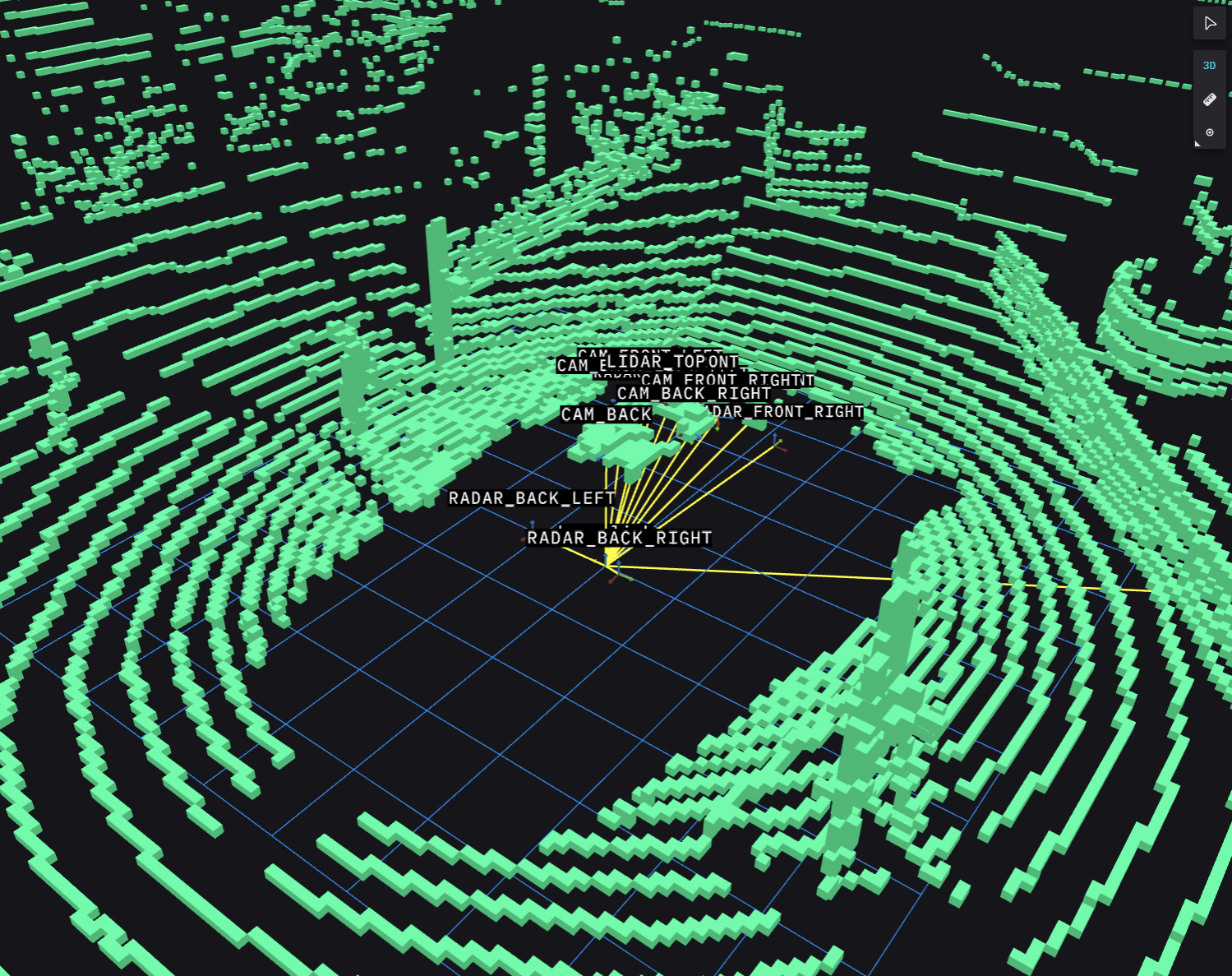

So I will be working with Mark today on the occupancy grid. One of the things that needs to be thought about is how fine-grained the voxels should be. Also, how to store them?

For visualization, only pointclouds can be accepted, and you make the size whatever the size of the voxels is. However, we might want a custom message type.

- Storing a 3D grid in memory is highly inefficient due to the sparse nature of 3d points. That’s also why pointclouds are stored in a unstructured manner

So each voxel has a particular class associated with it. We can encode this class in the pointcloud itself.

2024-03-14

We are making solid progress. I am hoping to go very deep soon. Implementing the Voxel Grid will be a first step.

Things to reason about:

- Subscribers and publishers for the 3D Tracker

- How to implement the actual 3D map

Alright, doing some fundamental work to finish off the rest of the WATonomous stack.

I need to also figure out how to implement tracking.

2024-03-10

Spent the entire night debugging with the VP people. Lots of learnings.

- Rootless docker does not configure host network mode properly. Need to run docker as root (

sudo) - RMW implementation affects it, camera node had no subscriber detected, so switching from

fastRTPStocycloneDDSfixed it

Then, it’s about getting cameras and lidars on the same subnet.

- Lidar has a static IP. Camera uses DHCP

- The cameras you guys are used to are USB based. These higher-end ones are IP based. To find them properly, the IPs need to be configured

- We forced the IP of the cameras to be on the same subnet as the lidar

Things to are still not clear:

- X11 port forwarding adds additional latency

camera_infotopic published by who? And how does it look like, it’s probably incorrect- gige cables for other 2 cameras

2024-03-08

Today, need to go over Justin’s PRs and make sure that they work with our configuration, and merge them in properly.

Need to spend some energy thinking about how to get our perception models to be more accurate:

- You have a baseline with the traffic lights and traffic signs. The issue is, as you grow your dataset, your ground truth shifts

- So the way to do this is fix your test set. You can grow the training set, but make the test set the same. We don’t have a big enough test set. Only use external images for the training, not for testing

- We need to collect more car data,

And then if you see that the added external images make the prediction better, then we add them.

In terms of changes to the model:

With justin’s model, we have the semantic segmentation model. We need to publish a colormap, similar to what nvidia does: https://nvidia-isaac-ros.github.io/repositories_and_packages/isaac_ros_image_segmentation/isaac_ros_unet/index.html

We used to have 2d occupancy grids.

Why does Tesla do 3d occupancy grids?

https://www.youtube.com/watch?v=ODSJsviD_SU https://www.youtube.com/watch?v=j0z4FweCy4M

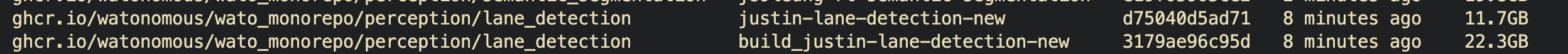

Seems like justin took it from this repo for the lane detection:

Noticing that the image published by NIVIDIA Isaac ROS is a mono8 image.

So that is pretty smart. And we should probably publish this table somewhere. Instead of hardcoding it into the C++ code

Lane Detection

Was having some issues with not having 2 different tagged images. Eddy fixed this in his PR after we use the develop mode flag. Which stops at the build stage, and not deploy.

- love this, halving the image size in production through multi-stage builds

- https://docs.docker.com/build/building/multi-stage/

- We do this by specifying a

target, you’ll see this in the respectivecompose.yaml

2024-03-07

Meeting with the leads. Today is a fundamental day. Need to go over the 4 PRs:

TODO: Make a screen recording of going through the 4 PRs

Fisheye (IMX ???)

- 2048x1536

Regular camera IMX249

- 1920x1200 (16:10 resolution)

Current lens:

These PRs are the ones I am taking care of:

- https://github.com/WATonomous/wato_monorepo/pull/80 → Traffic Light Detection DONE)

- https://github.com/WATonomous/wato_monorepo/pull/83 → Traffic Sign Detection DONE

Traffic light launch file → Pretty straightforward, making use of launch file composition to launch the pretrained yolov8 and the traffic light.

2024-02-22

Is this even necessary? I didn’t see this in the LiDAR code.

deploy:

resources:

reservations:

devices:

- driver: nvidia

count: 1

capabilities: [ gpu ]Like it works for lidarcuz

2024-02-21

version: "3.8"

x-fixuid: &fixuid

build:

target: build

services:

radar_object_detection:

<<: *fixuid

extends:

file: ../docker-compose.perception.yaml

service: radar_object_detection

command: tail -F anything

volumes:

- ${MONO_DIR}/src/perception/radar_object_detection:/home/bolty/ament_ws/src/radar_object_detection- So you can specify a target, this is how in dev mode we choose the build target, whereas normally we choose the target build

But I don’t understand how changing that target removes the ability to see the packages? Like it’s not going to do anything different.

So the problem is that when I do watod -dev up, it doesn’t install anything.

I am trying to get the lidar object detection finalized today. Need to make sure the frame id is set correctly.

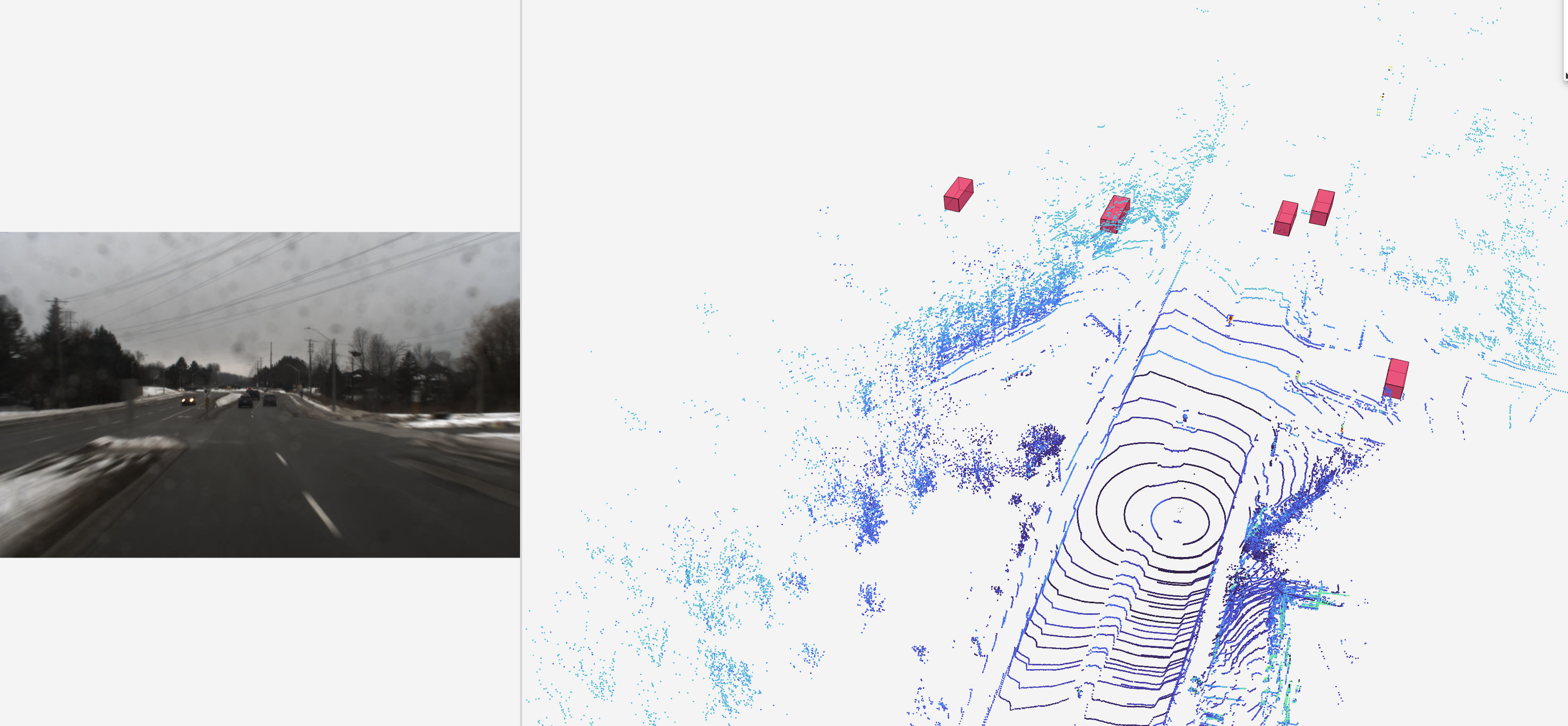

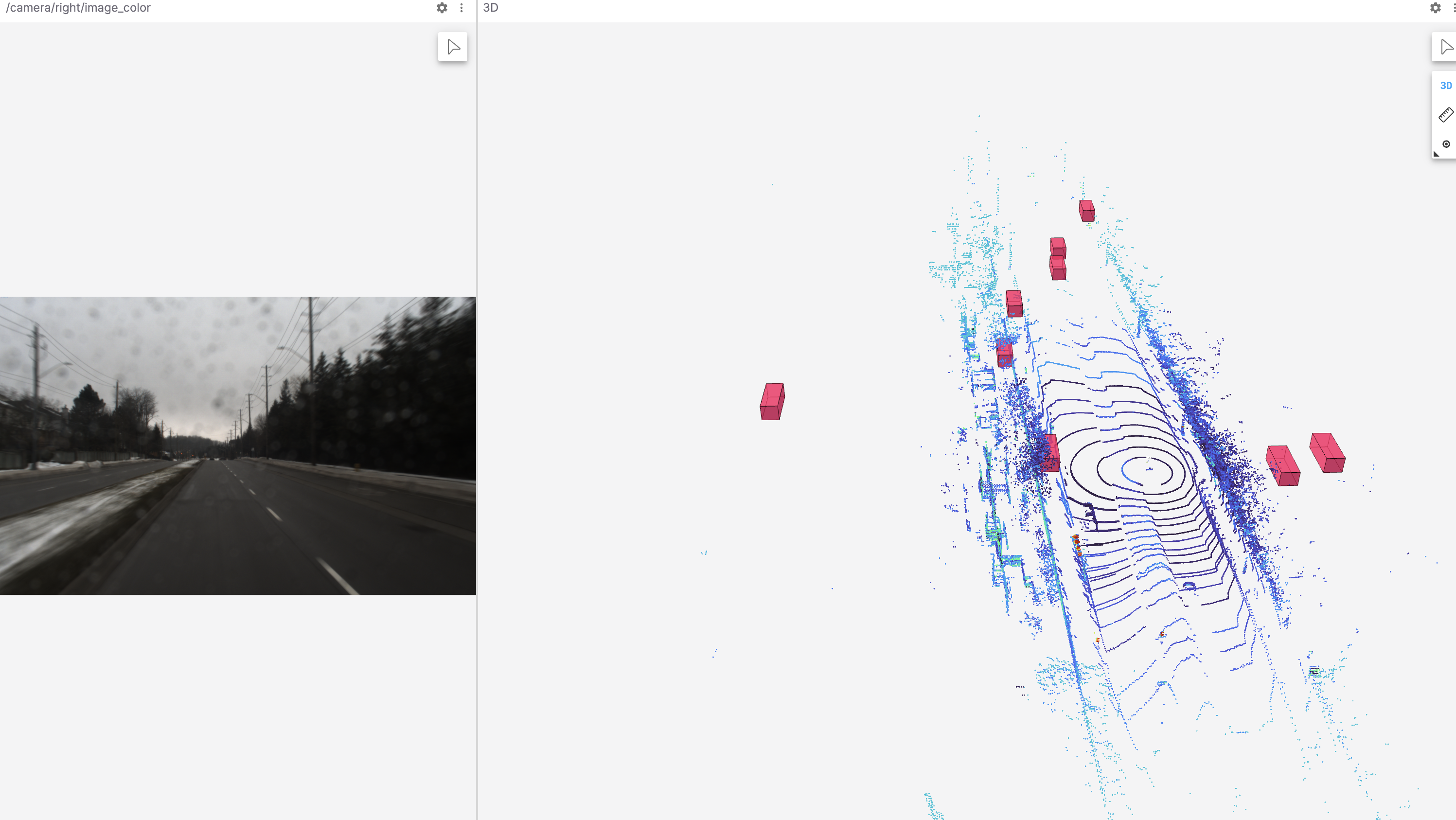

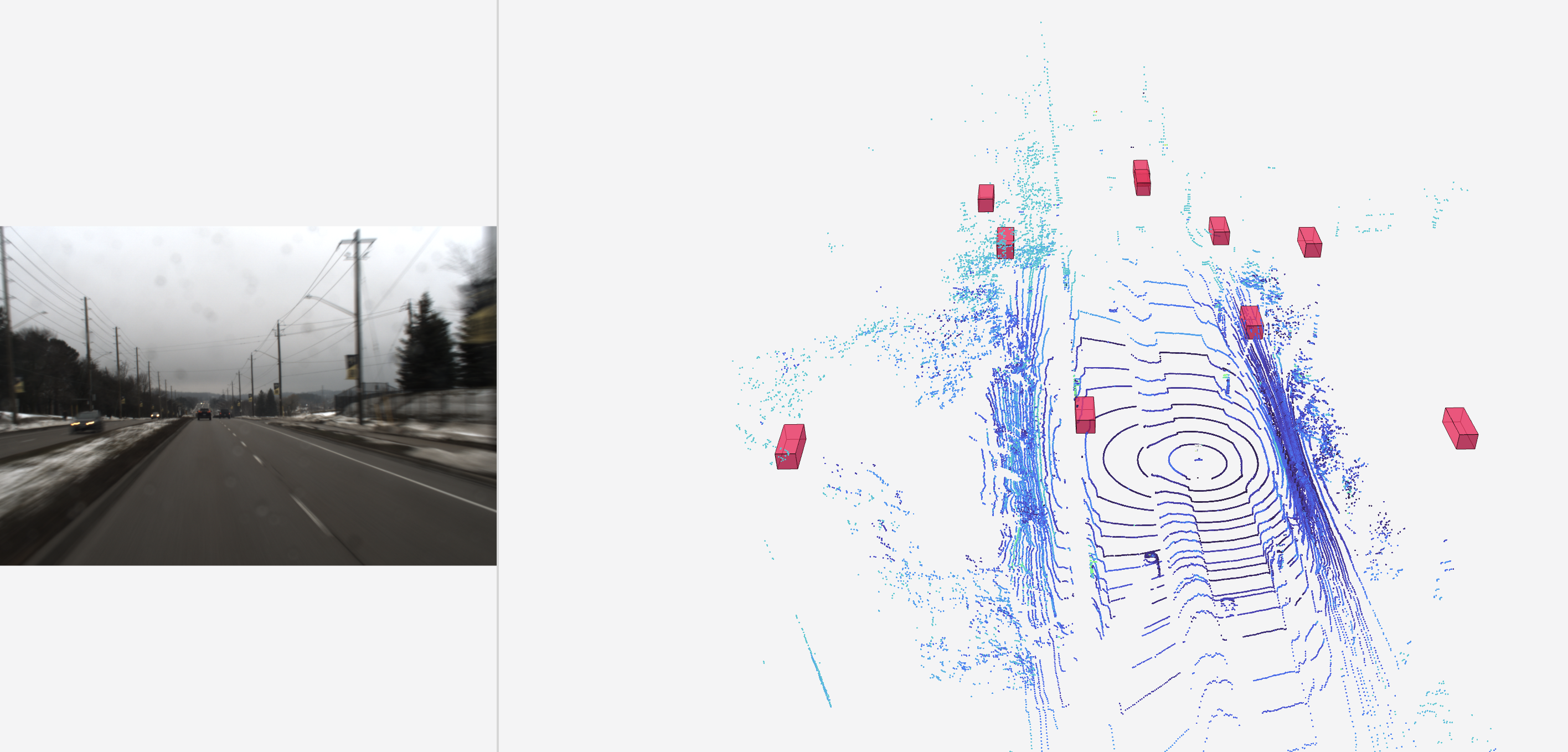

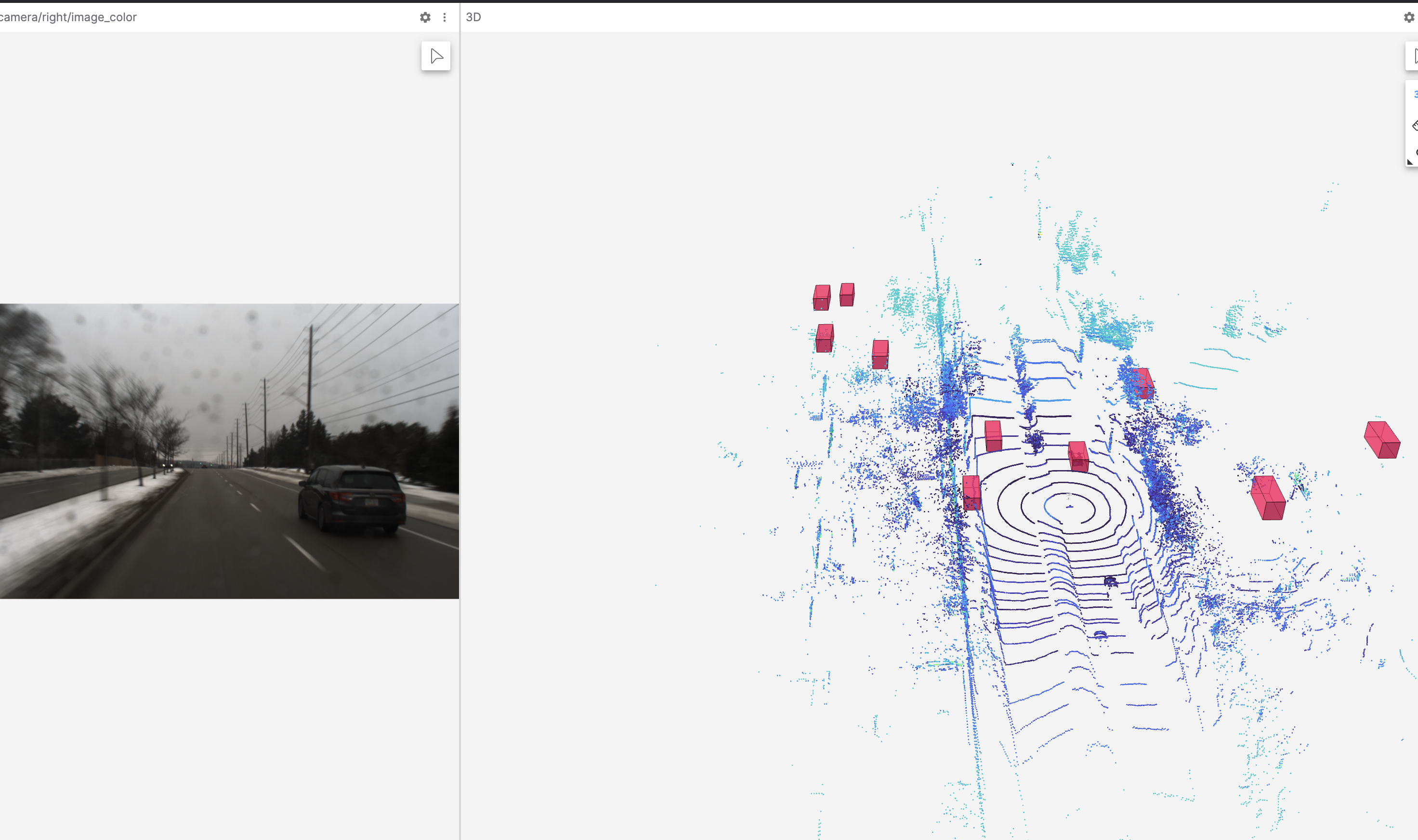

A sample of the detection

CLASS_NAMES: ['Car', 'Pedestrian', 'Cyclist']

DATA_CONFIG:

_BASE_CONFIG_: /home/bolty/OpenPCDet/tools/cfgs/dataset_configs/kitti_dataset.yaml

DATA_AUGMENTOR:

DISABLE_AUG_LIST: ['placeholder']So I was noticing that the detections were very random, and just not very accurate (I thresholded at 50% confidence too)

Lots of false positives

And there are simply cars that aren’t detected, like in this case

- You can see the car behind, also there are 2 cars in front

- two cars coming up behind, and we can’t see them

Speaking with Justin, it’s probably due to the model being trained on the KITTI Dataset which uses a 64-beam LiDAR.

2024-02-20

Alright, we have a fine-tuned model of YOLOv8.

Using Roboflow, the dataset is here: https://universe.roboflow.com/watonomous/traffic-light-detection-pmb05

Running into a small issue trying to train it inside Docker, needed to add shm_size: 8G -.> Increase shared memory

https://github.com/pytorch/pytorch/issues/2244

Trained a yolo model on that dataset. I tried augmenting the dataset, thinking that it would make it better, but it actually didn’t do anything for it. That is really disappointing.

- Retrying a 3rd time without anything augmented

- it’s because I forgot to freeze the backbone

https://github.com/ultralytics/ultralytics/blob/main/ultralytics/cfg/models/v8/yolov8.yaml

- 10 layers in the backbone

I started with 80-10-10 split.

Then tried 60-20-20 split, but not enough data.

So now doing a ~70-20-10 split, with no augmentation. And also freezing the backbone, hopefully should be really good

I think augmentation definitely helps, makes it harder for the model. It’s like Domain Randomization. To make the task easier, we need to zoom in and feed that to the model.

- So that we can detect pedestrians too, and just things that are super far away

- NO, it was just really bad resolution. YOLO uses different image pyramids, that doesn’t work if your image is already so low resolution

Why it wasn't working

Image resolution wasn’t good enough. Switched from default of 480x480 → 1024x1024

- We applied

So the latest model trained on non-augmented data works the best. But the only way I am checking is by looking at the video feed, which is super qualitative. You can look at the training curves, but the dataset is entirely different, so you can’t really quantify?

Another lesson

YOLO expects the image to be square. This has implications once we try to go back from 2D to 3D. Therefore, the image first needs to go back to 3D again.

2024-02-19

Spent the last 2 weeks leading the perception team and trying to parallelize, doing Divide and Conquer. Today, focusing on doing more technical work. Here are everything I am going to tackle in order of priorities:

- Traffic Light Detection

- Traffic Sign Detection

- 3D Object Detection

- 2D / 3D Association with Meshva

- Lane Detection with Justin

I got sidetracked with helping Albert set up the eGPU, and spent like 3 hours with Elbert trying to figure out. Full details in the eGPU page.

Thunderbolt boot means like booting from an external device. We turned it off.

nvidia-settings

Change from NVIDIA On-Demand to NVIDIA (Performance Mode), so we always use the eGPU. This was the right idea, but not entirely

We need to do

sudo prime-select nvidia- so it defaults to using NVIDIA gpu

Then, I spent the rest of the evening working on setting up the sensors.

2024-02-01

Alright, so the eGPU seems to be working, we want to set up docker with it.

I am looking at our older rosbags and seeing what is good and not good:

ros2 bag play -l ~/ament_ws/rosbags2/year3/test_track_days/W20.0/public_road_to_TT

- 3 main topics (other topics are custom messages, such as traffic sign detections):

/camera/right/image_color [sensor_msgs/msg/Image]

/navsat/odom [nav_msgs/msg/Odometry]/velodyne_points [sensor_msgs/msg/PointCloud2]

A lot of the files have bad conversion error.

So no left and right camera

2024-01-27

I spent quite some time thinking about the architecture for Perception.

2024-01-18

Set up the eGPU. See Razer Core X Chroma.

2023-06-18

Alright, good progress, but I think the next logical step is to do object tracking.

Or you could do some sort of frustrum clustering over the bounding boxes.

Also, multi-task learning is super important.

And doing occupancy grids and real-time slam?

I want to check today that the predictions outputted are good.

The inferencing speed is platform specific, so for now, if we don’t need these deep learning models, then don’t work on them.

- Run BEVFusion on ROS2

- This definitely seems the most promising, and they can get up to 30 fps?? Insane

I found this repo maintained by the people at Nvidia: https://github.com/NVIDIA-AI-IOT/Lidar_AI_Solution/tree/master/CUDA-BEVFusion

- It’s a tensorRT implementation, so we can just directly use that!!

Running things on containers is a lot bulkier, but it prevents these really annoying dependency errors from happening:

So I managed to figure out everything (most of it). Needed to use an upgraded TensorRT base image so the repo works. (I see that we previously used 21.07 for Pointpillars).

FROM nvcr.io/nvidia/tensorrt:23.01-py3 as baseYou can check your CUDA driver version with nvidia-smi.

Also, figured out the environment variables for tool/environment.sh by asking chatgpt

There’s this really weird error

failed to solve: rpc error: code = Unknown desc = failed to solve with frontend dockerfile.v0: failed to create LLB definition: failed to authorize: rpc error: code = Unknown desc = failed to fetch anonymous token: unexpected status: 401 Unauthorized

For some reason, I built the file from source, like

docker build . -t cuda-bevfusion

And the issue was gone afterwards??

YESSS, I got BEVFusion running with pretty fast.

-

I want to make this into a clean dockerfile, and then push this

-

Download model weights from Google Drive directly, instead of locally

-

I did store a local copy under

/mnt/scratch/cuda-bevfusion

Alright, the question will be, how can we integrate BEVFusion into ROS2?

- It seems quite straigthforward. All, you need to do is use this script to do the inferencing https://github.com/NVIDIA-AI-IOT/Lidar_AI_Solution/blob/master/CUDA-BEVFusion/tool/pybev.py

I kind of brain farted… it was because the tensorrt base image is using ubuntu 20.04. But humble runs on 22.04.

Alright, so made progress, now I have this error

multimodal_object_detection_1 | rclpy._rclpy_pybind11.RCLError: failed to initialize logging: Failed to create log directory: //.ros/log, at ./src/rcl_logging_spdlog.cpp:90

I think it has to do with permission issues. I need to revisit the dockerfile.

Found the fix through Justin’s implementation.

ENTRYPOINT ["/usr/local/bin/fixuid", "-q", "/home/docker/wato_ros_entrypoint.sh"]

In setup.py, change from packages=[package_name] to

packages=find_packages(exclude=['test']),

Links:

- https://answers.ros.org/question/367793/including-a-python-module-in-a-ros2-package/

- https://answers.ros.org/question/349790/ros2-python-relative-import-of-my-scritps/

Alright, so the node runs. Now, we want to run BEVFusion.

Okay, so when I was building, I was running into errors

bash tool/run.sh

however, I fixed it.. how did I fix it? I think it was an issue with the paths of the executables. ummm i think the culprit is

FROM nvcr.io/nvidia/tensorrt:23.05-py3 as base

This runs Ubuntu 22.04. Previously i used

FROM nvcr.io/nvidia/tensorrt:23.01-py3 as base

which uses Ubuntu 20.04.

Yea, I think I need to figure out how to run Humble on Ubuntu 20.04. Because a lot of the CUDA stuff still is not supported in Ubuntu 22.04.

So I used this Isaac ROS image

FROM nvcr.io/nvidia/isaac/ros:x86_64-ros2_humble_1930bbec7e7704243656b695b9df7844 as base

This seems to be the latest https://catalog.ngc.nvidia.com/orgs/nvidia/teams/isaac/containers/ros

They don’t even have tensorrt installed, so you need to sudo apt-get install tensorrt

So everything was building well until the end. It seems that cuda 12.1 is not compatible yet. So I need to use cuda 12.0. Downgraded to an older version of Isaac ROS.

FROM nvcr.io/nvidia/isaac/ros:x86_64-humble-nav2_7356480e8a4f3484b21ae13ec5c959ee as base

nvcc --versionWell, it seems like no matter which base I use, the cuda version is still 12.1..? When I run nvidia-smi, it gives 12.0.

This is surely the issue. How can I get 12.0??

Trying to update the links

sudo ln -sfn /usr/local/cuda-12 /usr/local/cuda

And then sanity check the link ls -l /usr/local/cuda

this is so annoying. Can i not have an image with cuda 12.0??

- So yea, I don’t know why there are no Isaac ROS container images with cuda 12.0 installed. Maybe I am stupid.

2023-06-17

I think I can get all of this done today:

- Figure out how to run rosbags in humble?

- Seems like there are some infrastructure, use

data_stream - Command is below

- Seems like there are some infrastructure, use

- Implement YOLOv8

- Figure out how to visualize the rosbags in foxglove

- use

vis_tools

- use

I still really believe in multi-task learning. I think I need a very deep and good understanding of how transformers work to do good and important work.

Make sure that the bags are mounted properly:

volumes:

- /mnt/wato-drive2/nuscenes_mcap/ros2bags:/home/docker/ament_ws/nuscenes

- /mnt/wato-drive2/rosbags2:/home/docker/ament_ws/rosbags2I think the data_stream node is fine?

Sidenote: I love how much more confident I am running these commands.

ACTIVE_PROFILES=${ACTIVE_PROFILES:-"vis_tools data_stream"}Great, I did it. So basically, use the Foxglove bridge. No need to use the Rosbridge. Foxglove bridge works because we are currently using Humble. That is great news.

What are the commands to run the rosbags? It was in the README.md.

watod2 run data_stream ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0061/NuScenes-v1.0-mini-scene-0061_0.mcapI want to run our local recorded data. Figured out the path, which was

/mnt/wato-drive2/rosbags2/year3/test_track_days/W20.0/public_road_to_TT/public_road_to_TT.db3

HOWEVER, doesn’t seem to be workikng.

I will use NuScenes for now.

ls /mnt/wato-drive2/nuscenes_mcap/ros2bags

so we can simply run something like

watod2 run data_stream ros2 bag play ./rosbags2/year3/test_track_days/W20.0/public_road_to_TT/public_road_to_TT.db3

So there is an issue, because we are using custom message types. The rosbag player doesn’t recognize those message types. Therefore, what can we do?

I think the conversions of the older bags don’t work. For now, I am going to stick to nuscenes.

Some bags to quantify:

watod2 run data_stream ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0553/NuScenes-v1.0-mini-scene-0553_0.mcap --topics /CAM_FRONT/image_rect_compressed

watod2 run data_stream ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0061/NuScenes-v1.0-mini-scene-0061_0.mcap --topics /CAM_FRONT/image_rect_compressed

watod2 run data_stream ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0757/NuScenes-v1.0-mini-scene-0757_0.mcap --topics /CAM_FRONT/image_rect_compressedI think get YOLOv5 working again on the compressed nuscenes bag.

- Then, you can get YOLOv8 working.

- Then, get the onnx version working so that it runs super fast.

Question is: How do ROS compressed images work?

Alright, got it working. ChatGPT told me the answer

# convert ros Image to cv::Mat

if self.compressed:

np_arr = np.frombuffer(msg.data, np.uint8)

cv_image = cv2.imdecode(np_arr, cv2.IMREAD_COLOR)

else:

try:

cv_image = self.cv_bridge.imgmsg_to_cv2(

image, desired_encoding="passthrough")

except CvBridgeError as e:

self.get_logger().error(str(e))

returnSo basically, high level steps to reproduce this:

- Set the active profiles to use

vis_toolsand camera_detection - Start both nodes with watod up

- Run a demo rosbag, such as

watod2 run data_stream ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0061/NuScenes-v1.0-mini-scene-0061_0.mcapInitial experiments show around 3-5hz, which is really slow. This is because we haven’t really compressed it.

ros2 topic hz /annotated_img

average rate: 4.884

min: 0.119s max: 0.382s std dev: 0.08410s window: 7

average rate: 3.599

min: 0.119s max: 0.804s std dev: 0.20109s window: 9

average rate: 3.324

min: 0.119s max: 0.804s std dev: 0.21217s window: 14

average rate: 3.223

min: 0.119s max: 0.804s std dev: 0.21615s window: 18

average rate: 3.219

min: 0.119s max: 0.804s std dev: 0.19981s window: 22

average rate: 2.986

min: 0.119s max: 1.078s std dev: 0.23996s window: 26

average rate: 3.146

min: 0.119s max: 1.078s std dev: 0.22644s window: 31

Okay, so we gotta compress and get it running fast as possible.

One of the issues that is a bottleneck is actually streaming the data on foxglove. Therefore, you should try only streaming to a particular topic, such as /CAM_FRONT/image_rect_compressed.

i keep getting

foxglove_1 | [foxglove_bridge-1] [WARN] [1687056080.781289199] [foxglove_bridge]: [WS] 192.168.224.1:49448: Send buffer limit reached

I think the fix is to just stream the data that we care about. So only the camera visualizations. ah yess that is a lot better

ros2 bag play ./nuscenes/NuScenes-v1.0-mini-scene-0061/NuScenes-v1.0-mini-scene-0061_0.mcap --topics /CAM_FRONT/image_rect_compressed

Umm, it still seems to slow down sometimes.

ros2 topic hz annotated_img

average rate: 3.031

min: 0.216s max: 0.442s std dev: 0.11012s window: 4

average rate: 3.714

min: 0.180s max: 0.442s std dev: 0.09734s window: 9

average rate: 3.865

min: 0.180s max: 0.442s std dev: 0.08269s window: 14

average rate: 3.736

min: 0.180s max: 0.526s std dev: 0.09795s window: 18

average rate: 3.745

min: 0.180s max: 0.526s std dev: 0.09597s window: 22

average rate: 3.792

min: 0.180s max: 0.526s std dev: 0.09096s window: 27

average rate: 3.762

How fast is the data being published at?

ros2 topic hz /CAM_FRONT/image_rect_compressed

average rate: 12.856

min: 0.002s max: 0.189s std dev: 0.04910s window: 13

average rate: 12.319

min: 0.002s max: 0.189s std dev: 0.03945s window: 26

average rate: 11.894

min: 0.002s max: 0.189s std dev: 0.03897s window: 37

average rate: 11.774

min: 0.002s max: 0.189s std dev: 0.03956s window: 50

average rate: 11.815

min: 0.002s max: 0.189s std dev: 0.03963s window: 62

Yea, so the inference is still really slow.