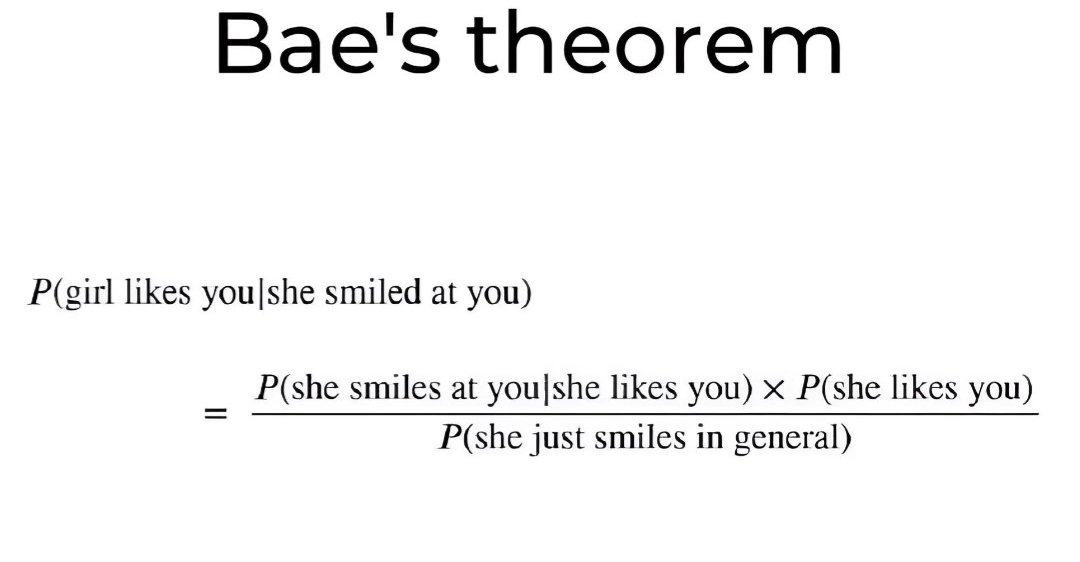

Bayes’ Theorem

Bayes’ Rule is an application of the Law of Total Probability (LOTP) together with the definition of conditional probability.

Bayes’ theorem describes the probability of an event based on prior knowledge of conditions that might be related to the event.

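Let $A_1, A_2, \dots, A_n$ be a partition of the sample space $S$ and $B$ be any event with $P(B) > 0$, then

$$P(A_i \mid B) = \frac{P(B \mid A_i)\,P(A_i)}{P(B)} = \frac{P(B \mid A_i)\,P(A_i)}{\sum_{j=1}^{n} P(B \mid A_j)\,P(A_j)}$$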

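Alternate notation that is more useful for ML:

$$P(\theta \mid D) = \frac{P(D \mid \theta)\,P(\theta)}{P(D)}$$

where $D$ is your data and $\theta$ are your model parameters. $P(\theta \mid D)$ is the posterior, $P(D \mid \theta)$ the likelihood, $P(\theta)$ the prior, and $P(D)$ the evidence.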
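A minimal grid-approximation sketch of $P(\theta \mid D)$, assuming (for illustration only) that $\theta$ is a coin's heads probability, the prior is uniform over an 11-point grid, and the data $D$ is 7 heads and 3 tails. The function name `grid_posterior` is hypothetical.

```python
# Grid approximation of P(theta | D) for a coin's heads probability theta.
# Assumptions (illustrative only): uniform prior over an 11-point grid,
# observed data D = 7 heads and 3 tails from independent tosses.

def grid_posterior(thetas, prior, heads, tails):
    """Return P(theta | D) at each grid point via Bayes' theorem."""
    # Likelihood P(D | theta); the binomial coefficient is omitted
    # because it is the same for every theta and cancels on normalization.
    likelihood = [t**heads * (1 - t)**tails for t in thetas]
    # Unnormalized posterior: likelihood * prior.
    unnorm = [lik * p for lik, p in zip(likelihood, prior)]
    # Evidence P(D) = sum over theta of P(D | theta) P(theta).
    evidence = sum(unnorm)
    return [u / evidence for u in unnorm]

thetas = [i / 10 for i in range(11)]
prior = [1 / len(thetas)] * len(thetas)
posterior = grid_posterior(thetas, prior, heads=7, tails=3)

for t, p in zip(thetas, posterior):
    print(f"theta={t:.1f}  P(theta|D)={p:.4f}")
```

The posterior peaks at $\theta = 0.7$, the observed heads fraction, because the prior is flat and only the likelihood shapes it.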

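Proof of Bayes’ Theorem

How is Bayes’ rule derived? Apply the definition of conditional probability in both directions, and use the fact that AND (intersection) is commutative, $P(A \cap B) = P(B \cap A)$:

$$P(A_i \mid B)\,P(B) = P(A_i \cap B) = P(B \cap A_i) = P(B \mid A_i)\,P(A_i)$$

Dividing both sides by $P(B)$ gives Bayes’ theorem, and expanding $P(B) = \sum_{j} P(B \mid A_j)\,P(A_j)$ with the LOTP gives the full form.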

Example Use of Bayes’ Theorem

Example

You have one fair coin and a biased coin that lands on heads with probability $\frac{3}{4}$. A coin is chosen at random and tossed three times. If we observe three heads in a row, what is the probability that the fair coin was chosen?

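Solution: Let $F$ = the fair coin was chosen, $F^c$ = the biased coin was chosen, and $H$ = 3 heads observed in 3 tosses.

We want to find $P(F \mid H)$, so we use Bayes’ Theorem and calculate

$$P(F \mid H) = \frac{P(H \mid F)\,P(F)}{P(H \mid F)\,P(F) + P(H \mid F^c)\,P(F^c)} = \frac{\left(\frac{1}{2}\right)^3 \cdot \frac{1}{2}}{\left(\frac{1}{2}\right)^3 \cdot \frac{1}{2} + \left(\frac{3}{4}\right)^3 \cdot \frac{1}{2}} = \frac{8}{35} \approx 0.23$$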
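A small exact-arithmetic check of the coin example, written as a general partition form of Bayes’ theorem (priors times likelihoods, normalized by the LOTP sum). The helper name `bayes_partition` is hypothetical; `fractions.Fraction` keeps the answer exact.

```python
from fractions import Fraction

def bayes_partition(priors, likelihoods):
    """Posterior P(A_i | B) for a partition A_1..A_n, given P(A_i) and P(B | A_i)."""
    joint = [p * lik for p, lik in zip(priors, likelihoods)]  # P(B | A_i) P(A_i)
    evidence = sum(joint)  # LOTP: P(B) = sum_j P(B | A_j) P(A_j)
    return [j / evidence for j in joint]

# Partition: fair coin (F) vs biased coin (F^c), each chosen with probability 1/2.
priors = [Fraction(1, 2), Fraction(1, 2)]
# P(H | F) = (1/2)^3 and P(H | F^c) = (3/4)^3 for three heads in three tosses.
likelihoods = [Fraction(1, 2) ** 3, Fraction(3, 4) ** 3]

p_fair, p_biased = bayes_partition(priors, likelihoods)
print(p_fair, p_biased)  # 8/35 27/35
```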

Relation to more advanced control theory / ML

This is why we say (from Kalman Filter in Python)

$$\text{posterior} = \frac{\text{likelihood} \times \text{prior}}{\text{normalization}}$$

- This is at the core of the Bayes filter update.

You just keep updating the posterior: after each new measurement, the old posterior becomes the new prior.
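A minimal sketch of that recursive update, assuming a discrete set of hypotheses and only the measurement-update step. A full Bayes filter also has a prediction step; it is omitted here because the hypothesis (which coin was chosen) does not change between tosses. The hypotheses and probabilities reuse the coin example; the name `bayes_update` is hypothetical.

```python
from fractions import Fraction

def bayes_update(prior, likelihood):
    """One measurement update over discrete hypotheses.

    posterior[i] is proportional to likelihood[i] * prior[i], normalized to sum to 1.
    """
    unnorm = [lik * p for lik, p in zip(likelihood, prior)]
    total = sum(unnorm)  # normalization constant P(measurement)
    return [u / total for u in unnorm]

# Hypotheses: [fair coin, biased coin].
belief = [Fraction(1, 2), Fraction(1, 2)]   # initial prior
p_heads = [Fraction(1, 2), Fraction(3, 4)]  # P(heads | hypothesis)

for toss in ["H", "H", "H"]:
    likelihood = p_heads if toss == "H" else [1 - p for p in p_heads]
    belief = bayes_update(belief, likelihood)  # old posterior becomes new prior
    print(toss, belief[0])
# H 2/5
# H 4/13
# H 8/35
```

After three heads the fair-coin belief is 8/35, the same as applying Bayes’ theorem once to all three tosses, since the tosses are conditionally independent given the coin.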

See Kalman Filter.

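Bayes’ theorem is super useful because it turns a hard problem into an easy one: it lets us compute the conditional probability we care about from the reverse conditional, which is usually much easier to estimate.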

Hard problems:

- P(Cancer = True | Test = Positive)

- P(Rain = True | Readings)

Stated like that, the problems seem unsolvable.

Easy problems:

- P(Test = Positive | Cancer = True)

- P(Readings | Rain = True)

Bayes’ Theorem lets us solve the hard problem by solving the easy problem.
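To make the hard/easy flip concrete, here is a sketch for a binary hypothesis. The numbers are hypothetical, chosen only for illustration and not real test statistics: 1% prevalence, $P(\text{Positive} \mid \text{Cancer}) = 0.9$, and a 5% false-positive rate. The helper name `posterior_binary` is also hypothetical.

```python
from fractions import Fraction

def posterior_binary(p_e_given_h, p_e_given_not_h, prior_h):
    """P(H | E) from the 'easy' direction P(E | H), P(E | not H), and the prior P(H)."""
    numerator = p_e_given_h * prior_h
    evidence = numerator + p_e_given_not_h * (1 - prior_h)  # LOTP over {H, not H}
    return numerator / evidence

# Hypothetical inputs (illustrative only):
# P(Positive | Cancer) = 9/10, P(Positive | no Cancer) = 1/20, P(Cancer) = 1/100.
p = posterior_binary(Fraction(9, 10), Fraction(1, 20), Fraction(1, 100))
print(p)  # 2/13
```

Even with a fairly accurate test, $P(\text{Cancer} \mid \text{Positive})$ comes out around 15% under these assumptions, because the low prior dominates.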

Bayes rule with Conditioning

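From CS287: Bayes’ rule still holds when every term is conditioned on the same extra variable $z$ (background knowledge):

$$P(x \mid y, z) = \frac{P(y \mid x, z)\,P(x \mid z)}{P(y \mid z)}$$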
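A quick numerical sanity check of Bayes’ rule with conditioning, assuming a hypothetical joint distribution $P(x, y, z)$ over three binary variables built from arbitrary positive weights (illustrative only). It compares $P(x \mid y, z)$ computed directly against the right-hand side of the conditioned rule, using exact fractions.

```python
from fractions import Fraction
from itertools import product

# Hypothetical joint distribution P(x, y, z) over three binary variables,
# built from arbitrary positive weights 1..8 (illustrative only).
weights = dict(zip(product([0, 1], repeat=3), range(1, 9)))
total = sum(weights.values())
joint = {k: Fraction(w, total) for k, w in weights.items()}

def prob(**fixed):
    """Marginal probability that the named variables (x, y, z) take the given values."""
    names = ("x", "y", "z")
    return sum(p for k, p in joint.items()
               if all(k[names.index(n)] == v for n, v in fixed.items()))

def check(x, y, z):
    direct = prob(x=x, y=y, z=z) / prob(y=y, z=z)        # P(x | y, z)
    p_y_given_xz = prob(x=x, y=y, z=z) / prob(x=x, z=z)  # P(y | x, z)
    p_x_given_z = prob(x=x, z=z) / prob(z=z)             # P(x | z)
    p_y_given_z = prob(y=y, z=z) / prob(z=z)             # P(y | z)
    via_bayes = p_y_given_xz * p_x_given_z / p_y_given_z
    return direct == via_bayes

print(all(check(x, y, z) for x, y, z in product([0, 1], repeat=3)))  # True
```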

Applied

https://x.com/cneuralnetwork/status/1870051432981819836