Kalman Filter

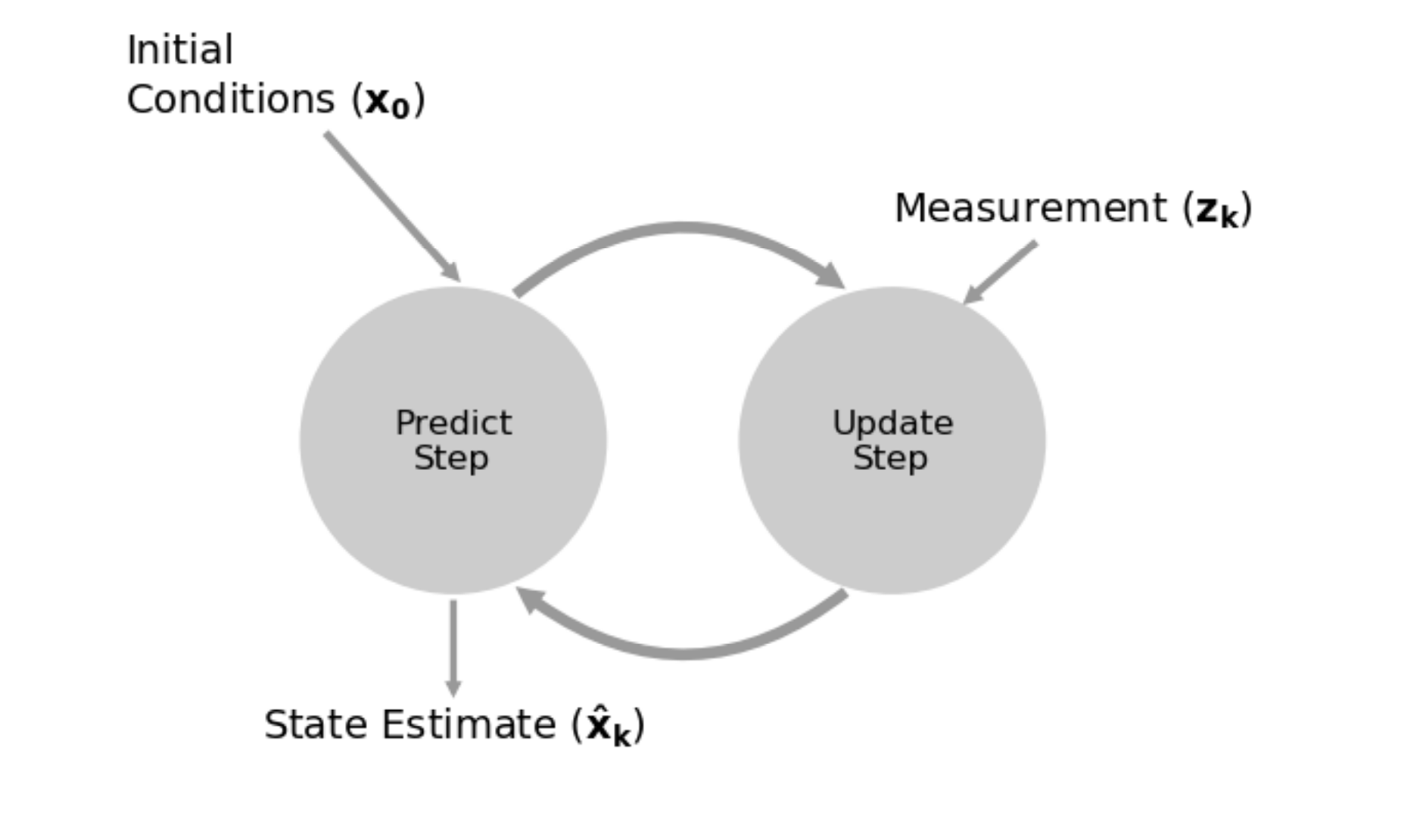

A Kalman filter is a mathematical algorithm for State Estimation based on noisy measurements.

- Recursive algorithm that uses a combination of predictions and measurements to estimate the state of a system over time

Resources

Important Idea

“Multiple data points are more accurate than one data point, so throw nothing away no matter how inaccurate it is”

- Ahh so this is also an idea I heard from Steve Macenski
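A quick way to convince yourself of this: fusing two independent Gaussian estimates of the same quantity always gives a variance smaller than either input, even when one sensor is terrible. This is only a sketch under that independence assumption; the helper name `fuse` and the numbers are made up for illustration.

```python
def fuse(mean1, var1, mean2, var2):
    """Combine two independent Gaussian estimates of the same quantity."""
    mean = (var1 * mean2 + var2 * mean1) / (var1 + var2)
    var = (var1 * var2) / (var1 + var2)
    return mean, var

# A good sensor (variance 1) and a very poor one (variance 100).
good = (10.0, 1.0)
poor = (14.0, 100.0)

fused_mean, fused_var = fuse(*good, *poor)

# The fused variance is below 1.0, so even the poor reading helped a little.
print(fused_mean, fused_var)
```

The poor sensor barely moves the mean, but it still tightens the estimate, which is the point of the quote.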

Kalman Filter Book

These two chapters are the most useful:

- Chapter 2: https://github.com/rlabbe/Kalman-and-Bayesian-Filters-in-Python/blob/master/02-Discrete-Bayes.ipynb

- Chapter 4: https://github.com/rlabbe/Kalman-and-Bayesian-Filters-in-Python/blob/master/04-One-Dimensional-Kalman-Filters.ipynb

First understand Discrete Bayes, which helps you build a really solid foundation. Everything else builds on top of this. A Kalman filter just uses Gaussians.

Instead of the histograms used with a discrete Bayes filter, we now use Gaussians to model the data.
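To make the histogram version concrete, here is a minimal discrete Bayes filter sketch on a circular track of 5 cells. The function names, the 80% motion accuracy, and the likelihood values are all assumptions chosen for illustration, not taken from the book.

```python
def normalize(belief):
    total = sum(belief)
    return [b / total for b in belief]

def predict_discrete(belief, move, p_correct=0.8):
    """Shift the histogram by `move` cells; the rest of the probability
    is split between undershooting and overshooting by one cell."""
    n = len(belief)
    p_off = (1.0 - p_correct) / 2
    prior = [0.0] * n
    for i, b in enumerate(belief):
        prior[(i + move) % n] += b * p_correct
        prior[(i + move - 1) % n] += b * p_off
        prior[(i + move + 1) % n] += b * p_off
    return prior

def update_discrete(prior, likelihood):
    """Multiply the prior by the likelihood cell by cell, then renormalize."""
    return normalize([p * l for p, l in zip(prior, likelihood)])

belief = [0.2] * 5                       # start with no idea where we are
likelihood = [3.0, 1.0, 1.0, 1.0, 1.0]   # sensor says cell 0 is 3x as likely
belief = update_discrete(belief, likelihood)
belief = predict_discrete(belief, move=1)
print(belief)  # the peak has moved from cell 0 to cell 1 and spread out
```

The Kalman filter keeps the same predict/update structure but replaces the whole histogram with a mean and a variance.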

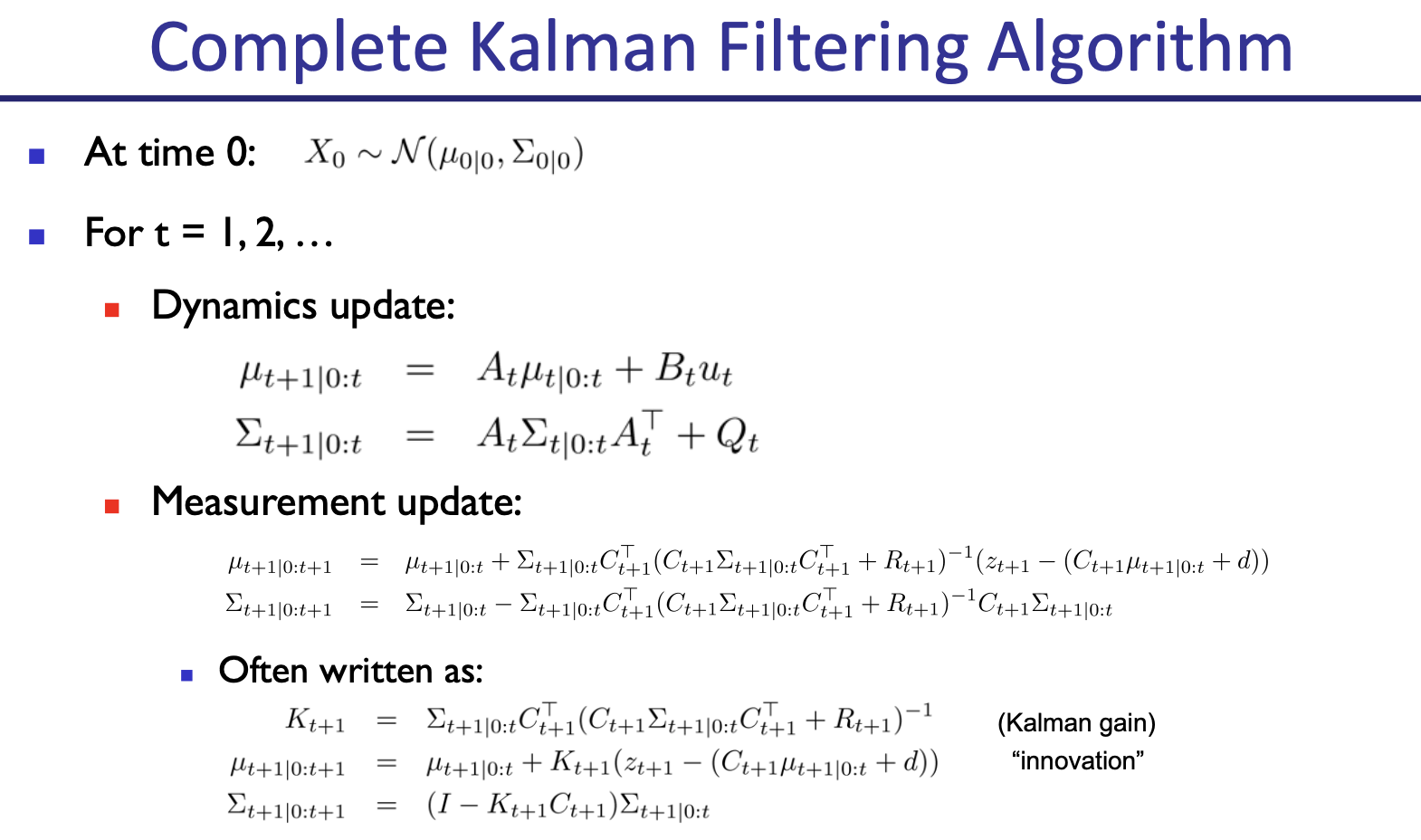

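We have two fundamental equations:

$$
\begin{aligned}
\bar{x}_\mathcal{N} &= x_\mathcal{N} \oplus f_{x_\mathcal{N}}(\bullet) && \text{(predict)} \\
x_\mathcal{N} &= \mathcal{L} \otimes \bar{x}_\mathcal{N} && \text{(update)}
\end{aligned}
$$

- $\oplus$ is Gaussian addition, $\otimes$ is Gaussian multiplication (see Gaussian Operator)

- The subscript $\mathcal{N}$ indicates that it is Gaussian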

The basic things

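    from collections import namedtuple

    # Assumed definition: a (mean, var) pair, which is what the code below expects.
    gaussian = namedtuple('Gaussian', ['mean', 'var'])

    def predict(pos, movement):
        # Gaussian addition: means add, variances add.
        return gaussian(pos.mean + movement.mean, pos.var + movement.var)

    def gaussian_multiply(g1, g2):
        # Product of two Gaussians (renormalized).
        mean = (g1.var * g2.mean + g2.var * g1.mean) / (g1.var + g2.var)
        variance = (g1.var * g2.var) / (g1.var + g2.var)
        return gaussian(mean, variance)

    def update(prior, likelihood):
        posterior = gaussian_multiply(likelihood, prior)
        return posterior

    # perform Kalman filter
    # zs (measurements), process_model (a gaussian) and sensor_var come from elsewhere
    predictions, xs = [], []
    x = gaussian(0., 20.**2)
    for z in zs:
        prior = predict(x, process_model)
        likelihood = gaussian(z, sensor_var)
        x = update(prior, likelihood)

        # save results
        predictions.append(prior.mean)
        xs.append(x.mean)

However, this notation is rarely used. Below, we will introduce conversion to conventional Kalman filter notation.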
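Before switching notation, here is one predict/update cycle worked through with made-up numbers, so the arithmetic is visible. Everything in it (the starting estimate, the movement, the measurement) is an assumption picked to keep the numbers round.

```python
from collections import namedtuple

gaussian = namedtuple('Gaussian', ['mean', 'var'])

x = gaussian(10.0, 4.0)               # current estimate
process_model = gaussian(1.0, 1.0)    # expected movement per step
z, sensor_var = 12.0, 5.0             # one measurement and its variance

# Predict: means add and variances add, so uncertainty grows.
prior = gaussian(x.mean + process_model.mean, x.var + process_model.var)

# Update: multiply the prior by the measurement likelihood, so uncertainty shrinks.
mean = (prior.var * z + sensor_var * prior.mean) / (prior.var + sensor_var)
var = (prior.var * sensor_var) / (prior.var + sensor_var)
x = gaussian(mean, var)

print(prior)  # Gaussian(mean=11.0, var=5.0)
print(x)      # Gaussian(mean=11.5, var=2.5)
```

Because the prior and the measurement happen to have equal variance here, the posterior mean lands exactly halfway between them.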

In the literature, this is the notation (an alternative but equivalent implementation):

    def update(prior, measurement):
        x, P = prior        # mean and variance of prior
        z, R = measurement  # mean and variance of measurement

        y = z - x           # residual
        K = P / (P + R)     # Kalman gain

        x = x + K*y         # posterior mean
        P = (1 - K) * P     # posterior variance
        return gaussian(x, P)

    def predict(posterior, movement):
        x, P = posterior  # mean and variance of posterior
        dx, Q = movement  # mean and variance of movement
        x = x + dx
        P = P + Q
        return gaussian(x, P)

The Kalman gain $K$ tells us how much we trust the measurement relative to the prediction: with $K$ near 1 the measurement dominates, and with $K$ near 0 the prediction does.
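To check that the two forms really are equivalent, the sketch below runs both on the same inputs and compares the results, while also showing how the gain reacts to the measurement variance. The names `update_product` and `update_gain` are made up for this comparison, and the numbers are arbitrary.

```python
from collections import namedtuple
import math

gaussian = namedtuple('Gaussian', ['mean', 'var'])

def update_product(prior, measurement):
    """Update written as a product of two Gaussians."""
    total = prior.var + measurement.var
    mean = (prior.var * measurement.mean + measurement.var * prior.mean) / total
    var = (prior.var * measurement.var) / total
    return gaussian(mean, var)

def update_gain(prior, measurement):
    """Update written with residual and Kalman gain; also returns the gain."""
    x, P = prior
    z, R = measurement
    K = P / (P + R)
    return gaussian(x + K * (z - x), (1 - K) * P), K

prior = gaussian(10.0, 4.0)
for R in (0.01, 4.0, 400.0):          # precise, comparable, and very noisy sensor
    measurement = gaussian(12.0, R)
    a = update_product(prior, measurement)
    b, K = update_gain(prior, measurement)
    assert math.isclose(a.mean, b.mean) and math.isclose(a.var, b.var)
    print(f"R={R}: K={K:.3f}, posterior mean={b.mean:.3f}")
```

As the sensor gets noisier, `K` falls from almost 1 towards 0 and the posterior mean stays closer to the prior.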

Predict

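$$
\begin{aligned}
\bar{\mathbf{x}} &= \mathbf{F}\mathbf{x} + \mathbf{B}\mathbf{u} \\
\bar{\mathbf{P}} &= \mathbf{F}\mathbf{P}\mathbf{F}^\mathsf{T} + \mathbf{Q}
\end{aligned}
$$

- $\mathbf{x}, \mathbf{P}$ are the state mean and covariance

  - They correspond to `x` and `P`, the scalar mean and variance, in the univariate filter

- $\mathbf{F}$ is the state transition function

  - It plays the role of the process model ($f_x$) from the univariate filter

  - When multiplied by $\mathbf{x}$ it computes the prior

- $\mathbf{Q}$ is the process covariance

  - It corresponds to the movement variance (`Q`) in the univariate filter

- $\mathbf{B}$ and $\mathbf{u}$ model control inputs to the system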
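A minimal NumPy sketch of the predict step, assuming the standard form: the prior mean is `F @ x` (plus `B @ u` when there is a control input) and the prior covariance is `F @ P @ F.T + Q`. The constant-velocity `F` and all numeric values are assumptions for illustration.

```python
import numpy as np

def predict(x, P, F, Q, B=None, u=None):
    """Project the state mean x and covariance P one step ahead."""
    x_prior = F @ x
    if B is not None and u is not None:
        x_prior = x_prior + B @ u      # optional control input
    P_prior = F @ P @ F.T + Q
    return x_prior, P_prior

dt = 1.0
F = np.array([[1.0, dt],
              [0.0, 1.0]])             # position += velocity * dt
Q = 0.01 * np.eye(2)                   # made-up process noise
x = np.array([0.0, 1.0])               # position 0, velocity 1
P = np.diag([4.0, 1.0])

x_prior, P_prior = predict(x, P, F, Q)
print(x_prior)
print(P_prior)
```

Note how the position variance grows by more than `Q` alone: the uncertainty in velocity leaks into position through `F`.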

Addressing discrepancies

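So I think in the univariate case we didn't introduce $\mathbf{u}$, a possible control input; the predict step was just $\bar{x} = x + dx$. The multivariate case has $\bar{\mathbf{x}} = \mathbf{F}\mathbf{x}$, which is fine, but it also introduces the control term $\mathbf{B}\mathbf{u}$. I was thinking the control input was $dx$, but no: there are two possible sources of transition, the system's own dynamics ($\mathbf{F}\mathbf{x}$) and the control input ($\mathbf{B}\mathbf{u}$).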

Update

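$$
\begin{aligned}
\mathbf{y} &= \mathbf{z} - \mathbf{H}\bar{\mathbf{x}} \\
\mathbf{K} &= \bar{\mathbf{P}}\mathbf{H}^\mathsf{T}(\mathbf{H}\bar{\mathbf{P}}\mathbf{H}^\mathsf{T} + \mathbf{R})^{-1} \\
\mathbf{x} &= \bar{\mathbf{x}} + \mathbf{K}\mathbf{y} \\
\mathbf{P} &= (\mathbf{I} - \mathbf{K}\mathbf{H})\bar{\mathbf{P}}
\end{aligned}
$$

- $\mathbf{H}$ is the measurement function

- $\mathbf{z}, \mathbf{R}$ are the measurement mean and noise covariance

  - They correspond to `z` and `R` in the univariate filter

- $\mathbf{y}$ and $\mathbf{K}$ are the residual and Kalman gain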
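A matching NumPy sketch of the update step, assuming the standard linear form. The intermediate `S` (the covariance of the residual) is a name introduced here for readability, and the numbers are made up so the result can be checked by hand.

```python
import numpy as np

def update(x_prior, P_prior, z, H, R):
    """Correct the prior with a measurement z."""
    y = z - H @ x_prior                          # residual
    S = H @ P_prior @ H.T + R                    # covariance of the residual
    K = P_prior @ H.T @ np.linalg.inv(S)         # Kalman gain
    x = x_prior + K @ y                          # posterior mean
    P = (np.eye(len(x_prior)) - K @ H) @ P_prior # posterior covariance
    return x, P, y, K

x_prior = np.array([1.0, 1.0])                   # position, velocity
P_prior = np.array([[5.0, 1.0],
                    [1.0, 1.0]])
H = np.array([[1.0, 0.0]])                       # the sensor sees position only
R = np.array([[5.0]])
z = np.array([2.0])

x, P, y, K = update(x_prior, P_prior, z, H, R)
print(x)   # velocity is corrected too, through the off-diagonal covariance
print(P)
```

With a one-dimensional state and `H = 1`, the gain line reduces to the scalar `K = P / (P + R)` from the univariate filter.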

Your job as a designer

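Your job as a designer will be to design the state $(\mathbf{x}, \mathbf{P})$, the process $(\mathbf{F}, \mathbf{Q})$, the measurement $(\mathbf{z}, \mathbf{R})$, and the measurement function $\mathbf{H}$. If the system has control inputs, such as a robot, you will also design $\mathbf{B}$ and $\mathbf{u}$.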
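As an illustration of those design choices, here is a sketch of a constant-velocity tracker that only measures position. Every value below (initial guess, noise levels, synthetic data, random seed) is an assumption made for the example, and there is no control input, so the control term is left out.

```python
import numpy as np

dt = 1.0

# State (x, P): position and velocity, with a deliberately vague initial guess.
x = np.array([0.0, 0.0])
P = np.diag([500.0, 500.0])

# Process (F, Q): constant-velocity motion plus a small made-up process noise.
F = np.array([[1.0, dt],
              [0.0, 1.0]])
Q = 1e-4 * np.eye(2)

# Measurement function H and noise R: the sensor reports position only.
H = np.array([[1.0, 0.0]])
R = np.array([[4.0]])

# Synthetic measurements: true velocity 1.0, sensor noise std 2.0.
rng = np.random.default_rng(0)
true_positions = np.arange(1, 51) * dt
zs = true_positions + rng.normal(0.0, 2.0, size=true_positions.shape)

for z in zs:
    # Predict.
    x = F @ x
    P = F @ P @ F.T + Q
    # Update.
    y = np.array([z]) - H @ x
    K = P @ H.T @ np.linalg.inv(H @ P @ H.T + R)
    x = x + K @ y
    P = (np.eye(2) - K @ H) @ P

print("estimated position and velocity:", x)
print("position variance:", P[0, 0])
```

Velocity is never measured directly; the filter infers it because `F` ties it to how the position changes between steps.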

CS287

I was learning about Kalman filters back in Grade 9 when I was trying to build a self-balancing rocket. I watched quite a few lectures.

They used those things in rockets! Actually, Kalman filters are used everywhere: self-driving cars, drones, everything I am interested in uses the Kalman filter.

From CS287, a Kalman filter is just a special case of the Bayes filter, where the dynamics and sensor models are linear Gaussian.
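Written out, "linear Gaussian" means something like the following. The symbols here ($A$, $B$, $C$, $\varepsilon_t$, $\delta_t$) are a common textbook choice and an assumption on my part, not necessarily the ones used in the CS287 slides.

$$
\begin{aligned}
x_t &= A x_{t-1} + B u_t + \varepsilon_t, & \varepsilon_t &\sim \mathcal{N}(0, Q) \\
z_t &= C x_t + \delta_t, & \delta_t &\sim \mathcal{N}(0, R)
\end{aligned}
$$

Because a linear function of a Gaussian is still Gaussian, and the product of two Gaussians is Gaussian up to normalization, the belief stays Gaussian after every predict and update, so tracking a mean and a covariance is enough.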

Kalman Filter from Visual SLAM book

Notation also used in Visual SLAM.

- $u$ is the motion data

- $z$ is the observation data

- $x$ is the state

- $y$ are the landmarks
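As far as I recall, the book ties these together with a motion equation and an observation equation of roughly this shape. The noise terms $w_k$ and $v_{k,j}$ and the index convention are assumptions here and should be checked against the book.

$$
\begin{aligned}
x_k &= f(x_{k-1}, u_k, w_k) \\
z_{k,j} &= h(y_j, x_k, v_{k,j})
\end{aligned}
$$

Here $k$ indexes time and $j$ indexes landmarks. When $f$ and $h$ are linear and the noise is Gaussian, this reduces to the Kalman filter setting above.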